Dynamic Mesh Generation

In the spring semester, I created a rough prototype of a drawing/rhythm game that drew lines on a canvas by following DDR-style arrows that scrolled on the screen. For my first Unity exercise, I prototyped an algorithm to dynamically generate meshes given a set of points on a canvas. I plan to use this algorithm in a feature that I will soon implement in my game.

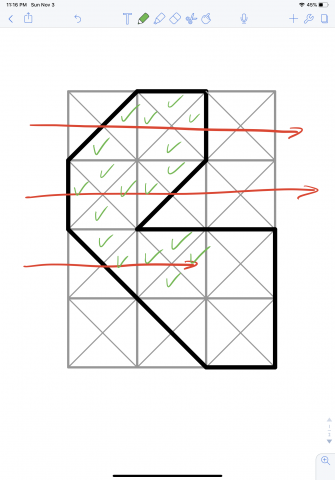

I make a couple of assumptions to simplify this implementation. First, I assume that all vertices are points on a grid. Second, I assume that all lines are either axis-aligned or diagonal. These assumptions mean that I can break up each square in the grid into four sections. By splitting it up this way, I can scan each row from left to right. I check whether my scan intersects a left edge, a forward diagonal edge, or a back diagonal edge. I then use this information to determine which vertices and which triangles to include in the mesh.
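Here is a minimal sketch of that row scan in Python rather than Unity C#. It is my reading of the idea, not the prototype's actual code. The function name `filled_sections`, the edge representation (pairs of grid points), and the section names are all made up for illustration. It assumes y increases upward, that the outline is closed, and that the four sections of a cell are the triangles cut by its two diagonals. The scan keeps a running inside/outside flag and flips it whenever it crosses a left edge or a diagonal.

```python
def filled_sections(edges, width, height):
    """Return (x, y, section) for each quarter-cell triangle inside the outline.

    edges: iterable of ((x0, y0), (x1, y1)) unit segments between grid points.
    Assumes a closed outline that fits inside a width x height grid of cells.
    """
    edge_set = {frozenset(e) for e in edges}

    def has(a, b):
        return frozenset((a, b)) in edge_set

    result = []
    for y in range(height):
        inside = False  # parity of outline crossings so far in this row
        for x in range(width):
            if has((x, y), (x, y + 1)):          # left edge of this cell
                inside = not inside
            fwd = has((x, y), (x + 1, y + 1))    # forward diagonal "/"
            back = has((x, y + 1), (x + 1, y))   # back diagonal "\"
            sections = {
                "left": inside,
                "bottom": inside ^ fwd,          # below both diagonals
                "top": inside ^ back,            # above both diagonals
                "right": inside ^ fwd ^ back,
            }
            result.extend((x, y, name) for name, on in sections.items() if on)
            inside = sections["right"]           # carry parity into the next cell
    return result


# A right triangle with corners (0,0), (1,0), (1,1).
triangle = [((0, 0), (1, 0)), ((1, 0), (1, 1)), ((1, 1), (0, 0))]
print(filled_sections(triangle, width=2, height=1))
```

For the triangle above, only the bottom and right sections of cell (0, 0) are kept, which is the half of the square under the forward diagonal.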

A pitfall of this algorithm is double-counting vertices, but that can be addressed by maintaining a data structure that tracks which vertices have already been included.
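One way to do that tracking, sketched in Python: keep a dictionary from vertex position to its index in the vertex list, so a shared corner is only added once. The name `build_mesh` and the input format (triangles as triples of coordinate tuples) are assumptions for this example. The output mirrors the general shape of a mesh with one vertex list and one flat index list.

```python
def build_mesh(triangles):
    """Deduplicate vertices shared between triangles.

    triangles: list of 3-tuples of (x, y) points.
    Returns (vertices, indices), where indices has three entries per triangle.
    """
    vertices = []   # unique vertex positions, in first-seen order
    index_of = {}   # position -> index into `vertices`
    indices = []    # flat list of vertex indices

    for tri in triangles:
        for point in tri:
            if point not in index_of:
                index_of[point] = len(vertices)
                vertices.append(point)
            indices.append(index_of[point])
    return vertices, indices


# Two quarter-cell triangles that share the edge from (1, 0) to the cell center.
tris = [
    ((0, 0), (1, 0), (0.5, 0.5)),
    ((1, 0), (1, 1), (0.5, 0.5)),
]
vertices, indices = build_mesh(tris)
print(len(vertices), indices)
```

Six triangle corners go in, but only four distinct vertices come out, because the shared corners are looked up instead of appended again.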

Shaders

My second Unity exercise is also a prototype of a feature I want to include in my drawing game. It's also an opportunity to work with shaders in Unity, which I have never done before. The visual target I want to achieve would be something like this:

I have no idea how to do this, so the first thing I did was scour the internet for pre-existing examples. My goal for a first prototype is to just render a splatter effect using Unity's shaders. This thread seems promising, and so does this repository. I am still illiterate when it comes to the language of shaders, so I asked lsh for an algorithm off the top of their head. The basic algorithm seems simple: sample a noise texture over time to create the splatter effect. lsh also suggests additively blending two texture samples. lsh was also powerful enough to spit out some pseudocode in the span of 5 minutes:

    p = uv
    col = (0.5, 1.0, 0.3) // whatever
    opacity = 1.0 // scale down with time
    radius = texture(noisetex, p)
    splat = length(p) - radius
    output = splat * col * opacity

Thank you lsh. You are too powerful.
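To convince myself I understand the pseudocode, here is a rough CPU-side version in plain Python that evaluates one pixel at a time, the way a fragment shader would. Several things here are my own assumptions and not part of lsh's pseudocode: I center the coordinates so the splat sits in the middle of the texture, I remap the noise sample into a radius range (`base_radius` and `wobble` are made-up parameters), and I turn the signed distance into a hard 0/1 mask before multiplying by the color. The random "noise texture" is just a stand-in for a real one.

```python
import math
import random


def make_noise(size, seed=0):
    """Stand-in for a noise texture: a size x size grid of values in [0, 1)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def sample(tex, u, v):
    """Nearest-neighbour texture lookup with clamped coordinates."""
    size = len(tex)
    row = min(size - 1, max(0, int(v * size)))
    col = min(size - 1, max(0, int(u * size)))
    return tex[row][col]


def splat_pixel(u, v, noise, color=(0.5, 1.0, 0.3), opacity=1.0,
                base_radius=0.25, wobble=0.15):
    """Color of the pixel at uv = (u, v), with u and v in [0, 1]."""
    px, py = u - 0.5, v - 0.5                           # assumption: centered splat
    radius = base_radius + wobble * sample(noise, u, v)  # assumption: remapped noise
    dist = math.hypot(px, py) - radius                  # negative inside the splat
    mask = 1.0 if dist < 0.0 else 0.0                   # assumption: hard edge
    return tuple(c * mask * opacity for c in color)


noise = make_noise(16)
print(splat_pixel(0.5, 0.5, noise))  # center of the texture
print(splat_pixel(0.0, 0.0, noise))  # corner of the texture
```

The center pixel always lands inside the splat and the corner always lands outside it. The pixels in between depend on the noise, which is what makes the edge ragged.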

After additional research, I decided that the Unity tool I needed was the Custom Render Texture. To be honest, I'm still unclear about the distinction between using a render texture and straight-up using a shader, but I was able to find some useful examples here and here. (Addendum: After talking to my adviser, it's clear that the custom render texture outputs a texture, whereas the direct shader outputs to the camera.)

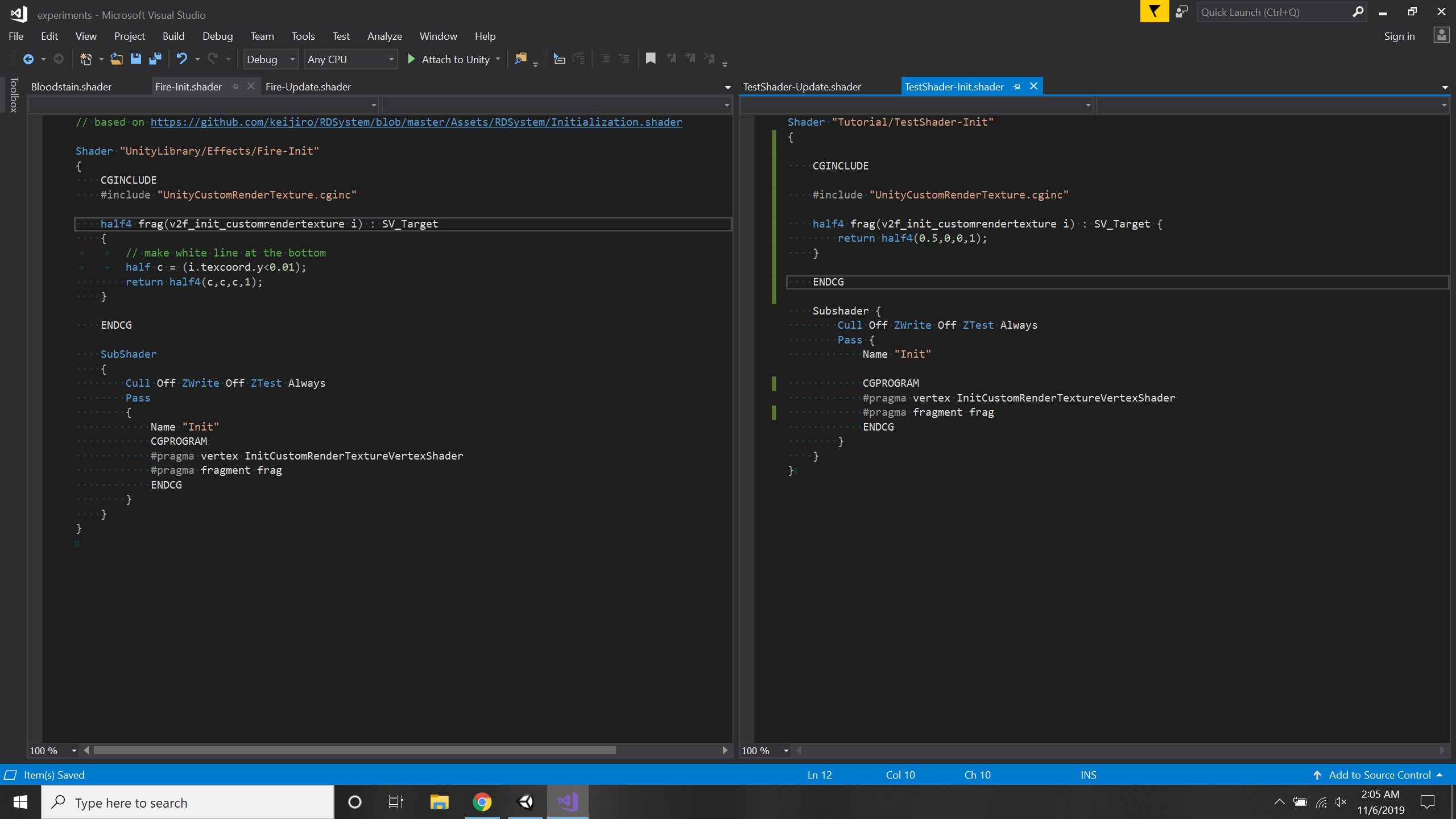

Step 1: Figure out how to write a toy shader using this very useful tutorial.

Step 2: Get this shader to show up in the scene. This means creating a material using the shader and assigning the material to a game object.

I forgot to take a screenshot, but the cube I placed in the scene turns a solid dark red color.

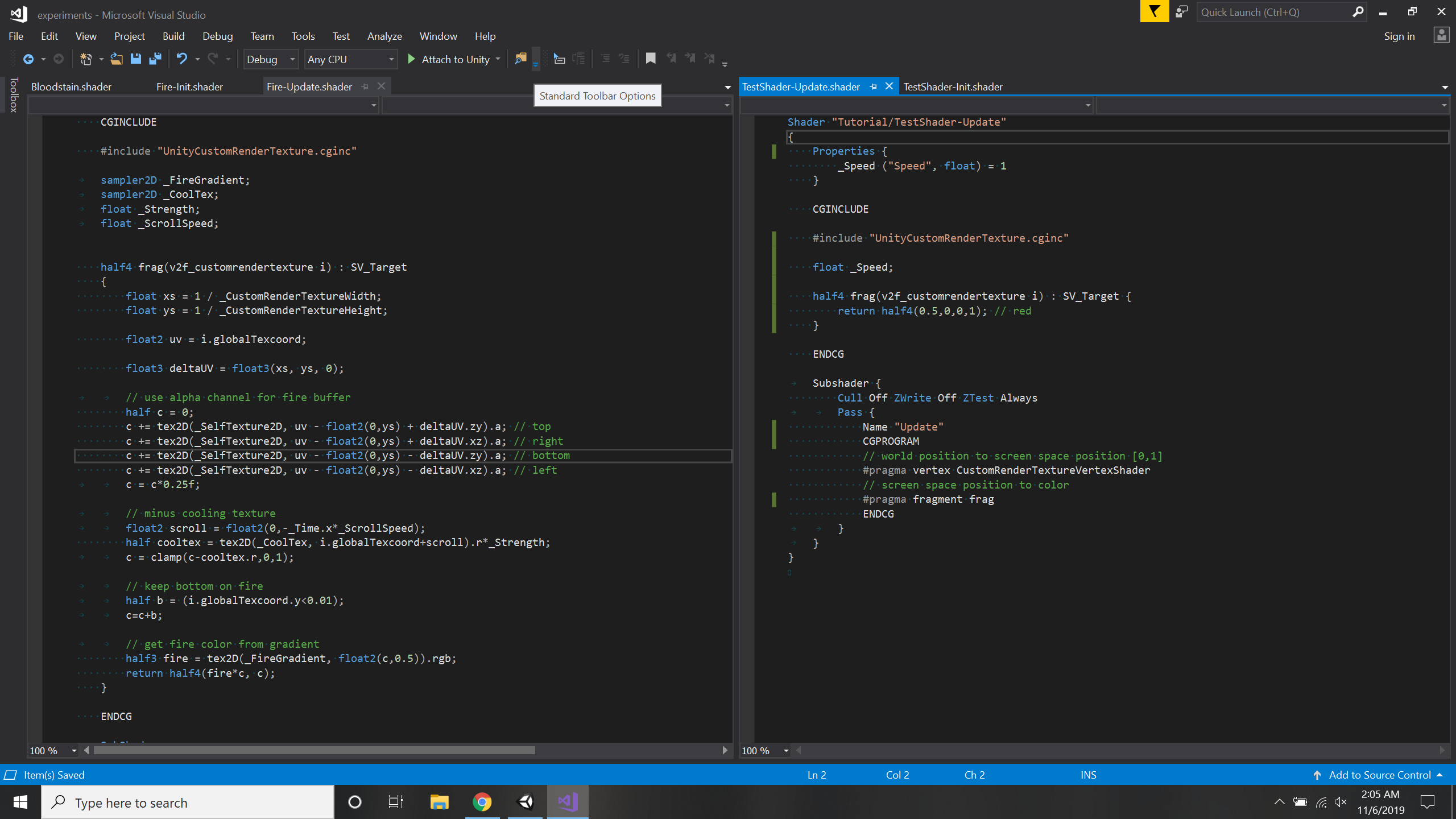

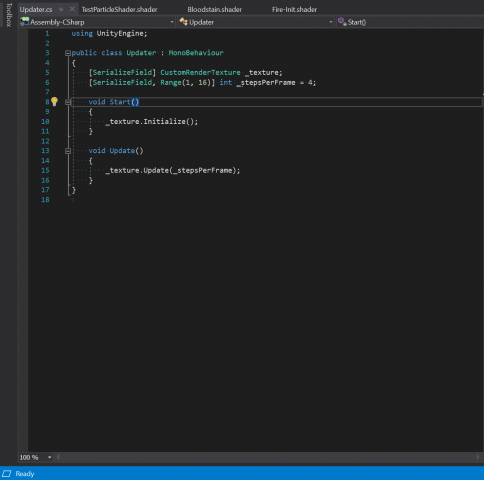

Step 3: Change this from a material to a custom render texture. I based my setup on the fire effect render texture I linked to earlier. I create two shaders for this render texture, one for initialization and one for updates. It's important that I make a dynamic texture for this experiment because the intention is to create a visual that changes over time.

I then assign these two shaders to the corresponding fields in the custom render texture.

But this doesn't quite work...

After thirty minutes of frustration, I look at the original repository that the fire effect is based on. I realize that that experiment uses an update script that manually updates the texture.

I assign this update script to a game object and assign the custom render texture I created to the script's texture field. Now it works!

Step 4: Meet with my adviser and learn everything I was doing wrong.

After a brief meeting with my adviser, I learned the distinction between two setups: assigning a shader to a custom render texture, which is assigned to a material, which is assigned to an object, versus assigning a shader directly to a material on an object. I also learned how to change the update method of the custom render texture, as well as how to read the texture from the previous frame in order to render the current frame. The result of this meeting is a shader that progressively blurs the start image at each timestep:

I'm very sorry to my adviser for knowing nothing.
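For my own notes, here is the init/update idea as a small Python sketch, not the actual shader. `init_texture` stands in for the initialization shader and `update_texture` for the update shader. The important part is that each update reads only from the previous frame's texture and writes into a fresh one. The 3x3 box blur, the clamped edges, and the single bright starting texel are my choices for the example.

```python
def init_texture(size):
    """Initialization pass: black texture with one bright texel in the center."""
    tex = [[0.0] * size for _ in range(size)]
    tex[size // 2][size // 2] = 1.0
    return tex


def update_texture(prev):
    """Update pass: 3x3 box blur that reads only from the previous frame."""
    size = len(prev)
    nxt = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    sy = min(size - 1, max(0, y + dy))  # clamp at the border
                    sx = min(size - 1, max(0, x + dx))
                    total += prev[sy][sx]
            nxt[y][x] = total / 9.0
    return nxt


def run(frames, size=5):
    """Initialize once, then apply the update pass once per frame."""
    tex = init_texture(size)
    for _ in range(frames):
        tex = update_texture(tex)  # the new frame becomes next frame's input
    return tex


for frames in (0, 1, 3):
    print(frames, round(run(frames)[2][2], 4))
```

The center value starts at 1.0 and shrinks as the bright spot spreads out over more frames, which is the same progressive blur behavior, just on a 5x5 grid.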

Step 5: Actually make the watercolor shader... Will I achieve this? Probably not.