AR Golden Experience Requiem Stand from JoJo's Bizarre Adventure. Collaborated with sansal.

In JoJo's Bizarre Adventure, Stands are a "visual manifestation of life energy" created by the Stand user. A Stand hovers near its user and possesses special (typically fighting) abilities. For this project, we used pose estimation so that the Stand appears at the person's left shoulder. We used a template and package from Fritz.ai.

The code uses pose estimation to estimate the head, shoulders, and hands. If all the parts are within the camera view, the Stand model moves towards the left shoulder.
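As a rough illustration of that logic, here is a minimal sketch in Python. It is not the project's actual code and does not use the Fritz AI API; the keypoint names, the `update_stand` function, and the `max_step` parameter are all hypothetical. It assumes each detected body part arrives as a 3D position, or `None` when the part is not in view.

```python
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical keypoint names; the real pose model may label parts differently.
REQUIRED_PARTS = ("head", "left_shoulder", "right_shoulder", "left_hand", "right_hand")


def all_parts_visible(keypoints: Dict[str, Optional[Vec3]]) -> bool:
    """Return True only if every required part has a detected position."""
    return all(keypoints.get(part) is not None for part in REQUIRED_PARTS)


def move_towards(current: Vec3, target: Vec3, max_step: float) -> Vec3:
    """Move from current towards target by at most max_step units."""
    dx, dy, dz = (t - c for c, t in zip(current, target))
    dist = (dx * dx + dy * dy + dz * dz) ** 0.5
    if dist <= max_step:
        # Close enough: snap to the target so we never overshoot.
        return target
    scale = max_step / dist
    return (current[0] + dx * scale, current[1] + dy * scale, current[2] + dz * scale)


def update_stand(stand_pos: Vec3, keypoints: Dict[str, Optional[Vec3]],
                 max_step: float = 0.1) -> Vec3:
    """One frame of the update: step towards the left shoulder if all parts are seen."""
    if not all_parts_visible(keypoints):
        return stand_pos  # leave the Stand where it is
    return move_towards(stand_pos, keypoints["left_shoulder"], max_step)
```

Calling `update_stand` once per camera frame would move the model a little closer to the shoulder each time, and leave it in place whenever any of the five parts drops out of view.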

This is still a work in progress; we ran into a lot of complications with the pose estimation and the rigging of the model. Initially, we wanted the Stand to appear when the person strikes a certain pose (JoJo's Bizarre Adventure is famous for its iconic poses). However, the pose estimation was inaccurate and very slow, which made it impossible to train any models. In addition, Fritz AI had issues with depth, so we could not control the size of the 3D model (it would appear either really close or far away). We also planned to have the Stand model play animations, but ran into rigging issues.

Some adjustments to be made:

- rig and animate the model

- add text effects (seen in the show)

- add sound effects (from the show)

- make the Stand fade in

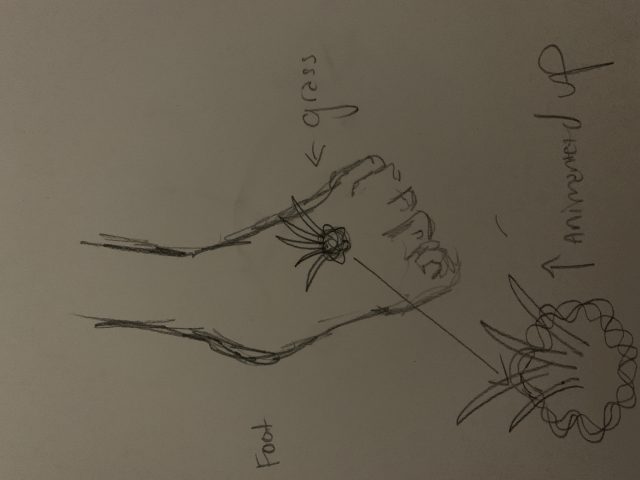

Some work-in-progress photos

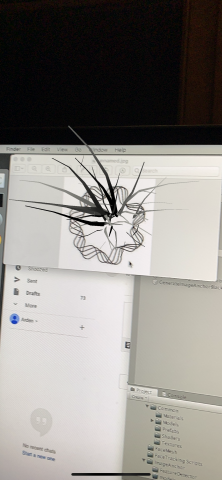

3D model made in Blender

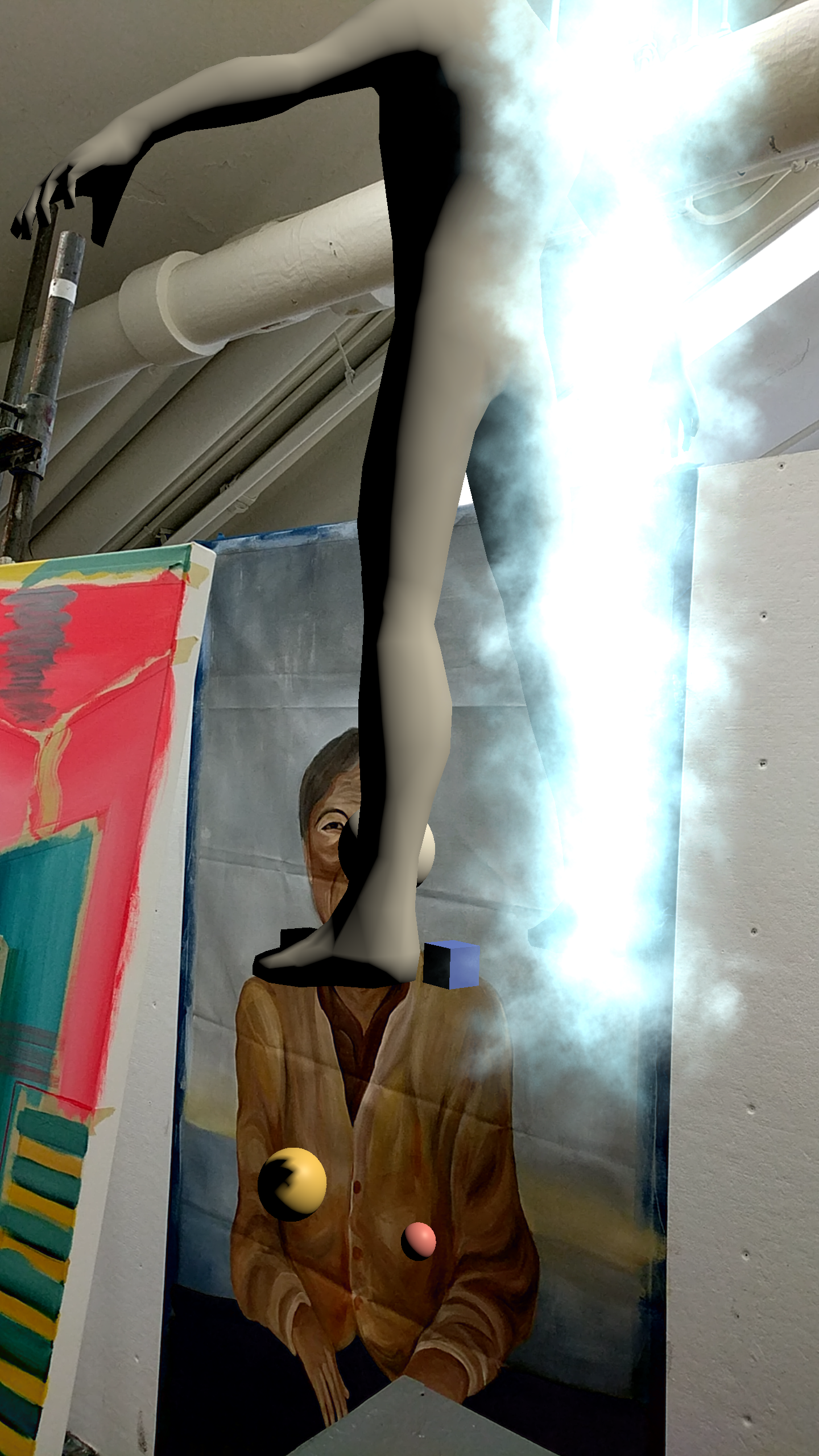

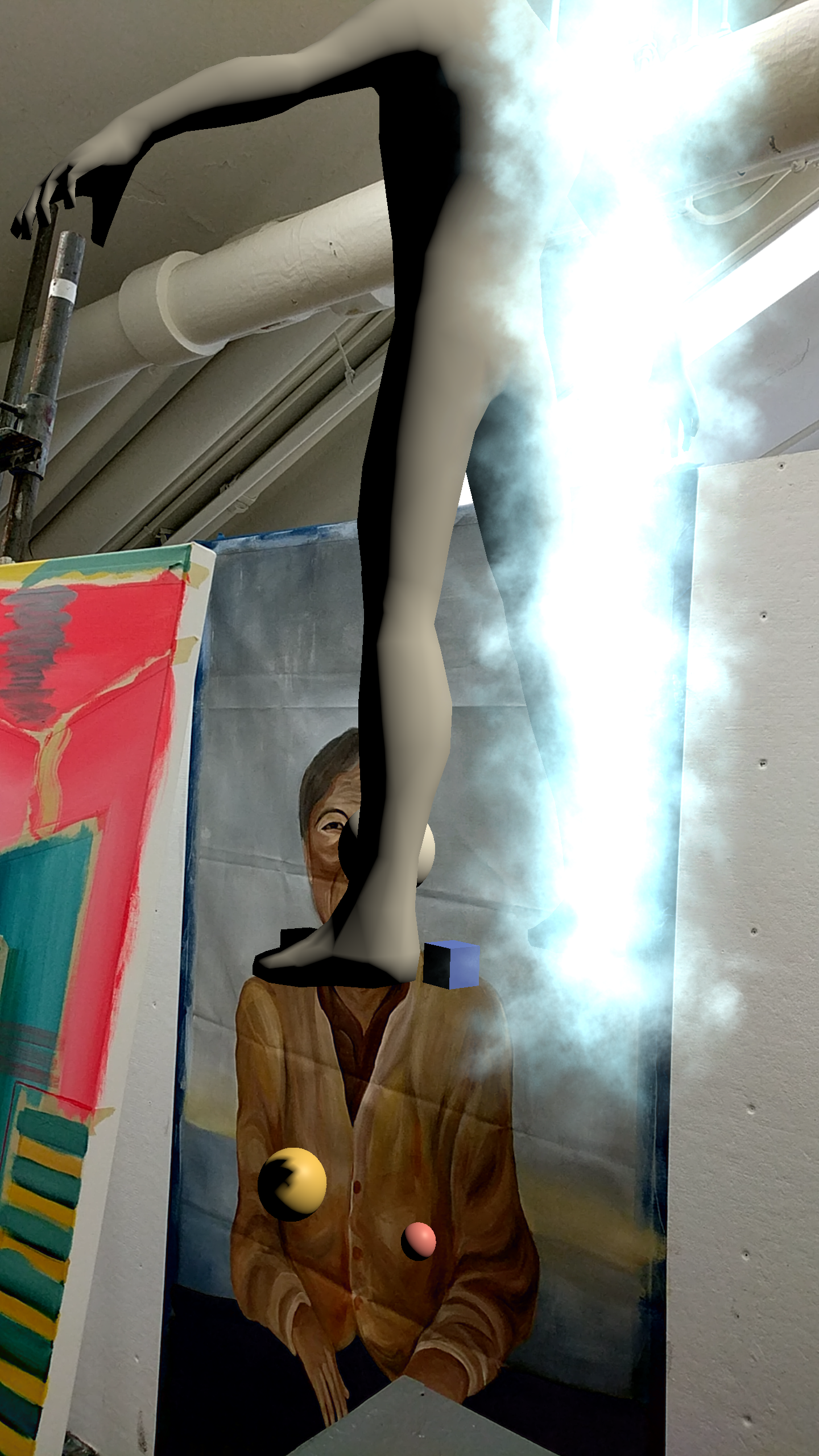

Fritz AI is sometimes able to detect the head (white sphere), shoulders (cubes), and hands (colored spheres). The 3D model moves to the left shoulder.

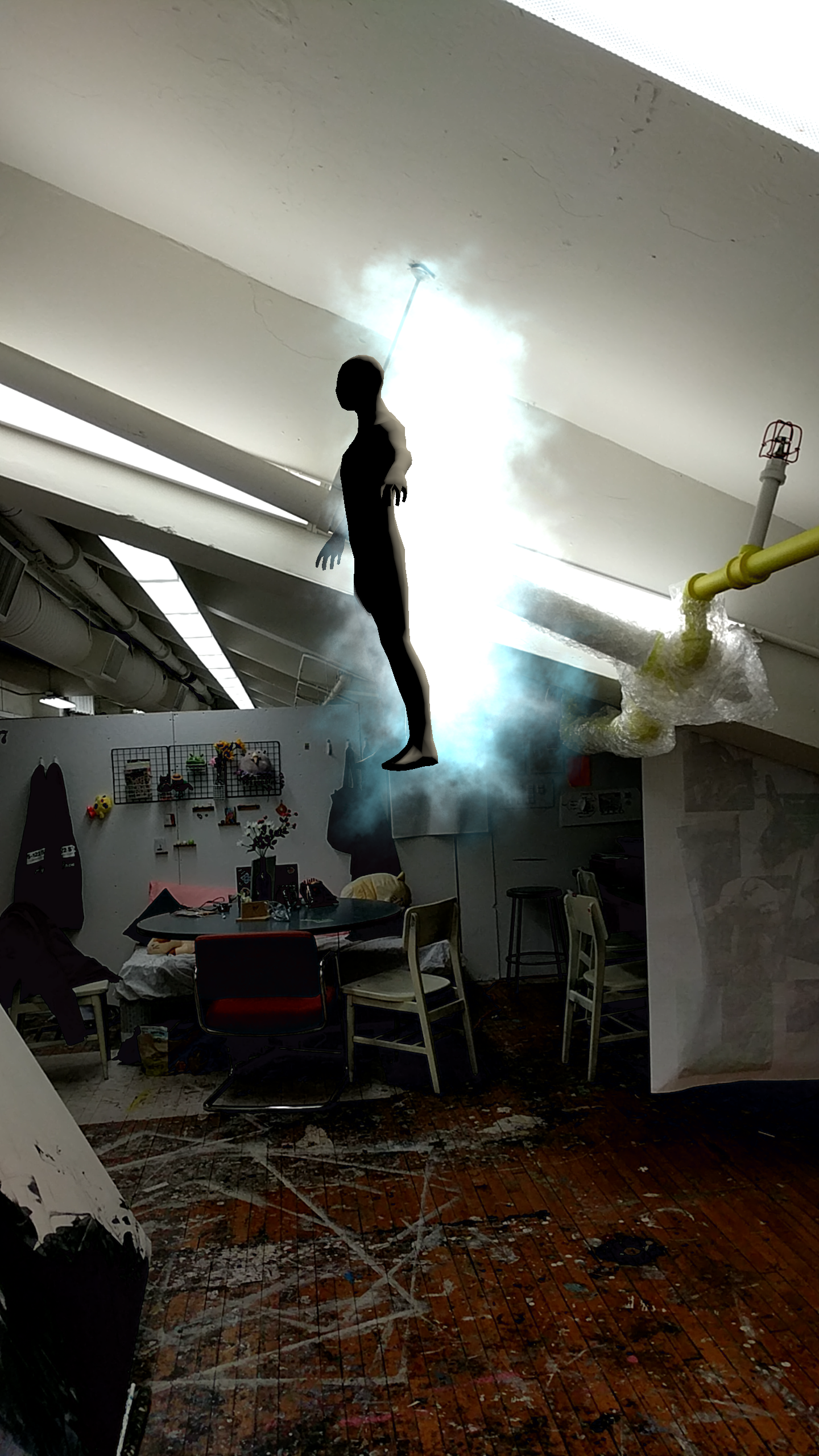

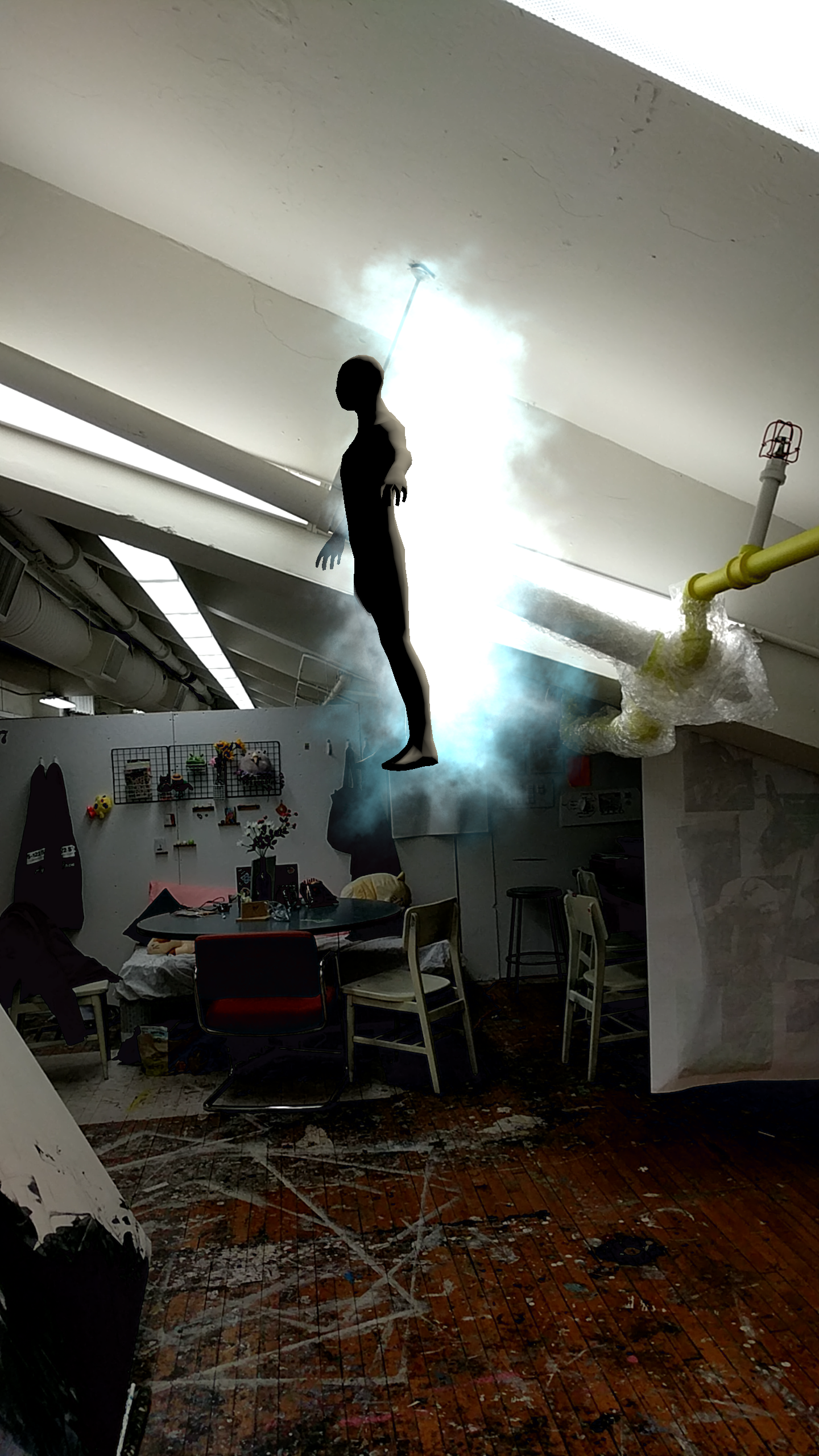

We were not able to instantiate the 3D model at the shoulder point, so the model just appears at the origin point.

Fritz AI works only half of the time. The head, shoulders, and hands are way off.

This is an AR app that lets you place a wooden toy train set on flat vertical surfaces.
