Untitled Duck AR, by Meijie and Vicky, is an augmented reality duck that appears on your tongue when you open your mouth and yells along with you when you stick your tongue out.

https://www.youtube.com/watch?v=xt1FgOcHXko&feature=youtu.be

Process

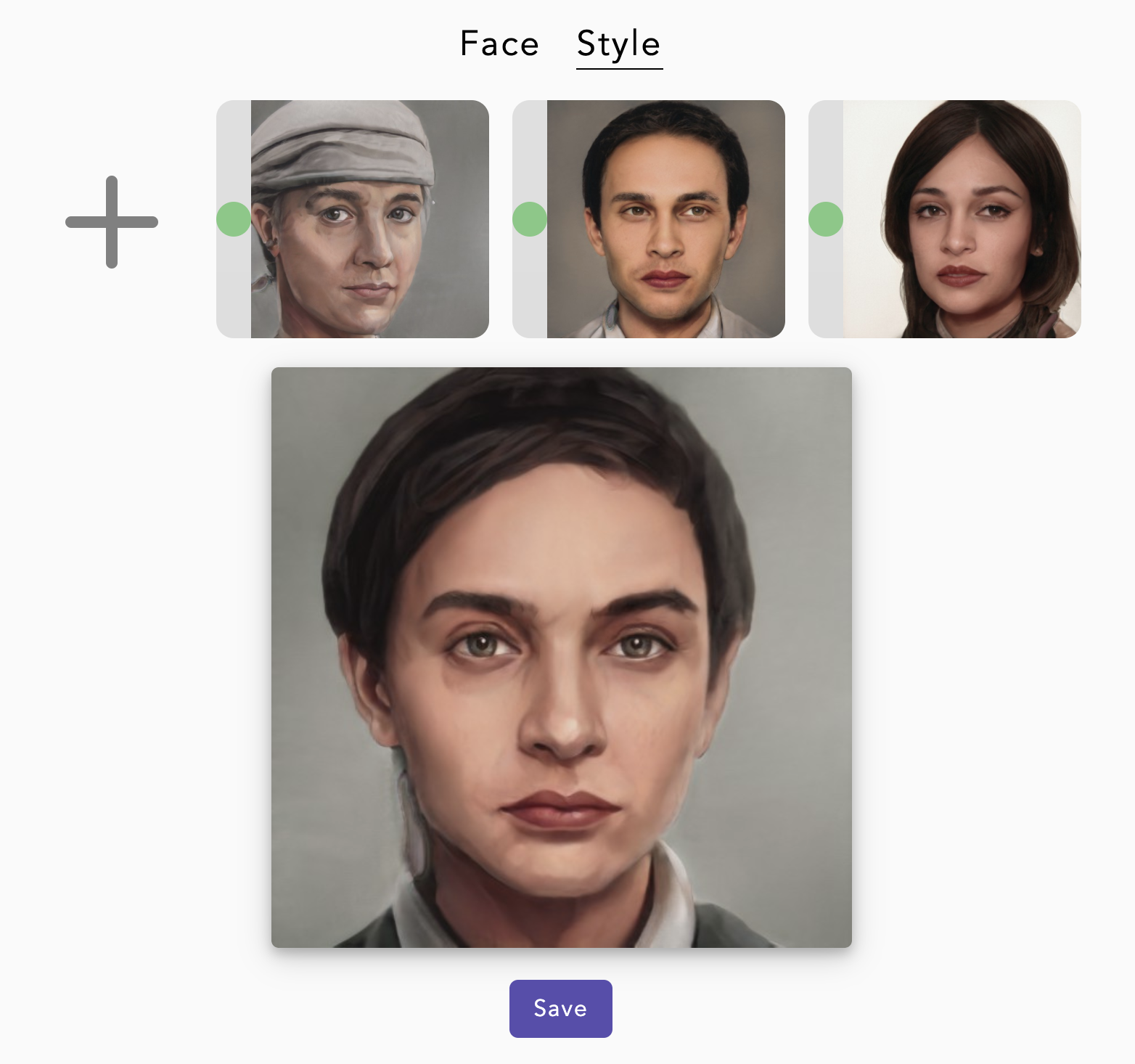

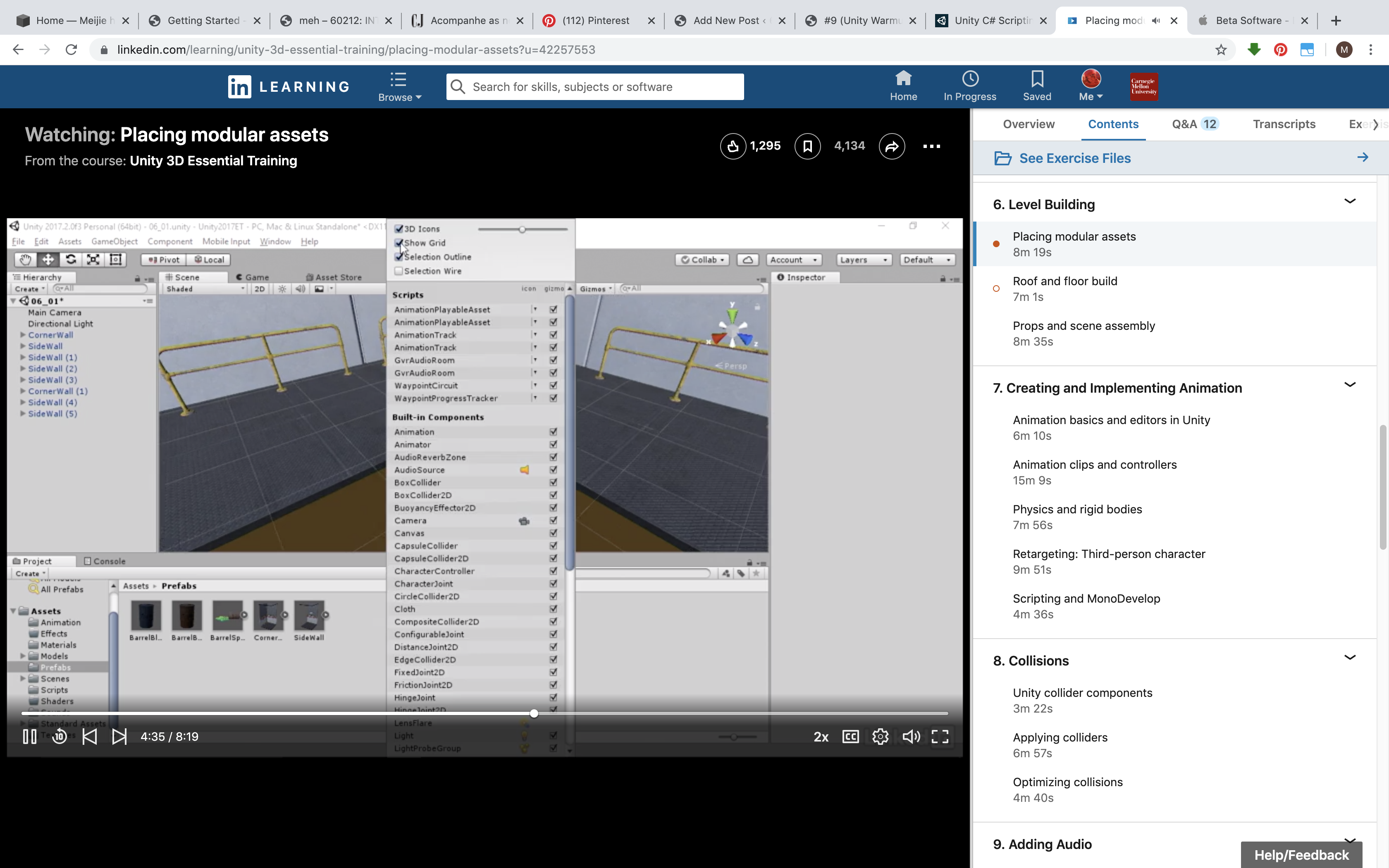

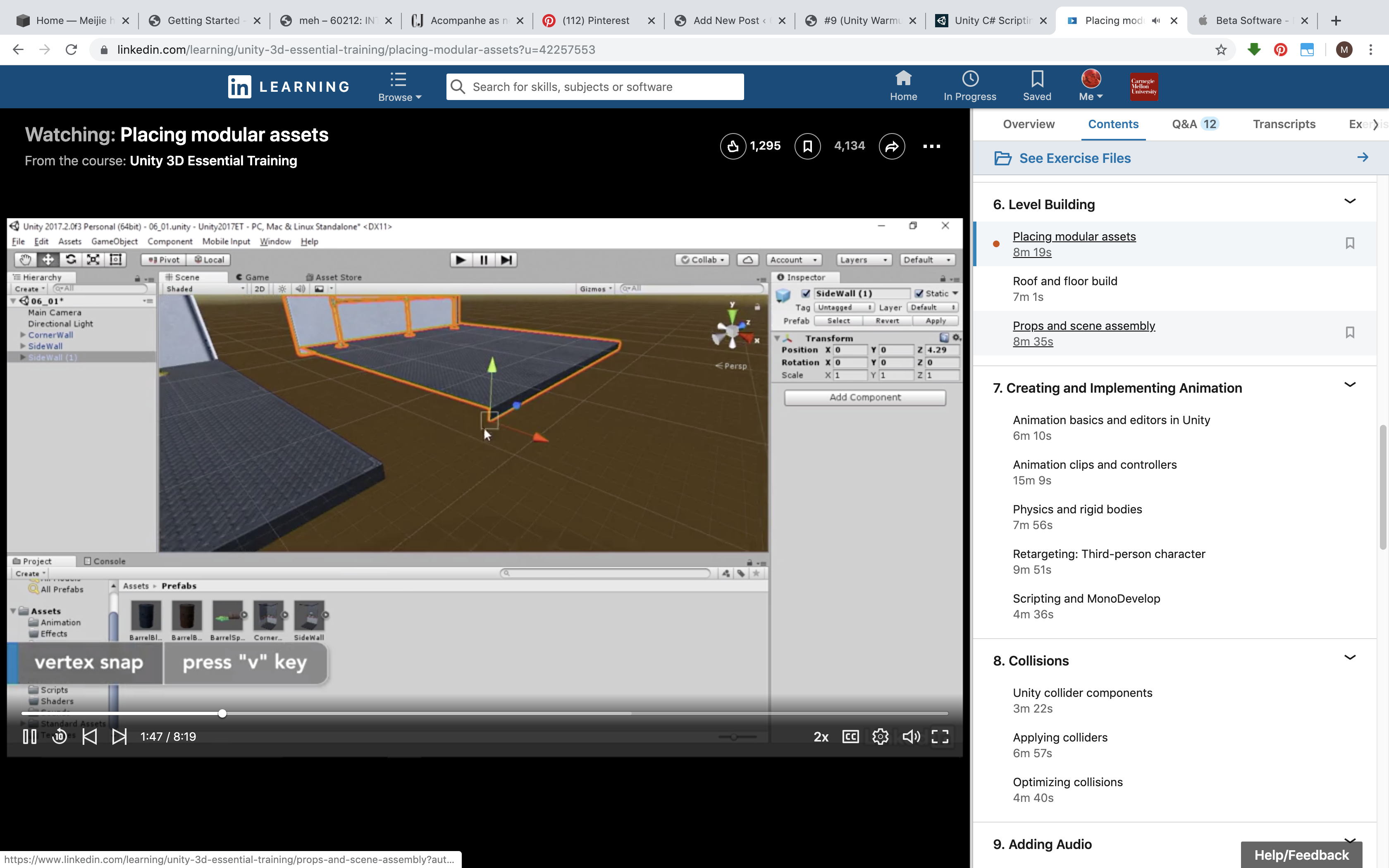

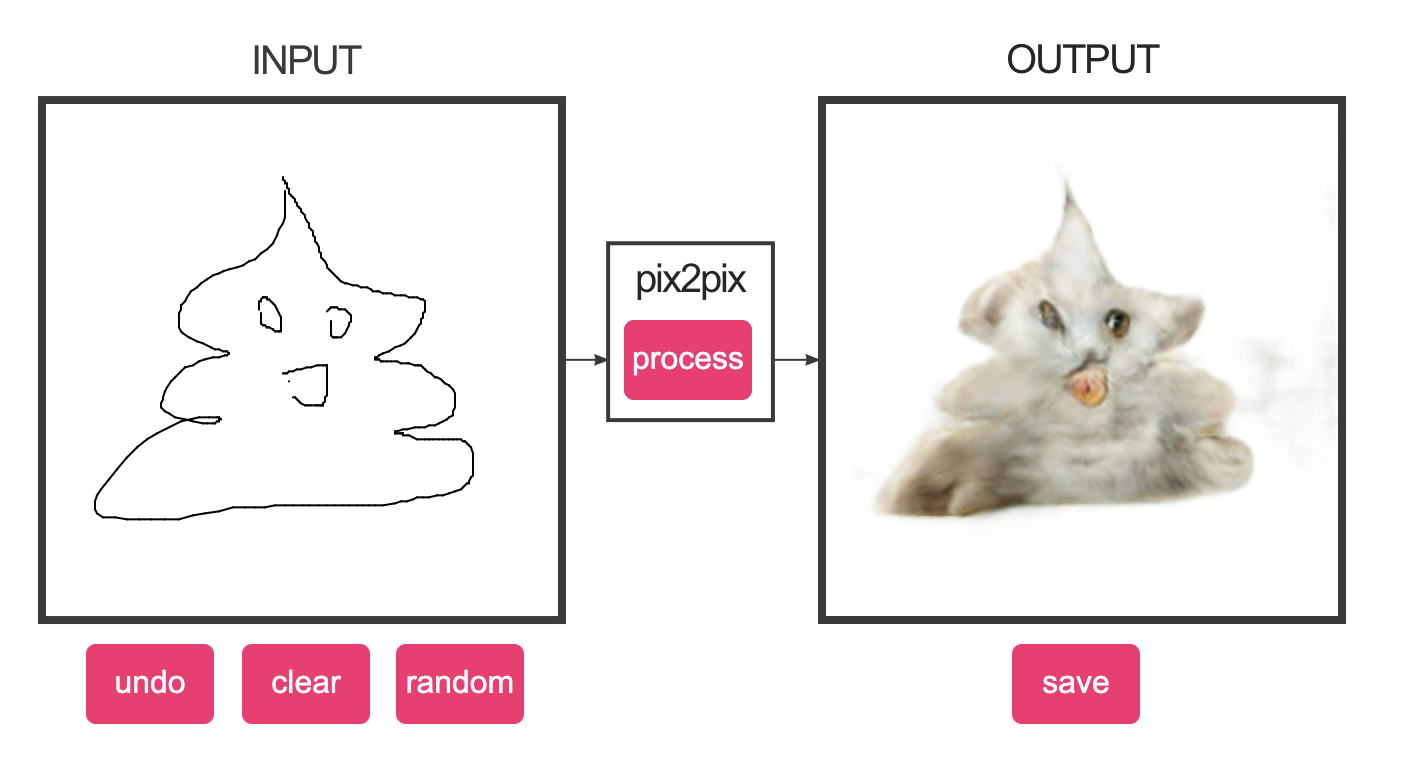

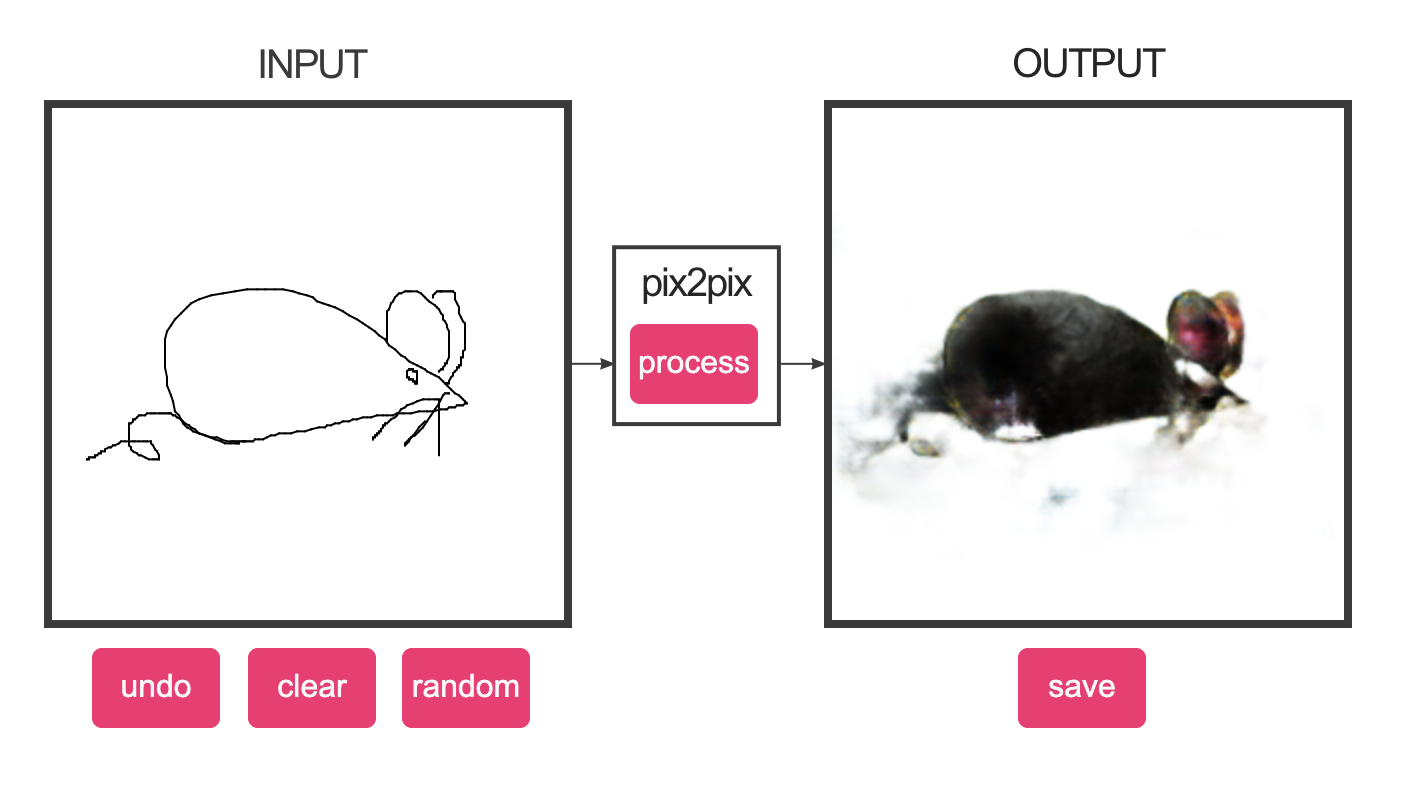

We started off by spending a bunch of our limited developer builds (heads up for future projects: a free developer account is limited to 10 builds per week, lol) testing the different build templates we could use to put AR over the mouth, mainly the image target, the face feature tracker, and the face feature detector.

We did manage to get an image tracker working on Meijie's open mouth. However, it was a very finicky system: she had to hold her mouth in exactly the same shape, under very similar lighting, for it to register. We plugged in an apple prefab and thought it was pretty funny, since it looked almost like a pig stuffed with an apple.

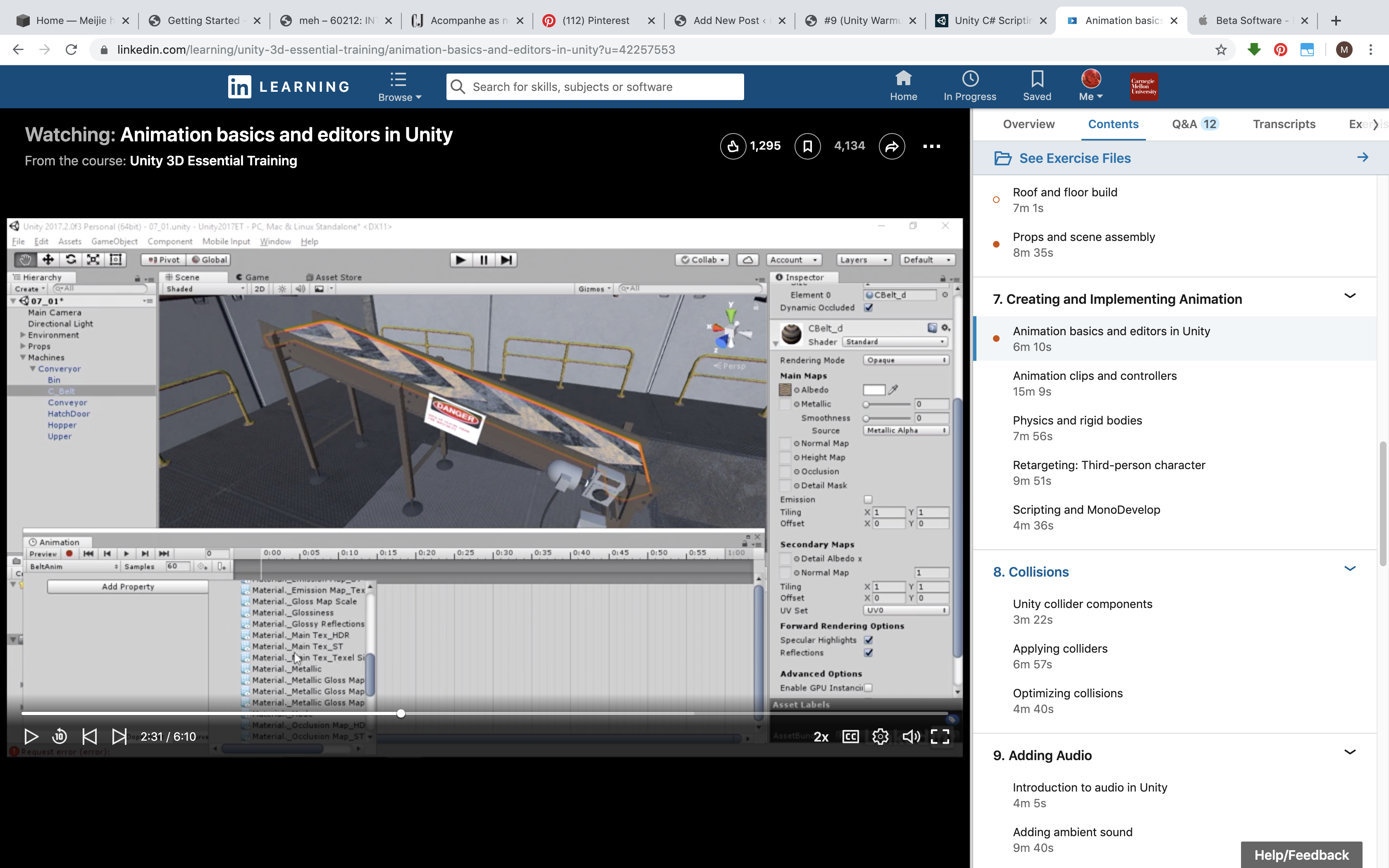

From there, we wanted to explore having some kind of animation play inside the mouth. However, that proved difficult because the tracking was not accurate enough to handle small differences in depth, and because the lighting would have to be controlled so carefully. And since the image target also struggled to detect the mouth reliably, we decided to move to the face mesh and the facial feature detector.

We combined the face mesh and the feature detector so that a duck appears on the tongue whenever the mouth is open.
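The "appear when the mouth is open" trigger boils down to a threshold on how far apart the lips are. Below is a minimal, engine-agnostic sketch of that logic, not the code we actually used in our build. It assumes the face tracker hands you normalized lip landmark coordinates every frame; the `MouthState` class, the landmark arguments, and both threshold values are hypothetical and would need tuning. Using two thresholds (hysteresis) keeps the duck from flickering when the mouth hovers right at the edge.

```python
from dataclasses import dataclass


@dataclass
class MouthState:
    """Hypothetical per-frame mouth-open trigger with hysteresis."""
    open_threshold: float = 0.35   # assumed openness ratio that shows the duck
    close_threshold: float = 0.25  # assumed openness ratio that hides it again
    duck_visible: bool = False

    def update(self, upper_lip_y: float, lower_lip_y: float,
               mouth_left_x: float, mouth_right_x: float) -> bool:
        """Return whether the duck should be visible for this frame.

        Openness = vertical lip gap divided by mouth width, so the value
        stays roughly the same whether the face is near or far from the camera.
        """
        width = abs(mouth_right_x - mouth_left_x)
        if width == 0:
            # Degenerate landmarks (e.g. tracking lost): keep the previous state.
            return self.duck_visible
        openness = abs(lower_lip_y - upper_lip_y) / width
        if not self.duck_visible and openness > self.open_threshold:
            self.duck_visible = True
        elif self.duck_visible and openness < self.close_threshold:
            self.duck_visible = False
        return self.duck_visible


if __name__ == "__main__":
    state = MouthState()
    # Made-up landmark values: closed, wide open, half open, nearly closed.
    for lower in (0.52, 0.58, 0.56, 0.54):
        print(state.update(0.50, lower, 0.40, 0.60))
```

With these made-up values the duck stays hidden, appears once the mouth opens wide, stays visible while the mouth is only half open (above the lower threshold), and disappears when the mouth nearly closes.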

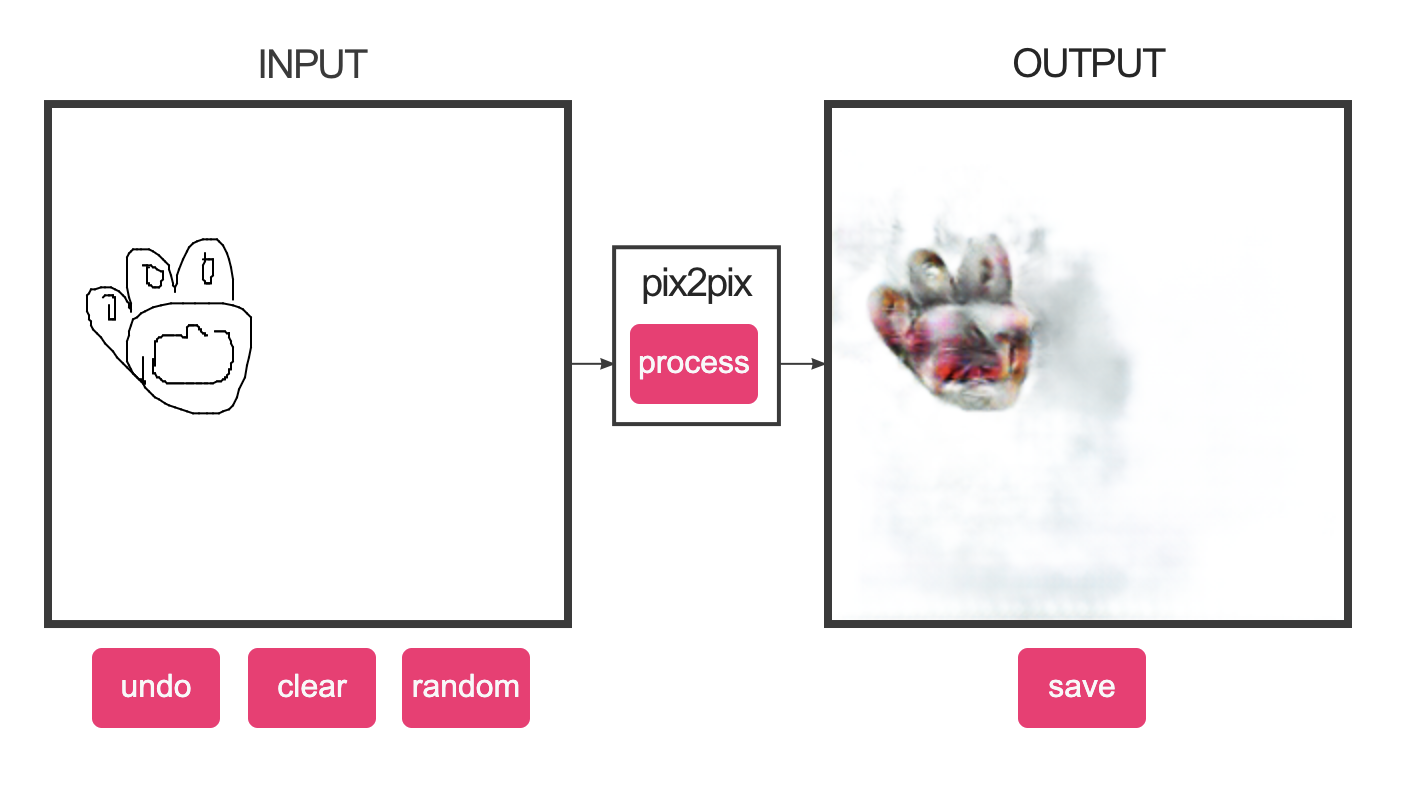

(cat paw!)