Author: tli

tli-UnityExercises

Dynamic Mesh Generation

In the spring semester, I created a rough prototype of a drawing/rhythm game that drew lines on a canvas by following DDR-style arrows that scrolled on the screen. For my first Unity exercise, I prototyped an algorithm to dynamically generate meshes given a set of points on a canvas. I plan to use this algorithm in a feature that I will soon implement in my game.

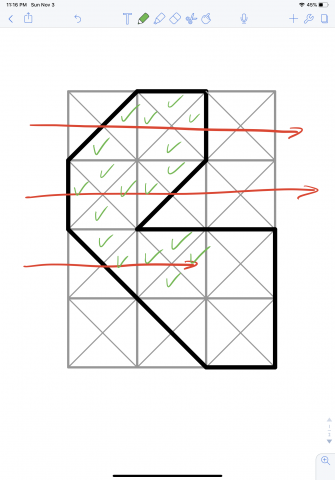

There are a couple of assumptions I make to simplify this implementation. First, I assume that all vertices are points on a grid. Second, I assume that all lines are either straight (horizontal or vertical) or diagonal. These assumptions mean that I can break up each square in the grid into four sections (the triangles formed by its two diagonals). By splitting it up this way, I can scan each row from left to right. I check whether my scan intersects a left edge, a forward diagonal edge, or a back diagonal edge, and I then use this information to determine which vertices and which triangles to include in the mesh.
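A minimal Python sketch of this kind of fill follows. It assumes the shape arrives as a closed polygon with integer grid vertices and edges that are horizontal, vertical, or 45° diagonal. Each cell is split along both diagonals into four quarter-triangles. Because every allowed edge runs along a cell side or a cell diagonal, each quarter-triangle is either entirely inside or entirely outside the shape. Rather than tracking which left, forward-diagonal, or back-diagonal edges the scan has crossed, this sketch swaps in an even-odd ray cast from each quarter-triangle's centroid, which should give the same inside/outside classification. The function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def point_in_polygon(px: float, py: float, poly: List[Point]) -> bool:
    """Even-odd rule: cast a ray toward +x and count how many edges it crosses."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge straddles the horizontal line y = py
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def quarter_triangles(x: int, y: int) -> List[Triangle]:
    """Split the unit cell with lower-left corner (x, y) along both diagonals.

    All four triangles are counterclockwise in a y-up frame; reverse them if
    the engine treats clockwise triangles as front-facing.
    """
    c = (x + 0.5, y + 0.5)  # cell center, where the two diagonals meet
    a, b, d, e = (x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)
    return [(a, b, c), (b, d, c), (d, e, c), (e, a, c)]


def fill_polygon(poly: List[Tuple[int, int]]) -> List[Triangle]:
    """Collect every quarter-triangle whose centroid lies inside the polygon."""
    xs = [p[0] for p in poly]
    ys = [p[1] for p in poly]
    triangles: List[Triangle] = []
    for y in range(min(ys), max(ys)):        # one row of cells at a time
        for x in range(min(xs), max(xs)):    # scan the row left to right
            for tri in quarter_triangles(x, y):
                cx = sum(p[0] for p in tri) / 3
                cy = sum(p[1] for p in tri) / 3
                if point_in_polygon(cx, cy, poly):
                    triangles.append(tri)
    return triangles
```

For example, the right triangle with corners (0, 0), (2, 0), and (0, 2) yields eight quarter-triangles: four from the fully covered cell and two from each of the two cells cut by the diagonal.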

A pitfall of this algorithm is double-counting vertices, but that can be addressed by maintaining a data structure that tracks which vertices have already been included.
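A minimal sketch of such a tracking structure follows. It assumes triangles arrive as tuples of (x, y) points, such as the quarter-triangles of a grid fill. A dictionary maps each position to the index where it was first stored, and every triangle is emitted as three indices into one shared vertex list. That flat index layout, three integers per triangle, is also what Unity's `Mesh.triangles` expects. The function name is hypothetical. Grid corners and cell centers are exact in floating point, so using them directly as dictionary keys is safe here.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def build_indexed_mesh(
    triangles: List[Tuple[Point, Point, Point]]
) -> Tuple[List[Point], List[int]]:
    """Deduplicate shared vertices and return (vertices, triangle indices)."""
    index_of: Dict[Point, int] = {}  # vertex position -> index in `vertices`
    vertices: List[Point] = []
    indices: List[int] = []
    for tri in triangles:
        for p in tri:
            if p not in index_of:          # first time this vertex appears
                index_of[p] = len(vertices)
                vertices.append(p)
            indices.append(index_of[p])    # reuse the stored index otherwise
    return vertices, indices
```

Two triangles that share an edge end up with four vertices instead of six.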

Shaders

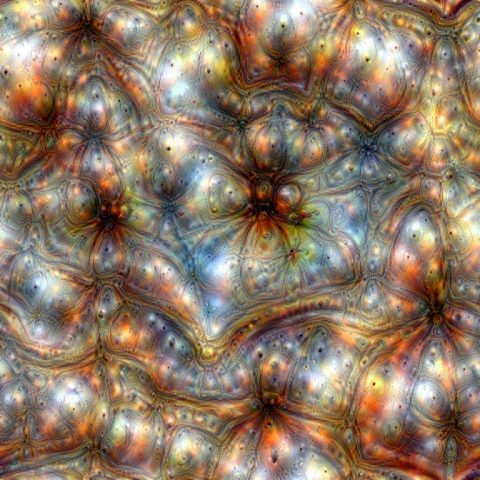

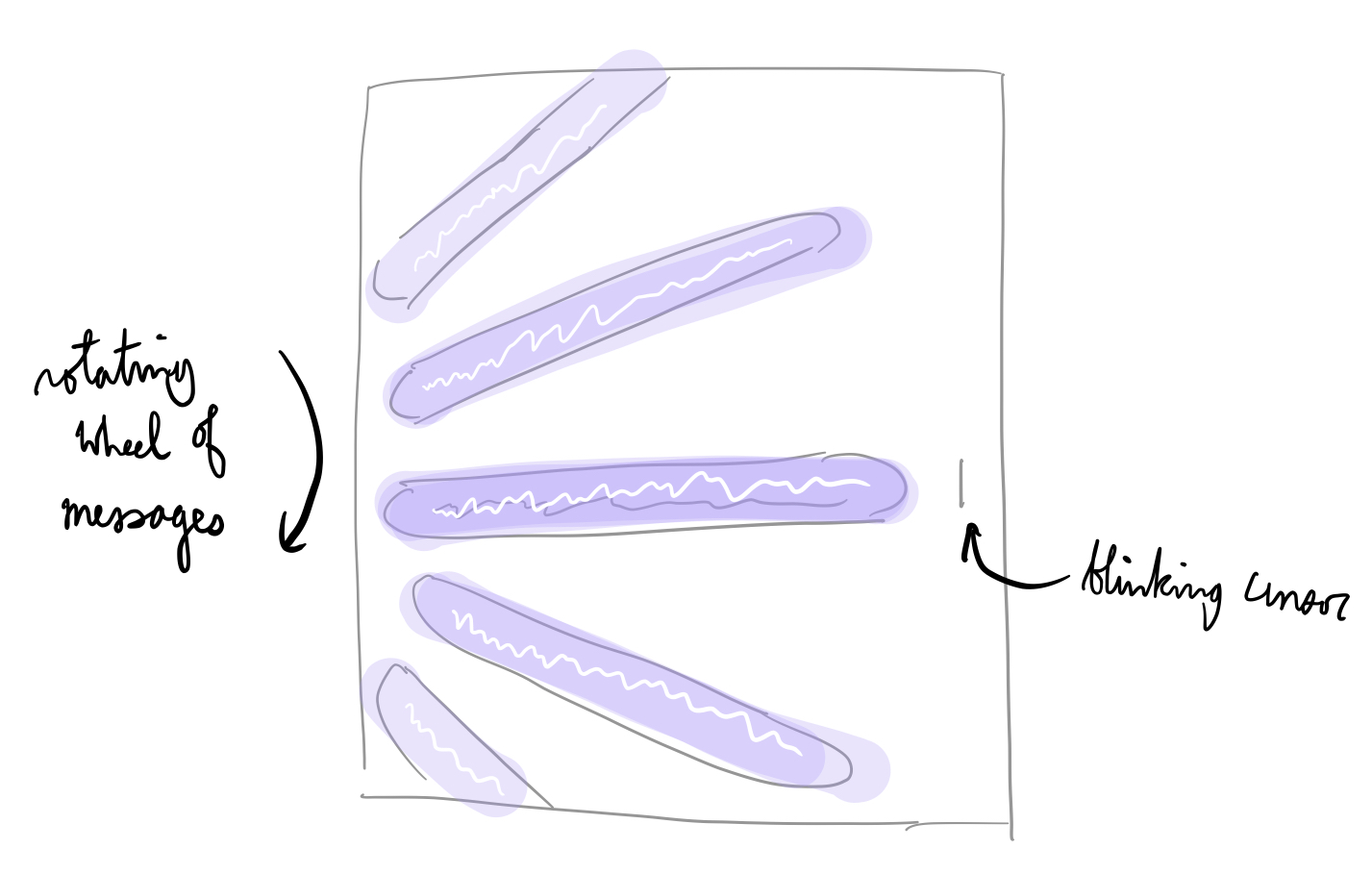

My second Unity exercise is also a prototype of a feature I want to include in my drawing game. It's also an opportunity to work with shaders in Unity, which I have never done before. The visual target I want to achieve would be something like this:

I have no idea how to do this, so the first thing I did was scour the internet for existing examples. My goal for a first prototype is simply to render a splatter effect using Unity's shaders. This thread seems promising, and so does this repository. I am still illiterate when it comes to the language of shaders, so I asked lsh for an algorithm off the top of their head. The basic algorithm seems simple: sample a noise texture over time to create the splatter effect. lsh also suggests additively blending two texture samples, and was powerful enough to spit out some pseudocode in the span of 5 minutes:

```
p = uv
col = (0.5, 1.0, 0.3)            // whatever
opacity = 1.0                    // scale down with time
radius = texture(noisetex, p)
splat = length(p) - radius
output = splat * col * opacity
```

Thank you lsh. You are too powerful.
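A CPU-side Python sketch of lsh's pseudocode for a single pixel might look like the following. Several details are assumptions rather than part of the pseudocode. The hash-based `noise` function is a hypothetical stand-in for sampling a noise texture. The UVs are recentered to [-1, 1] so that `length(p)` measures distance from the middle of the quad. The signed distance `splat` is turned into a hard inside/outside mask instead of being multiplied in directly. The second noise sample is scrolled by time to give the additive blend something to animate.

```python
import math
from typing import Tuple

Color = Tuple[float, float, float]


def noise(x: float, y: float) -> float:
    """Hypothetical stand-in for texture(noisetex, p): a hash-based value in [0, 1)."""
    n = math.sin(x * 12.9898 + y * 78.233) * 43758.5453
    return n - math.floor(n)


def splat_pixel(u: float, v: float, t: float,
                col: Color = (0.5, 1.0, 0.3), fade_rate: float = 1.0) -> Color:
    """Evaluate the splatter pseudocode for one pixel at UV (u, v) and time t."""
    # Recenter UVs from [0, 1] to [-1, 1] so length(p) is the distance from the middle.
    px, py = u * 2.0 - 1.0, v * 2.0 - 1.0
    # Additively blend two noise samples (one scrolled by t), halved to stay in [0, 1).
    radius = 0.5 * (noise(px, py) + noise(px * 3.0 + t, py * 3.0 - t))
    # Signed distance to the noisy edge: zero or negative inside the splat.
    splat = math.hypot(px, py) - radius
    mask = 1.0 if splat <= 0.0 else 0.0
    # Opacity scales down with time and never goes negative.
    opacity = max(0.0, 1.0 - fade_rate * t)
    return (mask * col[0] * opacity, mask * col[1] * opacity, mask * col[2] * opacity)
```

Evaluating `splat_pixel(x / 15, y / 15, t)` over a 16×16 grid for a few values of `t` gives a rough preview of the splat shrinking and fading before anything is ported to a real shader.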

After additional research, I decided that the Unity feature I'd need to use is the Custom Render Texture. To be honest, I'm still unclear about the distinction between using a render texture and using a shader directly, but I was able to find some useful examples here and here. (Addendum: After talking to my adviser, it's clear that a custom render texture outputs a texture, whereas a shader used directly in a material outputs to the camera.)

Step 1: Figure out how to write a toy shader using this very useful tutorial.

Step 2: Get this shader to show up in the scene. This means creating a material using the shader and assigning the material to a game object.

I forgot to take a screenshot, but the cube I placed in the scene turns a solid dark red.

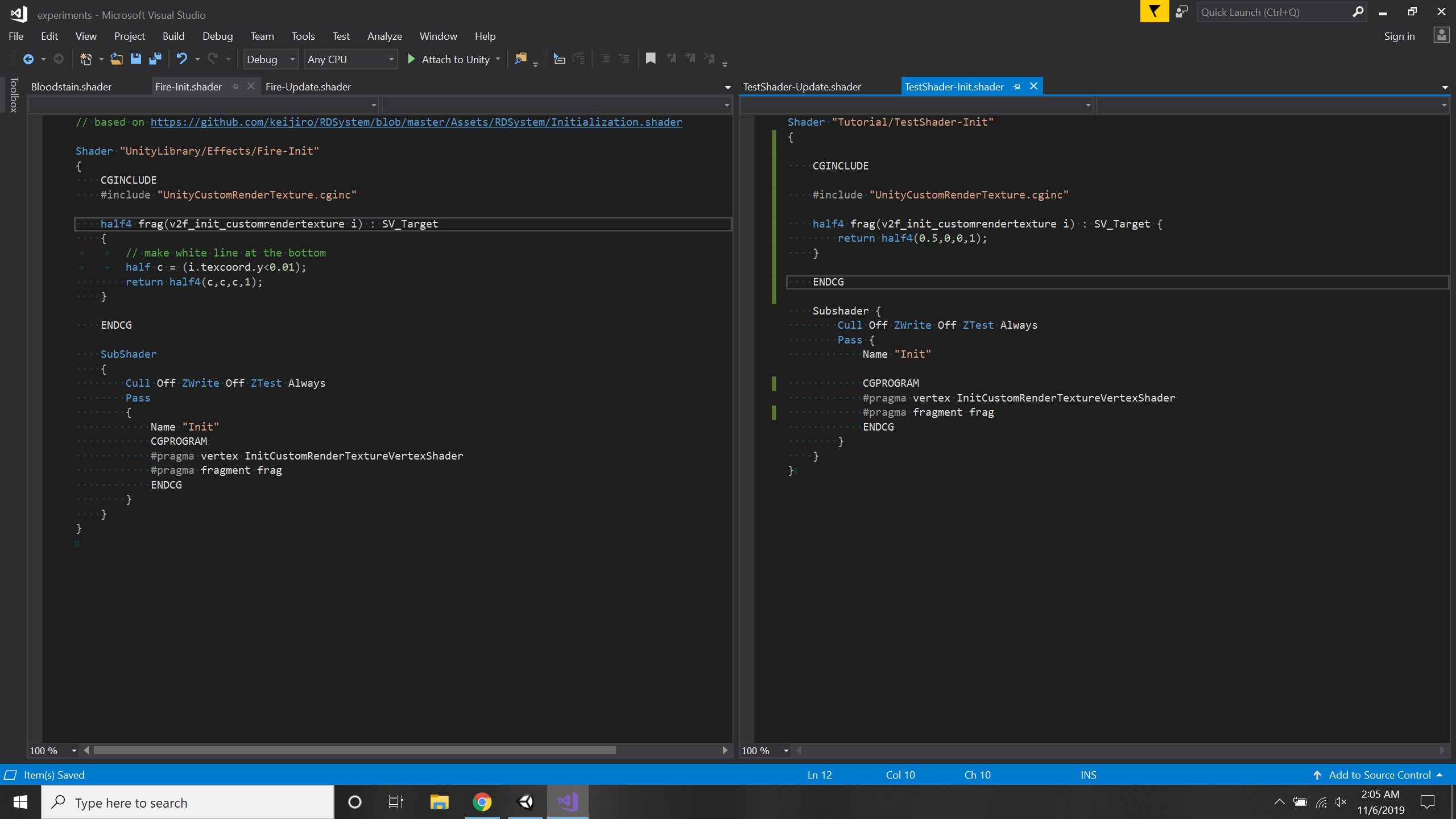

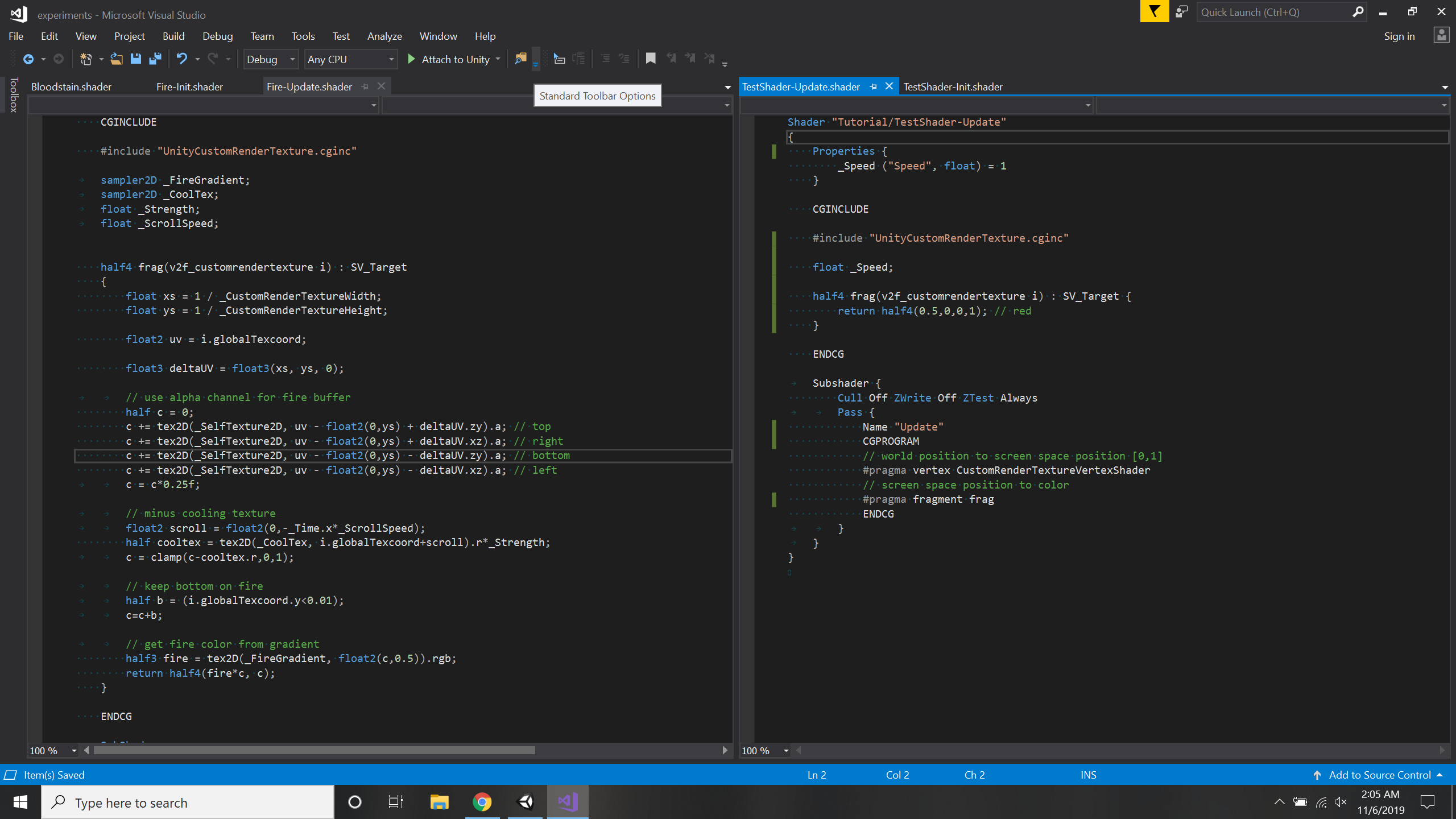

Step 3: Change this from a material to a custom render texture. To do this, I base it on the fire effect render texture I linked to earlier. I create two shaders for this render texture: one for initialization and one for updates. It's important that I make a dynamic texture for this experiment because the intention is to create a visual that changes over time.

I then assign them to the corresponding fields in the custom render texture.

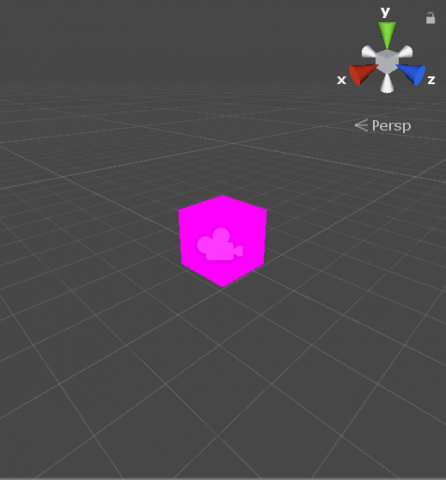

But this doesn't quite work...

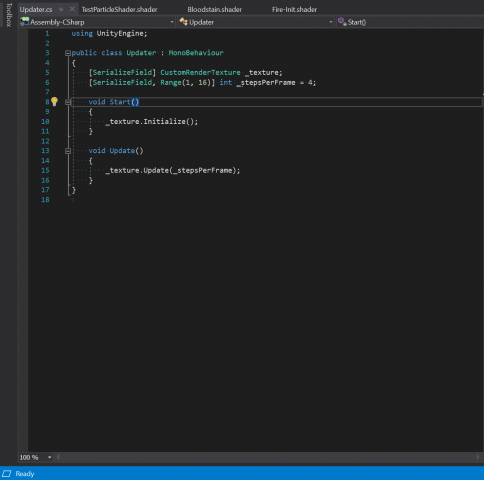

After thirty minutes of frustration, I look at the original repository that the fire effect is based on. I realize that that experiment uses an update script that manually updates the texture.

I attach this script to a game object and assign the custom render texture I created to its texture field. Now it works!

Step 4: Meet with my adviser and learn everything I was doing wrong.

After a brief meeting with my adviser, I learned the distinction between assigning a shader to a custom render texture, the texture to a material, and the material to an object, versus assigning a shader directly to a material and the material to an object. I also learned how to change the update mode of the custom render texture, as well as how to read the texture from the previous frame in order to render the current frame. The result of this meeting is a shader that progressively blurs the start image at each timestep:

I'm very sorry to my adviser for knowing nothing.
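A rough Python sketch of that feedback loop separates an initialization pass from an update pass, the same split as the two custom render texture shaders. In Unity the previous frame would come from the render texture itself (a double-buffered Custom Render Texture). Here it is simply the grid returned by the last call. The single bright start pixel and the 3x3 box blur are assumptions, not necessarily what the adviser's shader does, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def init_pass(width: int, height: int) -> Grid:
    """Initialization pass: a hypothetical start image with one bright pixel in the center."""
    frame = [[0.0] * width for _ in range(height)]
    frame[height // 2][width // 2] = 1.0
    return frame


def update_pass(prev: Grid) -> Grid:
    """Update pass: each pixel becomes the 3x3 box-blur average of the previous frame."""
    h, w = len(prev), len(prev[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:  # skip samples that fall off the edge
                        total += prev[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


frame = init_pass(9, 9)
for _ in range(3):
    frame = update_pass(frame)  # each timestep reads only the previous timestep's output
```

Because each update reads only the previous output, the blur compounds over time, which is why the image keeps getting softer at every timestep.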

Step 5: Actually make the watercolor shader... Will I achieve this? Probably not.

tli-SituatedEye

It's a bird! It's a plane! It's a drawing canvas that attempts to identify whether the thing you're drawing is a bird or a plane.

I am not too happy with the result of this project because it's basically a much worse version of Google's Quick, Draw! Initially, I wanted to use ml5 to make a model that would attempt to categorize images according to this meme:

I planned to host this as a website where visitors who stumbled across my page could upload images to complete the chart and submit them for the model to learn from. However, I ran into quite a few technical difficulties, including but not limited to:

- submitting images to a database.

- training the model on newly submitted images.

- making the model persistent. The inability to save/load the model was the biggest roadblock to this idea.

- cultivating a good data set in this way.

My biggest priority in choosing a project idea was finding a concept where the accuracy of the model wouldn't obstruct the effectiveness of its delivery, so something as subjective as this meme was a good choice. However, I had to pivot to a much simpler idea that could work in the p5.js web editor because of all the problems that came with the webpage-on-Glitch approach. I wanted to continue with this meme format, but again, issues with loading and submitting images made me pivot to using drawings instead. With the drawing approach, the meme format no longer made sense, hence the change of labels to bird/plane.

I don't have much to say about my Quick, Draw! knockoff besides that I'm mildly surprised by how well it works, even with the many flaws in my data set.

Some images from the dataset:

An image of using the canvas:

A video:

tli-MachineLearning

A. Pix2Pix

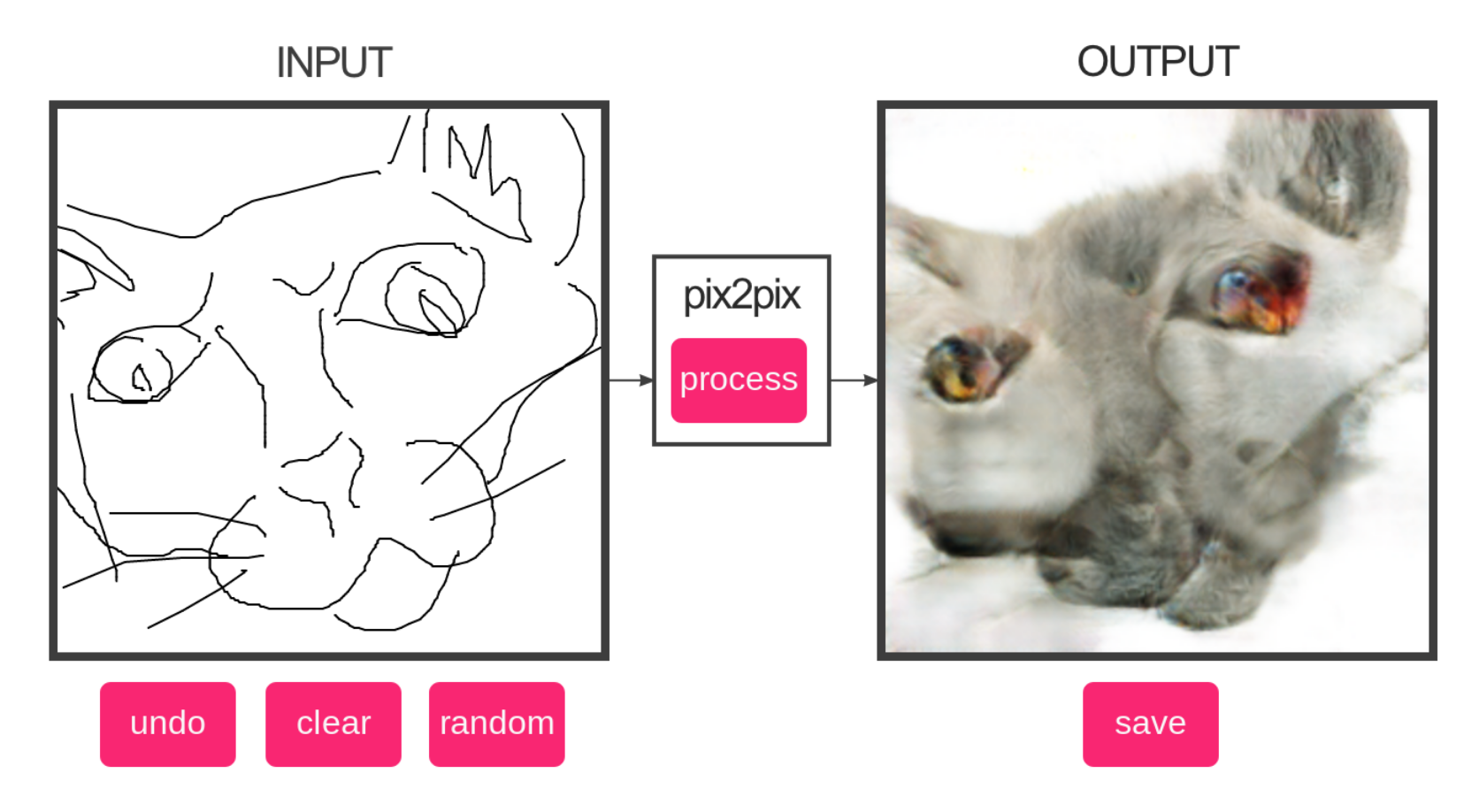

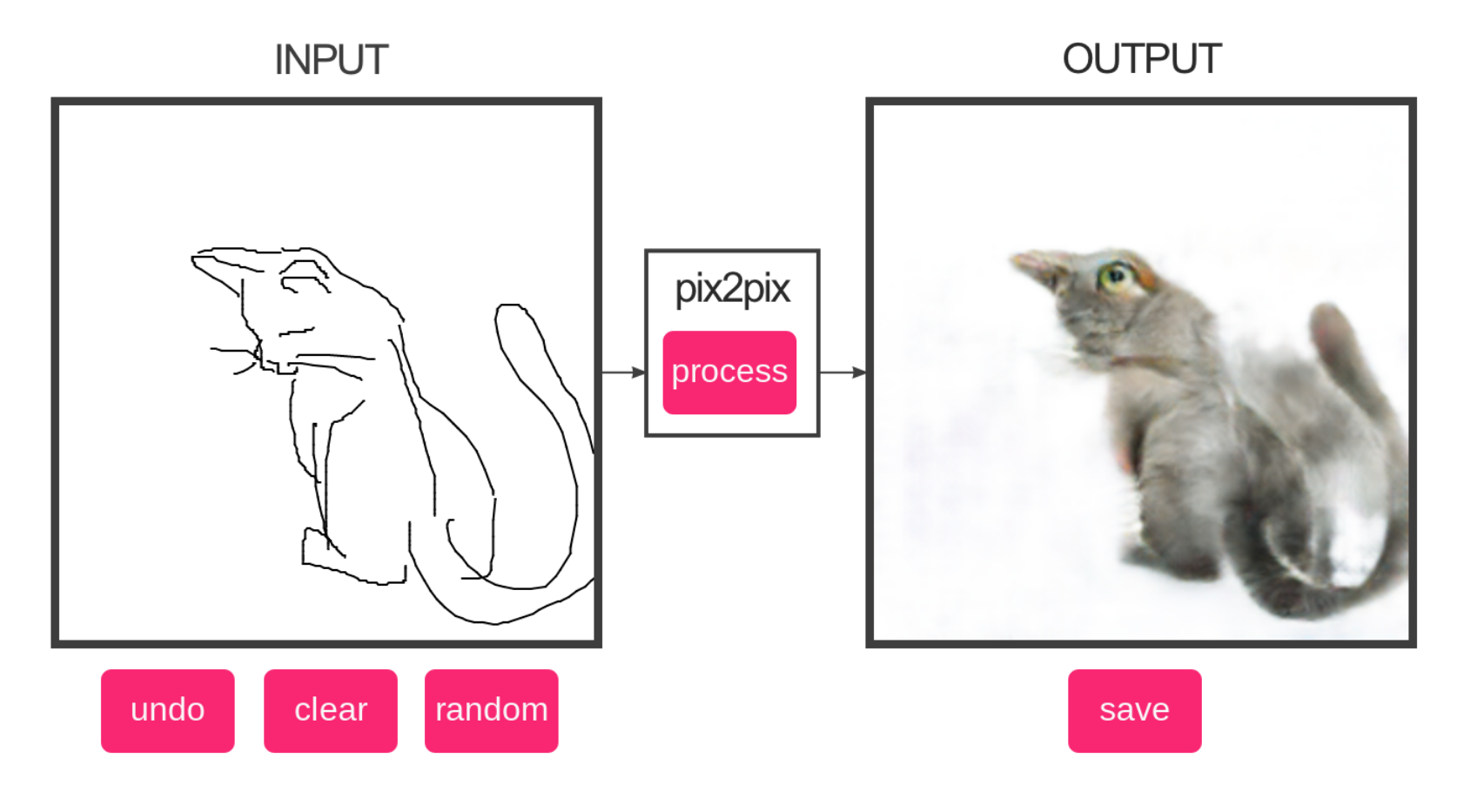

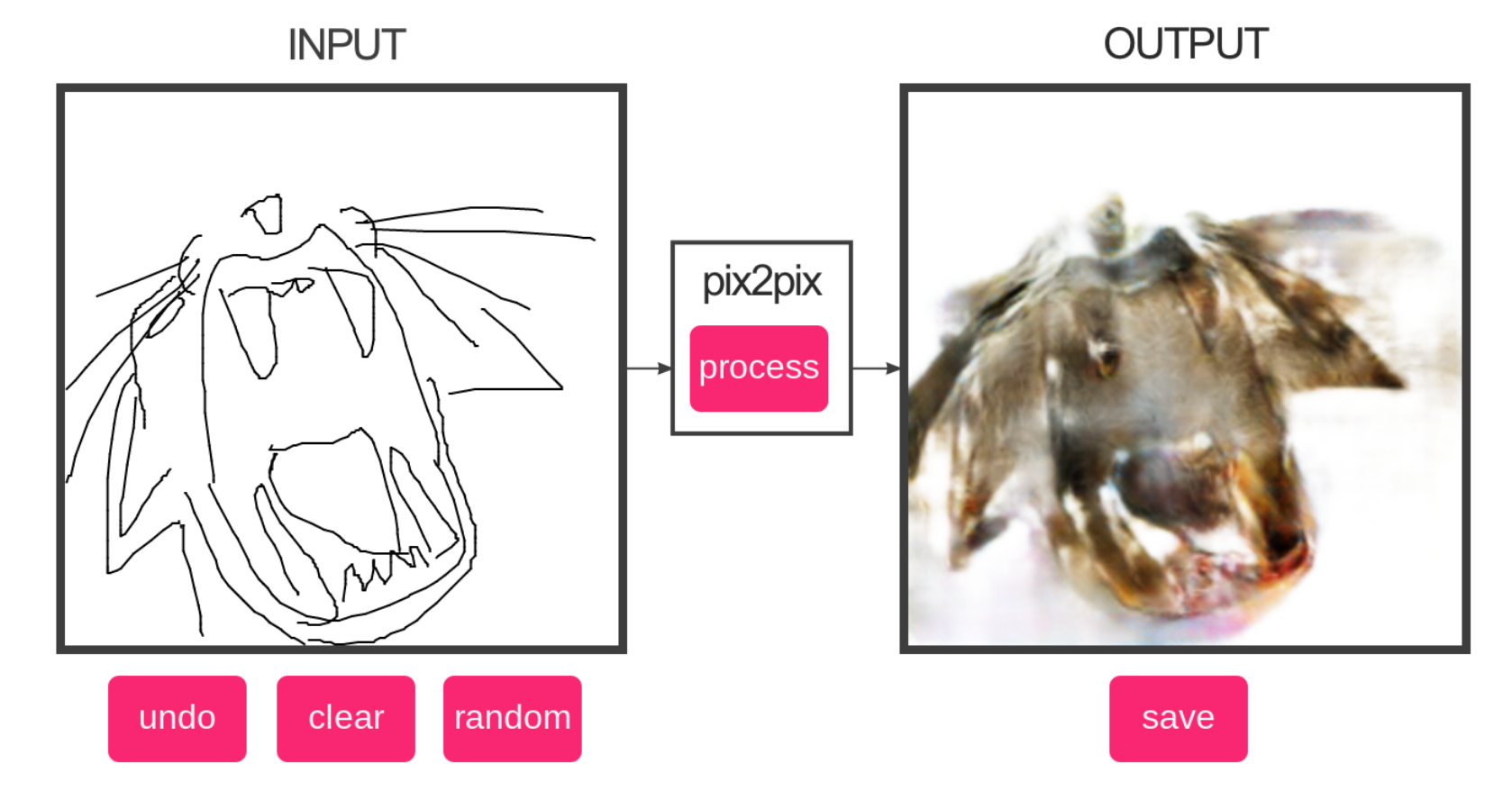

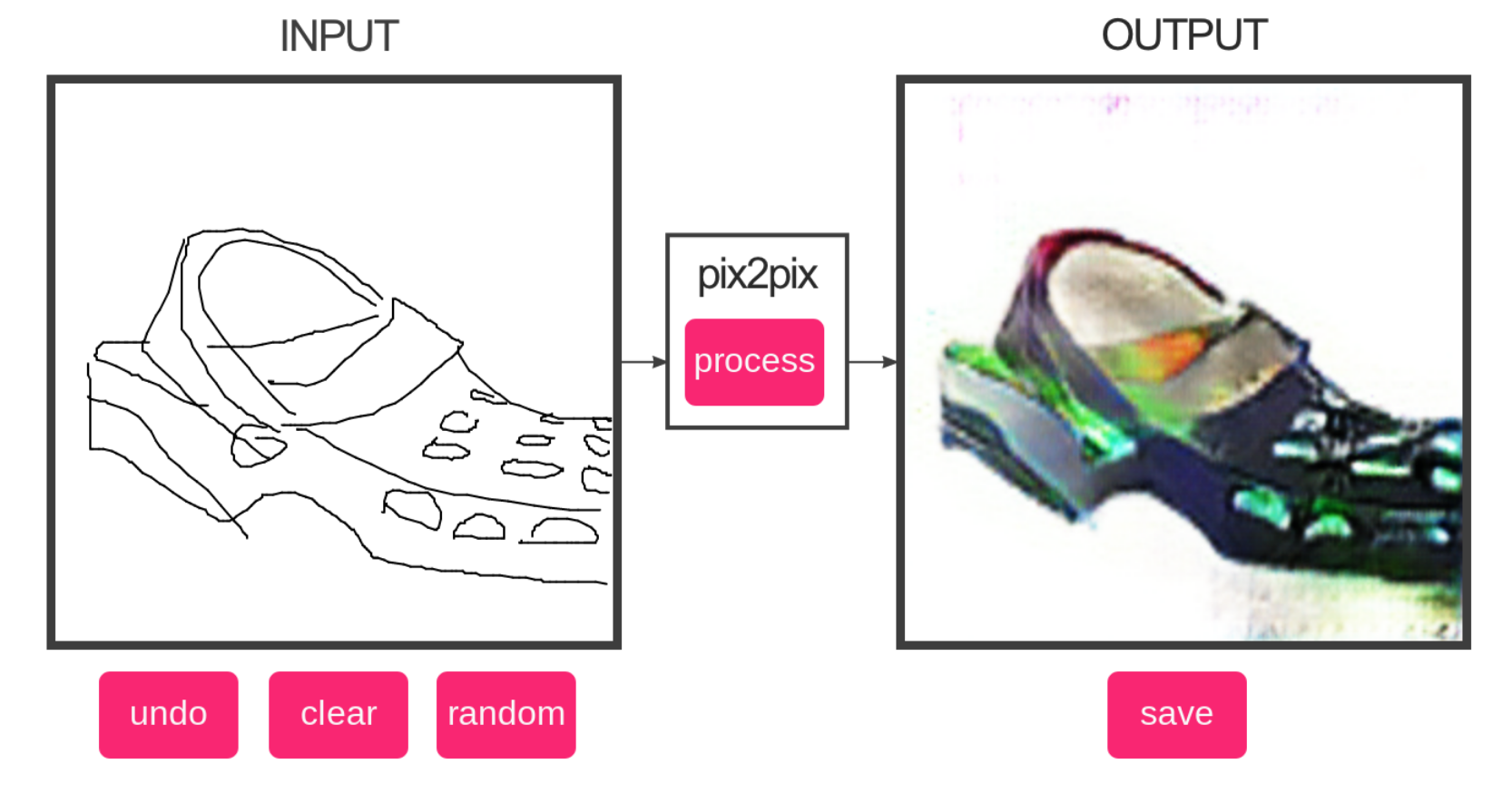

It is interesting to see how edges2cats interpreted unconventional angles of cats.

Bonus crocs:

B. GANPaintStudio

I found it interesting how the network interpreted "erasing" a semantic concept. The door brush in particular seems to un-doorify doors by turning them into windows.

C. ArtBreeder

This tool is much better at creating uncanny and discomforting images than a human is. I find that interesting because fear is such a difficult emotion to inspire in an image.

D. Infinite Patterns by Alexander Mordvintsev

I used a sketch I made recently as the input image. The CPPN tool seemed to struggle with the simple color palette, but the DeepDream tool was able to create a psychedelic pattern.

E. GPT-2

I first tried the title of the most popular fanfic on Archive of Our Own as a prompt.

I am Groot. The only thing you did wrong was get in my way." "What did you just say?" "I said, 'I am Groot.'" "That has got to sting." "It does." "But don't you feel like you can let yourself go?" "Yes, I do." "I will let you go if it hurts." "Then I'll tell you everything. So go on." She was so sweet. She'd never met a man with such a beautiful face. She had her own power. "I don't want to talk about it," she said. "Let's forget it." Then she started on her story. "And why the tree there?" he asked. "I thought it was just for fun. It was nothing bad." "And the flower?" "Well, I don't remember, but it's my favorite. I wanted to bring flowers to you when you found out I can't fly." "

Then Comes a Mist and a Weeping Rain by Faith Wood (faithwood) Fandoms: Harry Potter - J. K. Rowling Tags No Archive Warnings Apply Draco Malfoy/Harry Potter Harry Potter Draco Malfoy Gregory Goyle Millicent Bulstrode Ernie Macmillan Hermione Granger Ron Weasley Humor Romance Hogwarts Eighth Year HP: EWE Post - Deathly Hallows Complete Summary It always rains for Draco Malfoy. Metaphorically. And literally. Ever since he had accidentally Conjured a cloud. A cloud that's ever so cross. "You shouldn't have conjured one at first," Goyle said. "I didn't know the clouds could get so big," Hermione said dryly. "Then why did you do it again?" "I had to," Hermione said. "I suppose the weather's going to kill one of us eventually," Goyle said. "But at least we won't have to face those clouds every time we meet." - E

F. Google AI Experiments

I'm interested in how many of these experiments combine AI with visual or aural input and output from the user. I tried Shadow Art, but I found that the noise introduced by video capture made it difficult to use in the conditions I was in. Additionally, the interaction itself wasn't very interesting. The second experiment I tried, Semi-Conductor, was more compelling to me because rather than producing just an on-screen reaction, the interaction actually felt like I was influencing an orchestra in multiple dimensions.

tli-telematic

Two Faced is a collaborative face created by two networked face trackers. One user controls the bottom half of the face. The other controls the top.

Initially, I wanted to create a chat room extension of my mouth project from the previous assignment. However, the difficulty of networking video across the Internet made me simplify my approach. I still wanted to play with faces, and I still wanted to include an interesting real-time interaction between multiple people, so my second idea was the collaborative face. In an expanded project, I would experiment with which parts of the face to separate between different clients. I would also distort a real image of a face instead of drawing simple polygons, because I really wanted to emphasize the uncanniness of splitting up a face among multiple people. A less essential addition would be to gamify the collaborative face by asking the users to react to a social situation collaboratively. For example, I could record a first-person video of a social scenario and take pictures of the collaborative face at key moments when the users are expected to react. At the end of the scenario, I would present the users with all the captured images.
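A minimal sketch of the merge step follows. It assumes both clients run the same face tracker, so their landmark lists have the same length and indexing, and that each client broadcasts its landmarks every frame. The set `TOP_INDICES` (which landmark indices count as the "top half", e.g. brows and eyes) is a hypothetical choice. A real tracker's numbering would determine it.

```python
from typing import List, Set, Tuple

Point = Tuple[float, float]

# Hypothetical: indices of the landmarks treated as the top half of the face.
TOP_INDICES: Set[int] = {0, 1, 2, 3}


def merge_faces(top_pts: List[Point], bottom_pts: List[Point],
                top_indices: Set[int] = TOP_INDICES) -> List[Point]:
    """Build one face: top-half landmarks from one client, the rest from the other."""
    if len(top_pts) != len(bottom_pts):
        raise ValueError("both trackers must use the same landmark layout")
    return [top_pts[i] if i in top_indices else bottom_pts[i]
            for i in range(len(top_pts))]
```

Changing which parts of the face go to which client then comes down to editing the index set.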

tli-techniques

Reference and examples of p5.js

I was surprised to find that the p5.js examples include a soft body dynamics simulation. I find it difficult to create things with organic movement through code, so this is something I could use to achieve that goal.

Libraries of p5.js

I'm interested in this library because it seems very powerful for physical computing projects, which I would like to explore.

Glitch.com blocks

I have wanted to send emails in projects in the past, so I'm pleased to see a building block that already demonstrates how.

I'm intrigued by this music-making library and by how it has built-in support for music visualization as well as generative music through machine learning models. I took a computer music class in the past that explored novel ways to generate musical sound through computation, so this could be an opportunity to expand on that experience.

tli-CriticalInterface

2. (To) interface is a verb (I interface, you interface ...). The interface occurs, is action.

Notable propositions:

- Be gentle with your keyboard, after 70 years typewriting your fingers will appreciate it.

- 'View source' of a webpage, and print. Replace each verb you do not understand, by a verb you are familiar with. Read the text out loud.

- Do not click today.

I'm interested in the second tenet because it frames the interface in terms of verbs rather than a physical or visual artifact that users interact with. The interface emphasizes what a person can do--and conversely what a person can't do. In this way, the interface provides an insidious way to obscure what can and cannot be achieved within a system.

The last proposition, "do not click today," reminds me of this dilemma the most. As a CS student, I'm anecdotally familiar with a preference among the technically savvy for command line interfaces over GUIs. While both give users a way to act, computers were originally built around command line interfaces, so interacting with the command line tends to be much more powerful than interacting with the GUI. I think this is a clear example of how the presentation of the interface has powerful implications for what a user can do and how much power a user has within a system.

tli-LookingOutwards04

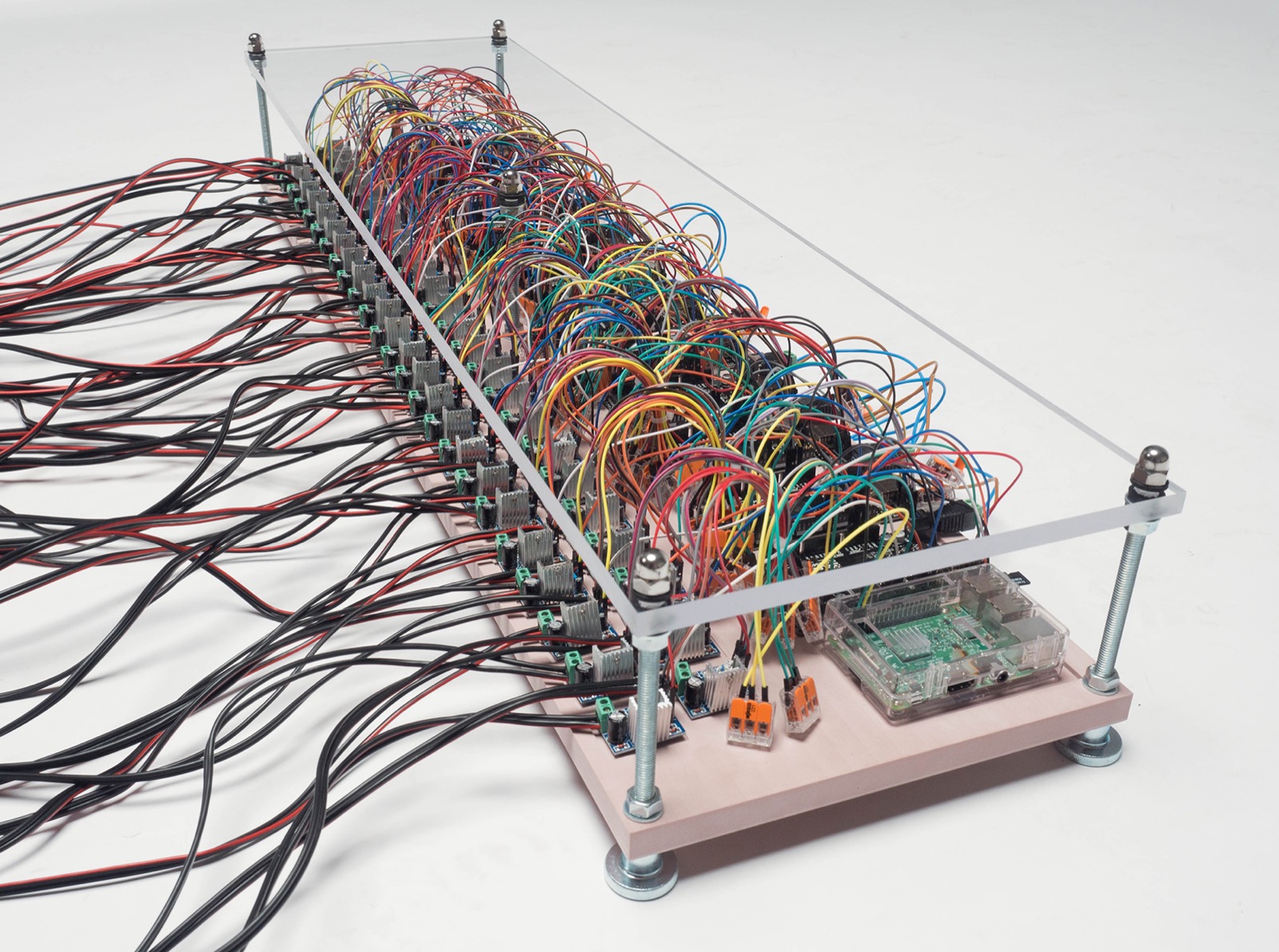

Oh my () by Noriyuki Suzuki is a Raspberry Pi and Arduino installation that exclaims "oh my god" in the appropriate language when the word "god" is tweeted on Twitter. I chose this project for my looking outwards because I am always fascinated by how social interactions manifest in a digital world, and how we can use technology as art to highlight this. Taking a deeply social construct like "god" and projecting it through the disembodied voice of a machine is a perfect example of the type of work I'd like to create. The chaotically frequent outbursts coupled with the stream of text updated in real time perhaps demonstrates something about the uncanniness of an attempt to capture "god" within the output of a machine.

I also chose this project because I'm interested in gaining more experience creating art with the Raspberry Pi and the Arduino. I created a two-channel synchronized video installation with a Raspberry Pi three years ago, and I haven't touched a technical installation since. My work is all very intangible, and I want to explore creating work that alters the space and the presence of the world.

Perhaps my only complaint about this work is that it's so heavy-handed that I don't have much to say about it besides the obvious and besides what I want to pull from it into my own practice. Sure, there could be a whole conversation about religion, but there always is, and that's not enough to make a work of art significant. Maybe I am just a heathen.

tli-Body

https://editor.p5js.org/helveticalover/sketches/jm5nlNcB7

This project was inspired by nothing but my exhaustion and my desire to finish this before 3 am. I was playing with masks in p5.js and isolating parts of my face recursively, trying to think of an idea. I was reminded of Ann Hamilton's work with mouth photography that we discussed in class, so I decided to make an interactive version of that through p5.js. The masking and the scaling are quite sloppy, so if I have the time and willpower, I'd like to come back and refine it in the future. I wanted to play with orifices on the face in particular, so maybe I can also expand this to other parts of the face like the eyes or the nostrils. Maybe this says something about how you reveal an image of yourself by opening your orifices (e.g. talking), or maybe I am just trying to invent meaning for a quick sketch at 1:54am. It would be interesting to explore this as a social media communication form, like a chat lobby where everyone is depicted in this form. Maybe I can try that in the future, if I can figure out how to use handsfree.js on Glitch.

Again, I have no sketches because I thought of this idea at about 11pm.

tli-Clock

https://my-time-with-you.glitch.me/

This clock is a rather personal one. I took every message I sent to this individual, mapped it to a time of day, and presented it in a cyclical loop--a clock of my interactions with this individual. I explore the thought that my moods and my interactions with other people are cyclical, and I take it to a personal extreme by presenting my messages as a clock. What does it mean to be stuck in an emotional cycle with another person? I was seeking some closure by creating this, but I am not sure I achieved that.

I downloaded a JSON of all my messages to this person from Facebook, parsed it within Glitch, and served the most relevant messages to any client who connects to the web page in the form of a clock. The sizes of the text boxes and the minute rollover are janky, but I think this prototype delivers the concept well enough.
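A Python sketch of the parse-and-bucket step follows. It rests on several assumptions. The export is assumed to be a JSON object with a `messages` array whose entries carry `sender_name`, `timestamp_ms`, and `content`, which is my understanding of the Facebook export shape, so check the field names in an actual file. "Most relevant" is read here as "sent at the same minute of the day". Times are bucketed in UTC, whereas a real clock would use a chosen local time zone. The function names are hypothetical.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone
from typing import DefaultDict, List


def build_clock(export_json: str, me: str) -> DefaultDict[int, List[str]]:
    """Bucket my text messages by minute of the day (0..1439), ignoring the date."""
    data = json.loads(export_json)
    by_minute: DefaultDict[int, List[str]] = defaultdict(list)
    for msg in data.get("messages", []):
        # Skip the other person's messages and entries without text (photos, stickers, ...).
        if msg.get("sender_name") != me or "content" not in msg:
            continue
        sent = datetime.fromtimestamp(msg["timestamp_ms"] / 1000, tz=timezone.utc)
        by_minute[sent.hour * 60 + sent.minute].append(msg["content"])
    return by_minute


def messages_for(by_minute: DefaultDict[int, List[str]], now: datetime) -> List[str]:
    """Return the messages that belong on the clock face at the current minute."""
    now = now.astimezone(timezone.utc)
    # The minute of day is always in 0..1439, so the clock wraps at midnight
    # without any special rollover handling.
    return by_minute.get(now.hour * 60 + now.minute, [])
```

Keying on minute of day rather than full timestamps is what turns years of messages into a single repeating cycle.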

9/19/2019 Addendum

In the future, I think it would be interesting to make this a web service that allows users to upload their chat histories. I had a discussion with jackalope about highly personal art and whether it is interesting. While we strongly disagree on whether personal art is interesting as art, I think we both agree that personal art is interesting to the person in question. So maybe a next step will be opening this experience up to other people.

I went back and fixed the rollover issues and the colors of the message boxes, and I added a black line to indicate the passage of each minute. I recorded additional GIFs: