The past message sender, the keeper-upper, the interrupter

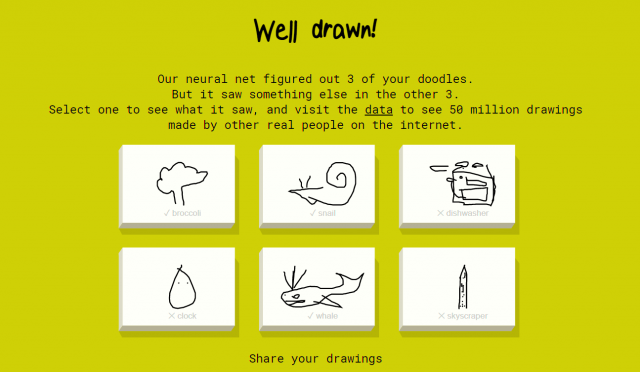

An app that plays with the idea of trying to keep up with an ongoing conversation and only coming up with something to add once the conversation has already moved on. When you add a new comment, the chat app takes the previously written message in the conversation and sends it in your name. This creates a new way to navigate a communication space: as users press send, they lose control over the direction of the conversation, and the messages intercept and disrupt the smooth flow of send and reply. Everyone is collaborating simultaneously while, at the same time, always being one step behind.
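A minimal sketch of the relay rule described above, written in TypeScript to stay close to the Node.js setup on Glitch. The class name `PastMessageRelay`, the `ChatLine` shape, and the choice to show nothing for the very first comment are all hypothetical; the original project's code is not reproduced here. In a real app, `submit` would be called from the server's message handler just before broadcasting.

```typescript
// Hypothetical shape of a line shown in the chat window.
interface ChatLine {
  author: string; // name displayed next to the message
  text: string;   // text that actually appears on screen
}

// Sketch of the "past message sender" rule: whatever you type is held back,
// and what goes out under your name is the message typed just before yours.
class PastMessageRelay {
  // Most recently typed text, waiting for the next person to press send.
  private pending: string | null = null;
  // Everything that has actually been shown in the chat so far.
  readonly log: ChatLine[] = [];

  // Called when `author` presses send with `typed`. Returns the line to
  // broadcast, or null if nothing was written before (assumption: the very
  // first comment in a conversation shows nothing yet).
  submit(author: string, typed: string): ChatLine | null {
    const previous = this.pending;
    this.pending = typed; // your comment becomes the next "past message"
    if (previous === null) {
      return null;
    }
    const line: ChatLine = { author, text: previous };
    this.log.push(line);
    return line;
  }
}

const relay = new PastMessageRelay();
relay.submit("Ana", "hi everyone");  // null: nothing earlier to send
relay.submit("Ben", "hey Ana!");     // Ben appears to say "hi everyone"
relay.submit("Ana", "how are you?"); // Ana appears to say "hey Ana!"
```

Holding back exactly one message is what keeps every participant "one step behind": your words only surface when someone else presses send, and they surface under that person's name.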

Link to webpage | Link to Glitch program

- Process -

For the telematic piece, I wanted to create a translating chat app that takes each outgoing message and, on your screen, translates it into the languages of the other chatters (excluding your own), so the original text is lost (in translation, heh!). All incoming messages are translated into your chosen language, creating the possibility of dialogue and of untangling language across boundaries.
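The routing in that design can be sketched as follows, assuming each participant has picked a language code and that the translation service is wrapped in a promise-returning `translate(text, target)` function. `Participant`, `Delivery`, `fanOut`, and `translate` are hypothetical names for illustration; this is not the Google Translate API itself, and the project's actual implementation is not shown.

```typescript
// Hypothetical wrapper around whichever translation service is used.
type Translate = (text: string, target: string) => Promise<string>;

interface Participant {
  name: string;
  language: string; // e.g. "en", "fr"
}

interface Delivery {
  senderView: string[];           // what the sender sees: the original is replaced
  delivered: Map<string, string>; // recipient name -> text in their language
}

// Translates one outgoing message for everyone else in the chat.
async function fanOut(
  sender: Participant,
  text: string,
  others: Participant[],
  translate: Translate,
): Promise<Delivery> {
  // One request per distinct target language, skipping the sender's own.
  const targets = Array.from(
    new Set(others.map((p) => p.language).filter((lang) => lang !== sender.language)),
  );
  const translations = new Map<string, string>();
  for (const lang of targets) {
    translations.set(lang, await translate(text, lang));
  }

  const delivered = new Map<string, string>();
  for (const p of others) {
    // Recipients who share the sender's language get the original text.
    delivered.set(p.name, translations.get(p.language) ?? text);
  }

  // The sender's screen shows only the translations, so the original is lost.
  const senderView = targets.map((lang) => translations.get(lang) as string);
  return { senderView, delivered };
}
```

Translating once per distinct language rather than once per recipient keeps the number of API calls down, which matters when a service caps how many translations it allows.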

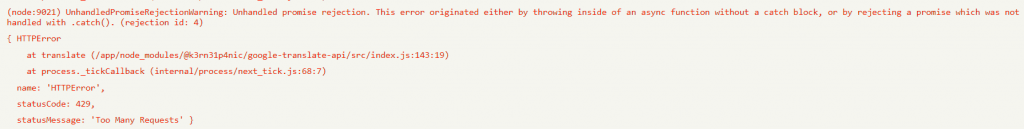

I thought that using the Google Translate API to change the text would be a great opportunity to learn more about APIs as part of this project. I wish I had not chosen to do so while also learning how to navigate Glitch and Node.js, and while trying to untangle why examples of working translations failed immediately when remixed. I ran into many obstacles trying to implement the Google Translate API in Glitch, but eventually found an alternative resource and got language detection and translation working! This was a glorious yet short-lived victory: I later discovered that the alternative API limited the number of translations it would allow, which stopped the program from working entirely at 10:40pm on Tuesday (yay!).
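Since hitting the translation limit stopped the whole program, one hedged option is to wrap the translator so a failed call falls back to the original text instead of breaking the chat. This sketch assumes a promise-returning `translate(text, target)` function (a hypothetical name); it does not detect quota errors specifically and treats any failure the same way.

```typescript
type Translate = (text: string, target: string) => Promise<string>;

// Wraps a translator so that any failure (for example, the service refusing
// requests after a usage limit) returns the untranslated text instead of
// throwing and stopping the chat.
function withFallback(translate: Translate): Translate {
  return async (text, target) => {
    try {
      return await translate(text, target);
    } catch (err) {
      console.warn(`translation to "${target}" failed, sending original text:`, err);
      return text;
    }
  };
}
```

The trade-off is that messages quietly stop being translated once the limit is reached, but the conversation itself keeps flowing.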

I'm incredibly frustrated that I was unable to get this to work, but I feel that I learned a lot from the process (not necessarily the things I set out to learn, but still useful ones). Link to this project. I will keep working on it.

So, setting that aside and reusing some of the framework I had already built for the translation project, I switched gears in order to have a working program.