ngdon-LookingOutwards09

Part of my last project involved generative text, so I looked into how neural networks can be used for it. Golan pointed me to a couple of resources, and one of them seems particularly interesting and effective: http://karpathy.github.io/2015/05/21/rnn-effectiveness/

The author applied recurrent neural networks to Wikipedia articles, Shakespeare, code, and even research papers, and the results are very realistic.

The model learned how to start and end quotation marks and brackets, which I think is one of the more difficult problems in text generation.

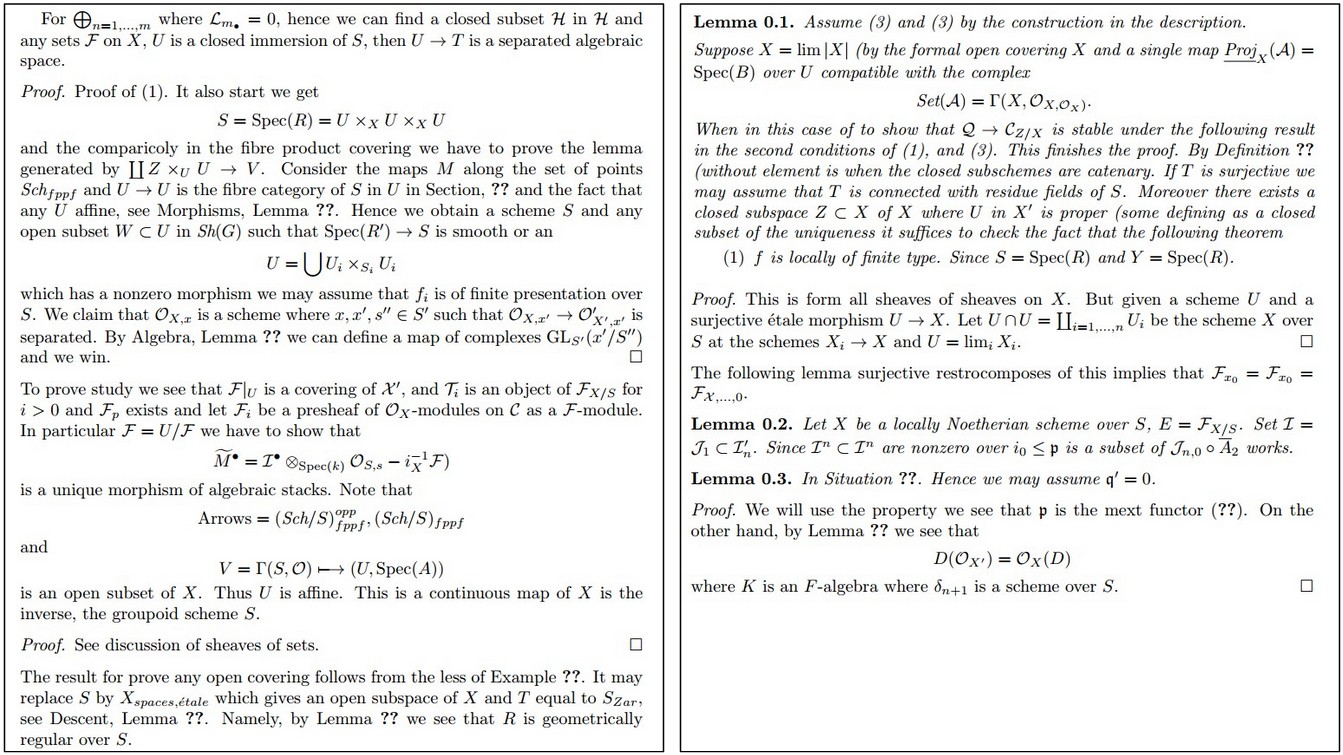

Generative paper.

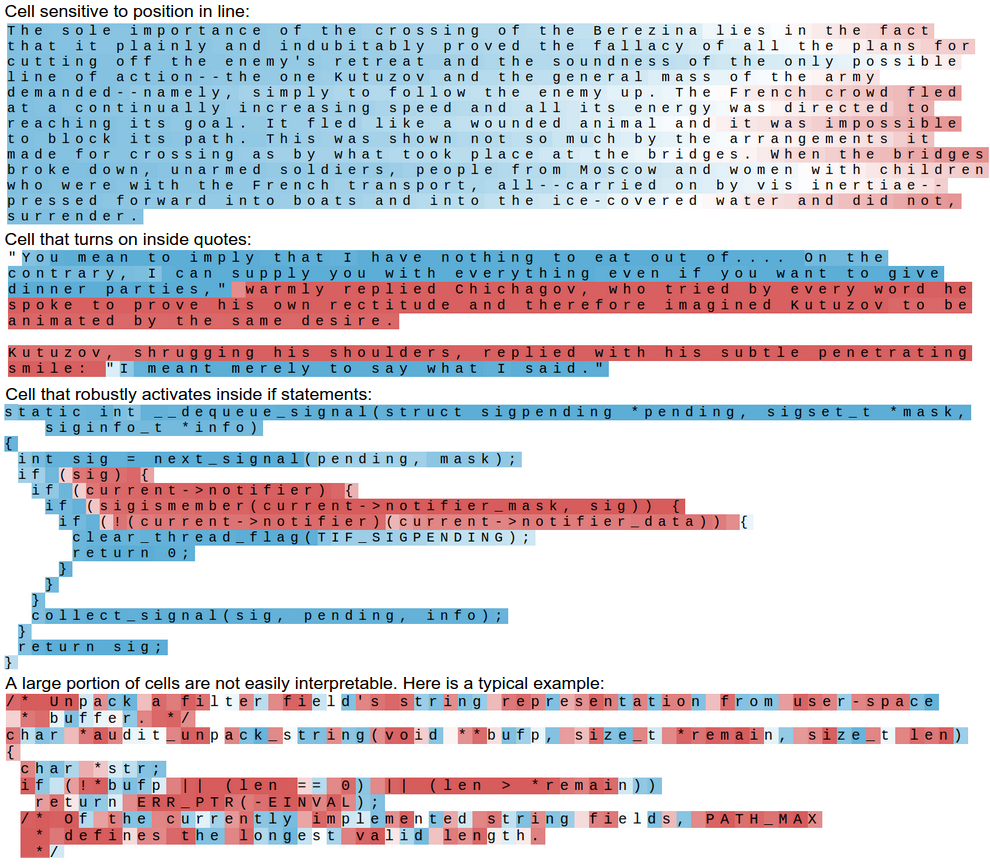

Generative code.

I’m particularly fascinated by the way neural networks magically learn. Unfortunately I never got them to work so well in my final project.

I was also planning to generate plants alongside my imaginary animals, so I researched L-systems, a type of grammar that can be used to describe the shape of a plant.

I read this paper: http://algorithmicbotany.org/papers/abop/abop-ch1.pdf, in which the usage and variations of L-systems are extensively explained.

I’m very interested in this system because it condenses a complicated graphic, such as that of a tree, into a plain line of text. Simply changing the order of a few symbols can result in a vast variety of different tree shapes.
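To make the "line of text" idea concrete, here is a minimal sketch of how such a string can be read as drawing instructions, turtle-graphics style. The function name `interpret`, the step length, and the 25 degree turn angle are my own assumptions for illustration, not values from the paper or from my project. `F` draws a segment, `+` and `-` turn left and right, and `[` / `]` save and restore the position so a branch can return to where it started.

```python
import math

def interpret(commands, step=1.0, angle_deg=25.0):
    """Turn an L-system string into a list of segments ((x0, y0), (x1, y1)).

    step and angle_deg are assumed defaults chosen for illustration.
    """
    x, y, heading = 0.0, 0.0, 90.0   # start at the origin, pointing up
    stack = []                       # saved states for '[' ... ']' branches
    segments = []
    for symbol in commands:
        if symbol == "F":
            # Move forward one step and record the line that was drawn.
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif symbol == "+":
            heading += angle_deg     # turn left
        elif symbol in ("-", "\u2212"):
            heading -= angle_deg     # turn right (ASCII or typographic minus)
        elif symbol == "[":
            stack.append((x, y, heading))
        elif symbol == "]":
            x, y, heading = stack.pop()
        # Any other symbol (such as X) only guides rewriting and draws nothing.
    return segments

if __name__ == "__main__":
    for segment in interpret("F[+F]F[-F]F"):
        print(segment)
```

The returned segments can be handed to any drawing library. Swapping a `+` for a `-`, or moving a bracket, changes which way a branch leans or where it sprouts, which is why small edits to the text give such different trees.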

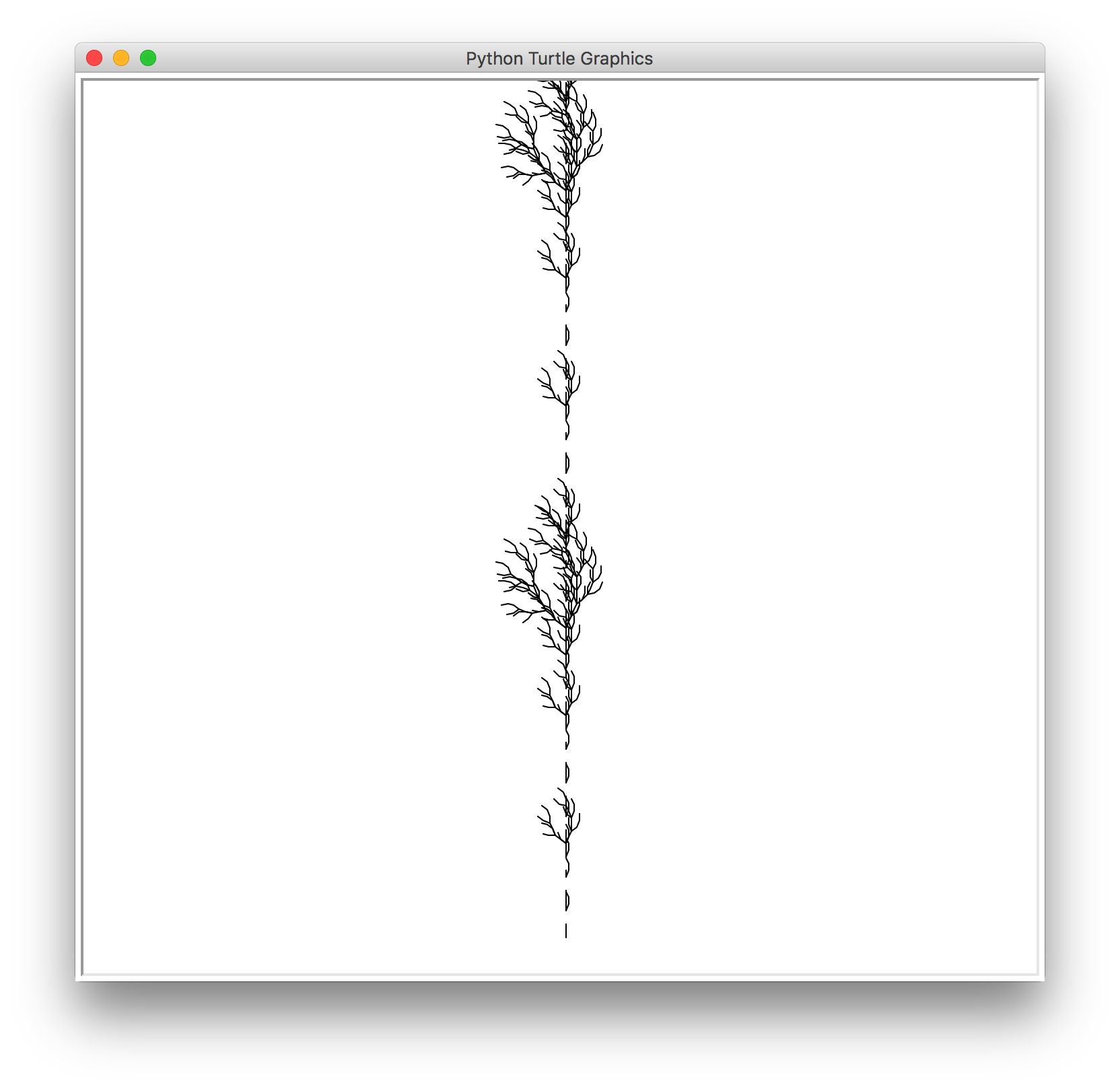

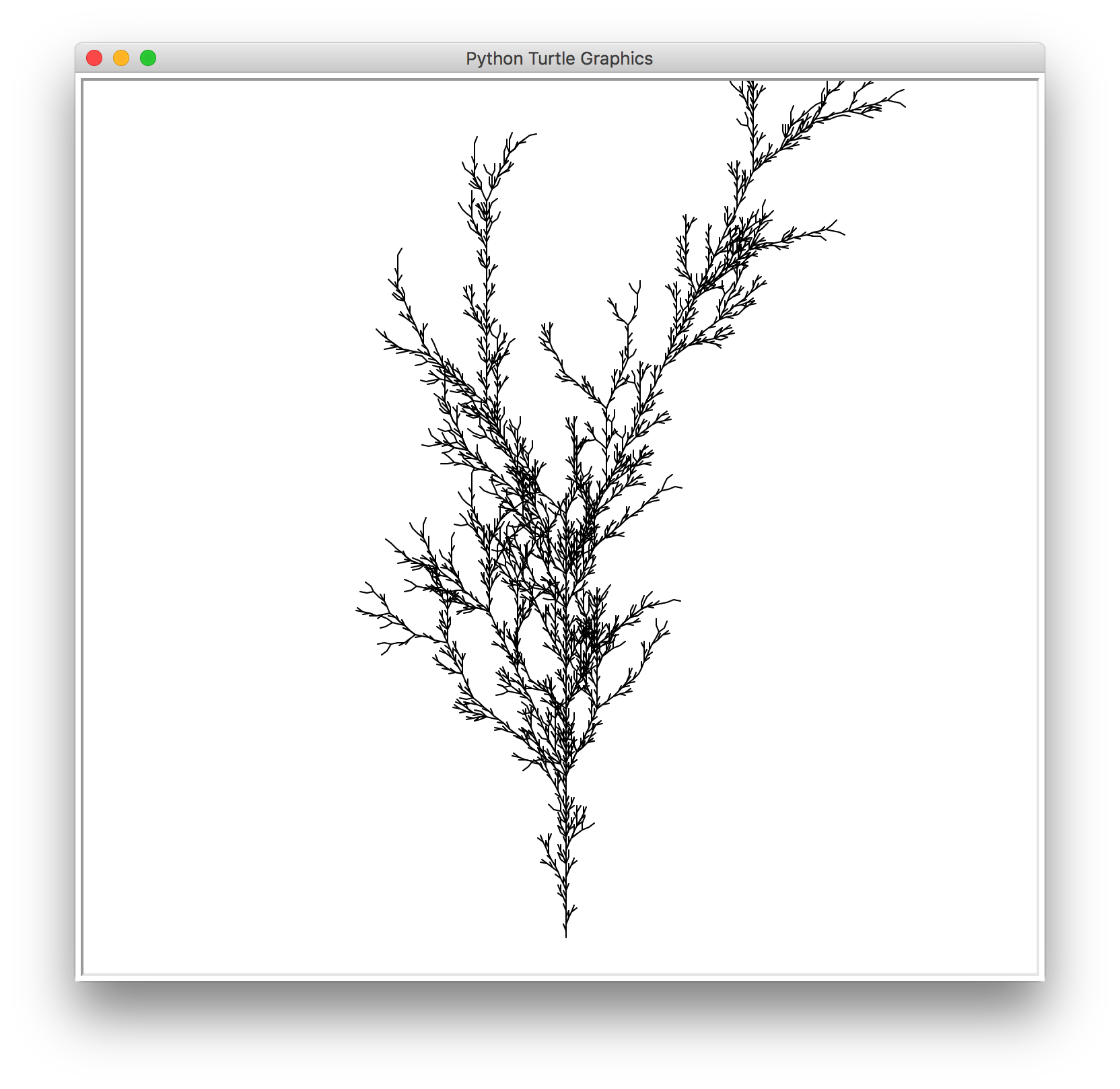

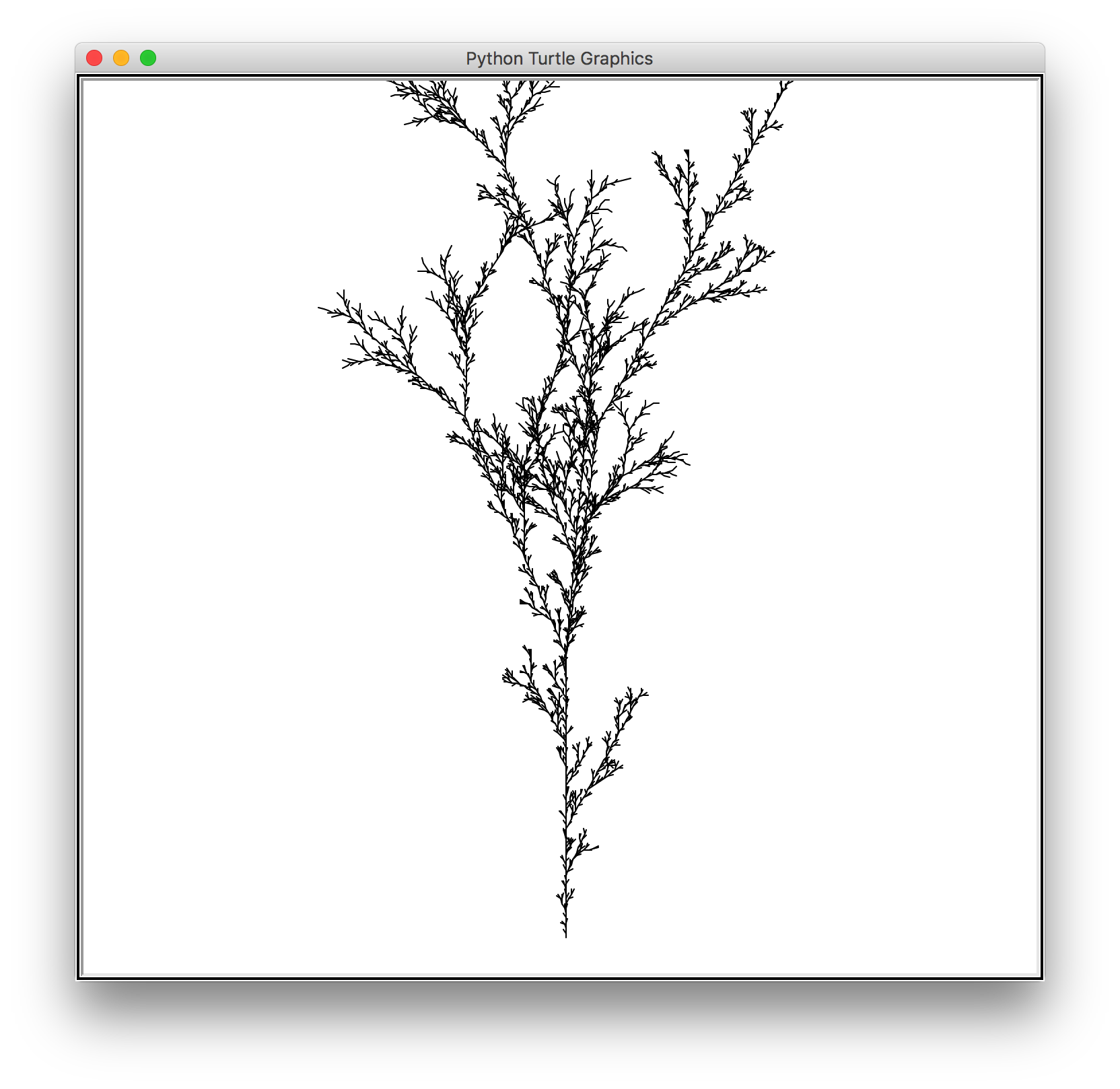

For example, the above image can be described simply by the rules

(X → F−[[X]+X]+F[+FX]−X), (F → FF)
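As a rough sketch of how these two rules grow into a long string, the snippet below rewrites every symbol in parallel for a few iterations. The starting string (axiom) `"X"` and the helper name `expand` are assumptions on my part, and I write the rules with an ASCII `-` instead of the typographic minus.

```python
# The two rules from above, with ASCII '-' in place of the typographic minus.
RULES = {
    "X": "F-[[X]+X]+F[+FX]-X",
    "F": "FF",
}

def expand(axiom, rules, iterations):
    """Apply the rewriting rules to every symbol at once, `iterations` times."""
    current = axiom
    for _ in range(iterations):
        # Symbols without a rule (+, -, [, ]) are copied over unchanged.
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current

if __name__ == "__main__":
    # The axiom "X" is an assumption for this sketch.
    for n in range(3):
        print(n, expand("X", RULES, n))
```

The string grows quickly with each iteration, since every `X` is replaced by an 18-symbol string and every `F` doubles.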

As I’m also doing text generation, I thought about the interesting possibilities of applying text generation methods to the rules themselves. That way the shapes of the trees are not limited by the number of rules that I can find, and exotic, alien-looking trees can be easily churned out.

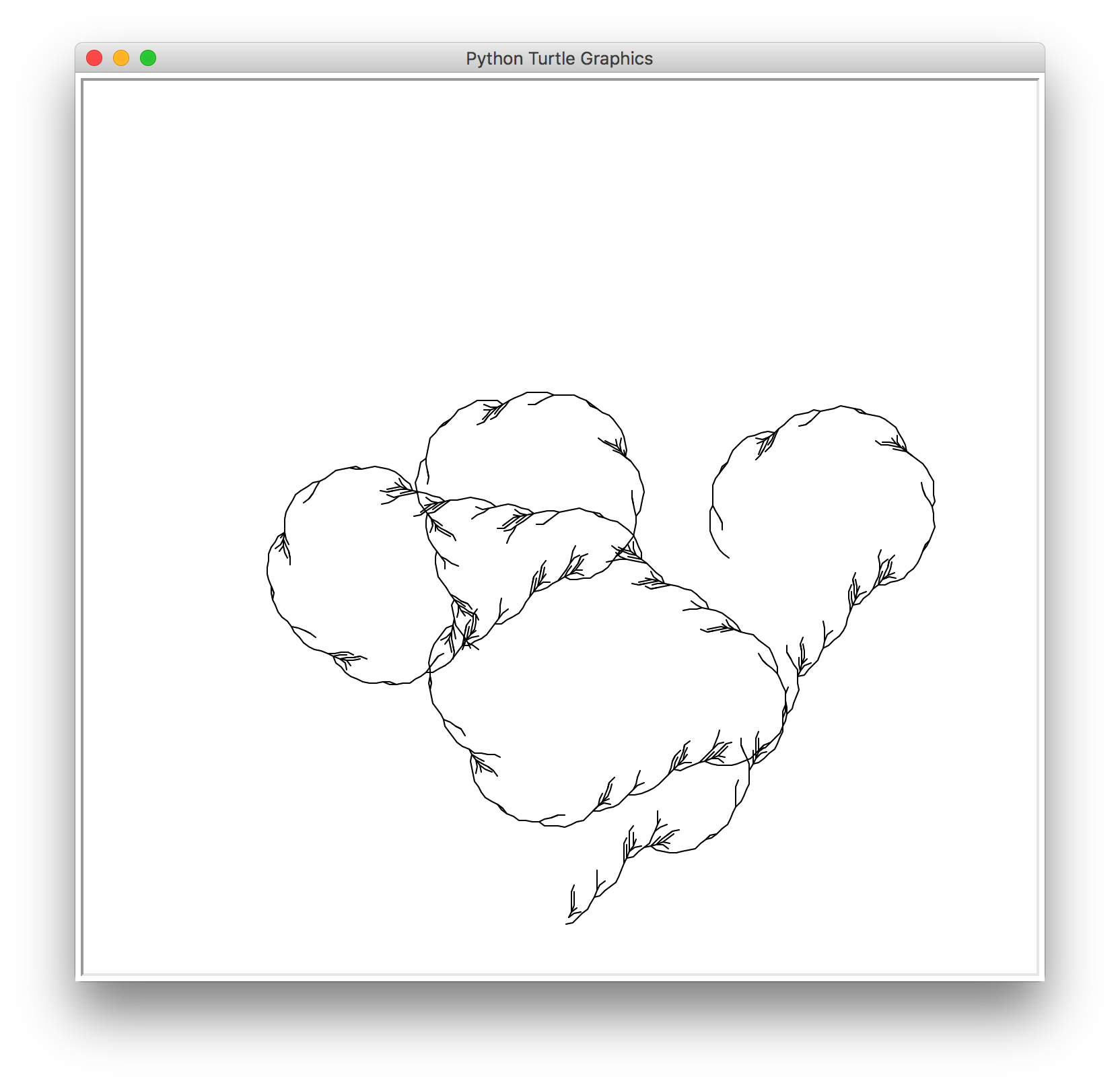

Below are a couple of outputs I was able to get by applying a Markov chain to existing L-system rules using Python.

However, more often than not the program generates something that resembles a messy ball of string, so I’m still working on it.
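The Markov chain idea can be sketched roughly as follows. This is not my actual project code: the corpus, the order of 2, and the names `build_model` and `generate` are all assumptions made for illustration. The model records which character follows each pair of characters in the example rules, then walks those statistics to produce a new rule.

```python
import random
from collections import defaultdict

# Hypothetical training corpus: a few bracketed rule bodies in the style of
# the paper's examples (not the actual data used for the outputs above).
CORPUS = [
    "F[+F]F[-F]F",
    "F[+F]F[-F][F]",
    "FF-[-F+F+F]+[+F-F-F]",
    "F-[[X]+X]+F[+FX]-X",
]

START, END = "^", "$"  # padding markers that never appear in a rule

def build_model(corpus, order=2):
    """Map each `order`-character context to the characters seen after it."""
    model = defaultdict(list)
    for rule in corpus:
        padded = START * order + rule + END
        for i in range(len(padded) - order):
            model[padded[i:i + order]].append(padded[i + order])
    return model

def generate(model, order=2, max_len=40, rng=None):
    """Sample one new rule body by walking the chain from the start context."""
    rng = rng or random.Random()
    state = START * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[state])
        if nxt == END:
            break
        out.append(nxt)
        state = (state + nxt)[-order:]
    return "".join(out)

if __name__ == "__main__":
    model = build_model(CORPUS)
    rng = random.Random(0)  # fixed seed so runs are repeatable
    for _ in range(5):
        print(generate(model, rng=rng))
```

Note that nothing in a chain like this keeps `[` and `]` balanced, so generated rules may need to be checked or repaired before they are drawn.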