Zaport-tyvan-ARSCULPTURE

Zaport & I built our ARsculpture together


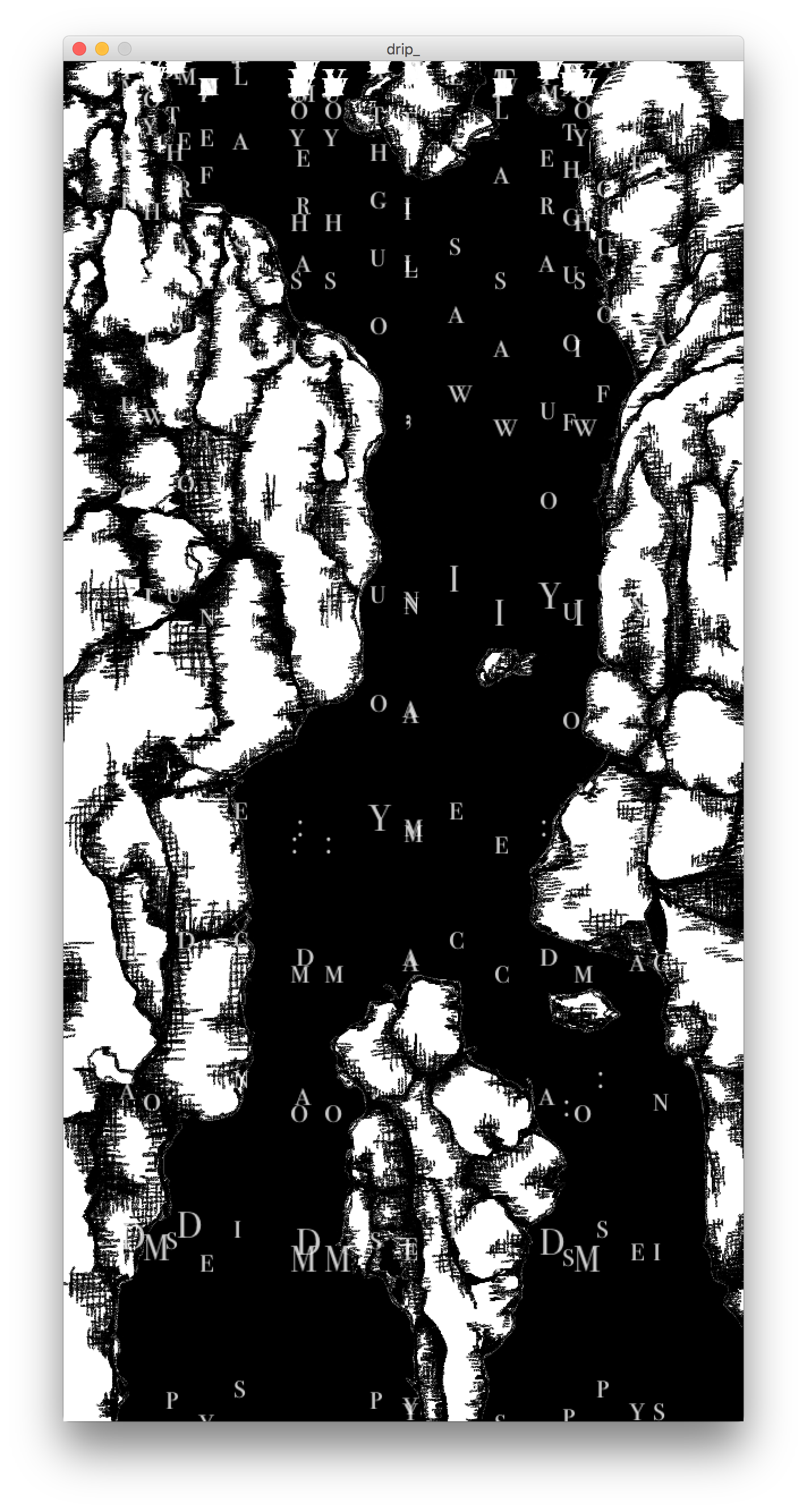

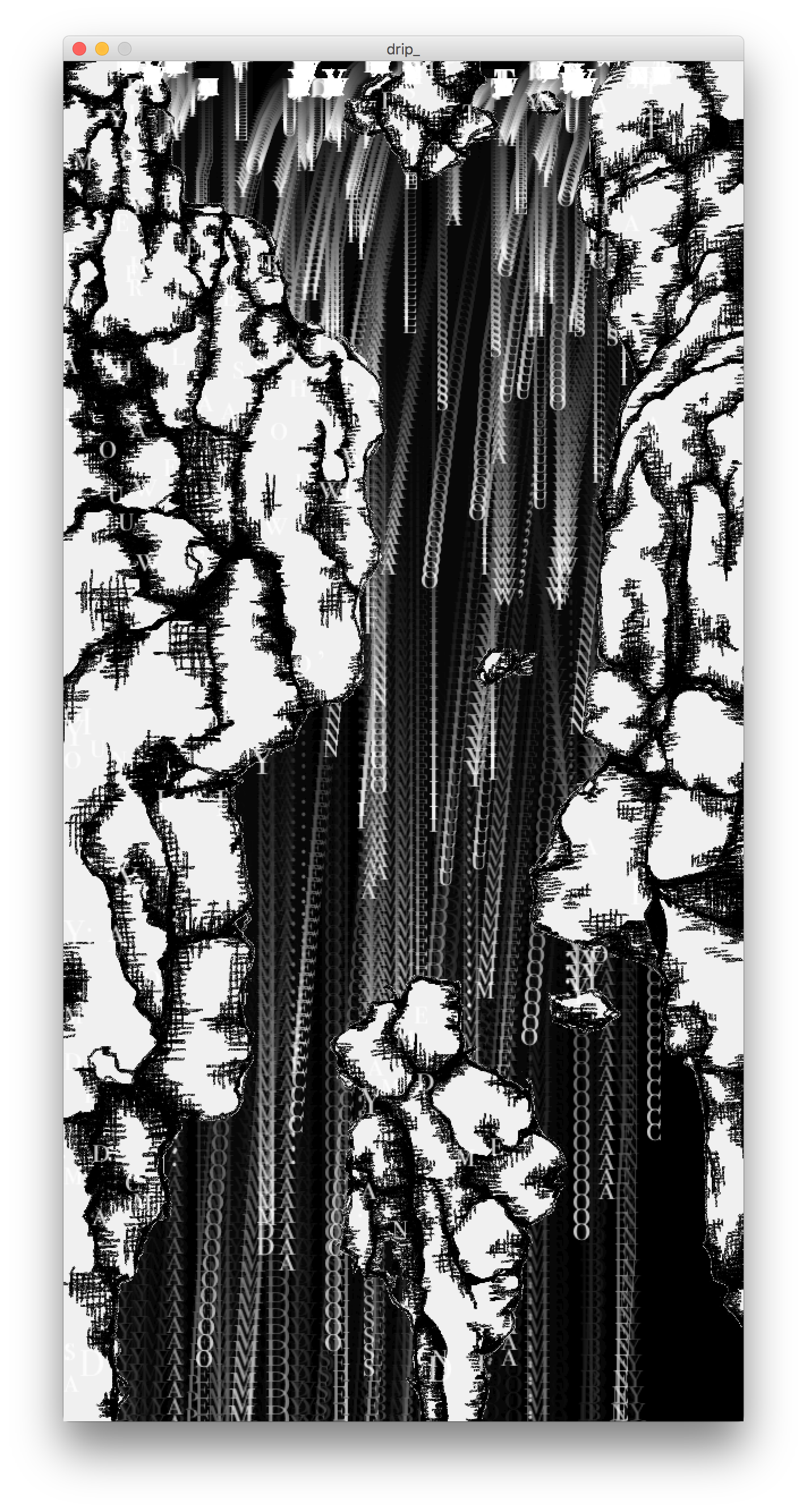

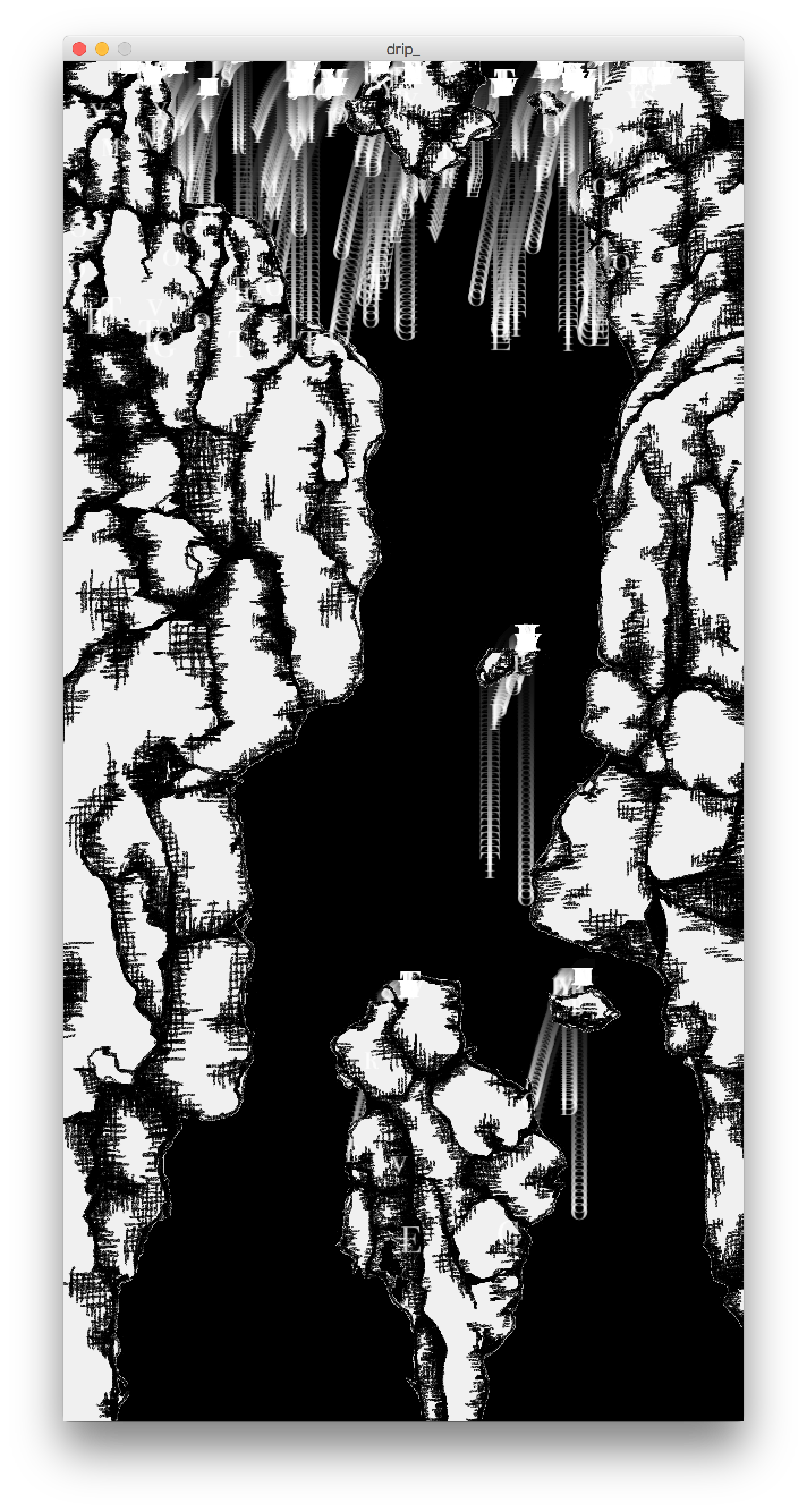

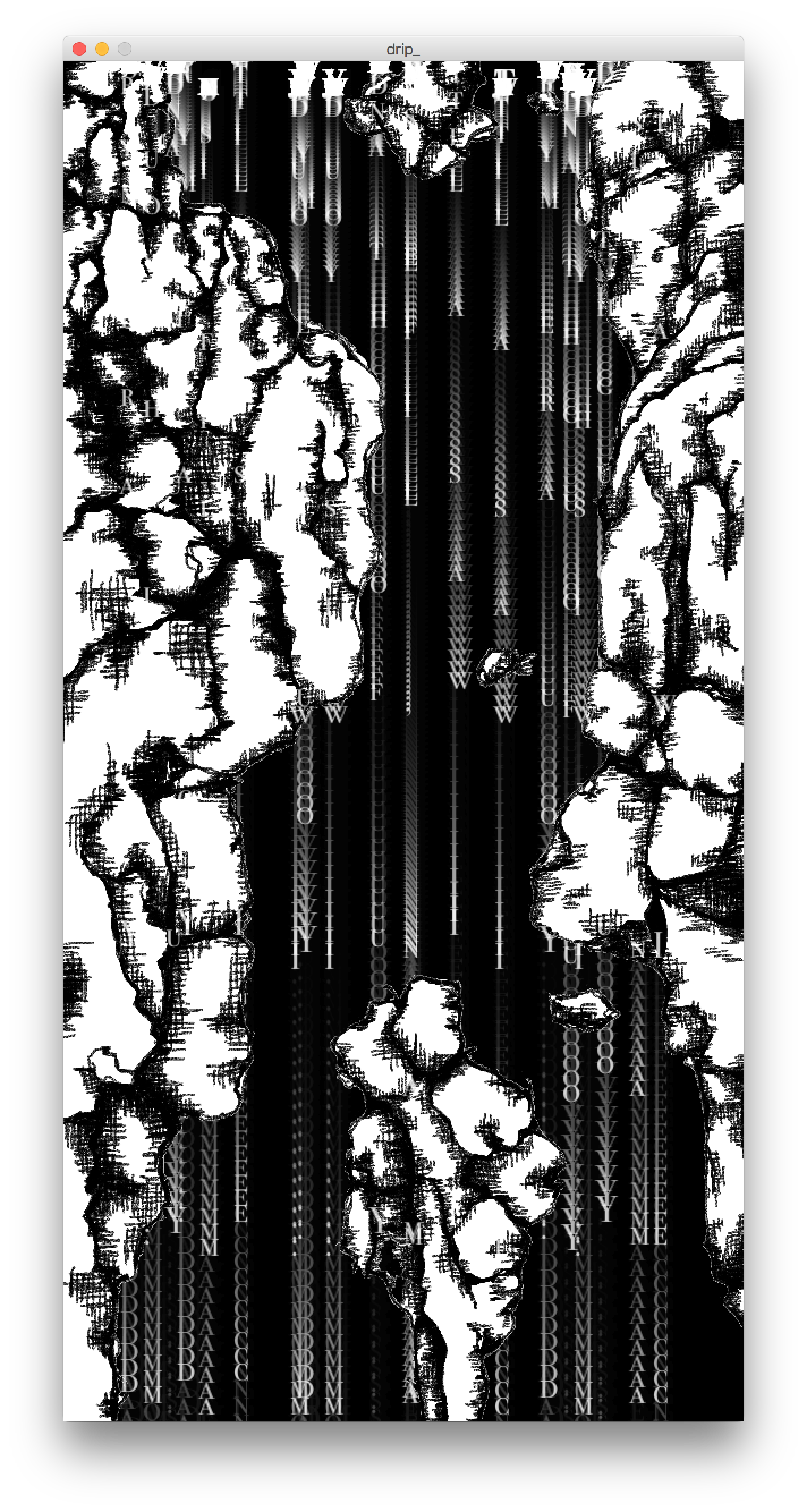

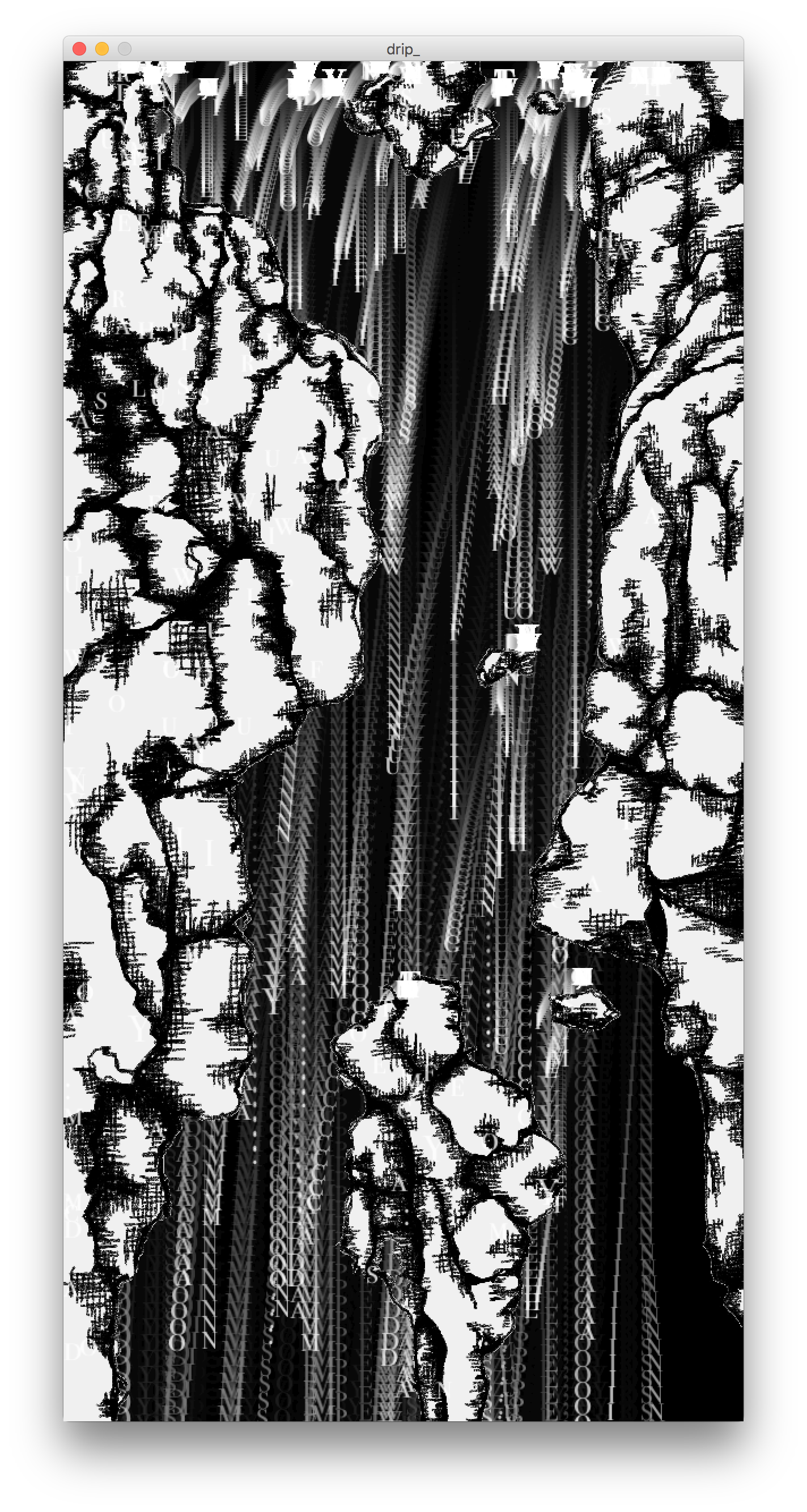

This project started because I wasn't really inspired by the asemic writing challenge, and I wanted to find a way around that problem without making a nonsensical text-based language. I thought about how people live through death, and specifically about last goodbyes. I intended to make this waterfall out of statements from individuals hypothetically telling the people they love most goodbye, forever and always. I had also planned not to use the plotter, but instead to laser cut these statements and the waterfall into layers of reflective paper that would then exist as a print and live happily alongside the other versions of this project. Although developing the project further in that direction might be interesting, I am happy with this extreme deviation from the project guidelines.

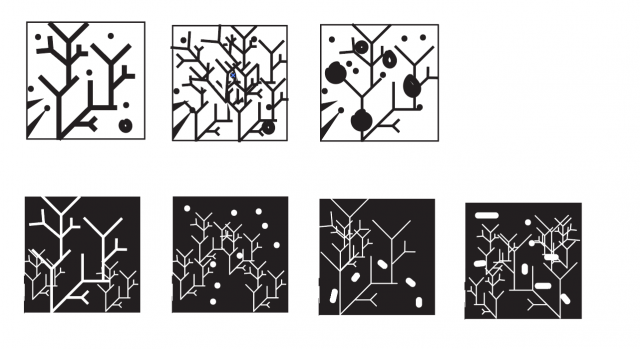

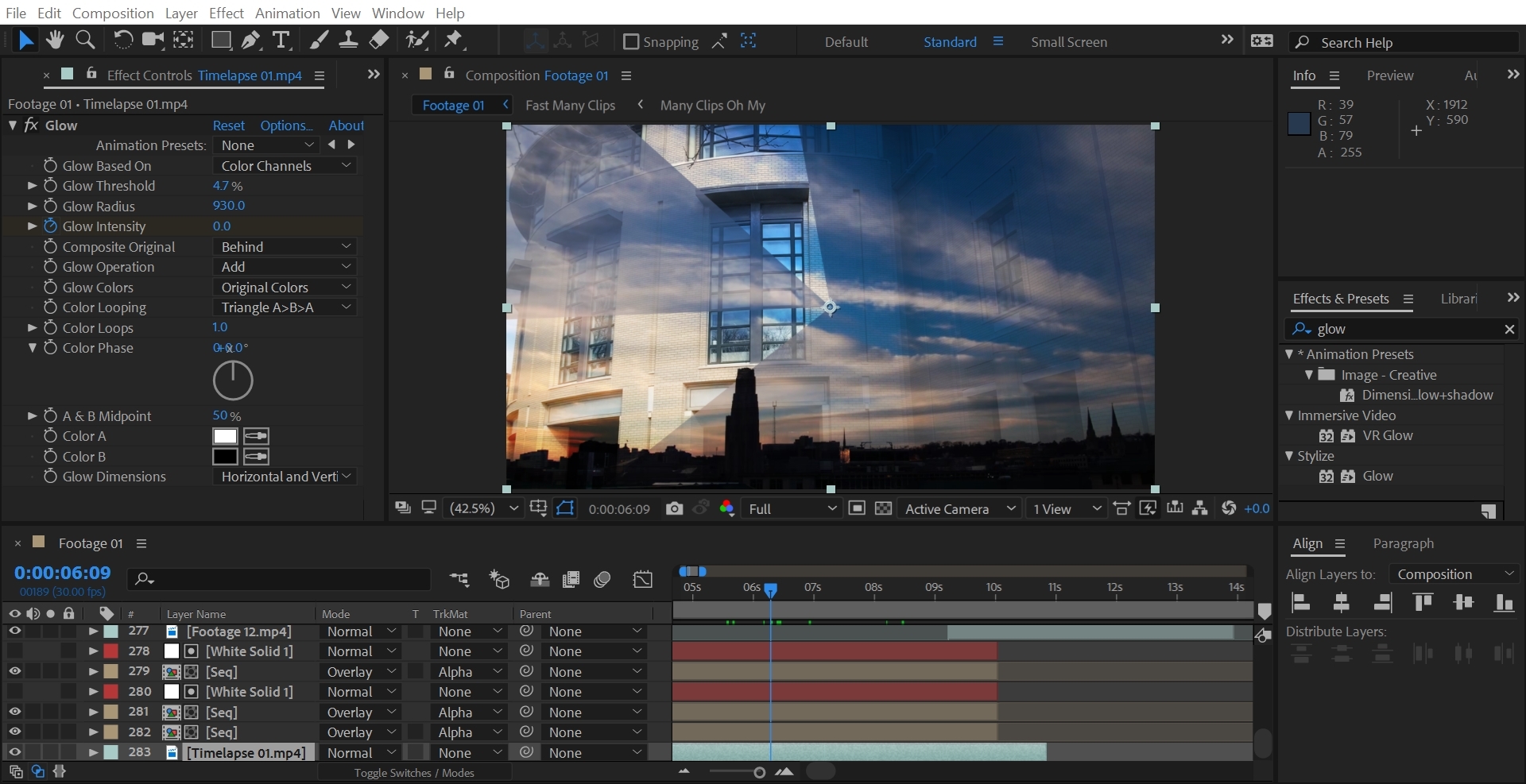

Some screenshots of the process and different versions of the river:

```processing
// River of dead goodbyes: each message is split into single letters that
// start at (x, y) and fall one after another like water drops.

PImage bg;
PFont font;

final String DAD = "To my Dad: You are my role model for how to live a life where nothing was handed to you, and everything important was taken away from you. The amount of pain you put yourself through all your life for mom and us was never lost on me. You are an inspiration to me and what made me what I am proud to be today. For the rest of my life, even if you won't be there, I'll feel you there, I'll feel your humor, your pushing for me to take control of my destiny. I will never forget you or the things you taught me, and I'll only celebrate your memory, dad. I love you forever.";
final String MOM = "To my Mom: I wish you didn't have to go. It's only going to start hurting once the moment passes, because as you are here dying, I can't even imagine reaching for a phone and not having the ability to call you or talk to you. I can't imagine not wanting to make sure you know I'm okay- that there's somebody out there who is thinking about me and I'm forgetting about them. You taught me right from wrong my whole life, and you lived it. You were never a hypocrite when you could have been, you treated everyone with love even when they spat in your face. You never manipulated- you weren't good at it- instead you just spoke from the heart. You only ever spoke from the hear. You gave me things I can't pay back in ten lifetimes. I love you infinitely.";
final String POPS = "To Pops: You fought and lied and scrounged and tricked, but you also taught me that even when things looked terrible and life was at it's worst, you still had to be able to look at yourself in the mirror every morning. And as long as you could do that, then there was something okay. I always felt that I was a continuation of Peter, and wanted to make you feel that you could die knowing that one Peter Sheehan had survived and thrived in the world. It was all the times you encouraged me to be an artist, not for Peter, but because you saw I loved it. You gave me wisdom that has kept me sane, healthy, and alive. You are the fight in me that activates when it feels like there's nothing left to fight for. You are the knowledge in me when I feel lost. You are the courage to do the right thing even when it's dangerous. I can't ever forget about you, and will always love you.";
final String AMAN = "Goodbye aman , life is over, love aman , aman.";
final String DANK = "Ever since I was a little girl I wanted to be just like you. Thank you for loving so much and doing everything you possibly could for me.";

// One list of falling letters per message, kept in drawing order
// (later streams are painted on top of earlier ones).
ArrayList<ArrayList<Drop>> streams = new ArrayList<ArrayList<Drop>>();

void addStream(int x, int y, String message) {
  streams.add(makeDropFromString(x, y, message.split("")));
}

void setup() {
  size(600, 1200);
  bg = loadImage("river of dead goodbyes.png");
  frameRate(60);
  printArray(PFont.list()); // lists installed fonts, useful when picking one
  font = loadFont("BodoniSvtyTwoSCITCTT-Book-30.vlw");
  background(0);
  textFont(font, 32);

  addStream(400, 520, POPS);
  addStream(450, 815, AMAN);
  addStream(297, 826, DANK);
  addStream(205, 15, DAD);
  addStream(230, 30, MOM);
  addStream(270, 10, POPS);
  addStream(300, 20, AMAN);
  addStream(340, 0, DANK);
  addStream(420, 15, DAD);
  addStream(450, 30, MOM);
  addStream(470, 0, POPS);
  addStream(500, 20, AMAN);
  addStream(380, 30, DANK);
  addStream(100, 0, DAD);
  addStream(200, 30, MOM);
  addStream(440, 25, POPS);
  addStream(300, 15, AMAN);
  addStream(520, 20, DANK);
  addStream(50, 10, DAD);
  addStream(70, 20, MOM);
  addStream(90, 10, POPS);
  addStream(120, 30, AMAN);
  addStream(150, 0, DANK);
  // Two "Bye." streams, left out of the drawing:
  // addStream(180, 0, "Bye.");
  // addStream(400, 30, "Bye.");
}

void draw() {
  fill(0, 0, 0, 15);
  noStroke();
  image(bg, 0, 0, 600, 1200);
  rect(0, 0, width, height); // slightly darkens the background image
  fill(255, 200);            // translucent white letters
  for (ArrayList<Drop> stream : streams) {
    for (Drop drop : stream) {
      drop.draw();
      drop.update();
    }
  }
}

// Turns a message into drops at one start point; each letter waits about
// 10 frames longer than the previous one, plus a little random jitter.
ArrayList<Drop> makeDropFromString(int x, int y, String[] deadMessage) {
  ArrayList<Drop> dripping = new ArrayList<Drop>();
  for (int i = 0; i < deadMessage.length; i++) {
    dripping.add(new Drop(x, y, 10 * i + int(random(-10, 10)), deadMessage[i]));
  }
  return dripping;
}

class Drop {
  float velocity;
  float xvelocity = 0;
  float acceleration = .09;
  int x; // x and y are ints, so fractional motion is truncated each frame
  int y;
  String letter;
  int d; // frames left to wait before this letter starts falling

  Drop(int sx, int sy, int delay, String l) {
    x = sx;
    y = sy;
    letter = l;
    velocity = 0;
    d = delay;
  }

  void update() {
    if (d > 0) {
      d -= 1;
      return;
    }
    velocity += acceleration;          // gravity
    y += velocity;
    xvelocity += random(-0.07, 0.07);  // slow sideways drift
    x += xvelocity;
  }

  void draw() {
    //textSize(32);
    //fill(170, 0, 0);
    text(letter, x, y);
  }
}
```

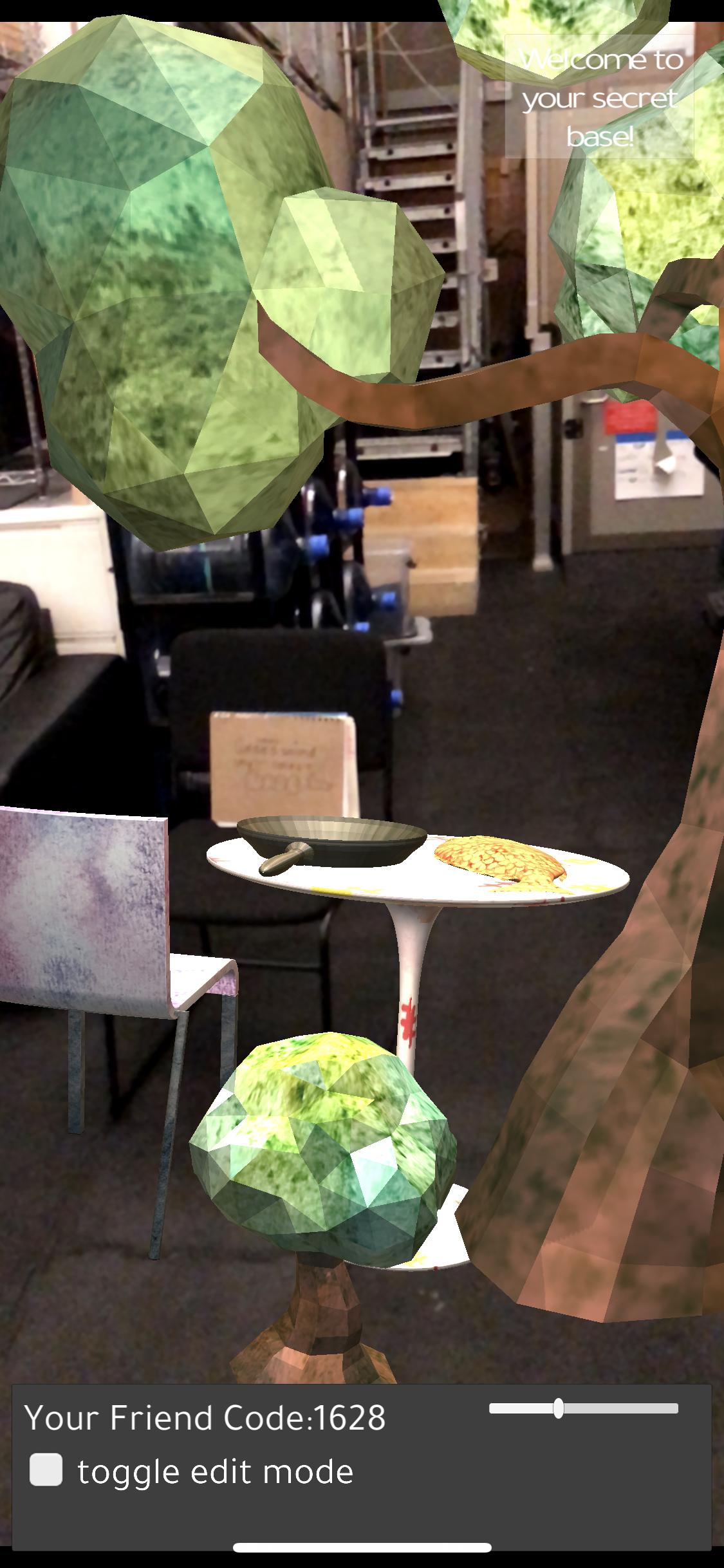

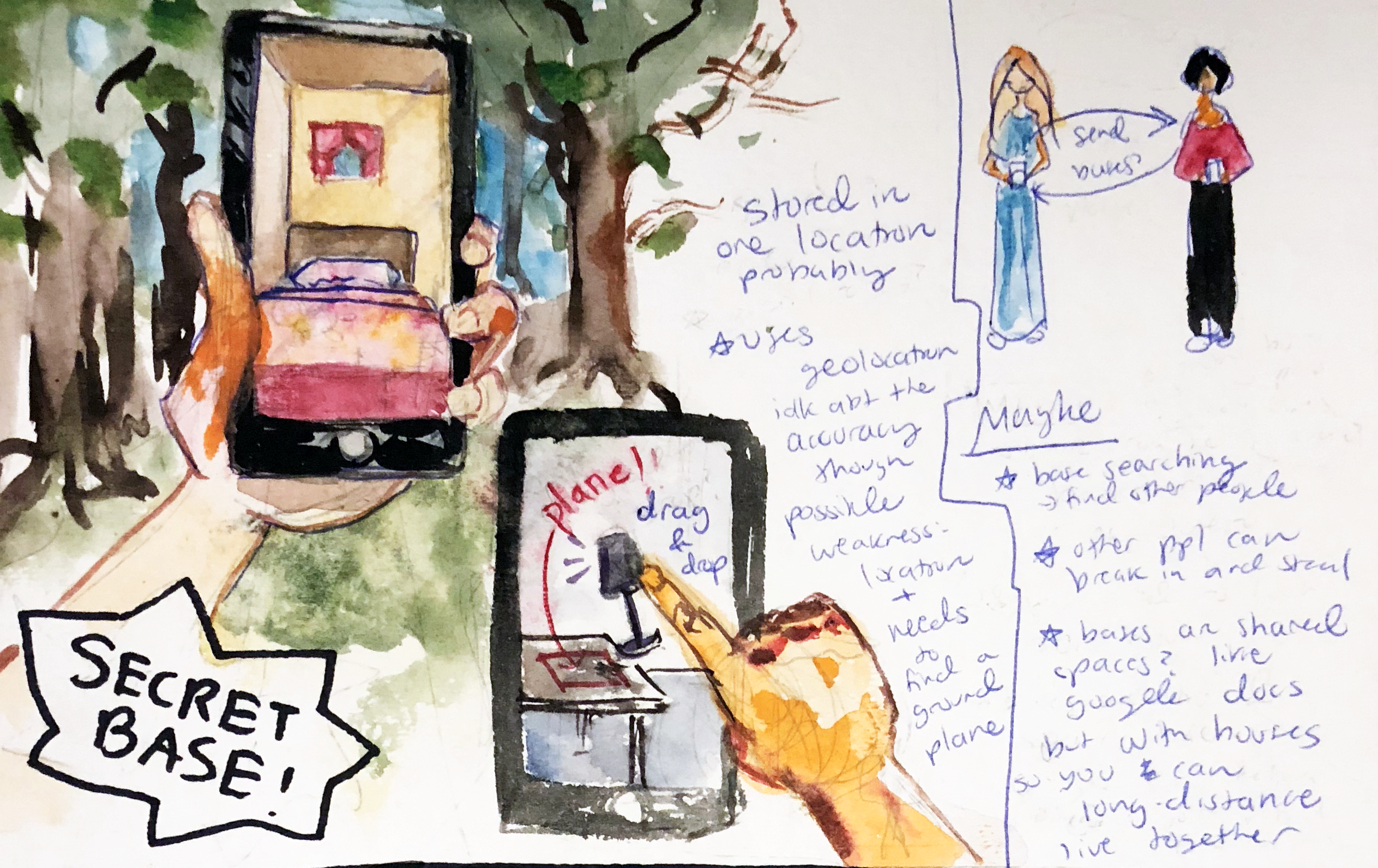

Description: “It’s a treehouse, but without the tree.”

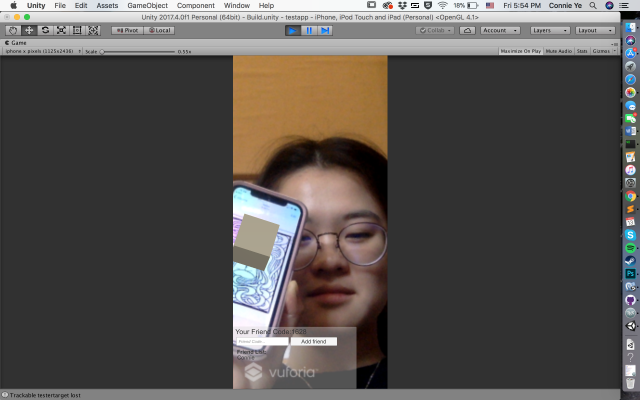

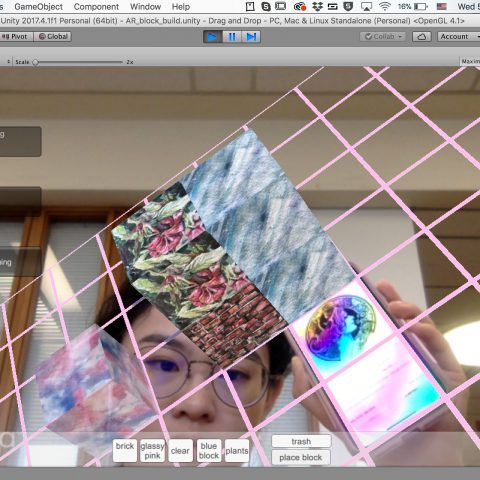

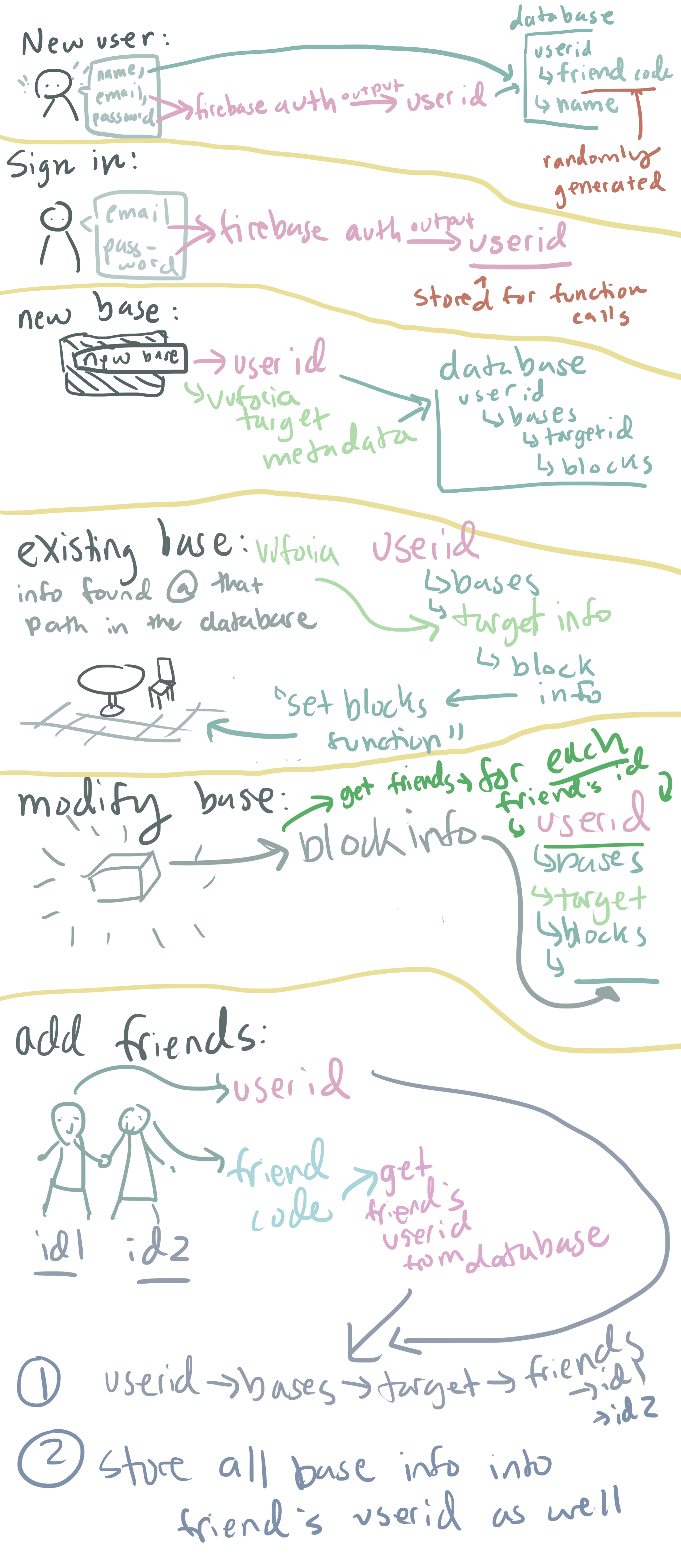

Using distinctive images (posters, stickers, wall patterns) from real life, users can build “secret bases” that will pop up any time the app detects those images. These bases will be stored and can be sent to the users’ friends through a friend code.

These friends can also see and modify the same structure when they are near the same image.

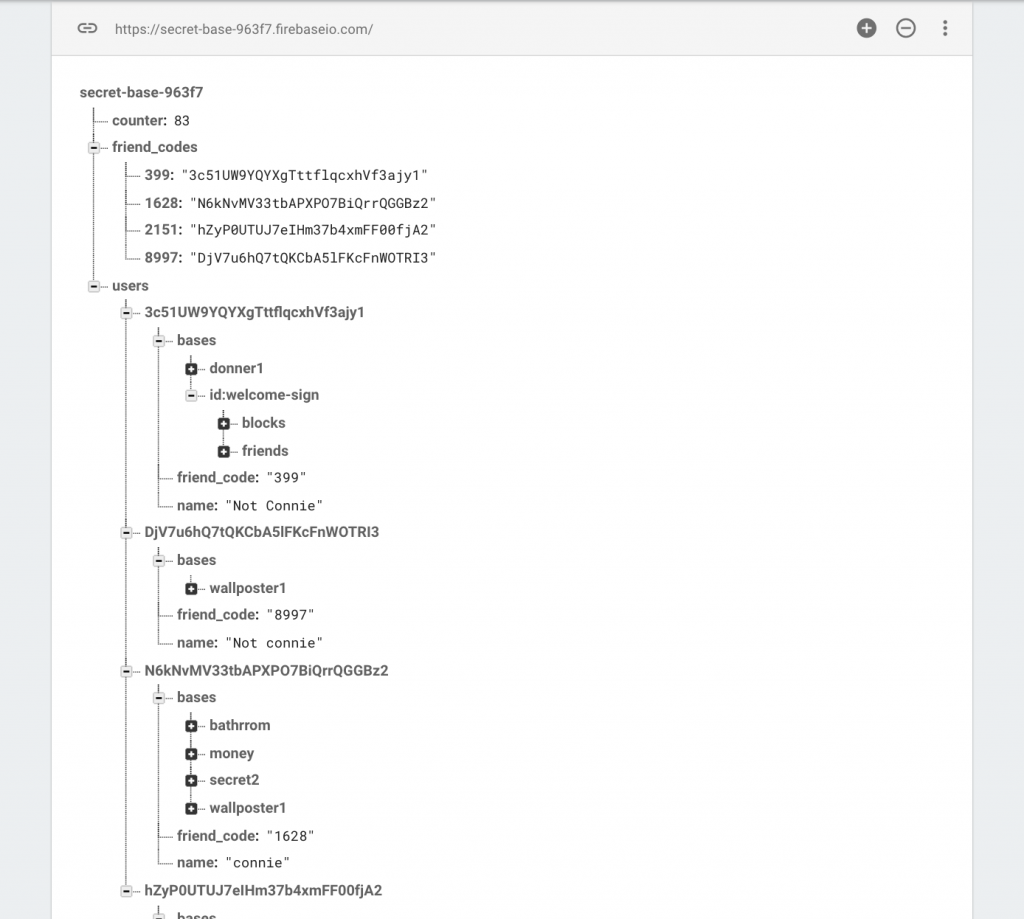

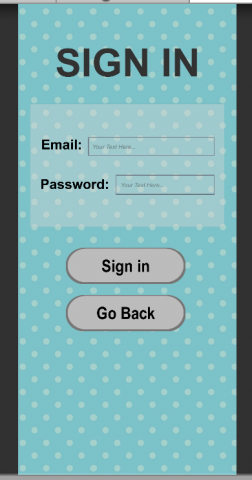

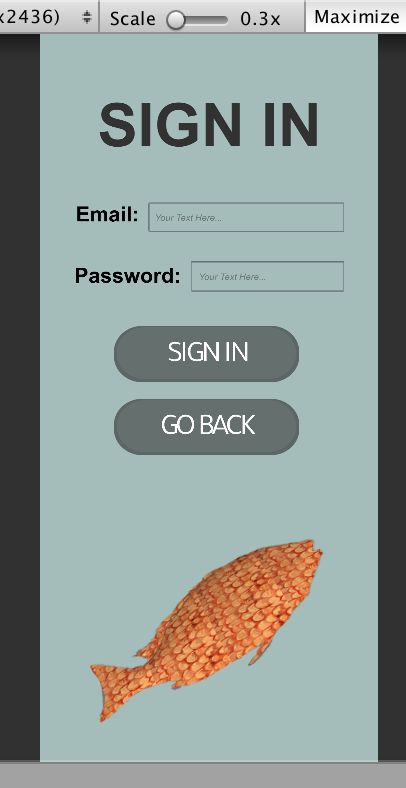

All the furniture was textured with hand-painted watercolor textures, and the “sharing” aspect uses Firebase Authentication for user sign-in and Firebase’s database to remember base information. The AR was made using Vuforia’s cloud database and extended tracking.

For anyone interested in “AR sharing”, I’ve uploaded the relevant code to GitHub here 🙂 https://github.com/khanniie/Secret_Base_Firebase

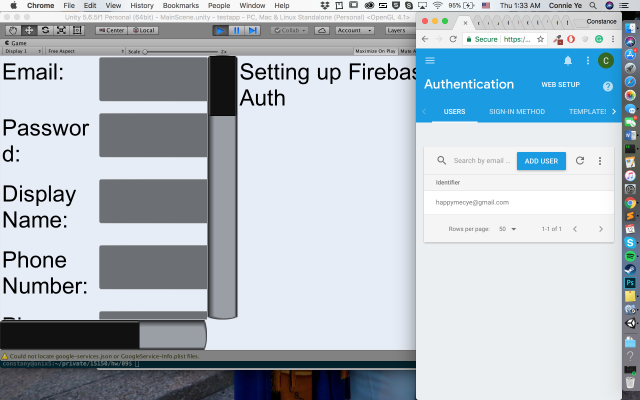

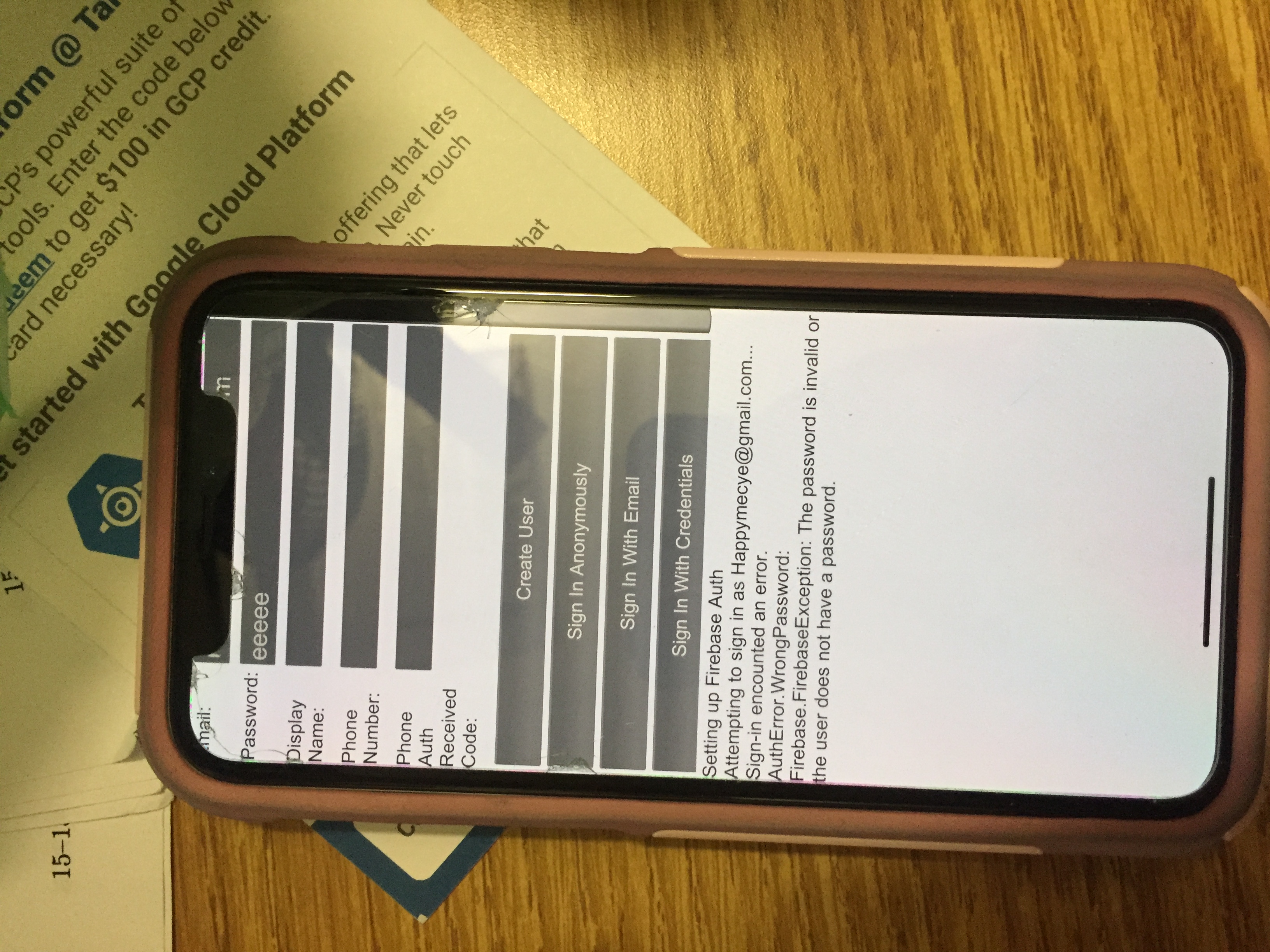

Above: Testing with Firebase’s quickstart apps! I spent a really long time working out annoyingly small errors, and it ended up taking me the whole week to figure it out. In case anyone else is interested, I’ve documented the errors and fixes here: /conye/04/06/conye-artech/

Above: Database is working!

Above: User auth is working too!! 🙂

Basic demo: I had a small cube whose size I updated with a slider. The slider’s value was sent to Firebase, which then sent it back and told the app to change the size of the cube.
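The demo's round trip (slider, then database, then listener, then cube) can be sketched without any Firebase dependency. `InMemoryDatabase`, `CubeClient`, and the `"cube/size"` path below are hypothetical stand-ins, not Firebase's API. The point of the pattern is that the slider never resizes the cube directly; the cube changes only when the database echoes the value back, so every connected client resizes from the same source of truth.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical stand-in for a realtime database (NOT the Firebase API):
// stores values by path and notifies every listener on that path.
class InMemoryDatabase {
    private final Map<String, Double> values = new HashMap<>();
    private final Map<String, List<Consumer<Double>>> listeners = new HashMap<>();

    void addListener(String path, Consumer<Double> listener) {
        listeners.computeIfAbsent(path, p -> new ArrayList<>()).add(listener);
    }

    Double getValue(String path) {
        return values.get(path);
    }

    void setValue(String path, double value) {
        values.put(path, value);
        // Echo the new value to everyone watching this path.
        for (Consumer<Double> l : listeners.getOrDefault(path, Collections.emptyList())) {
            l.accept(value);
        }
    }
}

// One app instance: the slider only writes to the database; the cube
// changes only when the database reports the new value back.
class CubeClient {
    double cubeSize = 1.0;
    private final InMemoryDatabase db;

    CubeClient(InMemoryDatabase db) {
        this.db = db;
        db.addListener("cube/size", size -> cubeSize = size);
    }

    void onSliderChanged(double sliderValue) {
        db.setValue("cube/size", sliderValue);
    }
}
```

Because both clients listen to the same path, moving the slider on one phone resizes the cube on the other as well.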

Above are thumbnails of my texture-making process! I would paint the textures in watercolor, photograph them, edit them in Photoshop, and make a normal map if I thought the texture should be bumpy.

Above: Basic grid and block-placing implementation. The block uses my brick watercolor texture, which was super exciting for me because after I used Photoshop to create a normal map for the texture, it looked sooooo good.

Above: Block building, but built into AR!
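Grid-based block placing usually comes down to snapping a hit point (for example, where a raycast meets the base's floor) to the nearest cell. A minimal sketch, assuming a square grid with a hypothetical `cellSize` and its origin at the image target; the `"x_z"` cell key is illustrative, not the project's actual format.

```java
// Snaps a hit point on the base's floor plane to a square grid cell and
// returns where a block (or piece of furniture) should be placed.
class Grid {
    final double cellSize;

    Grid(double cellSize) {
        this.cellSize = cellSize;
    }

    // Math.floor rather than an int cast, so negative coordinates snap correctly.
    int cellIndex(double coord) {
        return (int) Math.floor(coord / cellSize);
    }

    // Center of a cell along one axis, in the same units as the hit point.
    double cellCenter(int index) {
        return (index + 0.5) * cellSize;
    }

    // Hypothetical key for storing a cell's contents, e.g. "2_-1".
    String cellKey(double worldX, double worldZ) {
        return cellIndex(worldX) + "_" + cellIndex(worldZ);
    }
}
```

Using `Math.floor` matters once the player builds on both sides of the image target: a plain cast would put -0.2 and 0.2 in the same cell.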

This week, I spent a lot of time trying to get the Firebase details right. I was able to send and retrieve data and authorize users, but I didn’t have a structured way of implementing my idea yet. What I ended up with looked like this:

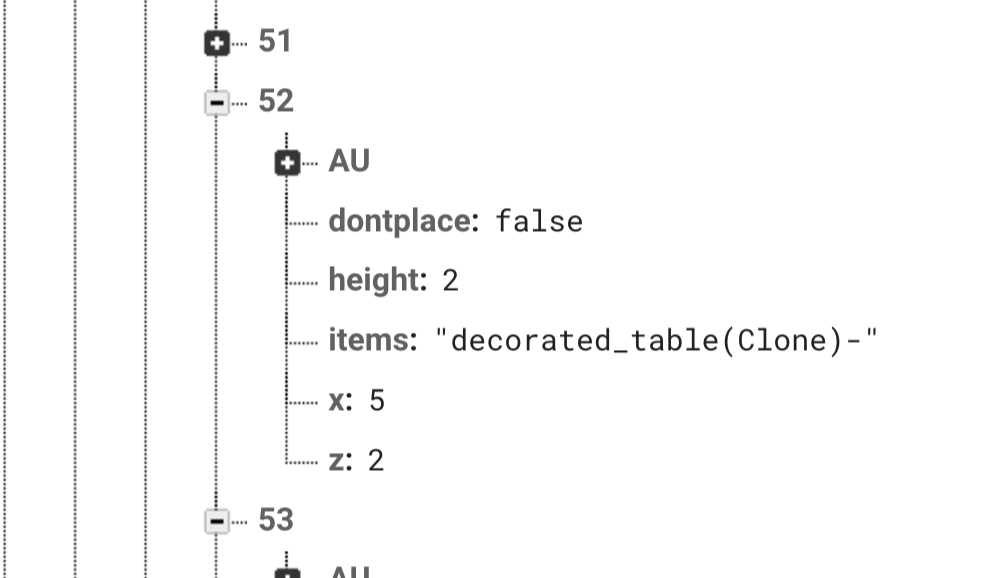

In Firebase:

Above: how one unit of the grid stores its info. If multiple items are stacked on that square, the items key stores a single string with the furniture names concatenated, separated by a “-” character.
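The "-"-joined `items` string can be packed and unpacked with a join and a split. A minimal sketch with hypothetical class and method names; one design consequence of this encoding is that a furniture name containing "-" itself would be split apart, so names need to avoid that character.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Packs the furniture stacked on one grid square into a single string,
// e.g. ["table", "lamp"] <-> "table-lamp".
class StackedItems {
    static String encode(List<String> items) {
        return String.join("-", items);
    }

    static List<String> decode(String stored) {
        // An empty square is stored as "" and decodes to an empty list.
        if (stored == null || stored.isEmpty()) {
            return new ArrayList<>();
        }
        return new ArrayList<>(Arrays.asList(stored.split("-")));
    }

    // Stacking a new piece appends it to the end of the stored string.
    static String push(String stored, String item) {
        List<String> items = decode(stored);
        items.add(item);
        return encode(items);
    }
}
```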

Also, in the last few hours on Thursday, I updated my hideous UI!

Before and after:

Example image targets that I used for my secret bases.

My biggest regret is that I wasn’t able to implement dynamically uploading image targets to Vuforia’s cloud database from within the app; after spending 8 hours debugging, I had to give up and move on. I think I was almost there, but every time I got “error: bad request”, so I suspect I was encoding my access keys wrong or something. At least I learned a lot about how HTTP requests work.
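For anyone who hits the same wall: as I understand the Vuforia Web Services (VWS) documentation, each request is signed with HMAC-SHA1 over a newline-separated string of the HTTP verb, the hex MD5 of the body, the content type, the date, and the request path, and the result is sent as `Authorization: VWS <access key>:<signature>`. The sketch below builds that header; treat the exact format as an assumption to verify against the current VWS docs, and the keys as placeholders.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Builds the Authorization header for a Vuforia Web Services request.
// Assumed format (check against the current VWS docs):
//   StringToSign = verb \n contentMD5 \n contentType \n date \n requestPath
//   Authorization: VWS accessKey:Base64(HMAC-SHA1(secretKey, StringToSign))
class VwsAuth {
    // Lowercase hex MD5 of the request body (an empty body for GET).
    static String md5Hex(byte[] body) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(body);
            StringBuilder sb = new StringBuilder();
            for (byte b : digest) sb.append(String.format("%02x", b));
            return sb.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    static String stringToSign(String verb, byte[] body, String contentType,
                               String date, String requestPath) {
        return verb + "\n" + md5Hex(body) + "\n" + contentType + "\n"
                + date + "\n" + requestPath;
    }

    static String authorizationHeader(String accessKey, String secretKey, String toSign) {
        try {
            Mac mac = Mac.getInstance("HmacSHA1");
            mac.init(new SecretKeySpec(secretKey.getBytes(StandardCharsets.UTF_8), "HmacSHA1"));
            byte[] sig = mac.doFinal(toSign.getBytes(StandardCharsets.UTF_8));
            // Base64 of the raw HMAC bytes (not of a hex string).
            return "VWS " + accessKey + ":" + Base64.getEncoder().encodeToString(sig);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Under this scheme, the `Date` header sent with the request has to be exactly the string that was signed, and the content type in the signed string has to match the one sent (as I understand it, empty for a GET). Base64-encoding a hex string instead of the raw HMAC bytes is another easy way to get every request rejected.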

Overall, this was a crazy hard and time-consuming project for me, but I learned a lot about Unity and Firebase in the process. I’m really happy with how it turned out and pleasantly surprised by how functional it is.

BIG THANK YOUS

to

Nitesh for meeting up with me to work, and for staying late on Thursday to help me document!!! Also for being a good friend overall.

Rain for being the best 15-251 partner; without Rain, I would have been doing proofs instead of this project. Also thank you to Rain for being a good friend in general!!!

Sophia C. for being a Very Good Buddy and for meeting up with me to do work :))

Golan for being a really great teacher!!! And for his advice on the project direction: he told me to add furniture instead of just blocks, and my project is decidedly much better looking because of it.

Claire for being so encouraging all the time, and for her advice on the watercolor textures!

Also: Yixin, Peter, Sophia Q. and everyone else for brightening up my days in Golan’s studio!! I’m always super inspired by you guys.

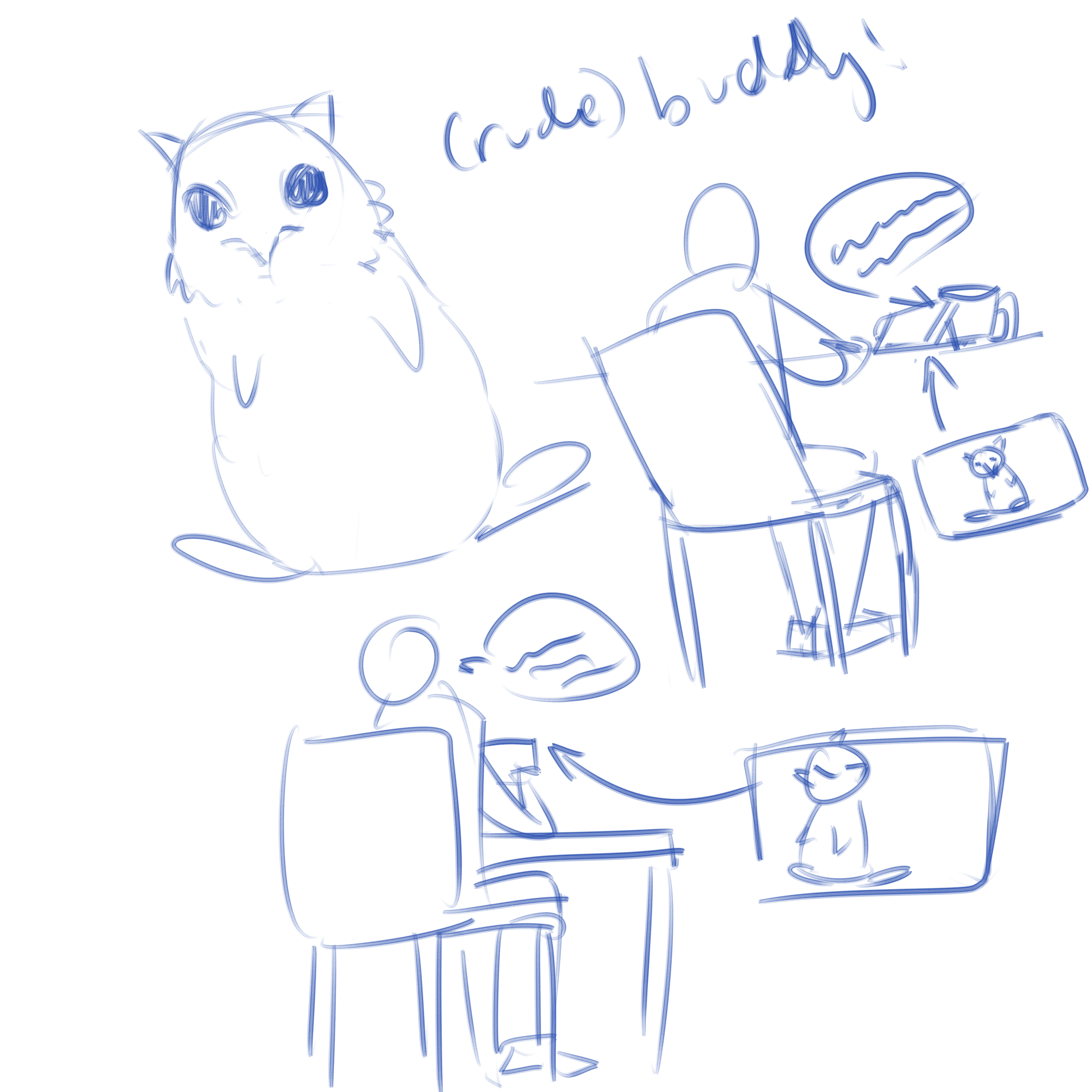

A mildly obnoxious study buddy.

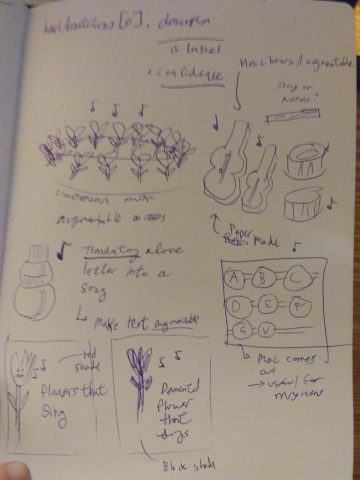

Initial Sketch

Unity setup

Different states of the buddy: idle, happy, angry. Kinda hard to see in the video, but present in the app.

Idle

Happy

Angry

The original idea was to make a rude buddy that only wanted to talk to you when you didn’t want to talk to it. After hearing feedback in the first week of the project, I decided to keep the obnoxiousness but turn my buddy into a kind of productivity aid. To use it, you just load up the Android app while you’re working, and it’ll continuously spout vaguely useful advice at you. If you pick up the phone, potentially to procrastinate, it’ll ask you whether you’ve finished your work and then respond based on your reply.
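The buddy's behavior reads like a small state machine. A sketch under assumptions: the idle, happy, and angry states come from the post, but the extra `ASKING` state, the event methods, and the mapping "finished means happy, not finished means angry" are hypothetical, since the post doesn't say how each reply is judged.

```java
// Hypothetical model of the study buddy's moods.
enum Mood { IDLE, ASKING, HAPPY, ANGRY }

class StudyBuddy {
    private Mood mood = Mood.IDLE;

    Mood mood() {
        return mood;
    }

    // Picking up the phone interrupts the idle advice with a question.
    void onPhonePickedUp() {
        if (mood == Mood.IDLE) {
            mood = Mood.ASKING;
        }
    }

    // The reply decides the reaction (assumed mapping: finished -> happy).
    void onReply(boolean finishedWork) {
        if (mood == Mood.ASKING) {
            mood = finishedWork ? Mood.HAPPY : Mood.ANGRY;
        }
    }

    // After the reaction animation plays, go back to spouting advice.
    void onReactionFinished() {
        if (mood == Mood.HAPPY || mood == Mood.ANGRY) {
            mood = Mood.IDLE;
        }
    }
}
```

Guarding each transition on the current state keeps stray events (a reply with no question pending, say) from knocking the animation controller into the wrong clip.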

I’m happy with the final look of the animations, although the non-idle animations could run longer in the app. I’m also just happy that I got most of the features working, since Unity threw up a number of hurdles that I never expected to be hurdles. For example, I first rigged and animated my modeled creature with various NURBS controllers, but soon discovered that either Unity or Maya’s FBX exporter hated the controllers, so I had to remove all my controllers and re-animate everything while committing the sin of animating by directly moving joints. Some weaknesses of the project: the variety of interaction is pretty limited, the creature doesn’t interact much with the environment, and a few small bugs in the speech remain.

This was a solo project, but I’d like to thank Miyehn, who discussed the original idea with me, Zbeok for some Unity troubleshooting, all my classmates who gave helpful comments when we last critiqued these projects, and my housemate, who washed my dishes for me while I pulled an all-nighter.

Walk through these portals to reveal layers of other worlds that exist alongside ours.

The Process:

This project is the result of a long struggle learning to use stencil shaders with AR in Unity. I started by creating portals that could hide specific objects, then learned how to edit shaders from Unity assets, and even Unity’s default shaders, so that objects stay hidden unless you look at them through these portals.

Once I figured out how to pass through the portals so that the objects would become visible once you walked through, I was able to start working on their organization and on what the portals would contain.
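The stencil logic behind the portals can be illustrated with a toy software stencil buffer. In Unity this lives in a shader's `Stencil` block; here, purely as an illustration with hypothetical names, the portal's opening stamps a reference value into the stencil, hidden objects draw only where the stencil equals their value, and one common way to handle walking through is to make the test always pass once the viewer is inside.

```java
import java.util.Arrays;

// A toy, one-row software stencil buffer (0 = nothing written yet).
class StencilDemo {
    final int[] stencil;
    final char[] color;

    StencilDemo(int width) {
        stencil = new int[width];
        color = new char[width];
        Arrays.fill(color, '.'); // '.' stands for the real-world camera view
    }

    // The portal's opening draws no color; it only stamps ref into the stencil.
    void drawPortalMask(int from, int to, int ref) {
        for (int x = from; x < to; x++) stencil[x] = ref;
    }

    // A hidden object passes the stencil test only where stencil == ref,
    // unless the viewer has stepped inside, where the test always passes.
    void drawHiddenObject(int from, int to, int ref, char glyph, boolean viewerInside) {
        for (int x = from; x < to; x++) {
            if (viewerInside || stencil[x] == ref) color[x] = glyph;
        }
    }

    String row() {
        return new String(color);
    }
}
```

Giving each portal its own reference value is what lets several portals coexist: an object tagged with value 2 stays invisible through a portal that writes 1.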

Originally, I was layering the portals to create a maze or dungeon game that forced the user to search for the next portal level. However, this proved incredibly difficult with more than two portals, and it also felt too restrictive for the user. I wanted to encourage a sense of exploration and discovery, so I shifted to a design where the user can wander through several different kinds of portals, see what objects each one contains, and see how the different objects exist together.

I also implemented light estimation so that virtual objects adjust their brightness to match the real world around them. This is done with a shader that reads the phone’s camera image, takes its average brightness, and uses that value as an input to adjust the brightness of the in-game objects.
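The averaging step can be sketched on the CPU. The project does this in a shader; this sketch instead assumes a camera frame given as packed `0xRRGGBB` pixels, uses Rec. 601 luma weights (an assumption; other weightings work too), and maps the result onto a hypothetical brightness multiplier range for virtual objects.

```java
// Estimates scene brightness from a camera frame and turns it into a
// multiplier for virtual objects' color or light intensity.
class LightEstimate {
    // Average luma in 0..1 over packed 0xRRGGBB pixels.
    static double averageLuma(int[] pixels) {
        if (pixels.length == 0) {
            return 0.0;
        }
        double sum = 0;
        for (int p : pixels) {
            int r = (p >> 16) & 0xFF;
            int g = (p >> 8) & 0xFF;
            int b = p & 0xFF;
            // Rec. 601 weights: green dominates perceived brightness.
            sum += (0.299 * r + 0.587 * g + 0.114 * b) / 255.0;
        }
        return sum / pixels.length;
    }

    // Linearly maps luma onto [minGain, maxGain] so objects never go fully
    // black in a dark room (the range is a tuning assumption).
    static double brightnessGain(double luma, double minGain, double maxGain) {
        double t = Math.max(0.0, Math.min(1.0, luma));
        return minGain + (maxGain - minGain) * t;
    }
}
```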

Resources:

Special Thanks:

to aahdee, farcar, creatyde, sheep, and Aman for teaching me about shaders and the logic behind using multiple stencil shaders at once

to conye for helping with documentation and moral support

And of course to Golan, Claire, and the Studio without which this would not have been possible

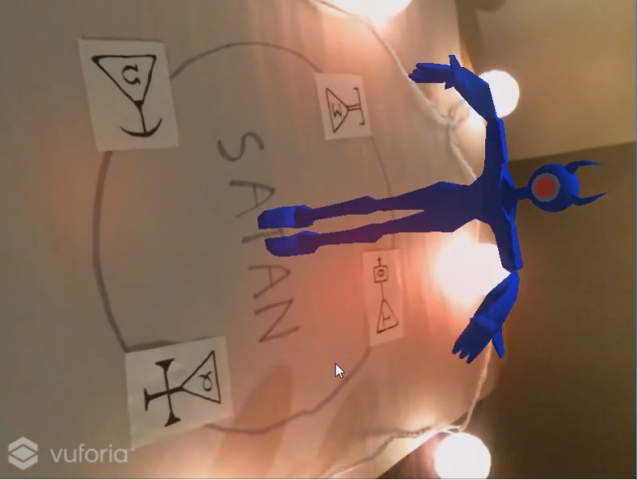

Description: Ever wanted to summon Satan? Well, this is kind of the closest you can get on the market.

Video:

Screencap:

GIF:

Process shots:

Concept art regarding the initial idea. I made a few digital sketches as well.

An in progress model shot, before animation but after rigging.

Vuforia screengrab of my debugging.

The initial setup I used to test my homography graphics.

About this Project:

Because I haven’t seen a technology that allows you to summon demons (yet), I’ve created that demon-raising tech to fill in that niche. It combines AR image target technology, perspective correction, Google text recognition, and some 3D animation magic to bring Satan from hell. All you need to do is to draw a magic circle on the ground, put the proper symbols down, and call upon him!
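The post lists perspective correction as one ingredient without showing how it was done. A common tool for it is a homography: a 3×3 matrix that maps the four corners of a photographed, skewed region (such as the drawn circle's bounding square) onto a flat square. As a generic sketch, not this project's code, the four-point version can be solved as an 8×8 linear system with the last matrix entry fixed to 1, then applied to any point.

```java
// Four-point homography: finds H with dst ~ H * src (h33 fixed to 1),
// then maps points through it.
class Homography {
    final double[] h = new double[9];

    Homography(double[][] src, double[][] dst) {
        double[][] a = new double[8][9]; // augmented matrix [A | b]
        for (int i = 0; i < 4; i++) {
            double x = src[i][0], y = src[i][1];
            double u = dst[i][0], v = dst[i][1];
            a[2 * i] = new double[]{x, y, 1, 0, 0, 0, -x * u, -y * u, u};
            a[2 * i + 1] = new double[]{0, 0, 0, x, y, 1, -x * v, -y * v, v};
        }
        double[] sol = solve(a);
        System.arraycopy(sol, 0, h, 0, 8);
        h[8] = 1.0;
    }

    // Gaussian elimination with partial pivoting on an n x (n+1) matrix.
    private static double[] solve(double[][] a) {
        int n = a.length;
        for (int col = 0; col < n; col++) {
            int pivot = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(a[r][col]) > Math.abs(a[pivot][col])) pivot = r;
            }
            double[] tmp = a[col];
            a[col] = a[pivot];
            a[pivot] = tmp;
            if (Math.abs(a[col][col]) < 1e-12) {
                throw new IllegalArgumentException("degenerate points (three collinear?)");
            }
            for (int r = col + 1; r < n; r++) {
                double f = a[r][col] / a[col][col];
                for (int c = col; c <= n; c++) a[r][c] -= f * a[col][c];
            }
        }
        double[] x = new double[n];
        for (int r = n - 1; r >= 0; r--) {
            double s = a[r][n];
            for (int c = r + 1; c < n; c++) s -= a[r][c] * x[c];
            x[r] = s / a[r][r];
        }
        return x;
    }

    // Maps (x, y) through H, dividing by the projective coordinate w.
    double[] apply(double x, double y) {
        double w = h[6] * x + h[7] * y + h[8];
        return new double[]{
            (h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w
        };
    }
}
```

For rectification, `src` would be the four corners found in the camera image and `dst` the corners of the flat target square.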

This was a uniquely challenging project in the sense that the largest problem was finding the proper method of doing what was needed. I could have executed this project in many different ways, so choosing what was best for the project was hard. In addition, I had to quickly sift through new technology that I didn’t quite understand. For instance, importing 3D models from external programs and implementing completely new libraries were pretty new to me in the context of Unity. I think it all went well, given the very loose nature of the project’s conception, but I’d like to be much more ambitious next time.

Acknowledgements:

Many thanks to the following people, who helped immensely in the creation and success of this project.

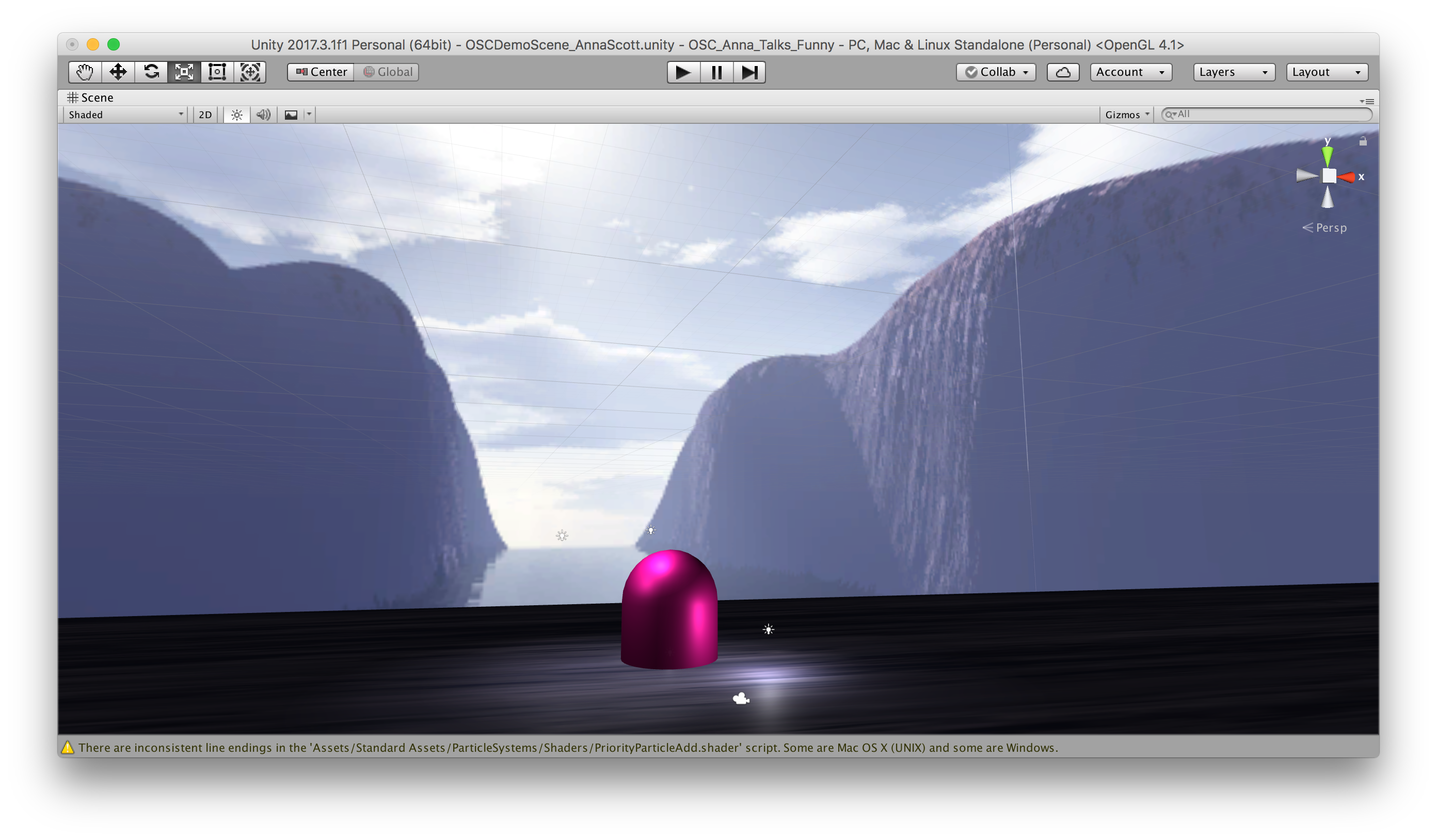

Collaboration with Scott Leinweber

Disclaimer: Due to run-time issues between my programs and Unity, I’m currently not able to get a real-time screen recording of the live interaction.

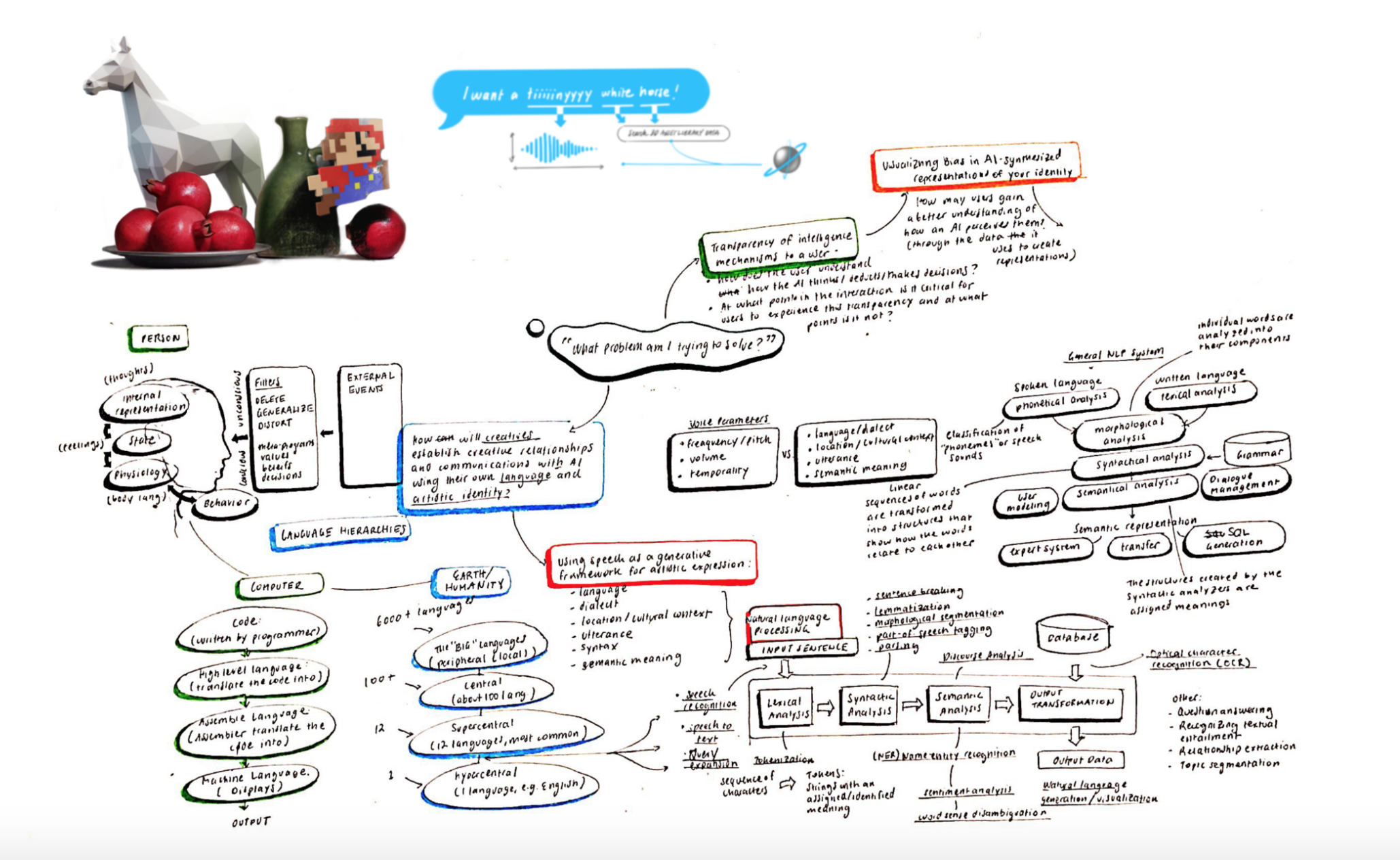

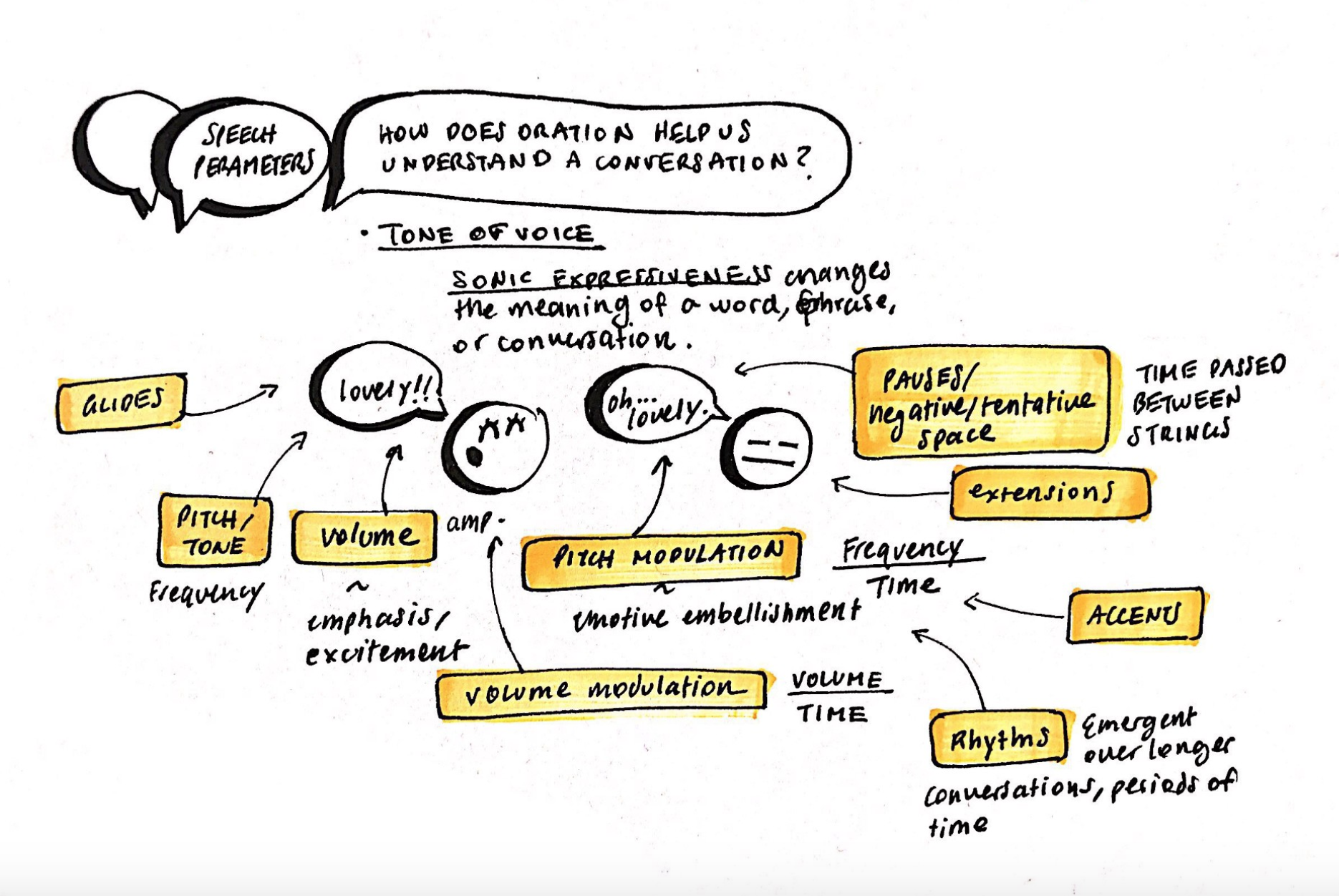

I pursued a concept in which I position my voice as the authority that manipulates an object based on my satisfaction or dissatisfaction with it. Stemming from my interest in artifacts of utterance and how verbal-visual systems facilitate cross-contextual translation, I’ve begun to explore how voice interactions construct intimate power structures between machines and people.

This is a game that is fun for me and is only for me.

Research

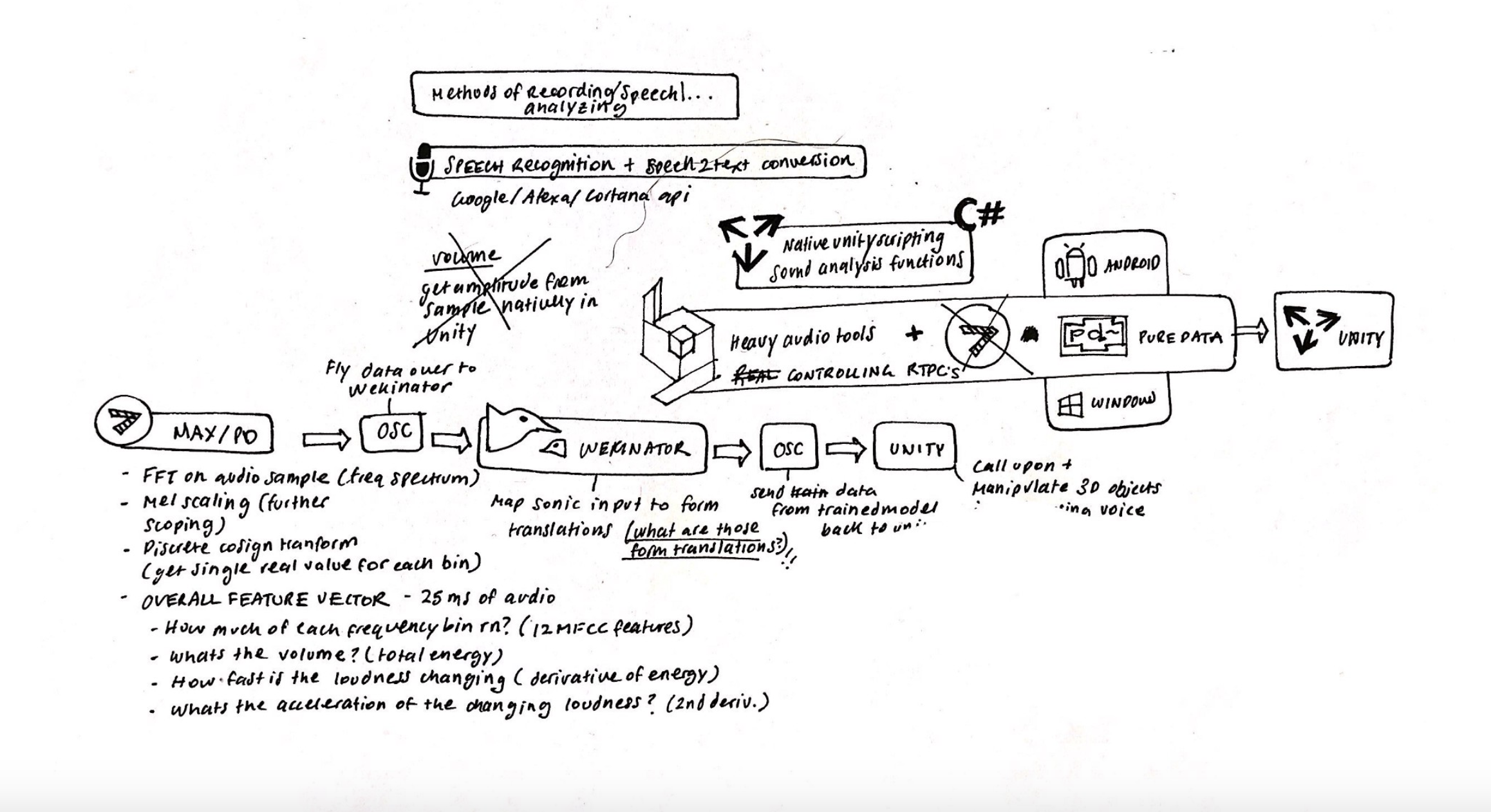

To understand how to build a speech detection and analysis system for this context, I read up on modern speech processing techniques and phrase to phoneme transcription systems while also exploring different models of audiovisual meaning-making.

Technical Implementation

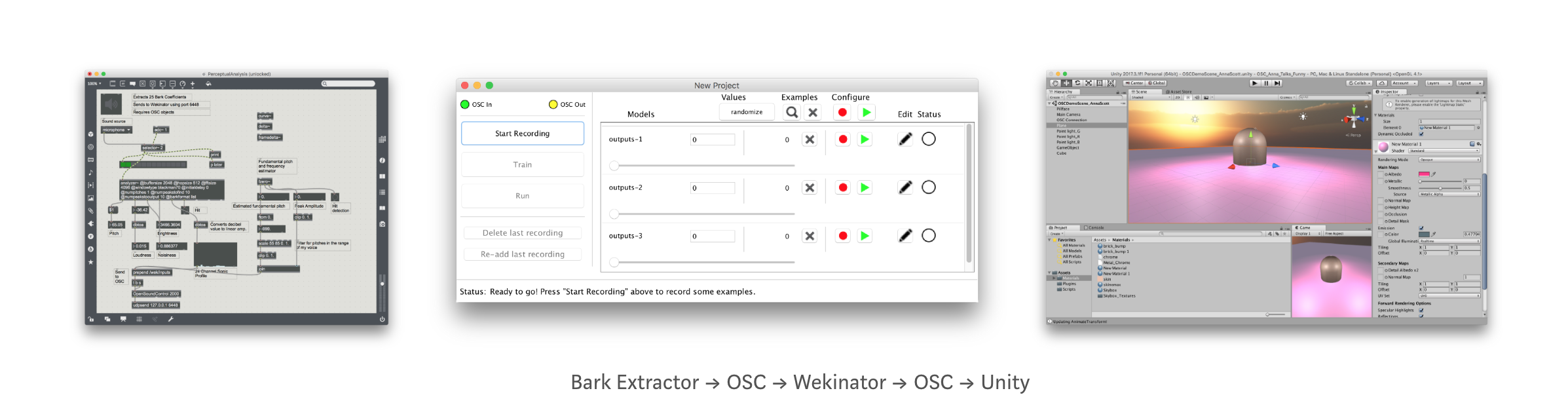

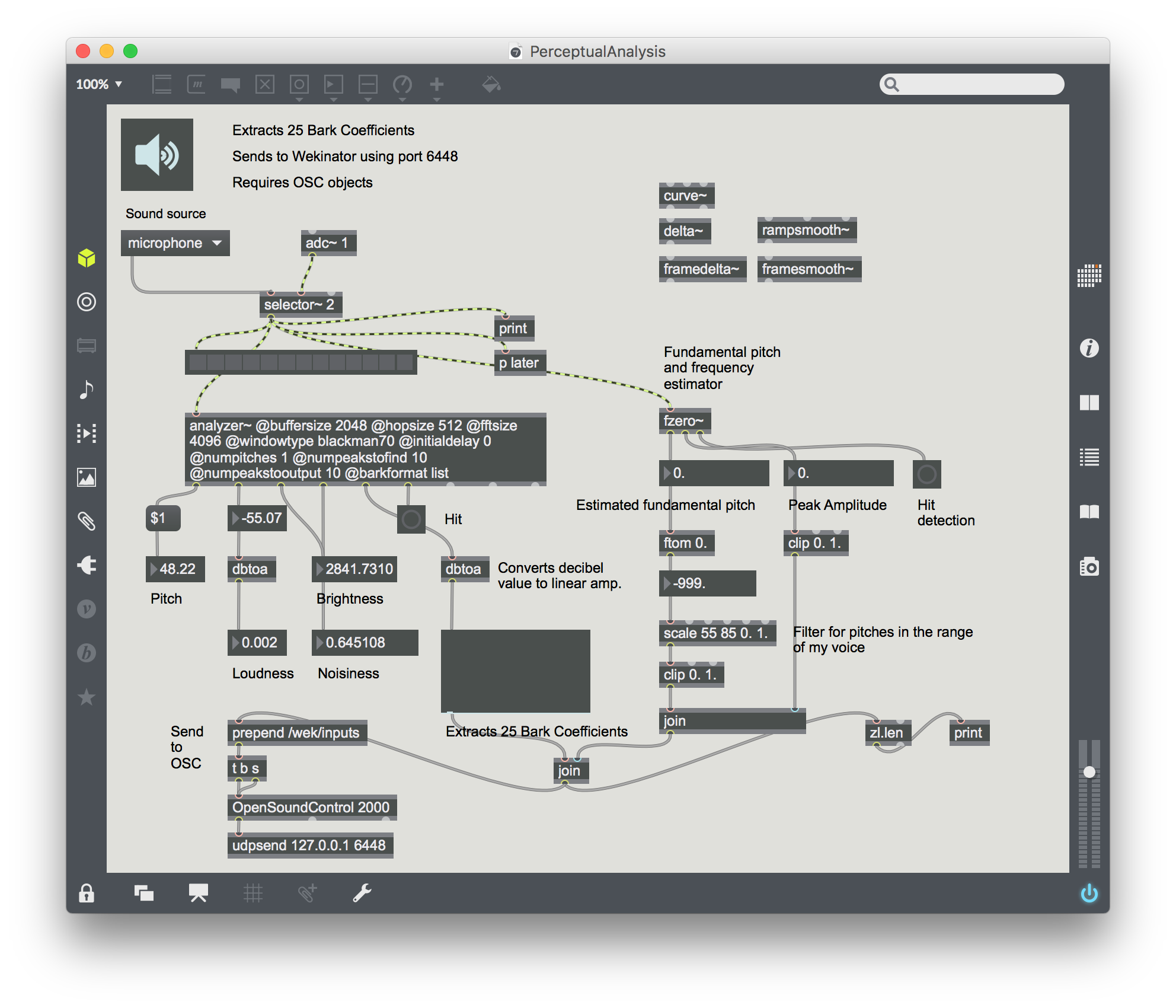

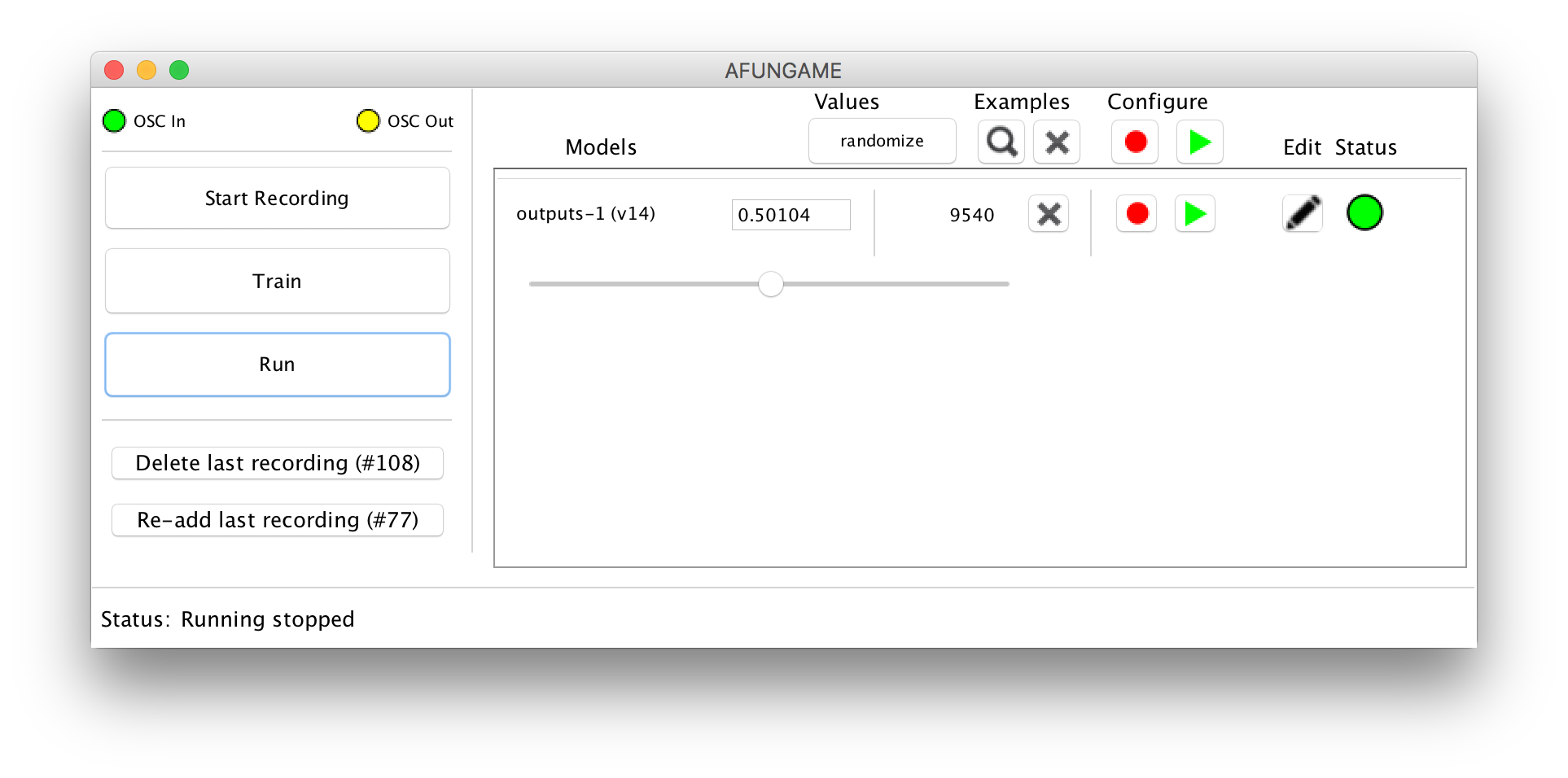

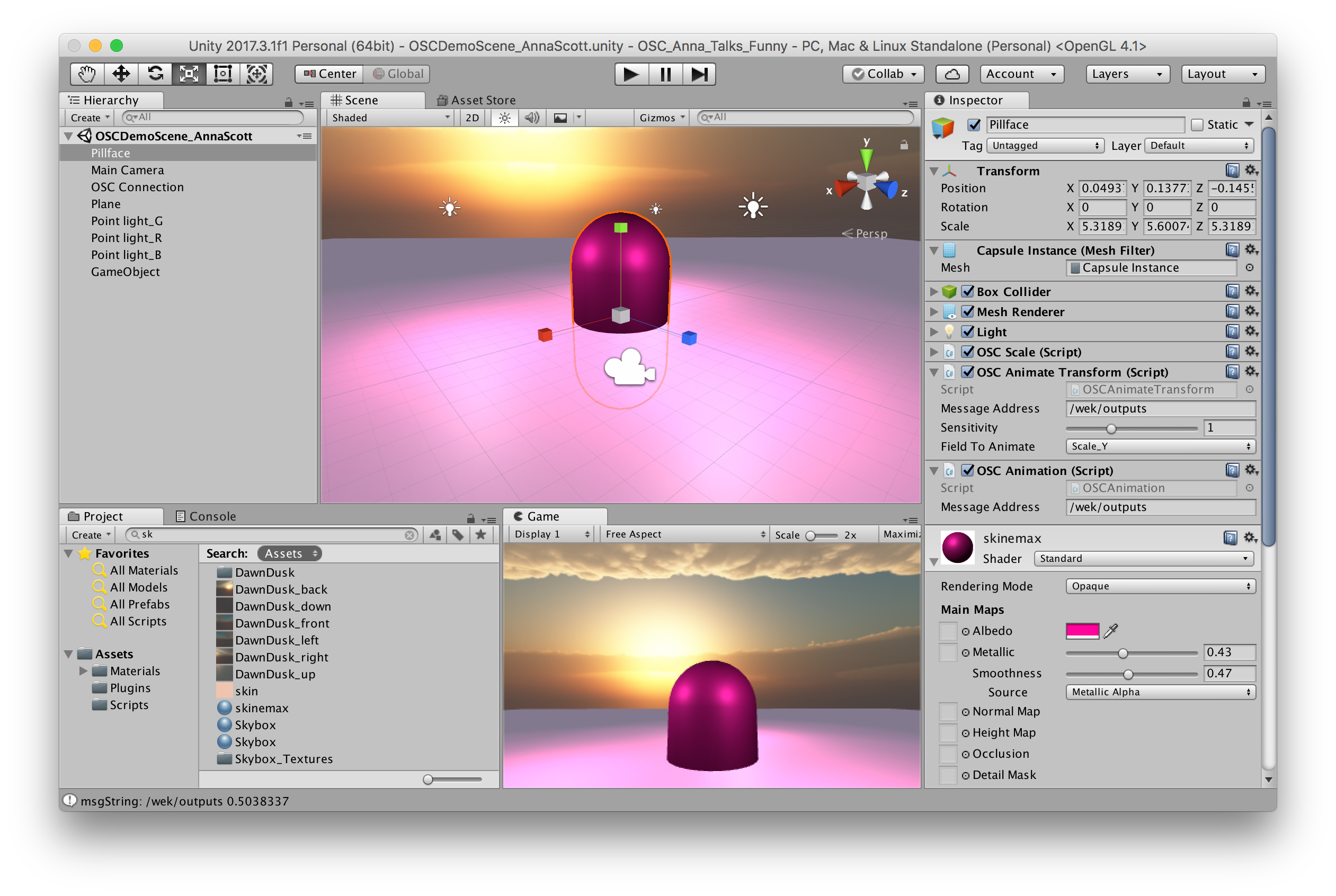

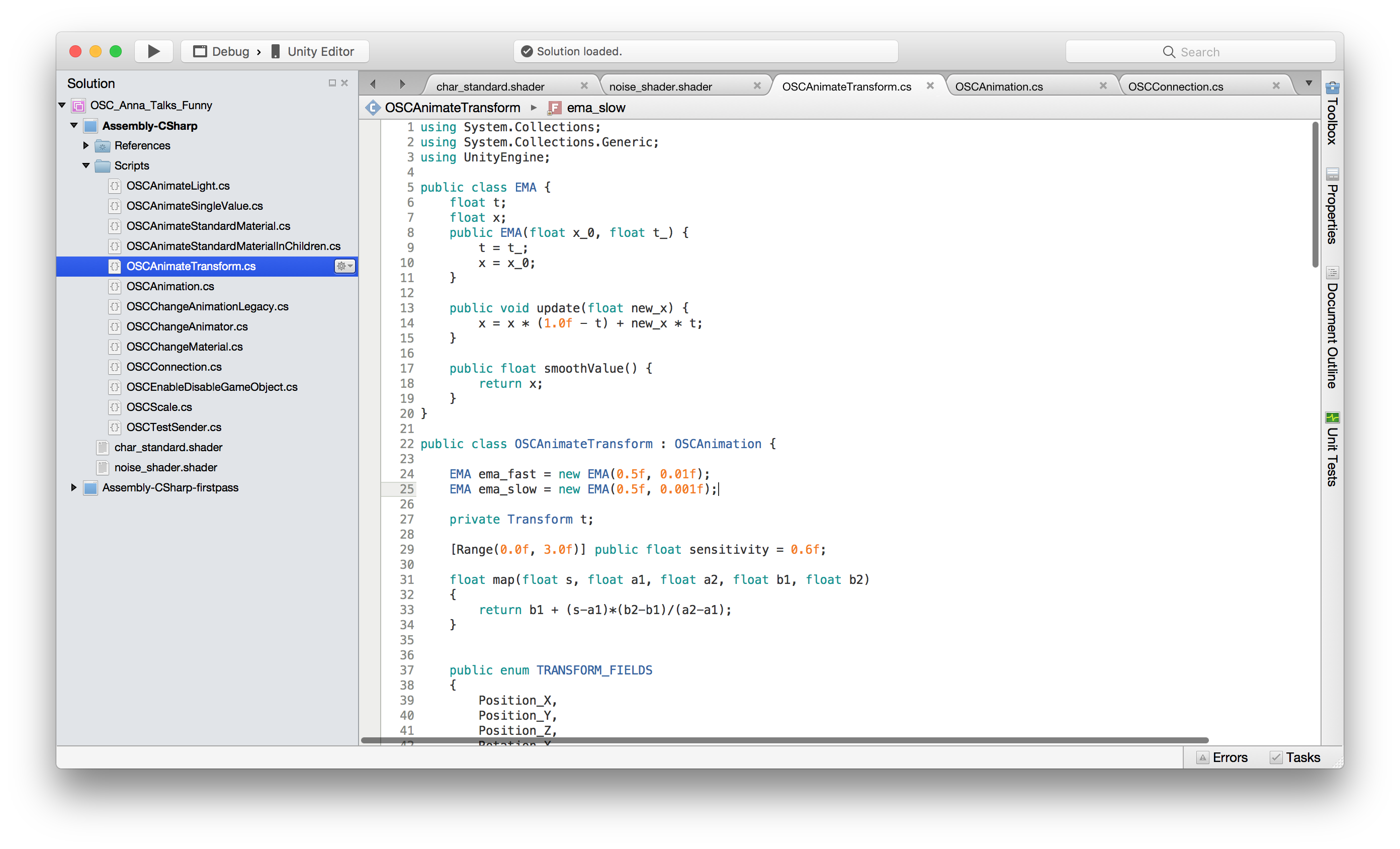

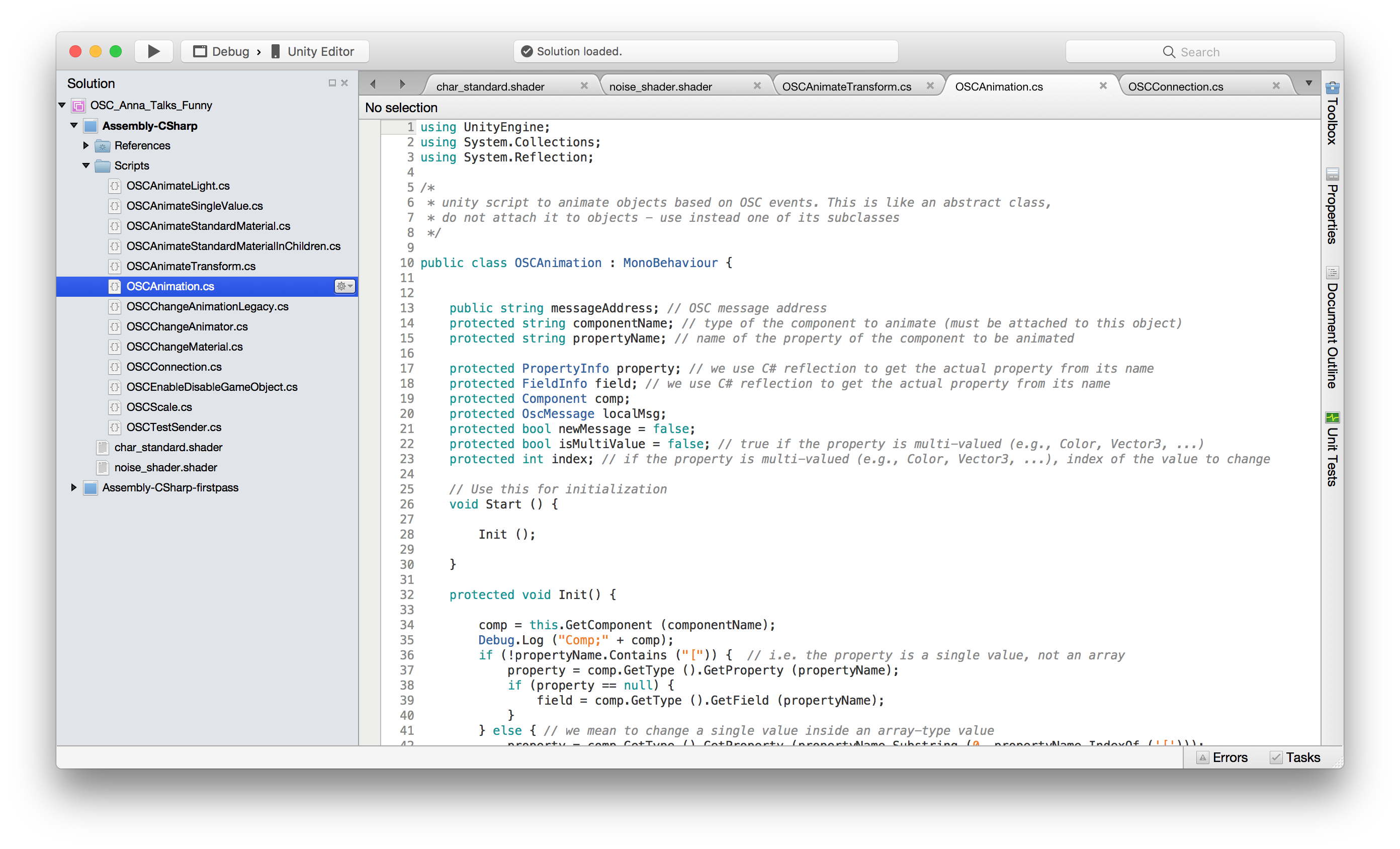

This project connects three applications, Max/MSP, Wekinator and Unity, over OSC (Open Sound Control). I’m also using a MOTU audio interface to connect a mic to the Max patch, which does the pre-processing of the incoming audio.

Max Patch Functionality: Extracts 25 Bark coefficients, the fundamental pitch, and the peak amplitude of the incoming audio signal, and sends these 27 features over Open Sound Control on port 6448.

Wekinator Neural Net Model: Maps 27 features from Max to 1 output.

Unity Scripts (UnityOSC/C#): Listen for the output value from Wekinator and use it to control the Y scale of the cylinder and the brightness of the point lights.
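The OSC legs of this pipeline can be sketched as follows. This is an illustration in Java, not the project's Max patch or C# scripts. It encodes 27 floats (25 Bark coefficients, pitch, peak amplitude) in the OSC 1.0 layout (null-terminated strings zero-padded to 4 bytes, a `,fff…` type-tag string, then big-endian 32-bit floats) and sends them to `/wek/inputs` on port 6448, Wekinator's default input address and port. On the receiving side it decodes a one-float message such as Wekinator's default `/wek/outputs` and maps the value onto a range. The `localhost` target, the assumption that the model outputs 0..1, and the mapping ranges are hypothetical.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Minimal OSC 1.0 helpers for messages whose arguments are all floats.
class Osc {
    // OSC strings end with a null byte and are zero-padded to 4 bytes.
    static int paddedLength(String s) {
        int withNull = s.getBytes(StandardCharsets.US_ASCII).length + 1;
        return (withNull + 3) / 4 * 4;
    }

    static byte[] encode(String address, float[] args) {
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < args.length; i++) tags.append('f');
        String typeTags = tags.toString();
        ByteBuffer buf = ByteBuffer.allocate(
                paddedLength(address) + paddedLength(typeTags) + 4 * args.length);
        putString(buf, address);
        putString(buf, typeTags);
        for (float f : args) buf.putFloat(f); // ByteBuffer is big-endian by default
        return buf.array();
    }

    private static void putString(ByteBuffer buf, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        buf.put(bytes);
        // allocate() zero-fills, so skipping ahead leaves null padding behind.
        buf.position(buf.position() + paddedLength(s) - bytes.length);
    }

    // Sender side: 25 Bark coefficients + pitch + peak amplitude = 27 floats.
    static void sendFeatures(float[] bark, float pitch, float peak) throws IOException {
        float[] features = new float[bark.length + 2];
        System.arraycopy(bark, 0, features, 0, bark.length);
        features[bark.length] = pitch;
        features[bark.length + 1] = peak;
        byte[] packet = encode("/wek/inputs", features);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(packet, packet.length,
                    InetAddress.getByName("localhost"), 6448));
        }
    }

    // Receiver side: reads a padded string starting at the buffer's position
    // (positions are 4-aligned because the packet starts at index 0).
    private static String readString(ByteBuffer buf) {
        int start = buf.position();
        int end = start;
        while (buf.get(end) != 0) end++;
        String s = new String(buf.array(), start, end - start, StandardCharsets.US_ASCII);
        buf.position((end + 1 + 3) / 4 * 4);
        return s;
    }

    // First float argument, or NaN if the address or type tag doesn't match.
    static float firstFloat(byte[] packet, String expectedAddress) {
        ByteBuffer buf = ByteBuffer.wrap(packet);
        String address = readString(buf);
        String tags = readString(buf);
        if (!address.equals(expectedAddress) || !tags.startsWith(",f")) return Float.NaN;
        return buf.getFloat();
    }

    // Clamped linear map from a 0..1 model output onto [lo, hi],
    // e.g. for the cylinder's Y scale or the lights' intensity.
    static float mapOutput(float value, float lo, float hi) {
        float t = Math.max(0f, Math.min(1f, value));
        return lo + (hi - lo) * t;
    }
}
```

Clamping before mapping keeps an out-of-range prediction from the model from stretching the cylinder past its intended size.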

Central Resources

Rebecca Fiebrink’s Machine Learning for Musicians and Artists course at Goldsmiths, University of London, https://www.kadenze.com/courses/machine-learning-for-musicians-and-artists-v

Google, google.com

Cognitive Architectures: Models of Perceiving and Interpreting the World https://www.wikipendium.no/TDT4137_Cognitive_Architectures

Unity OSC Receiver by heaversm: https://github.com/heaversm/unity-osc-receiver

Special thanks to

Thank you to Scott Leinweber, a collaborator on this project. Scott’s major contribution for this instance of the project was reworking C# scripts to get Unity OSC up and running

Thank you to Luke Dubois, composer, artist and co-creator of Jitter for Max/MSP who generously sat down, schooled me on voice and noise processing in Max and helped me rework my patch

Thank you to Aman Tiwari for helping me debug in dire times of need

Thank you to Claire Hentschker for project consulting, conceptual free-styling and moral support

Thank you to Nitesh Sridhar for misc.!

Thank you to Golan and the Frank-Ratchye Studio for Creative Inquiry for giving me a space and community to work in

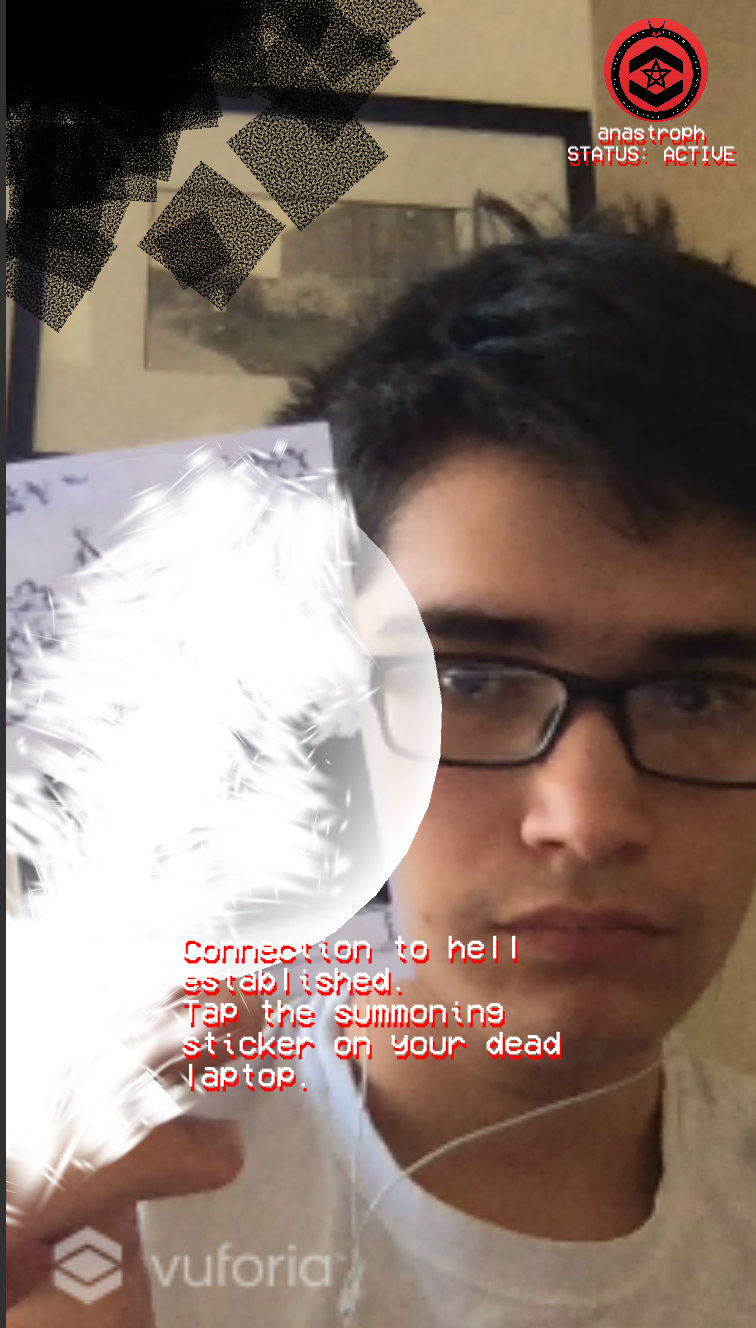

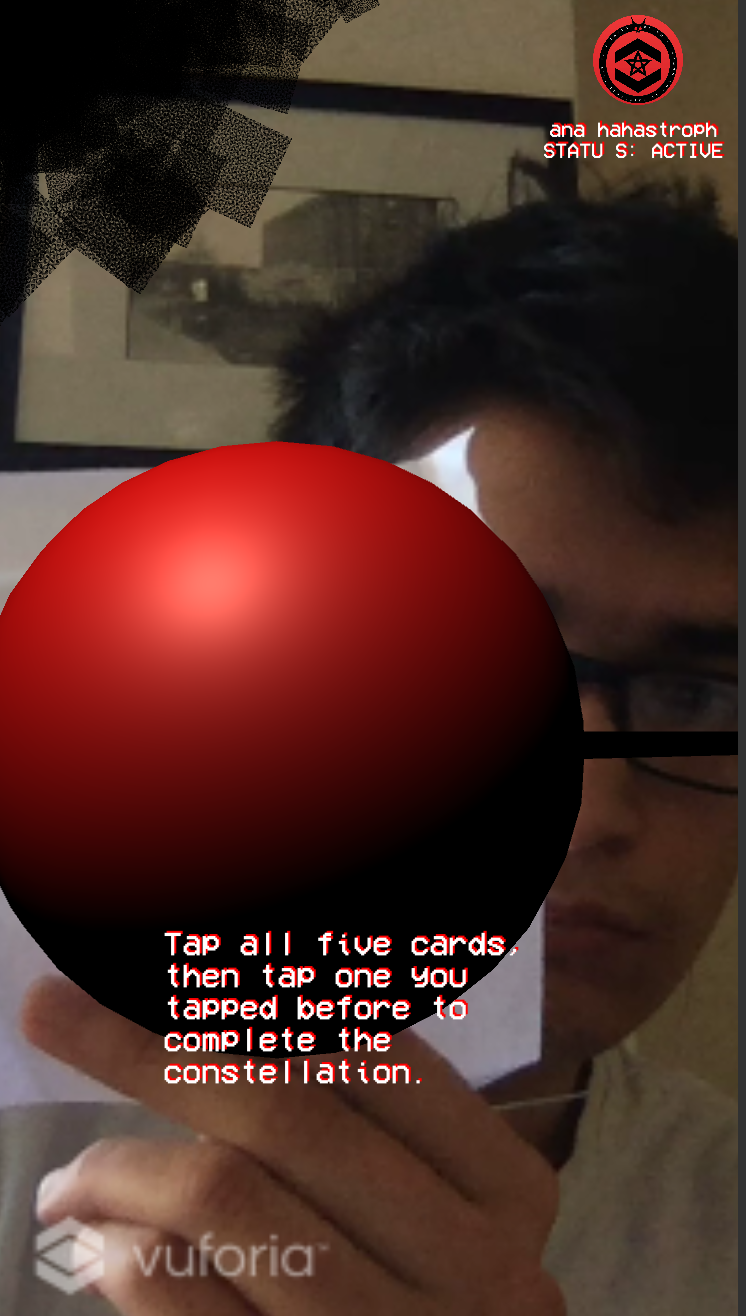

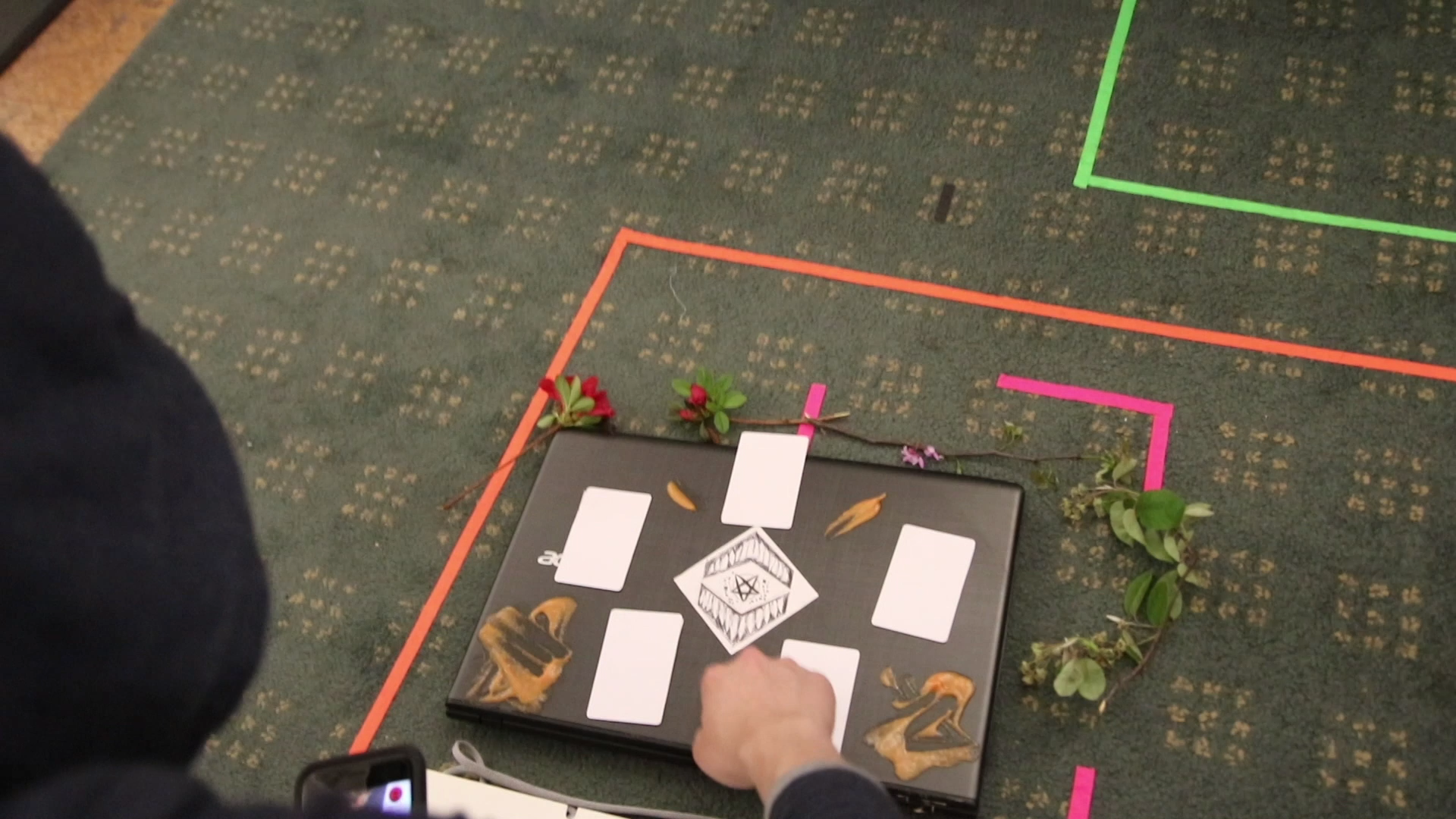

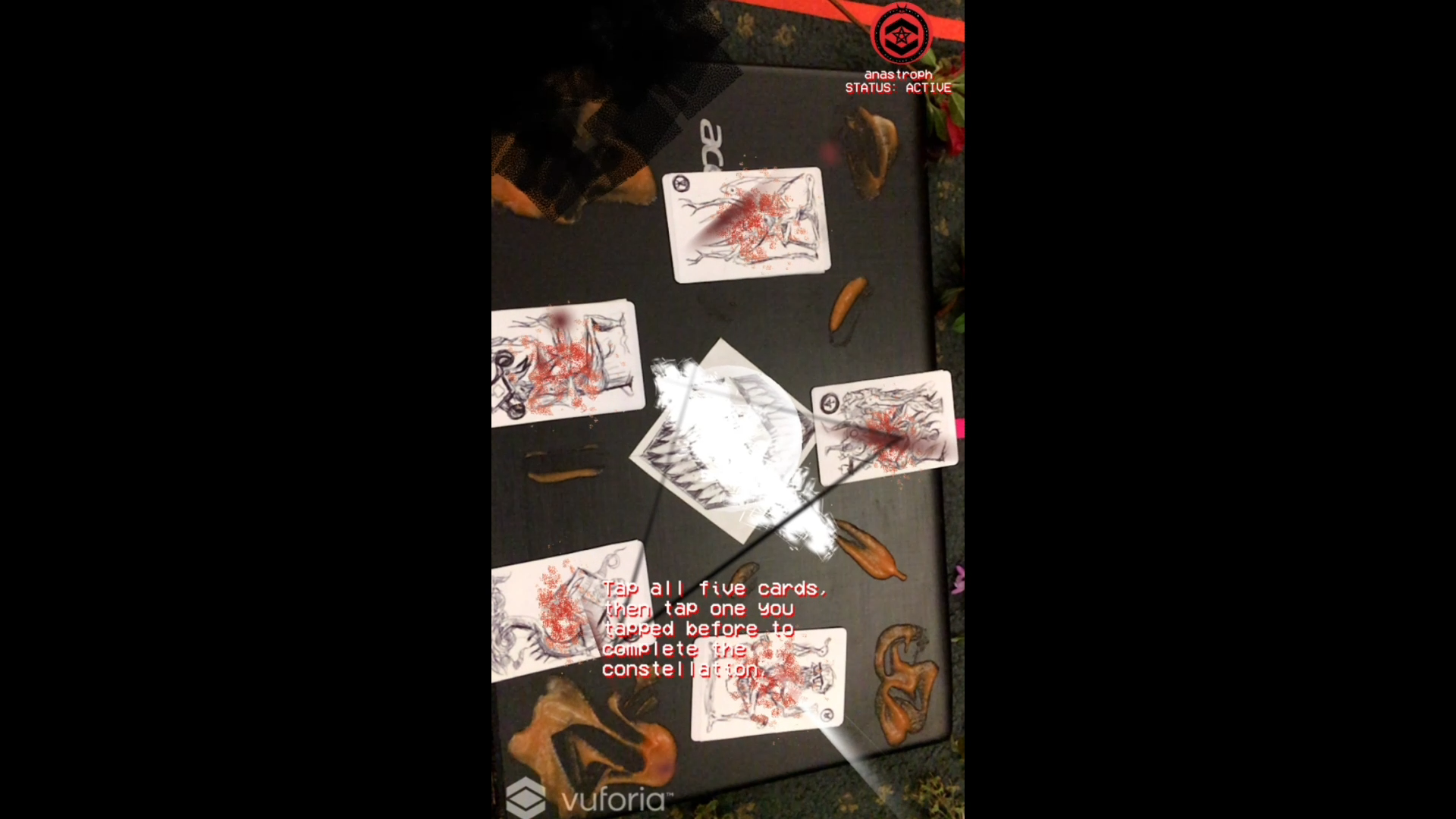

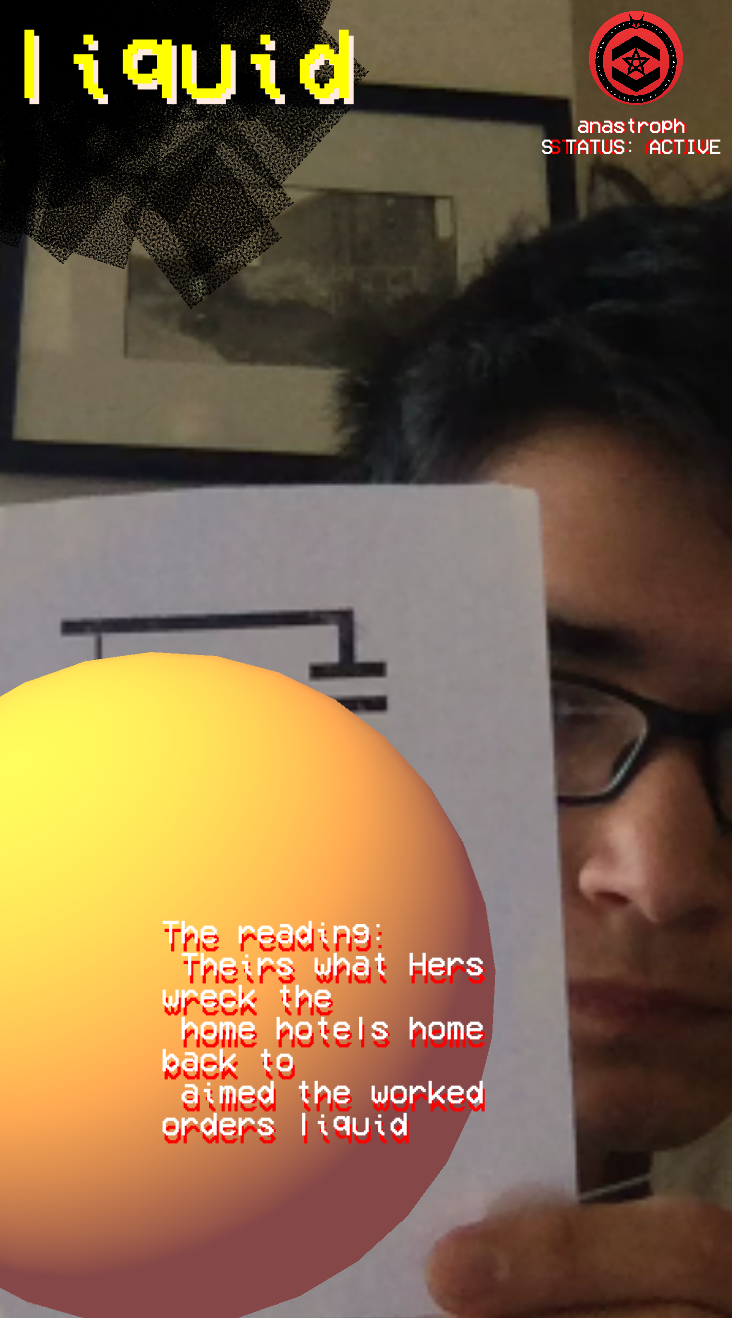

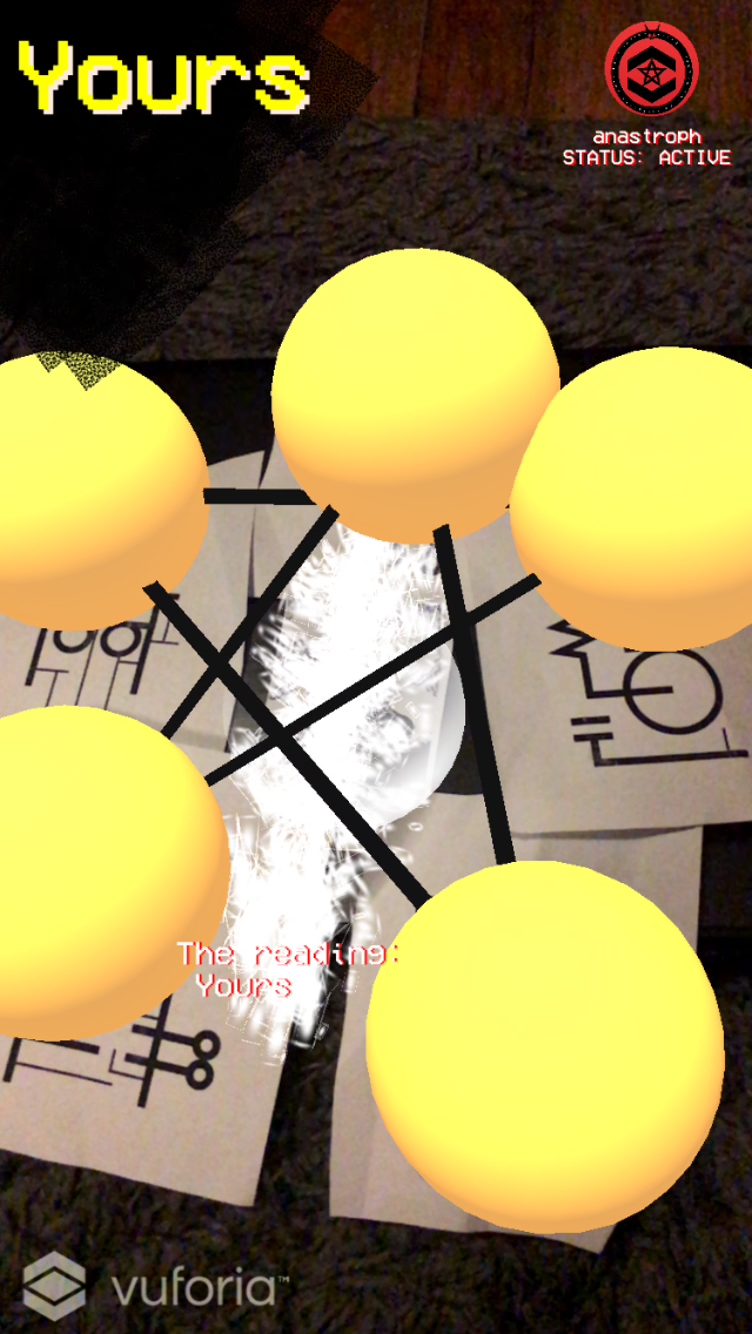

Tweet: Hold a seance for your dead laptop in AR.

About the Final:

Originally, I wanted to make a game in which you try to communicate with and guide your dead lover through the afterlife, honestly for no particular reason and without any real intention besides the gimmick of using cards to communicate. After my laptop crashed on April 11th, Claire suggested (half joking) that I make a game about summoning my dead laptop. I decided to run with it, and the experience became way more interesting.

I think the documentation is more interesting than the actual AR app. I like the fake glitching and UI elements in the preface to the AR, but the AR itself is underwhelming, and the reading seems inconsequential. In the future, I would want to find a way to make the reading feel more impactful, though I’m not sure how. Currently, it generates an anastrophe (a sentence whose words can be rearranged in pretty much any order and still make some sense), and its word order is effectively random, set by the order in which you tap the cards.
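The tap-order mechanic can be sketched like this, assuming each card carries one phrase and repeat taps of the same card are ignored. The class name and the card names and phrases in any usage are hypothetical; the post doesn't show how the app stores them.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Builds the reading from the order in which cards are tapped: each card
// contributes one phrase, and repeat taps of the same card are ignored.
class Reading {
    private final Map<String, String> phraseByCard;
    private final Set<String> tapped = new LinkedHashSet<>(); // keeps tap order

    Reading(Map<String, String> phraseByCard) {
        this.phraseByCard = phraseByCard;
    }

    void tap(String card) {
        if (phraseByCard.containsKey(card)) tapped.add(card);
    }

    String sentence() {
        List<String> parts = new ArrayList<>();
        for (String card : tapped) parts.add(phraseByCard.get(card));
        return String.join(" ", parts);
    }
}
```

A `LinkedHashSet` does both jobs at once: it remembers the first-tap order and silently drops duplicates.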

In terms of difficulty, my biggest problem was juggling this project with my other project for Suzie Silver’s class, “Short Leash,” which I’m also building in Unity and am still working on. However, the ways of thinking about managing instantiations and keeping control of game objects overlapped between the two projects. I also reused some of the sounds I made for that project in this project’s documentation.

Video

Still Image

Process Photos

Acknowledgements

Thank you to Claire Hentschker for the idea, Golan Levin for the extension and understanding, the BBC sound library for sounds in the documentation, Josh Kery for allowing me to use equipment in the beginning stages, and Nitesh Sridhar for filming the documentation.

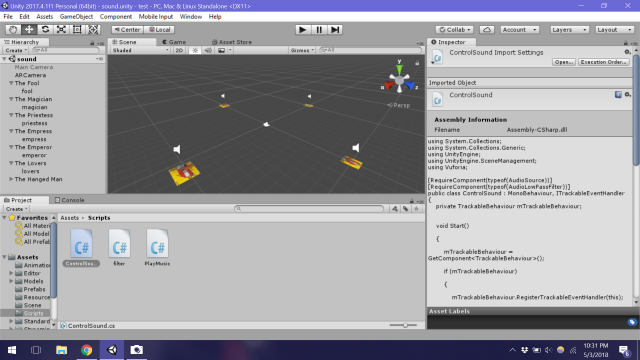

Augmented Reality Sound and Objects

TWEET: Scan over household objects and hear the music the objects play

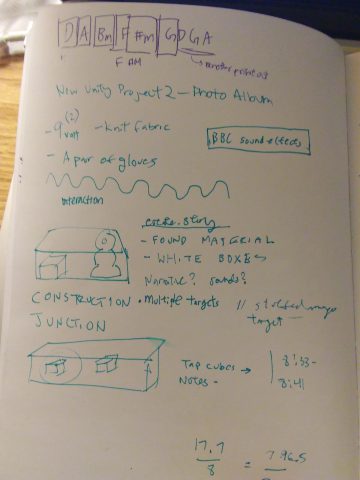

This was built on a previous project of mine with music cubes (bottom of post) that played sounds. It also built on my Augmented Photo Album project, where I overlaid video and sound on specific family moments. To iterate on those projects, make them interactive, and make them more interesting, I chose household products that would juxtapose each other and create sounds that would layer.

Each object is different from the others, and I thought the collection of objects could also speak to the person who owns it. For this collection, I was thinking about a newlywed, or a mom with kids. I think it is an interesting concept that you can look at an object objectively and it will give clues about the personality and role of the person who owns it. Sometimes, objects may even reveal embarrassing secrets, such as the stool softener.

In terms of difficulties, I had trouble coming up with a collection of items and sounds that made sense and were interesting at the same time. I think it could be pushed further by embedding secrets and more surprises in these sounds. Technically, the build on the phone was a bit slow because I was detecting many objects at a time, and the sounds didn’t fade out the way I wanted them to. If I were to iterate, I would pay more attention to the narrative of these objects and experiment with more interesting sounds. I got stuck on the purpose of my AR music of objects, and I think I could have pushed the idea a bit further.
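One way to get a smoother fade-out is to give each object's sound its own volume that eases toward 1 while its image target is tracked and toward 0 once tracking is lost, instead of stopping playback outright. A minimal sketch, assuming a linear fade and a hypothetical `fadeSeconds` setting; in Unity the returned value would feed something like an `AudioSource`'s volume each frame.

```java
// Per-object volume that fades in while its image target is tracked and
// fades out smoothly when tracking is lost, instead of cutting off.
class FadingSound {
    private final double fadeSeconds;
    private double volume = 0.0;

    FadingSound(double fadeSeconds) {
        this.fadeSeconds = fadeSeconds;
    }

    // Call once per frame with that frame's duration in seconds.
    double update(boolean tracked, double deltaSeconds) {
        double target = tracked ? 1.0 : 0.0;
        double step = deltaSeconds / fadeSeconds; // full range per fadeSeconds
        if (volume < target) {
            volume = Math.min(target, volume + step);
        } else {
            volume = Math.max(target, volume - step);
        }
        return volume;
    }
}
```

Because each object owns its own fader, overlapping sounds can layer in and out independently as the camera passes over them.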

I had more objects with me, but decided to cut them out because their image targets were hard to detect.

INDIVIDUAL SOUNDS FROM BBC SOUND LIBRARY

OBJECTS:

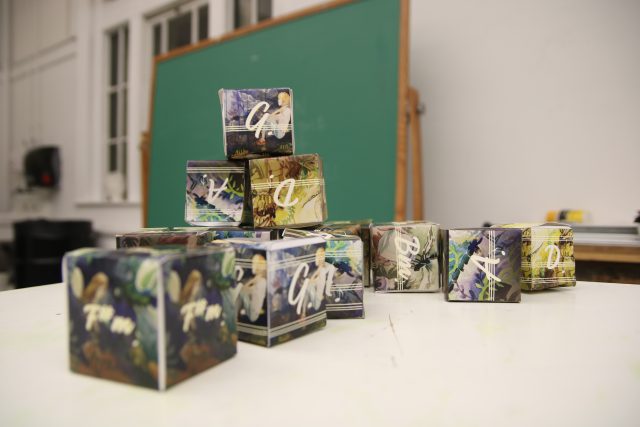

AR MUSIC CUBES:

Before this project, I came up with the idea of linking sounds to physical music notes in origami boxes as a way for the audience to create their own music (inspired by AR paper cubes). After talking to Golan, I agreed that the music notes might be too similar and that I should look at more experimental sounds, since I can’t say I’m an expert at music. I also agreed that the image targets could be more graphic. The AR final objects piece above built on this idea, as it was a way for me to create music out of physical things.

I thought these AR music boxes were interesting because of the layers of notes. I really wanted to push that in my final project with layers of interesting sounds from seemingly “random objects.”

SKETCHES:

For most of my final project, I thought the best way to detect objects was to make custom QR codes, but I realized they were really small and not ideal for detection. Near the end, I finally realized that I just needed objects with logos that made good image targets, and by then half the objects I had planned to augment were gone. Here are the QR codes that I created but didn’t use.

Thank you to Peter Sheehan for teaching me how to build to the phone, Josh Kery for helping me document and giving me conceptual advice, Alex Petrusca for helping me out with the sound part of the scripting, Golan Levin for giving me conceptual advice, and BBC sounds for the sound effects.

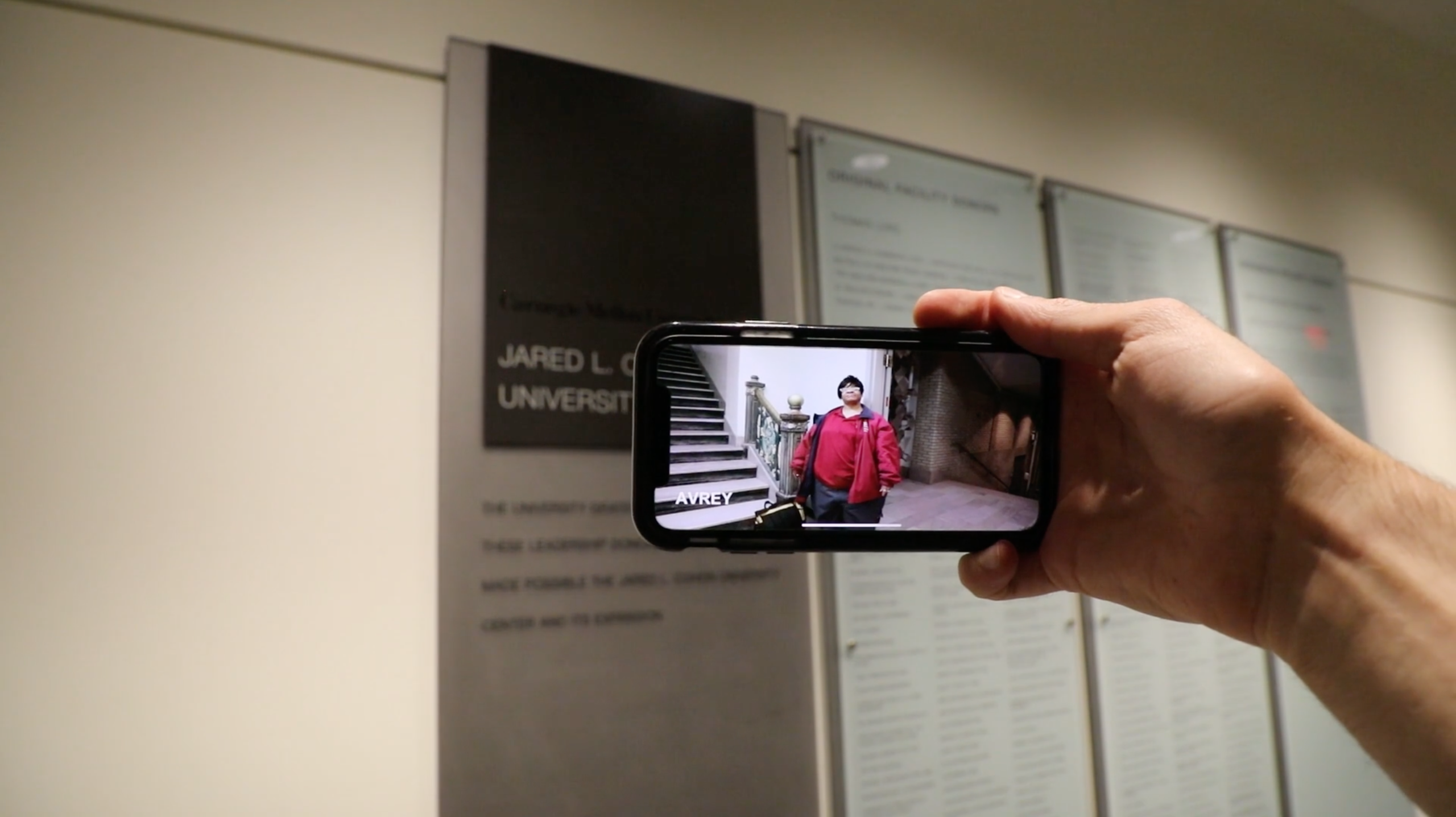

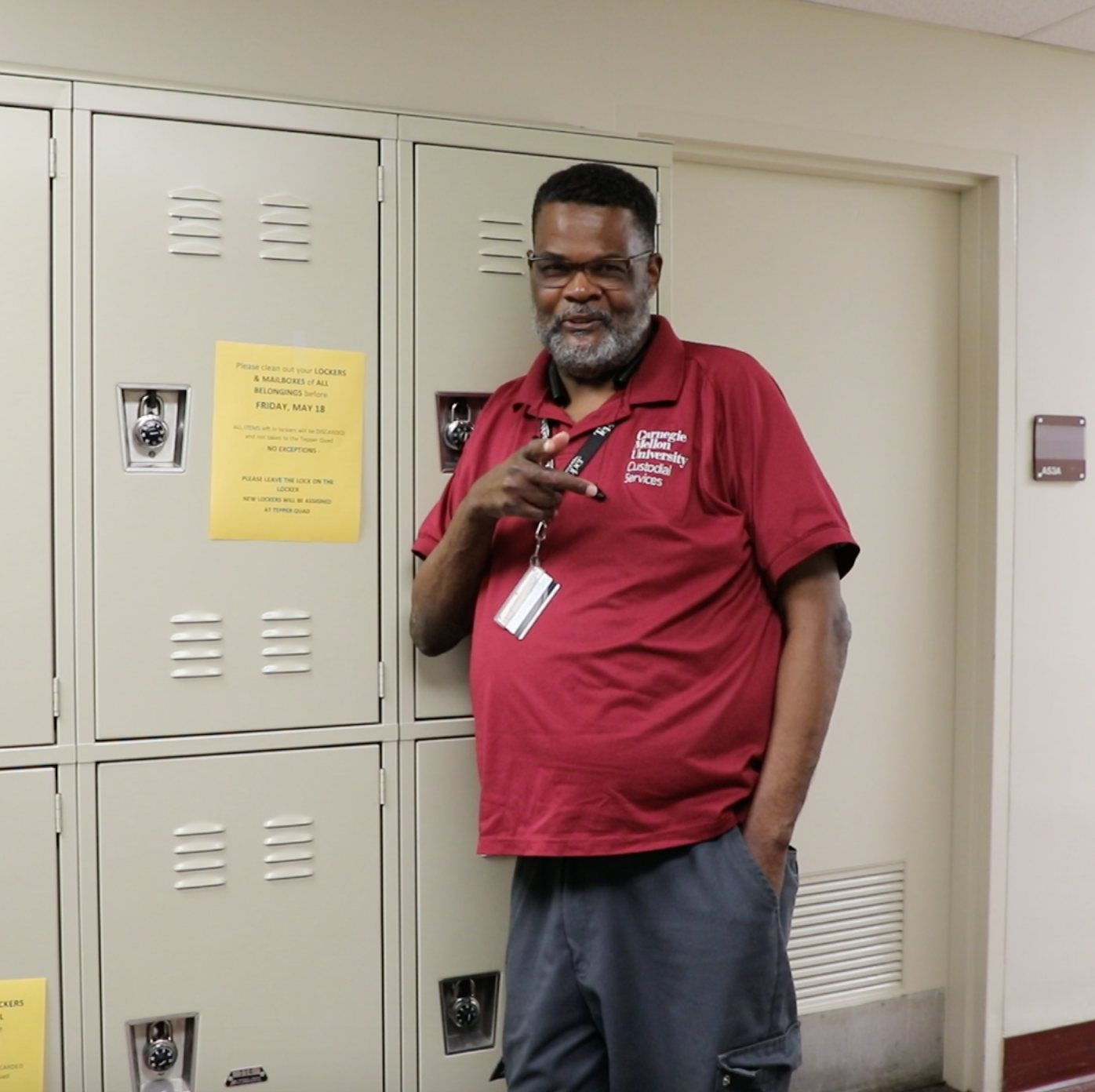

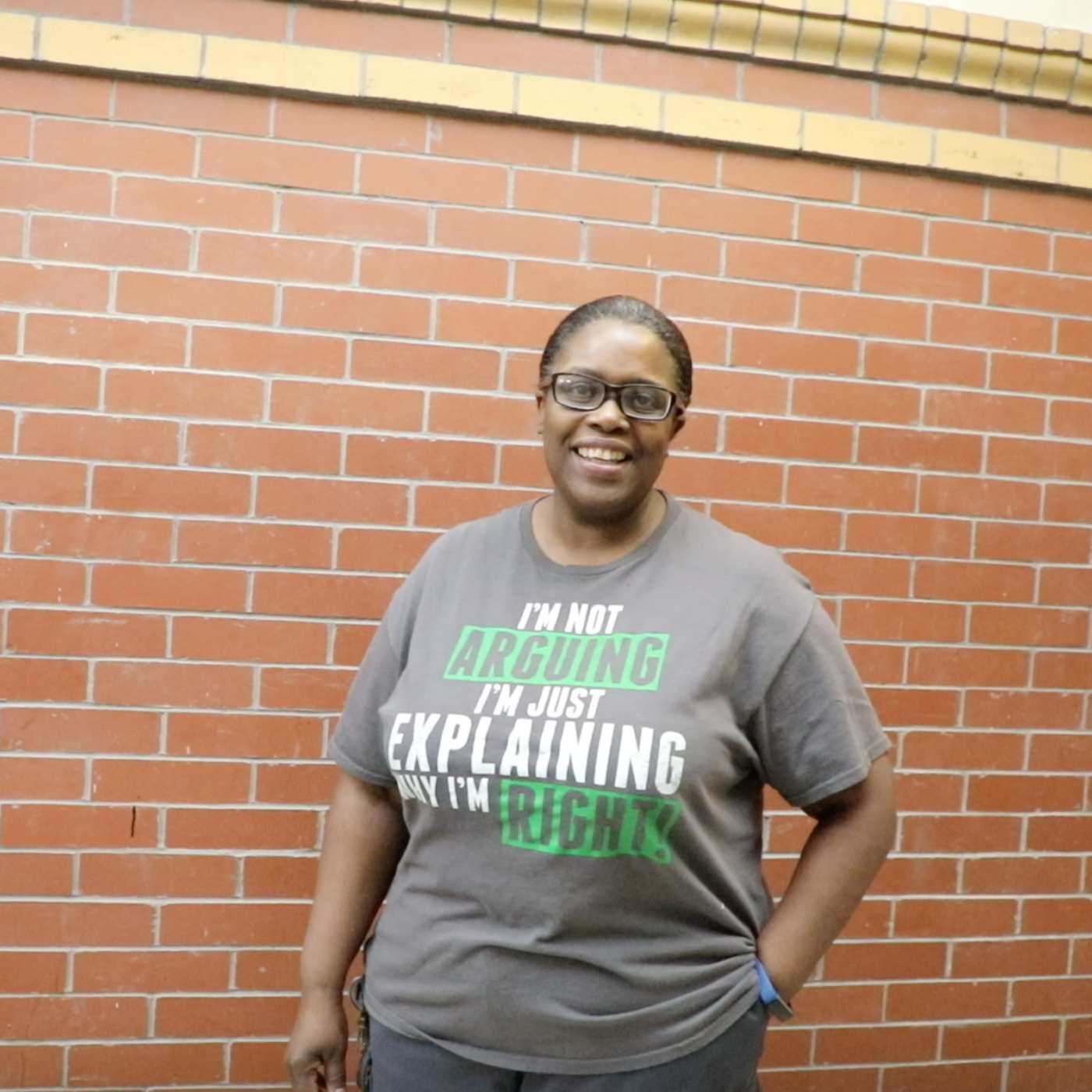

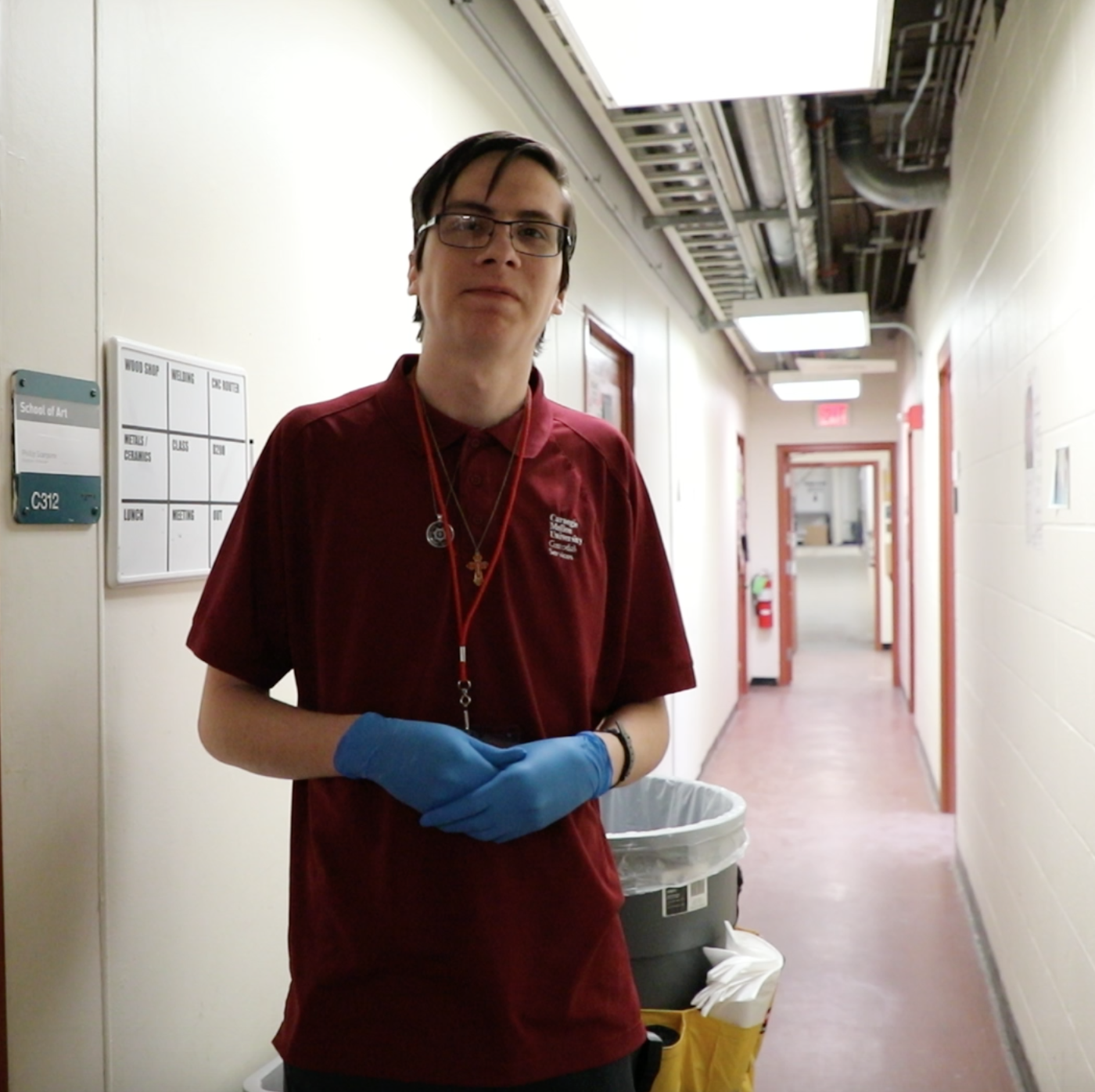

CMU Stories reinterprets who we commemorate and how through the stories of Avrey, Leon, Latasha, Tiana, and Nikola, five incredible night-shift custodial staff at Carnegie Mellon University.

OVERVIEW

This piece aims to give image and voice to those who contribute to CMU in ways we often overlook, by overlaying existing narratives of commemoration with the stories of night-shift custodial staff. I approached this piece thinking about the medium, augmented reality, and how it would translate into a project. The relationship between the seen and the unseen, and the ability to give life to a scene or story, led me to think about who in my life is rarely seen, yet very present. Night-shift custodial workers, who often work from 11pm to 6am, fit into this relationship: they are often unseen, though incredibly important. In my project, I was interested in learning more from night-shift custodial workers, not just about their jobs, but about who they are. I was interested in stories. Thus, over the course of three days, between 9:30pm and 2am, I circled CMU’s campus meeting and speaking with any working staff I came across. The five videos in this application reflect a small fraction of the more than thirty meetings I had. The hours I spent interacting with people are just as important as the final product, if not more so. While I was doing this work, I was thinking about where these videos would exist within the framework of augmented reality. I decided to use existing memorial plaques as image targets and then overlay the videos of Avrey, Leon, Latasha, Tiana, and Nikola as a way of reinterpreting commemoration. The memorials and images of gratitude that are visible often honor money and power. Yet we don’t take time to honor, or even thank, the individuals who sustain our campus on a daily basis. I hope this app respects the night-shift custodial staff I worked with and serves as a small piece of gratitude for the work that they do. By extracting the videos from their original contexts, I attempt to begin a conversation about who we should be thanking around our school.

STILL SHOTS

ANIMATED GIF

ACKNOWLEDGMENTS

First and foremost, thank you Avrey, Leon, Latasha, Tiana, and Nikola for being collaborators on this piece. It belongs to you, I was merely an organizer. Additionally, thank you to all the night-shift custodial staff that took time to speak with me over the course of this project. Of course, thank you Golan Levin and Claire Hentschker for guidance, and a wonderful course. Thank you to all my classmates, especially my “buddy” Tyvan, who supported me, not just on this project, but throughout the semester!

Avrey Taylor

Leon Murphy

Latasha Scruggs

Tiana Handesty

Nikola Mirkovic

Vinyl & controller setups are large and expensive.

Two audio track crossfade without beat/tempo match.
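Crossfading two tracks without beat or tempo matching comes down to mapping one fader position to a pair of gains. The sketch below assumes an equal-power curve (a common choice, not something the source specifies), and `equalPowerCrossfade` and `mixSample` are hypothetical names. A linear fade would also work, but it tends to dip in loudness around the midpoint.

```cpp
#include <cassert>
#include <cmath>

// Gains for the two decks at one crossfader position.
struct CrossfadeGains {
    float a;  // gain applied to track A
    float b;  // gain applied to track B
};

// Equal-power crossfade: position 0.0 = all A, 1.0 = all B.
// a^2 + b^2 == 1 at every position, so summed power stays constant.
CrossfadeGains equalPowerCrossfade(float position) {
    assert(position >= 0.0f && position <= 1.0f);
    const float halfPi = 1.57079632679f;
    return {std::cos(position * halfPi), std::sin(position * halfPi)};
}

// Mix one sample from each track using the current fader position.
float mixSample(float sampleA, float sampleB, float position) {
    CrossfadeGains g = equalPowerCrossfade(position);
    return g.a * sampleA + g.b * sampleB;
}
```

Because neither track is time-stretched, the only state the mixer needs is the fader position, which a capacitive slider could supply directly.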

Interactive textile interface: acrylic and silver-nano inks on polyester substrate.

Bluno Nano and CapSense Arduino library.

Bluno can easily connect Arduino sensors to Android & iOS. Although this project is not serially connected to Unity on Android, the example code connects to an app created in Android Studio, and the Bluno connects to Unity on iOS.

This program uses an initialization stage to calculate a baseline for each screen-printed touch sensor, then uses a multiplier to calculate a touch threshold. To increase the accuracy of the sensor data, I will implement a touch calibration step in the setup sequence.
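The threshold logic can be checked in plain C++ off the board. This is a hedged illustration rather than the driver itself: `meanOf`, `multiplierThreshold`, `calibratedThreshold`, and `isTouched` are hypothetical names, and the midpoint rule for the planned touch-calibration step is an assumption about how that step might work. Unlike the Arduino code, which divides the long sums by `cts` in integer arithmetic before multiplying, this version keeps the mean as a floating-point value.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Mean of a set of raw capacitive readings.
double meanOf(const std::vector<long>& samples) {
    assert(!samples.empty());
    long sum = std::accumulate(samples.begin(), samples.end(), 0L);
    return static_cast<double>(sum) / samples.size();
}

// Current approach: threshold = multiplier * idle baseline.
double multiplierThreshold(const std::vector<long>& idle, double mult) {
    return mult * meanOf(idle);
}

// Hypothetical calibration step: the user holds the sensor during setup,
// and the threshold is placed halfway between the idle and touched means.
double calibratedThreshold(const std::vector<long>& idle,
                           const std::vector<long>& touched) {
    return (meanOf(idle) + meanOf(touched)) / 2.0;
}

// A reading counts as a touch once it rises above the threshold.
bool isTouched(long reading, double threshold) {
    return reading > threshold;
}
```

The midpoint rule adapts to how strongly each printed sensor actually responds, whereas a fixed multiplier assumes every sensor's touched reading scales the same way from its baseline.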

```cpp
#include <CapacitiveSensor.h>
// infinEight Driver
// Ty Van de Zande 2018

/*
 * CapitiveSense Library Demo Sketch
 * Paul Badger 2008
 * Uses a high value resistor e.g. 10M between send pin and receive pin
 * Resistor effects sensitivity, experiment with values, 50K - 50M. Larger resistor values yield larger sensor values.
 * Receive pin is the sensor pin - try different amounts of foil/metal on this pin
 */

// Architecture
// TBD
// touchRead() is a board-core function (Teensy provides it), not part of the
// CapacitiveSensor library included above.

// Touch-sensor input pins
const int IN1 = 18;
const int IN2 = 17;
const int IN3 = 16;

// Indicator LED pins
const int ledGROUND = 23;
const int ledONE = 21;
const int ledTWO = 20;
const int ledTHREE = 19;

// Latest raw readings and per-sensor touch thresholds
int SENSE1;
int SENSE2;
int SENSE3;
long THRESH1;
long THRESH2;
long THRESH3;

// A reading above mult * (idle average) counts as a touch
float mult = 1.7;

void setup() {
  pinMode(ledONE, OUTPUT);
  pinMode(ledTWO, OUTPUT);
  pinMode(ledTHREE, OUTPUT);
  pinMode(ledGROUND, OUTPUT);
  Serial.begin(9600);
  Serial.println("Prepping");
  initializeSensors();
}

void loop() {
  updateSensors();
  //printSensors();
  // Clear the LEDs, then relight any sensor that is currently touched
  digitalWrite(ledONE, LOW);
  digitalWrite(ledTWO, LOW);
  digitalWrite(ledTHREE, LOW);
  areWeTouched();
  delay(10);
}

// Light the matching LED and send the sensor's ID over serial when touched
void areWeTouched() {
  if (SENSE1 > THRESH1 || SENSE1 == -2) {
    // printSensors();
    digitalWrite(ledONE, HIGH);
    Serial.println("3");
  }
  if (SENSE2 > THRESH2 || SENSE2 == -2) {
    // printSensors();
    digitalWrite(ledTWO, HIGH);
    Serial.println("2");
  }
  if (SENSE3 > THRESH3 || SENSE3 == -2) {
    // printSensors();
    digitalWrite(ledTHREE, HIGH);
    Serial.println("1");
  }
}

void printThresh(int one, int two, int three) {
  Serial.print(one);
  Serial.print(" . ");
  Serial.print(two);
  Serial.print(" . ");
  Serial.print(three);
  Serial.println(" ");
}

void updateSensors() {
  SENSE1 = touchRead(IN1);
  SENSE2 = touchRead(IN2);
  SENSE3 = touchRead(IN3);
  // Array version for debugging. sizeof() returns bytes, so divide by the
  // element size to get the element count:
  // int SENSESTATES[] = {SENSE1, SENSE2, SENSE3};
  // int lisLEN = sizeof(SENSESTATES) / sizeof(SENSESTATES[0]);
  // for (int i = 0; i < lisLEN; i++) {
  //   Serial.print(i);
  //   Serial.print(": ");
  //   Serial.print(SENSESTATES[i]);
  // }
}

void printSensors() {
  Serial.print(SENSE1);
  Serial.print(" . ");
  Serial.print(SENSE2);
  Serial.print(" . ");
  Serial.print(SENSE3);
  Serial.println(" ");
}

// Average cts idle readings per sensor and scale by mult to get thresholds
void initializeSensors() {
  int cts = 104;
  //int mult = 20;
  long temp1 = 0;
  long temp2 = 0;
  long temp3 = 0;
  // Discard the first 20 readings while the sensors settle
  for (int i = 0; i < 20; i++) {
    updateSensors();
    //printSensors();
  }
  Serial.println("Collecting Summer Readings");
  for (int i = 0; i < cts; i++) {
    if (i % 4 == 0) {
      Serial.print("|");
    }
    updateSensors();
    temp1 += SENSE1;
    temp2 += SENSE2;
    temp3 += SENSE3;
  }
  Serial.println(" ");
  Serial.println("Averaging thresholds");
  THRESH1 = mult * (temp1 / cts);
  THRESH2 = mult * (temp2 / cts);
  THRESH3 = mult * (temp3 / cts);
  printThresh(THRESH1, THRESH2, THRESH3);
  printThresh(THRESH1 / mult, THRESH2 / mult, THRESH3 / mult);
  Serial.println(" ");
  // Light the LEDs in sequence to signal that calibration is done
  digitalWrite(ledONE, HIGH);
  delay(80);
  digitalWrite(ledTWO, HIGH);
  delay(80);
  digitalWrite(ledTHREE, HIGH);
  delay(80);
  Serial.println("Ready!");
}
```

Thank you to people who helped, and others!!!!

Golan Levin

Claire Hentschker

Zachary Rapaport

Gray Crawford

Daiki Itoh

Lucas Ochoa

Lucy Yu

Jake Scherlis

Imin Yeh

Jesse Klein

Dan Lockton

FRFAF

URO-SURF

Relax from all of those CMU Fine Arts crits in the Great Hall AR bathhouse.

Using the Unity engine and the Vuforia library, I created a bathhouse that can be placed in the Great Hall in CMU’s CFA building. The idea came from feeling that there weren’t really many places to feel calm and relaxed. The shape of the model is rather simple, but it uses stencil buffers to create the effect of an in-ground pool. The buffers are applied to the pool itself and to the water dropping into it. On the outside of the bathhouse, the reflection is created with an image-based lighting technique: a panoramic image of the area was taken and converted to a cubemap, and the shader then reflects the cubemap based on the cubemap’s location relative to the pixel’s location and the surface normal.
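The reflection comes down to one vector formula per pixel: reflect the camera-to-pixel direction about the surface normal, then use the result to look up the cubemap. The C++ sketch below is an illustration, not the project’s shader: it assumes a standard (infinitely distant) cubemap and only reports which face would be sampled. A location-based correction, such as box projection relative to where the panorama was captured, is left out, and all function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <string>

struct Vec3 {
    double x, y, z;
};

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    double len = std::sqrt(dot(v, v));
    assert(len > 0.0);
    return (1.0 / len) * v;
}

// Reflect the view direction about the surface normal: R = I - 2 (N . I) N.
// Both inputs are expected to be unit length.
Vec3 reflect(Vec3 incident, Vec3 normal) {
    return incident - (2.0 * dot(normal, incident)) * normal;
}

// Pick the cubemap face a direction samples from (largest component wins).
std::string cubemapFace(Vec3 d) {
    double ax = std::fabs(d.x), ay = std::fabs(d.y), az = std::fabs(d.z);
    if (ax >= ay && ax >= az) return d.x > 0 ? "+X" : "-X";
    if (ay >= az) return d.y > 0 ? "+Y" : "-Y";
    return d.z > 0 ? "+Z" : "-Z";
}

// Per-pixel lookup: camera-to-pixel direction, reflected about the normal.
std::string reflectedFace(Vec3 cameraPos, Vec3 pixelPos, Vec3 normal) {
    Vec3 incident = normalize(pixelPos - cameraPos);
    return cubemapFace(reflect(incident, normalize(normal)));
}
```

For example, a camera looking straight at a wall whose normal points back toward it samples the face behind the camera, which is why the outside of the bathhouse appears to mirror the room.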

The resources I used:

For assembling the in-ground pool and this too

Also a huge thanks to Golan, Claire, Joe Doyle, and tesh for help on shaders

Word Search

This is an app that takes words from real life and uses them to compose Dada-style poetry.

Here are some shots of the app:

Here’s a mostly silent video showing the app in action:

In this app, you go around finding words that you might like to include in your generative poems, which, in the Dada style, are random arrangements of words. You tap your phone screen to save these words. When you are ready to generate a poem using some of these words, you may tap the orange hammer icon and see what the app churns out for you.
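At its simplest, a Dada-style generator just shuffles the saved words. The sketch below is a hypothetical stand-in for the app’s generator: it shuffles with a seeded `std::mt19937` so a poem can be reproduced, and the fixed words-per-line break is an assumption, not something the source describes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Dada-style poem: shuffle the saved words, then break them into lines.
std::vector<std::string> dadaPoem(std::vector<std::string> savedWords,
                                  std::size_t wordsPerLine,
                                  unsigned seed) {
    assert(wordsPerLine > 0);
    std::mt19937 rng(seed);
    std::shuffle(savedWords.begin(), savedWords.end(), rng);

    std::vector<std::string> lines;
    std::string current;
    for (std::size_t i = 0; i < savedWords.size(); ++i) {
        if (!current.empty()) current += ' ';
        current += savedWords[i];
        if ((i + 1) % wordsPerLine == 0) {  // line is full
            lines.push_back(current);
            current.clear();
        }
    }
    if (!current.empty()) lines.push_back(current);  // leftover words
    return lines;
}
```

Every saved word appears exactly once; only the order and line breaks change, which keeps the poem tied to the words a particular user found in a particular place.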

This is a project that I have been working on for the last five weeks. It started out as a game in which you would be given a scrambled list of words from an unknown poem from the 19th century and, using your phone, have to go out into the world and find all the words in order to have the list unscrambled and the composition of the poem revealed.

For that project, which has a brief documentation video below, I integrated the Google Cloud Vision API, which allowed me both to read the words captured by the app and to get the coordinates of their bounding boxes in the captured image. I also made requests to a poetry API so that on each load of the game, the player was given a new, random scrambled poem to discover.

I was using the images of the words to check off found words in the player’s inventory when I reached a point where I just wasn’t sure if completing the discoverable poem app was the right way to continue this project. At the same time, I had been pushing back against Golan’s suggestions that I do some generative poetry with my found words because I thought that whatever I could generate would make for some bad poetry.

This is an early process flow that I created. I cut some of the elements from this chart early on, including animating the words using OpenCV.

But then Golan introduced me to Allison Parrish’s approach to conceptual poetry, which is to use generative poetry to explore the nonsensical, in the hope that those poem-writing robots return to us with something valuable. I think there is something worthwhile in making generative poems, even dead-simple ones that only arrange words randomly. And I was able to keep using the bounding boxes for the words, which was an added bonus.

In this last week, I tried to use Google’s ngrams datasets before settling on just a functional, totally random poem generator. Despite this, part of the charm of my app is that the poems it generates are not actually completely random; they are specific to the place and person of its user.

And here’s another page from my sketchbook; I typically work out my ideas with just a crazy amount of words, which I guess is fitting.

Acknowledgements

Thank you Golan Levin, sheep, phiaq, Coco, and my friends in the Studio for your help talking through this project, generating new ideas and new ways of looking at it, and helping me move forward when I felt like I was in a rut. Thank you especially to phiaq for helping me document this.

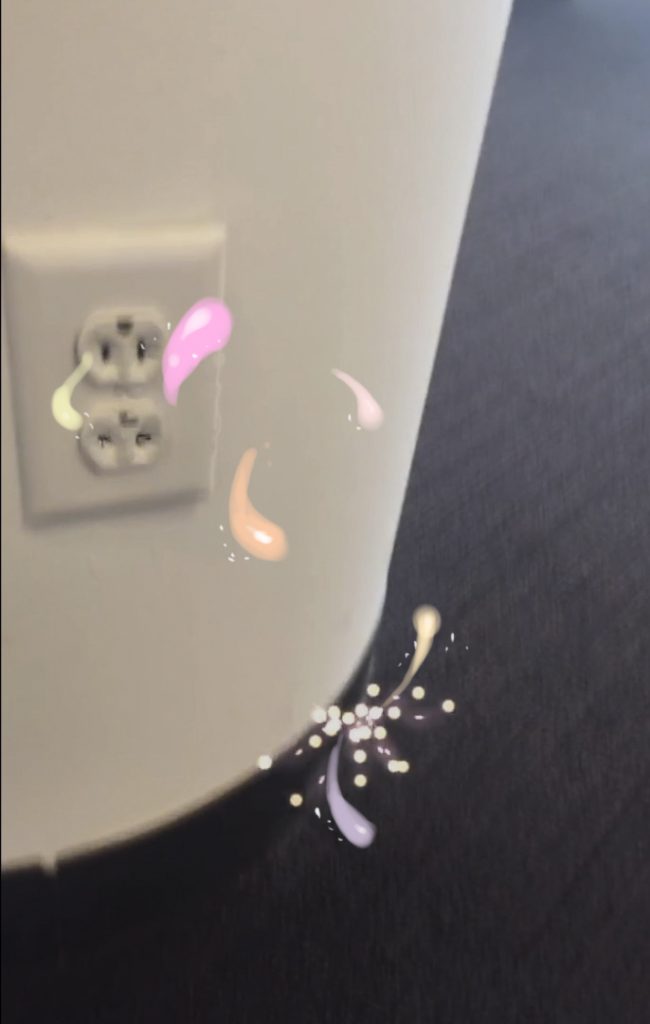

My final project visualizes a ghost-like, imaginary creature that lives in electric outlets and reacts when touched by users.

A screenshot

This was actually quite far from my original idea. At the beginning I brainstormed about fish and/or fireworks, as described in this post. After clearing some technical hurdles, I got something like this ↓, where the user uses the second image target (the lighter) to approach the first one (the firework) to light it and send it into the air.

However, the image targets were too obviously image targets (they didn’t fit the context well), and I couldn’t scale up the firework because 1) it doesn’t make sense to light huge fireworks indoors without burning the ceiling, and 2) Vuforia’s image target tracking doesn’t always work perfectly, so when the user looks up, the firework is very likely to explode somewhere totally unexpected.

So I abandoned most of the physical rules about how a firework should look (big thanks to everyone’s suggestions during our last critique). The “firework” no longer shoots from the ground but from an electric outlet instead, in the form of some fish-like flocking creatures. After a “fish” explodes (which happens when the user brings the lighter near it), it doesn’t disappear right away; it splits itself into more “fish,” ready to be lit again by the user and explode.
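The ignite-and-split rule can be expressed as one update step over the school of creatures. This is a hedged sketch, not the Unity code: positions are plain coordinates standing in for the two image targets’ transforms, and `igniteRadius`, `splitCount`, and `igniteNearby` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Fish {
    double x, y, z;
    int generation;  // how many explosions this lineage has gone through
};

double distanceTo(const Fish& f, double lx, double ly, double lz) {
    double dx = f.x - lx, dy = f.y - ly, dz = f.z - lz;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// One update step: every fish within igniteRadius of the lighter explodes and
// is replaced by splitCount new fish at the same spot; the rest are kept as-is.
std::vector<Fish> igniteNearby(const std::vector<Fish>& school,
                               double lx, double ly, double lz,
                               double igniteRadius, int splitCount) {
    assert(igniteRadius > 0.0 && splitCount >= 0);
    std::vector<Fish> next;
    for (const Fish& f : school) {
        if (distanceTo(f, lx, ly, lz) <= igniteRadius) {
            for (int i = 0; i < splitCount; ++i) {
                next.push_back({f.x, f.y, f.z, f.generation + 1});
            }
        } else {
            next.push_back(f);
        }
    }
    return next;
}
```

Because children inherit the exploded fish’s position, they start out near the lighter; a flocking step would then need to push them apart before the next check, or they would all ignite again on the following frame.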

Unfortunately, I lost my screenshots at this stage, but this is how it looked.

The scale problem remained: fireworks are supposed to be huge, loud, and overwhelming, but I can’t make anything huge, loud, and overwhelming because the iPhone’s viewport is tiny. To view something big, I need to keep turning the phone away from the image target, and when I do, Vuforia messes up the objects’ scales.

Although I still like fish and fireworks, on Golan’s advice I looked into alternatives that make more sense when visualized at a small scale.

I even tried a string of stones that can explode. Abandoned that idea quickly, though, because it makes even less sense to have stones dropping out of outlets.

Eventually I went with a transparent, ghost-like creature, which avoids the generic quality of pretty much any primitive 3D model and adds mysteriousness to the creature (I think). The creature can still be seen, though, because wherever it passes by the background is slightly warped, as shown in the video.

The way it’s implemented is very close to the fishworks idea, but instead of having a trail of balls moving around, there’s a trail of particle effects with Unity’s glass shader and a transparent but bump-mapped texture. Input for this program is just touch on screen, which then calls a raycasting function and detects if the ray hits the creature. Output other than the screen is vibration when a creature is touched. For this part I’m inspired by static electricity. Too bad I can’t actually produce that to shock the user.
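The touch test amounts to casting a ray from the camera through the tapped pixel and checking whether it hits the creature. In Unity this would typically go through `Camera.ScreenPointToRay` and `Physics.Raycast` against a collider, with `Handheld.Vibrate()` for the buzz. The plain C++ sketch below instead approximates the creature as a sphere (an assumption) to show the intersection math; `rayHitsSphere` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

// Does a ray (origin o, unit direction d) hit a sphere of radius r centred at c?
// Solves |o + t d - c|^2 = r^2, i.e. t^2 + 2 b t + cc = 0, for some t >= 0.
bool rayHitsSphere(Vec3 o, Vec3 d, Vec3 c, double r) {
    Vec3 oc = sub(o, c);
    double b = dot(oc, d);            // half the linear coefficient
    double cc = dot(oc, oc) - r * r;  // constant term
    double disc = b * b - cc;         // quarter discriminant (d is unit length)
    if (disc < 0.0) return false;     // the ray's line misses the sphere
    double sq = std::sqrt(disc);
    // At least one intersection must lie in front of the camera.
    return (-b - sq) >= 0.0 || (-b + sq) >= 0.0;
}
```

A tap that returns true would trigger the vibration; requiring a non-negative `t` keeps creatures behind the camera from reacting.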

I want to give great thanks to everyone in this class who gave me generous feedback in our last critique. I especially thank Jackalope and Erin Zhang (not in this class) for helping me brainstorm, and Conye for motivation as well as lending me her iPhone for testing and documentation, and of course Golan for his honest critique and suggestions.

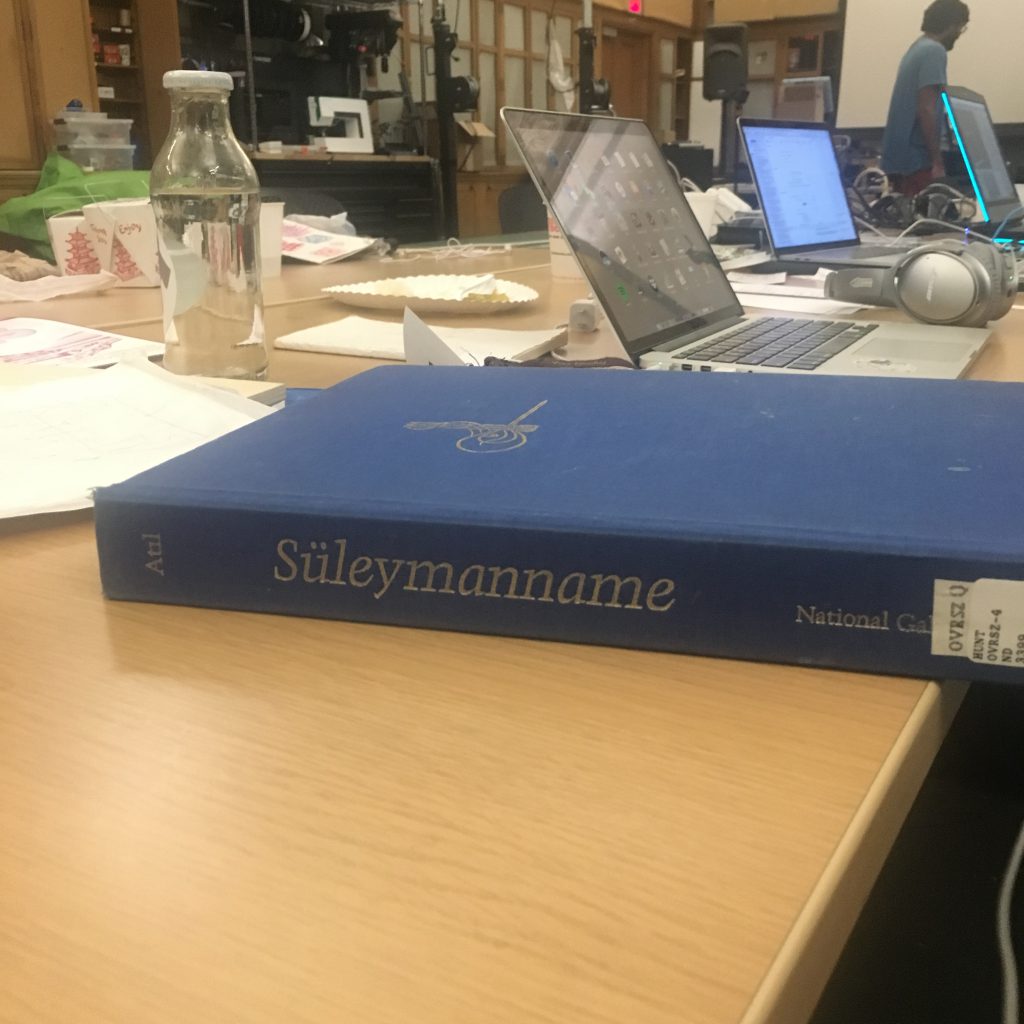

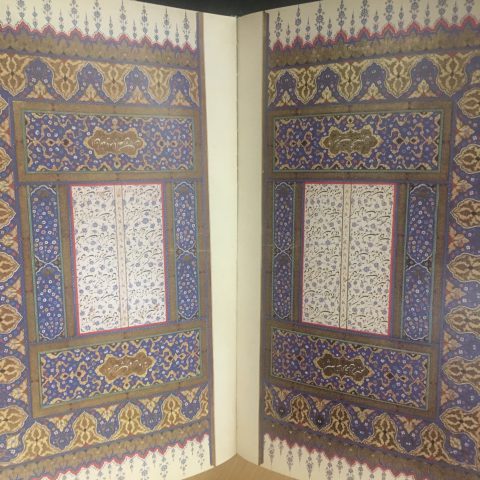

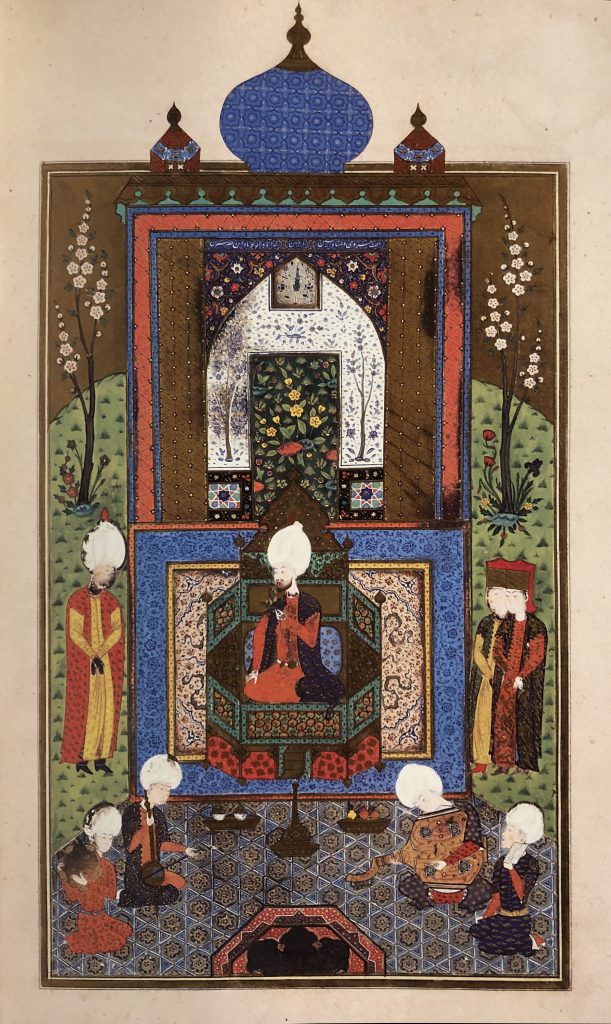

I love old illuminated manuscripts. I think they are beautiful. I love the occlusion of patterns, and I think AR might be a good way to highlight the layered geometries.

I went to the rare books collections in Hunt and got some help from the amazing librarian Mary Catharine Johnsen. We started with some religious texts, specifically The Book of Kells, but after receiving critique from class, I decided not to use anything of a religious nature.

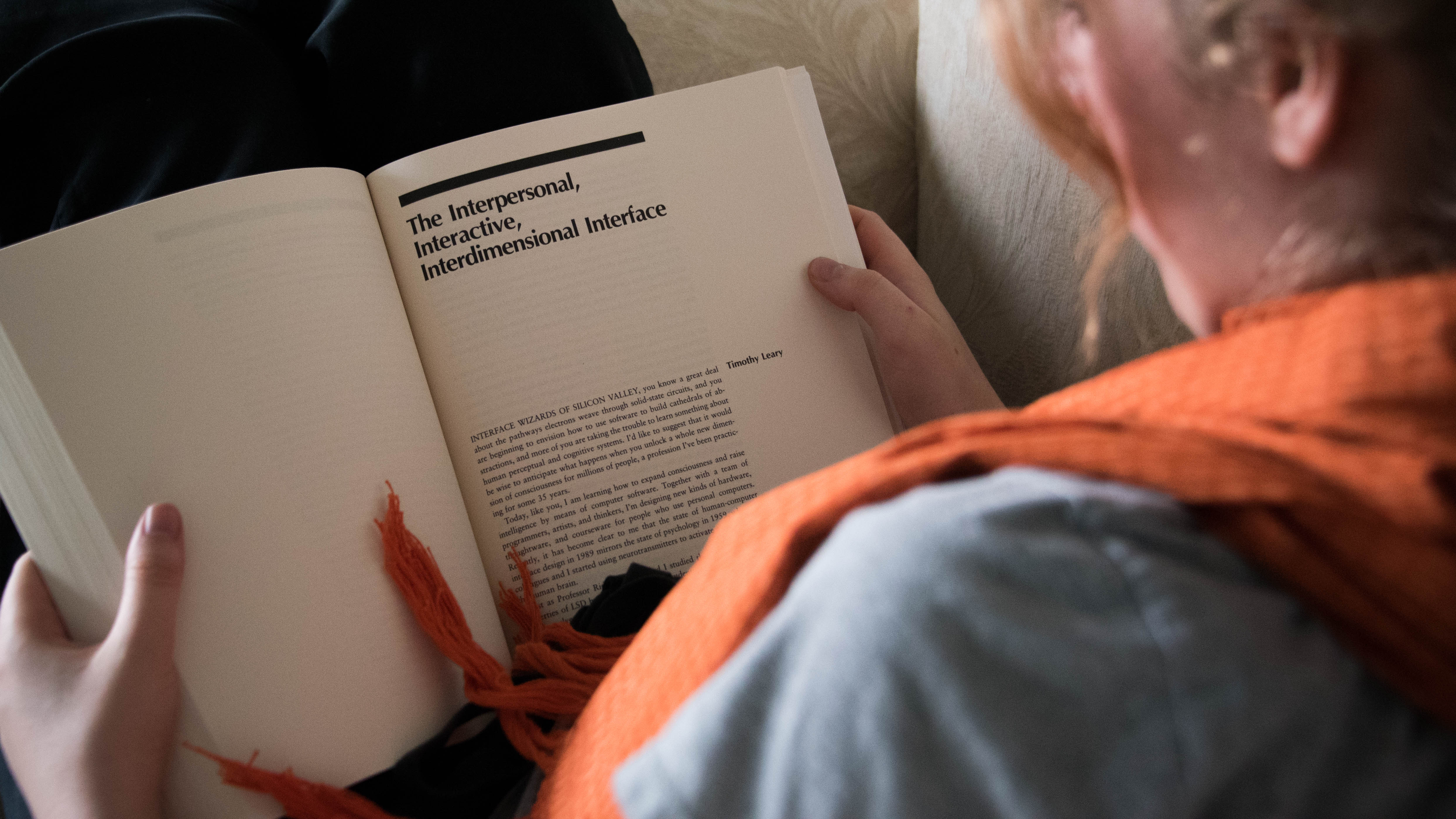

Then, after a bit of searching, I found this book.

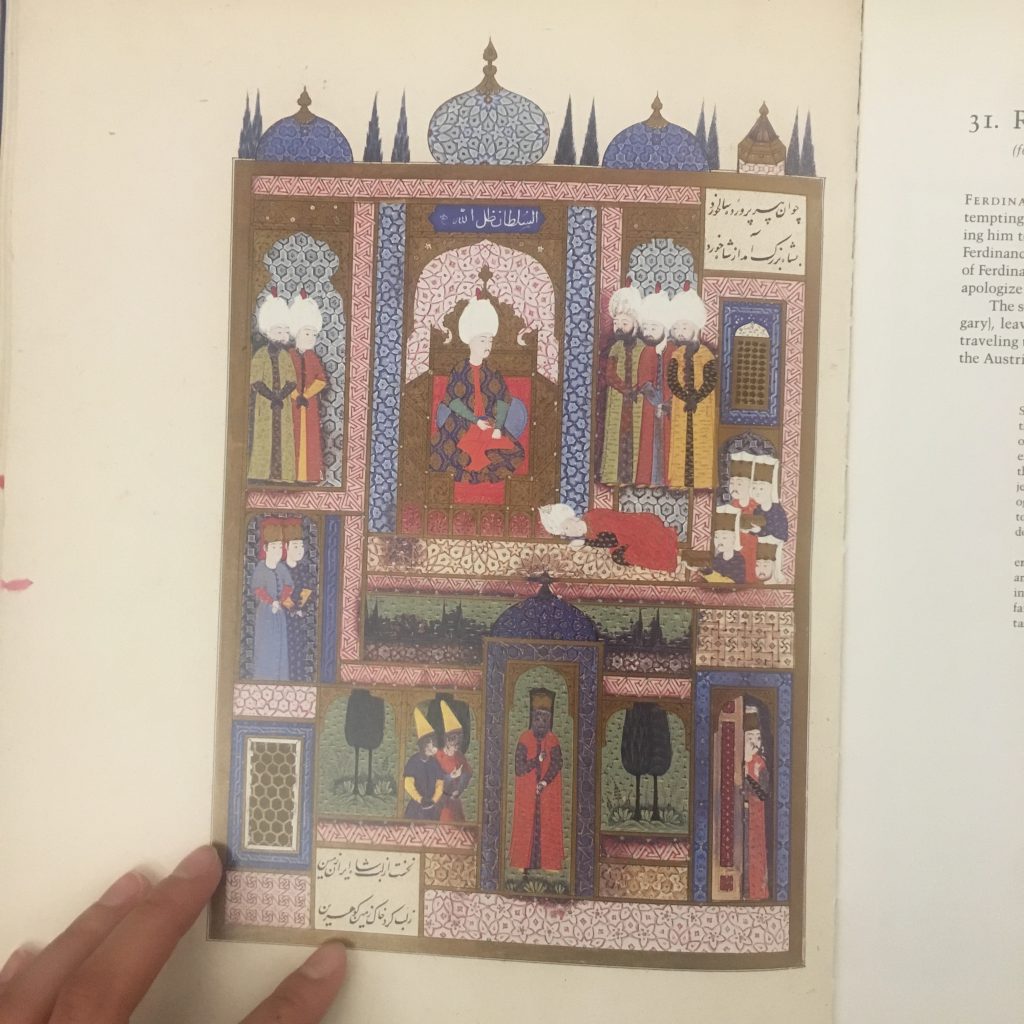

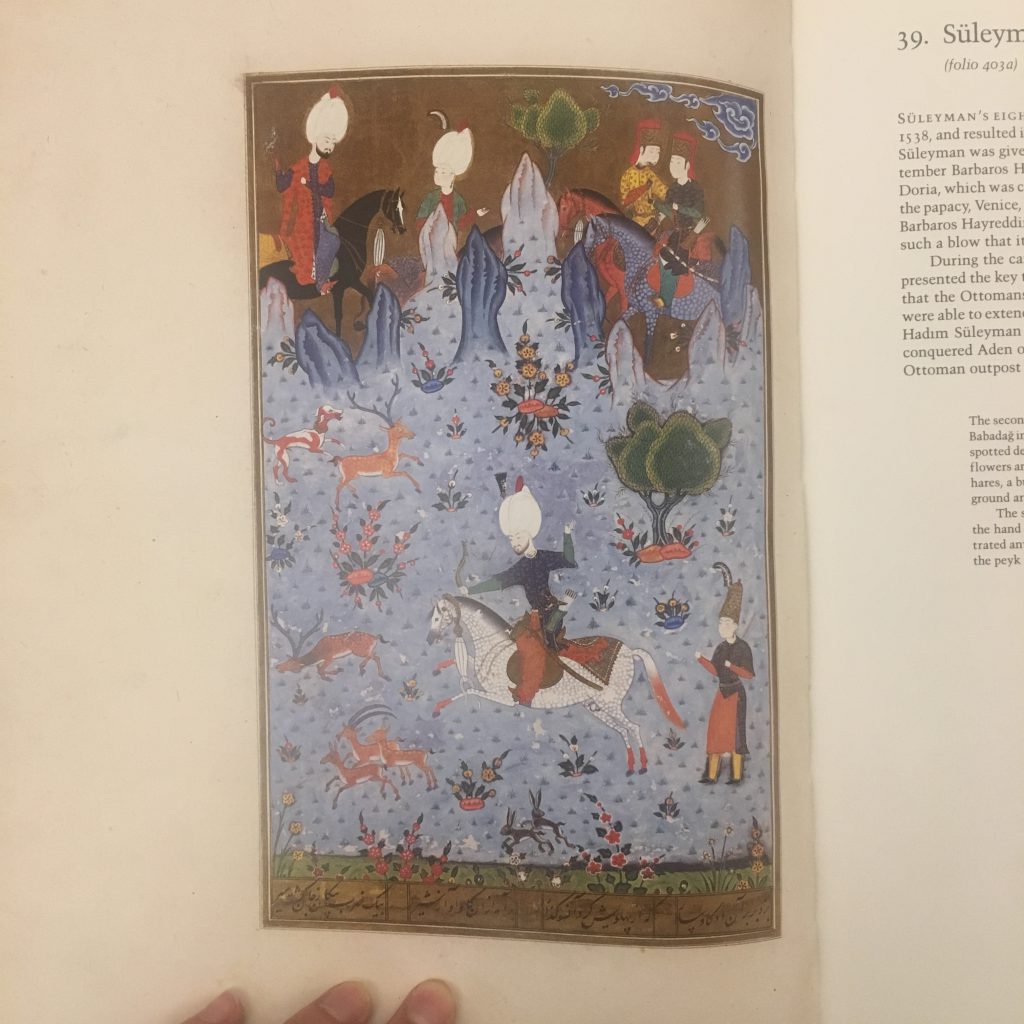

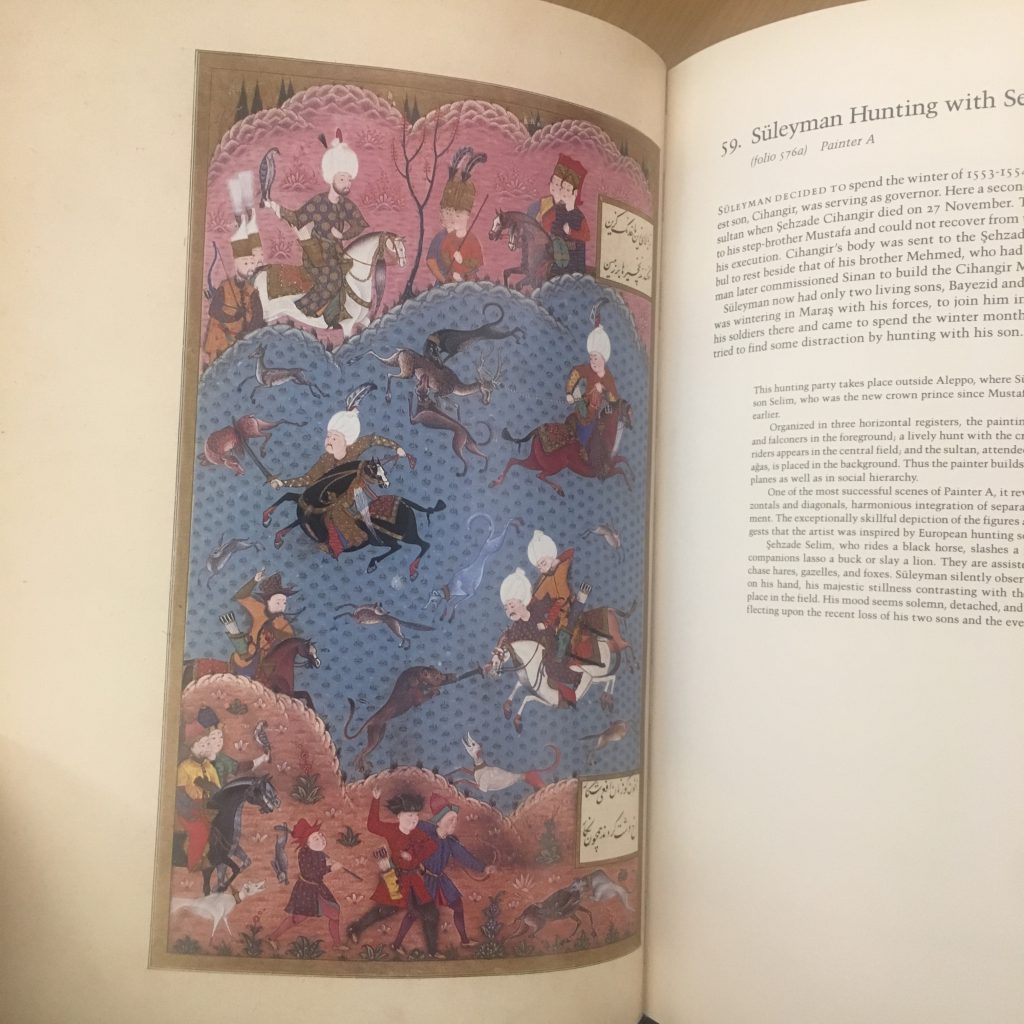

This book is a study of the illustrations in Arifi’s Süleymanname (lit. “Book of Suleiman”), which recreates many of the events, settings, and personages of Suleiman the Magnificent, longest-reigning Sultan of the Ottoman Empire from 1520 until his death in 1566.

I picked my favorite one.

This image describes the celebration of the circumcision of Süleyman’s three oldest princes. (tbh I didn’t know this was the content…I chose it initially simply because I thought it looked the prettiest…)

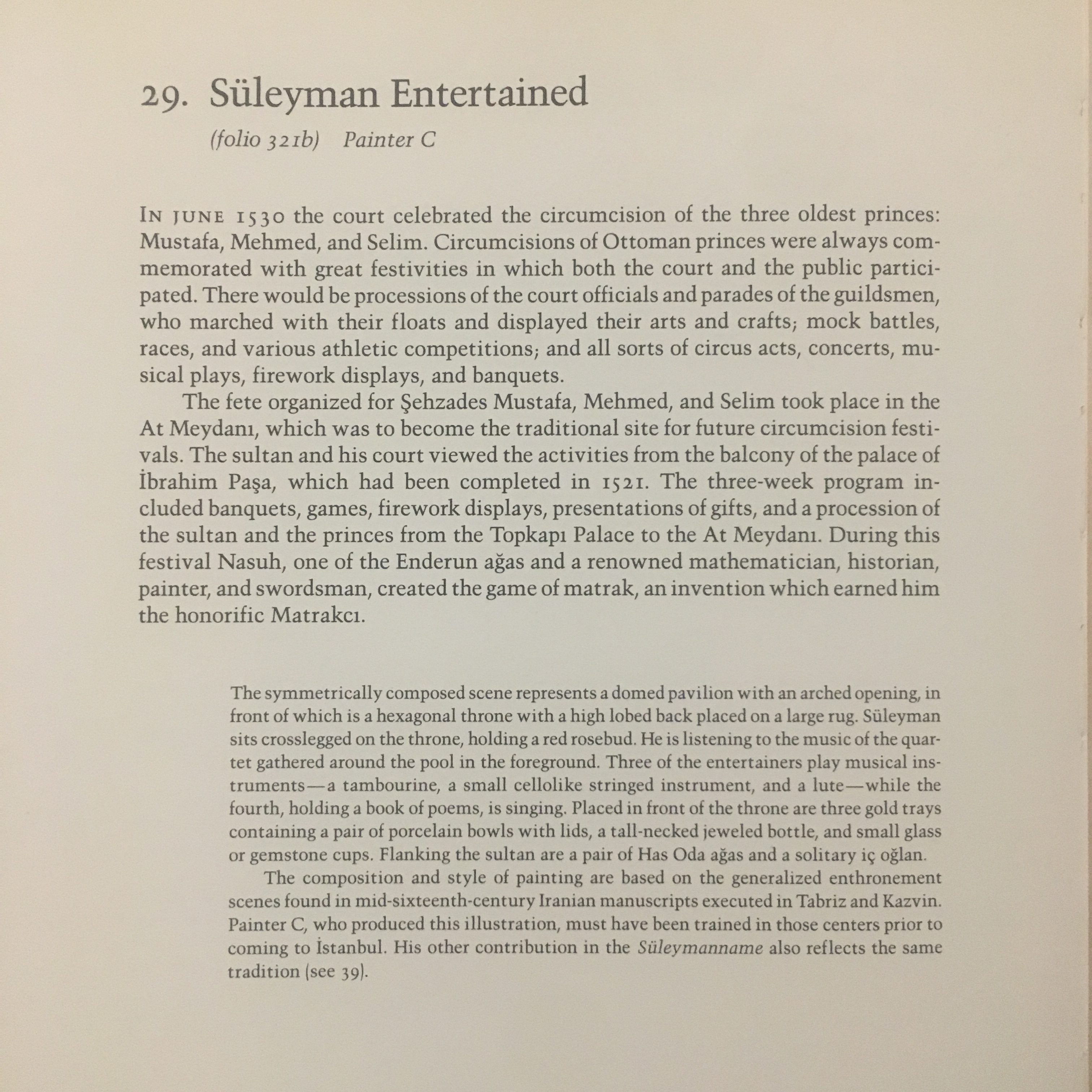

Here is a detailed description of the story behind the image.

I began by separating the layers.

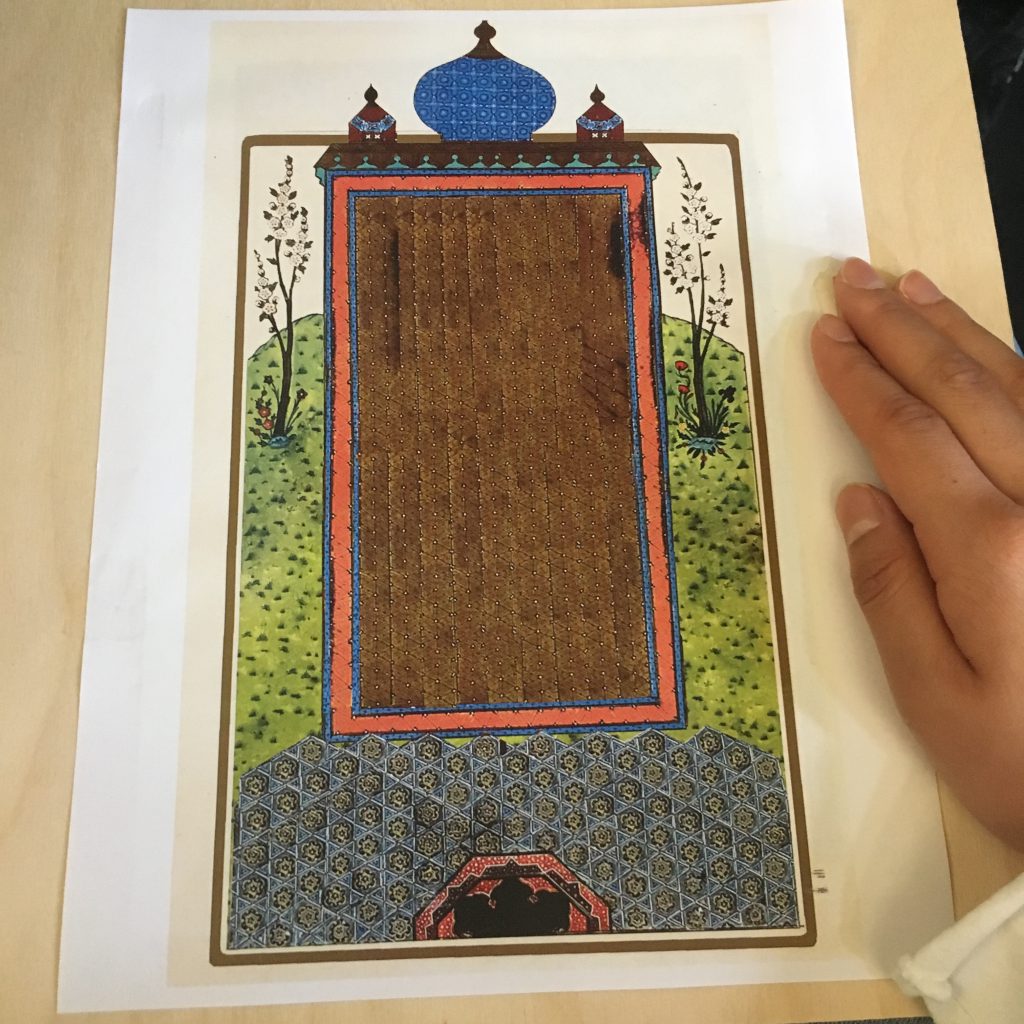

I used the bottom layers of the image as my target, so when you scan the bottom layers, they become 3D and more content comes up.

Here is how it looked when I tried it in Unity.

Currently this is simply an experimentation with aesthetics. What drew me to the materials and the idea initially was the rich history behind it, but the question that I don’t think I have explored enough is how to bring that history out, and celebrate it in some way.

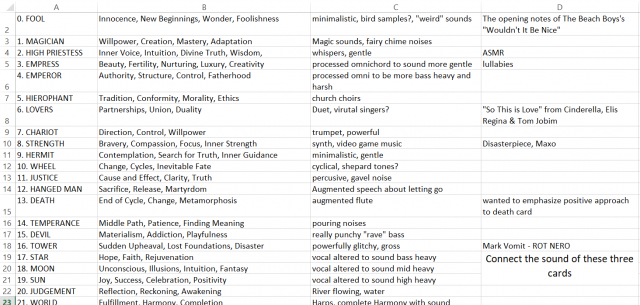

“An AR soundtrack to a tarot reading”

video documentation (headphones recommended):

Going into this piece, my main goal was to convey through sound the message that one can get from a tarot reading. As someone who casually practices tarot, I understand the significance that the images on the cards have in conveying meaning. The arrangement of cards also plays an important part in a reading. I spent a significant amount of time on this project trying to get Unity to communicate with Ableton Live through Open Sound Control. In the end, due to time and other circumstances, I was unable to complete this, and I decided that my energy was better spent creating an interesting soundscape.
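The Unity-to-Ableton link was never finished, but the messages it would carry are simple. The sketch below encodes a single OSC 1.0 message with one float argument: the address and type-tag strings are NUL-terminated and padded to a multiple of 4 bytes, and the float is written big-endian. The address `/tarot/card` and the function names are hypothetical, and sending the bytes (typically over UDP) and receiving them in Live are not shown.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC-string: the characters, a terminating NUL, then NUL padding to
// a multiple of 4 bytes. Every piece appended here is 4-byte aligned, so
// padding on out.size() lines up with the string's own padding.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    out.push_back(0);
    while (out.size() % 4 != 0) out.push_back(0);
}

// Append a float32 argument in big-endian byte order.
void appendOscFloat(std::vector<std::uint8_t>& out, float value) {
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
}

// Build a message such as "/tarot/card" carrying one float (e.g. a card's volume).
std::vector<std::uint8_t> oscFloatMessage(const std::string& address, float value) {
    assert(!address.empty() && address[0] == '/');
    std::vector<std::uint8_t> msg;
    appendOscString(msg, address);
    appendOscString(msg, ",f");  // type tag string: one float32 argument
    appendOscFloat(msg, value);
    return msg;
}
```

One message per card, sent whenever a card’s image target is found or lost, would be enough to drive clip or volume changes on the receiving side.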

I wanted to accomplish my original goal of having interesting sounds that could fit together in interesting ways while conveying each card’s meaning. I ended up making an Excel document with my concepts for each sound to guide me in the process of composing them. Although many of these sounds eventually changed, the Excel document was a good starting point and a chance for me to look for inspiration. I often worked with field recordings, altering them to fit the sound I wanted to achieve. I also took recordings from my own instruments, such as the flute and the omnichord.

Thanks to!

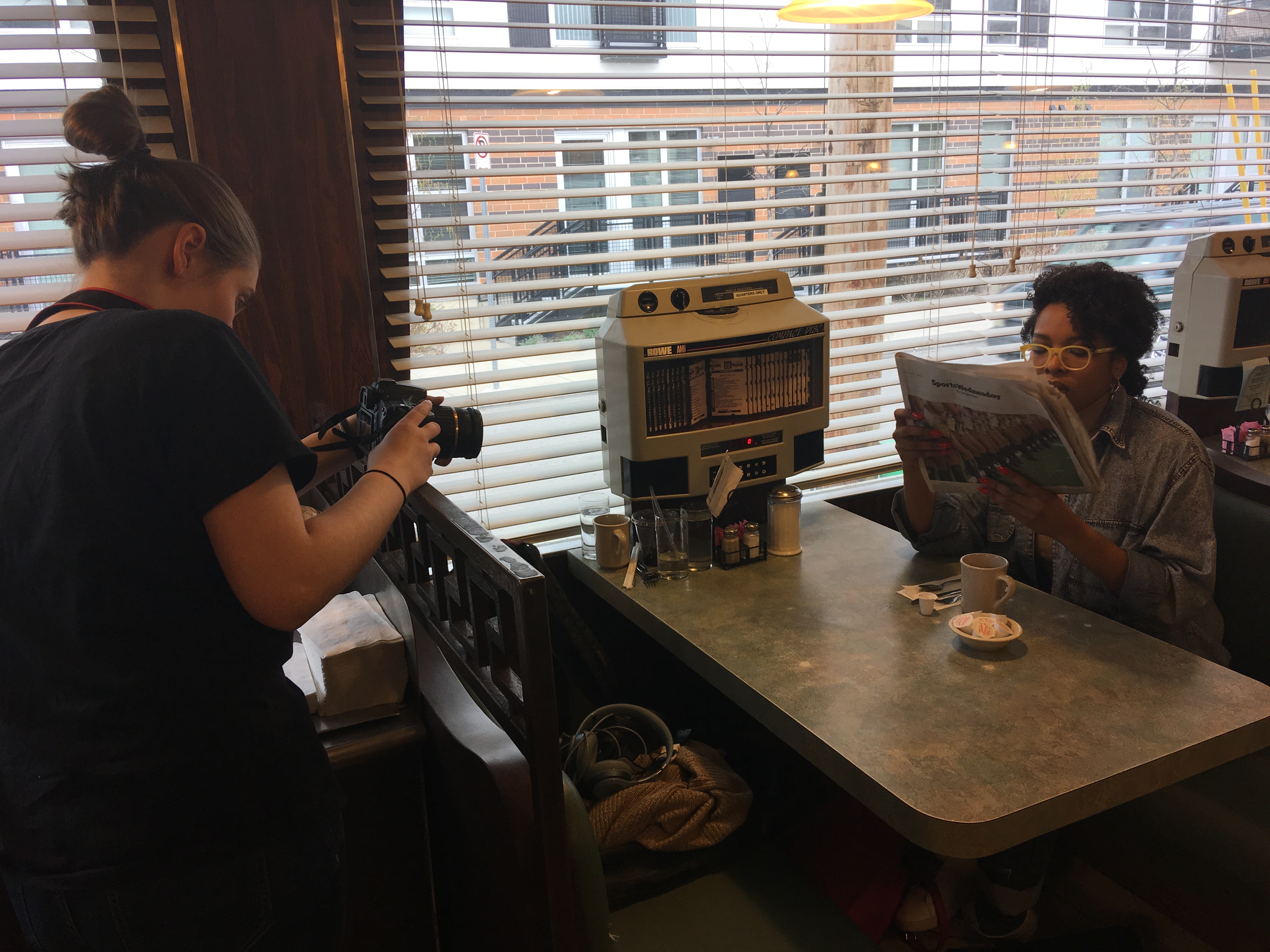

Curated compositions trigger image targets to reveal hidden intent in film.

For our final project, dechoes and I decided to continue with our last AR project. The last project involved telling a story through the curation of the scene and having the AR be different people’s interpretations of the setting. This was more of a demo for a potential interactive piece where people can input their own perspective onto a curated scene.

In progress AR:

We have been playing with the idea of seeing what is on screen vs. what we want people to notice that’s not visible on screen. The AR is supposed to reveal and highlight different moments within a curated scene in a specific situation. We are using screenshots from a short film as our image target to reveal new visuals. The different kinds of AR are triggered through the different compositions that naturally occur when a variety of items are juxtaposed together in a scene.

The first part of the project involved filming the actual scene and documenting a moment. Our scene takes place at a diner. The character is doing her usual morning routine and is there for a nice coffee. The AR represents and enhances different parts of the mundane ritual. Dechoes and I talked a lot about why we were using specific animations for each moment.

We wanted to find a way to integrate the AR into the different scenes instead of just pasting something random on top of an existing scene. The image targets are extremely specific moments that trigger the AR to appear on the screen. There were definitely some technical hurdles that we had to figure out. We played and experimented with a lot of different animations and effects in Unity but had trouble with them when we built the app.

complete ridiculous fails that look pretty sick:

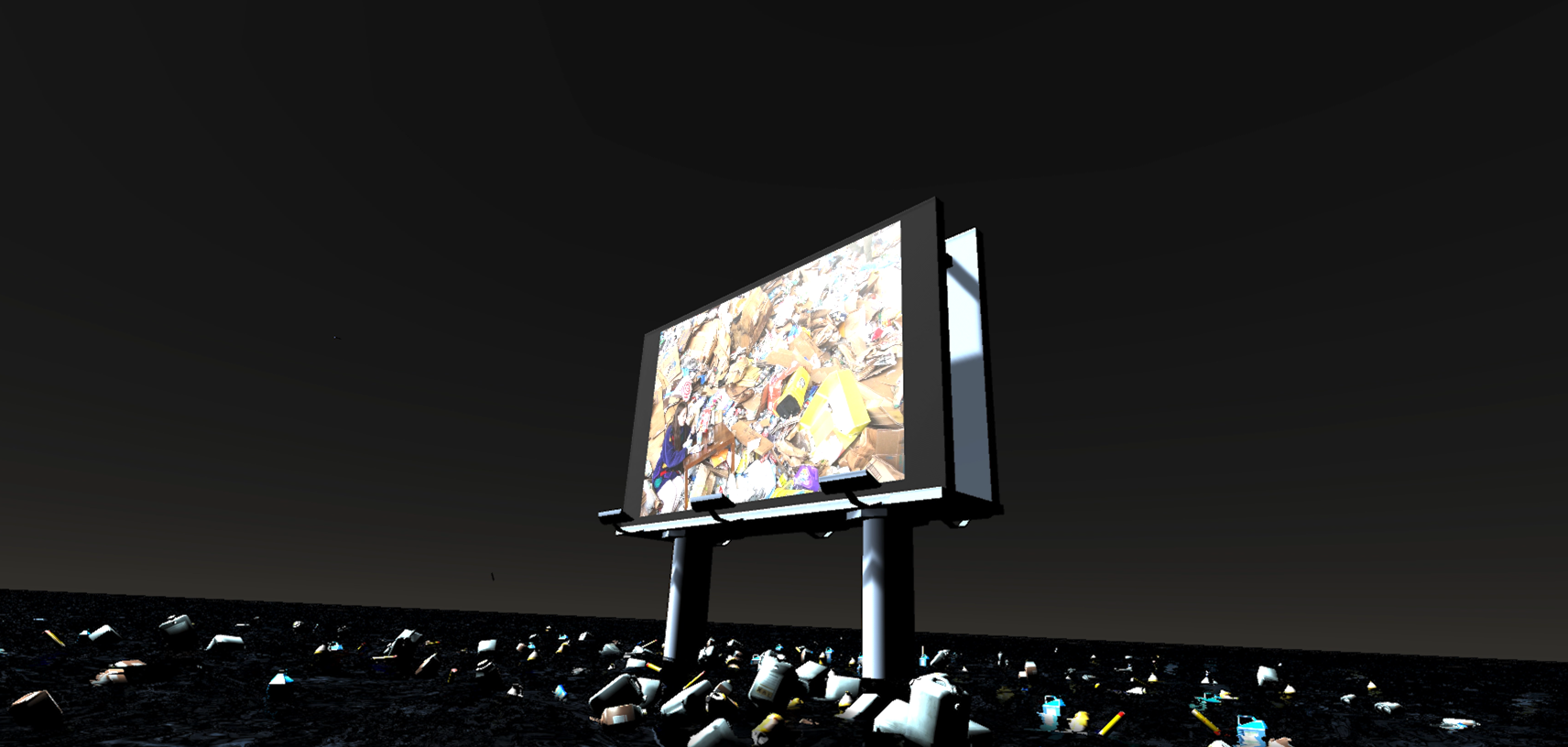

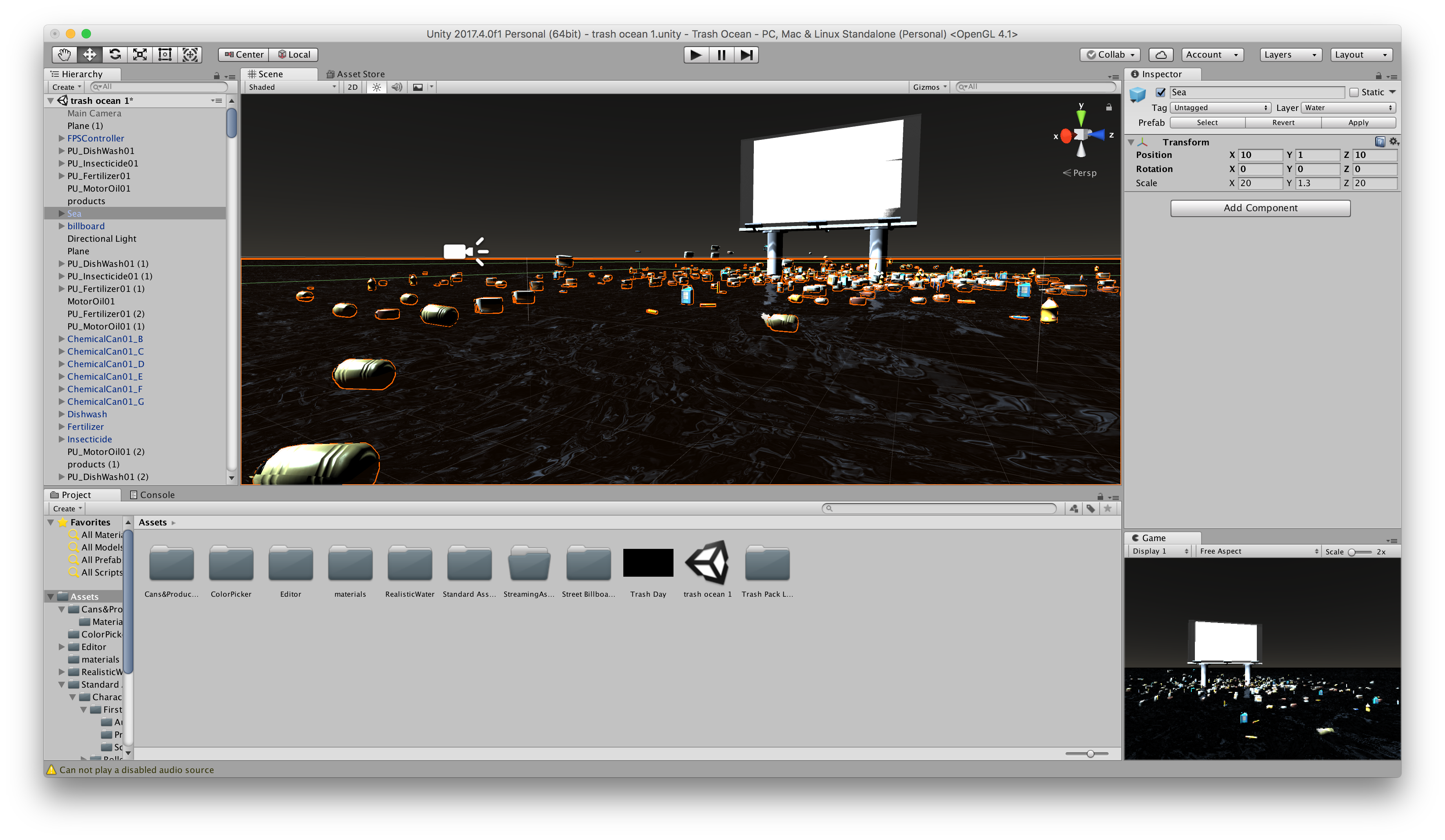

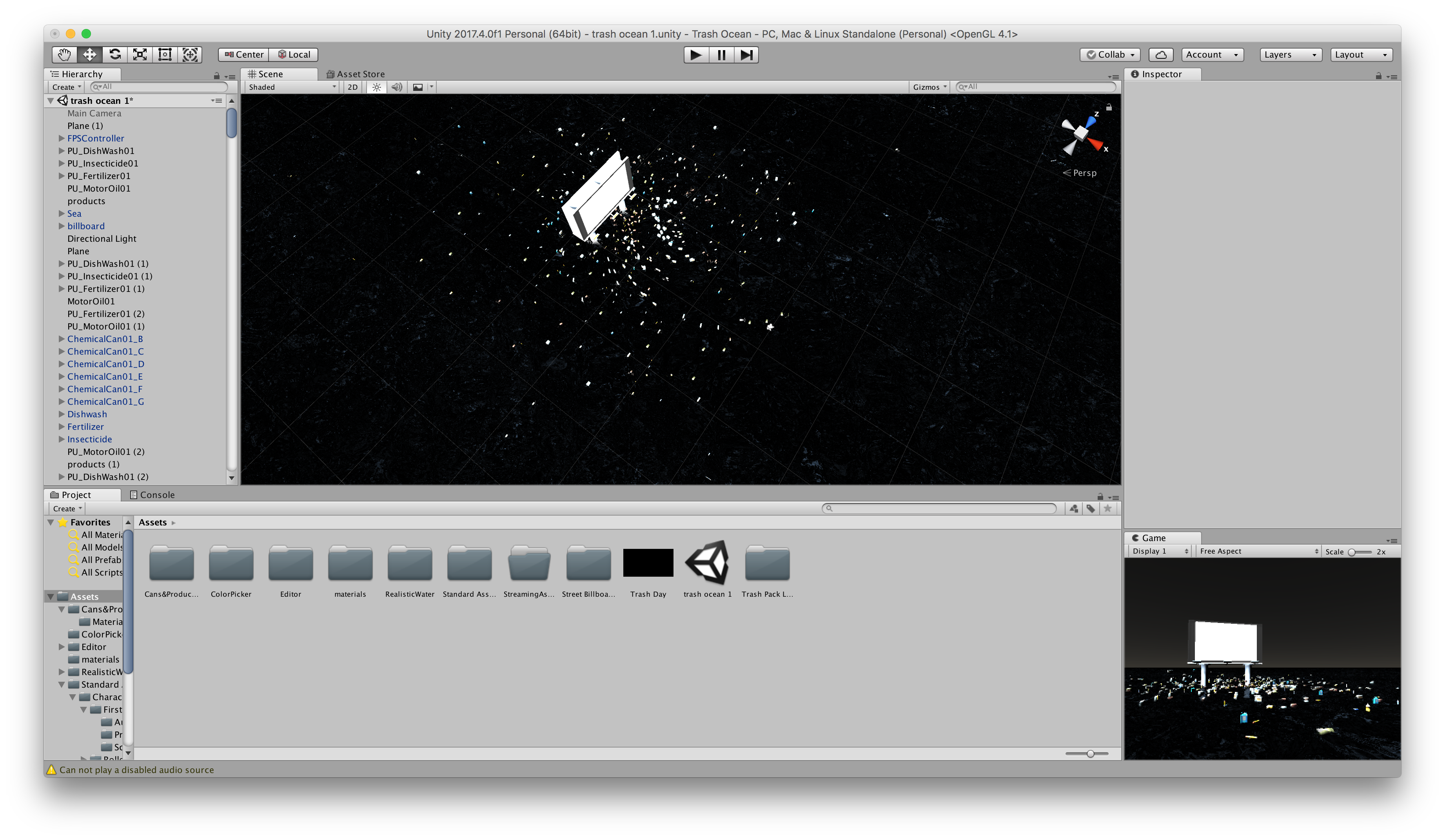

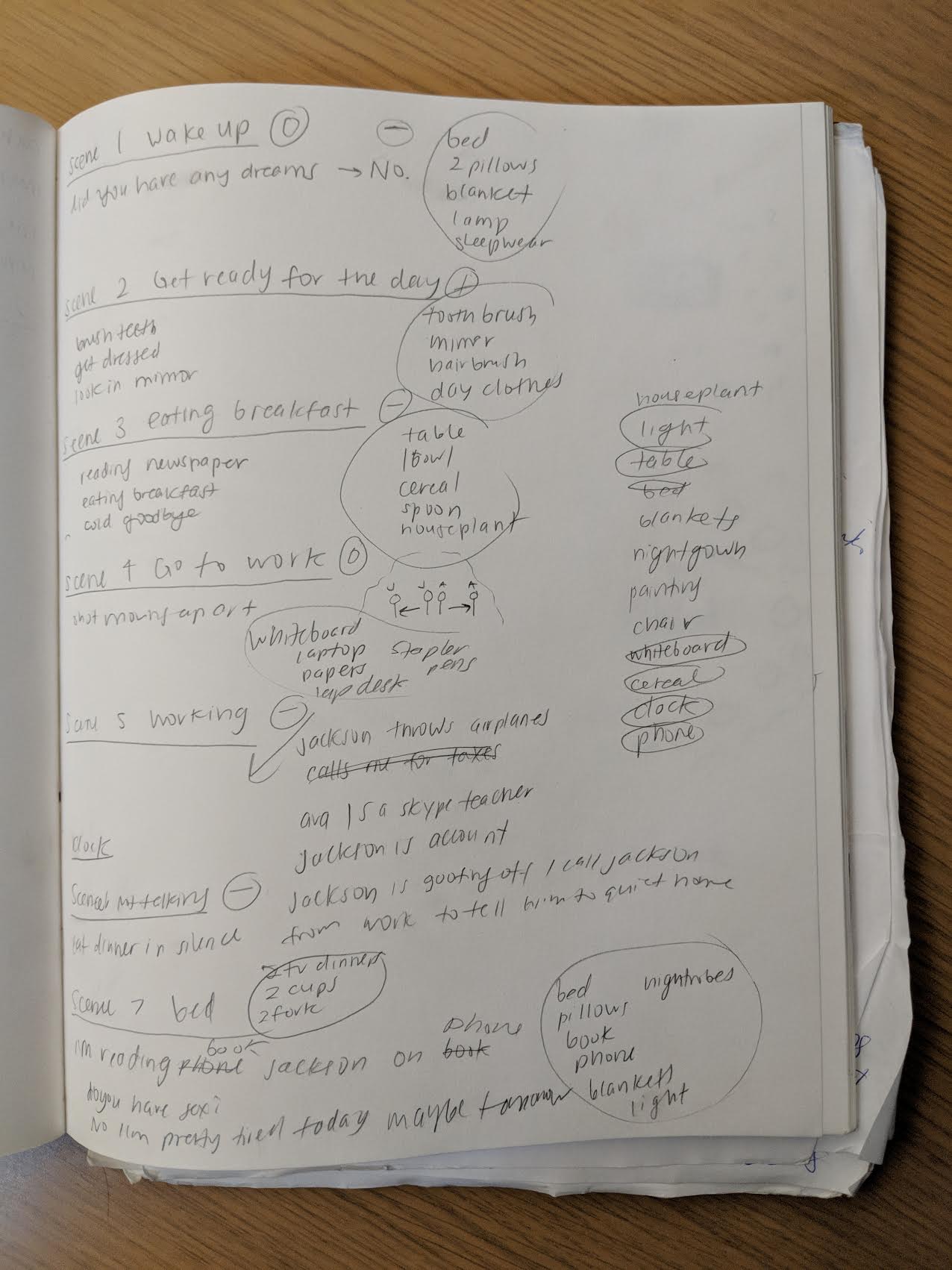

Trash Day

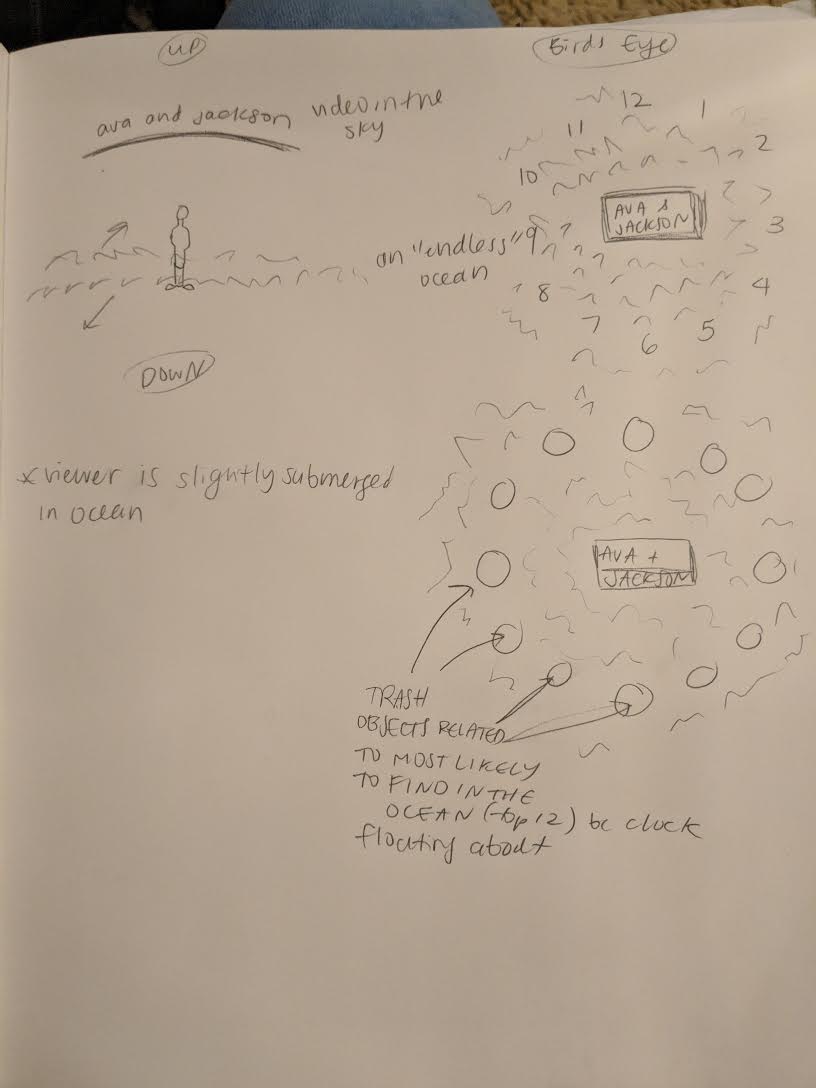

Trash Ocean

Some stills from the performance:

Behind the Scenes:

Scenes/Storyboardish/Prop list sketch

Sketch of VR

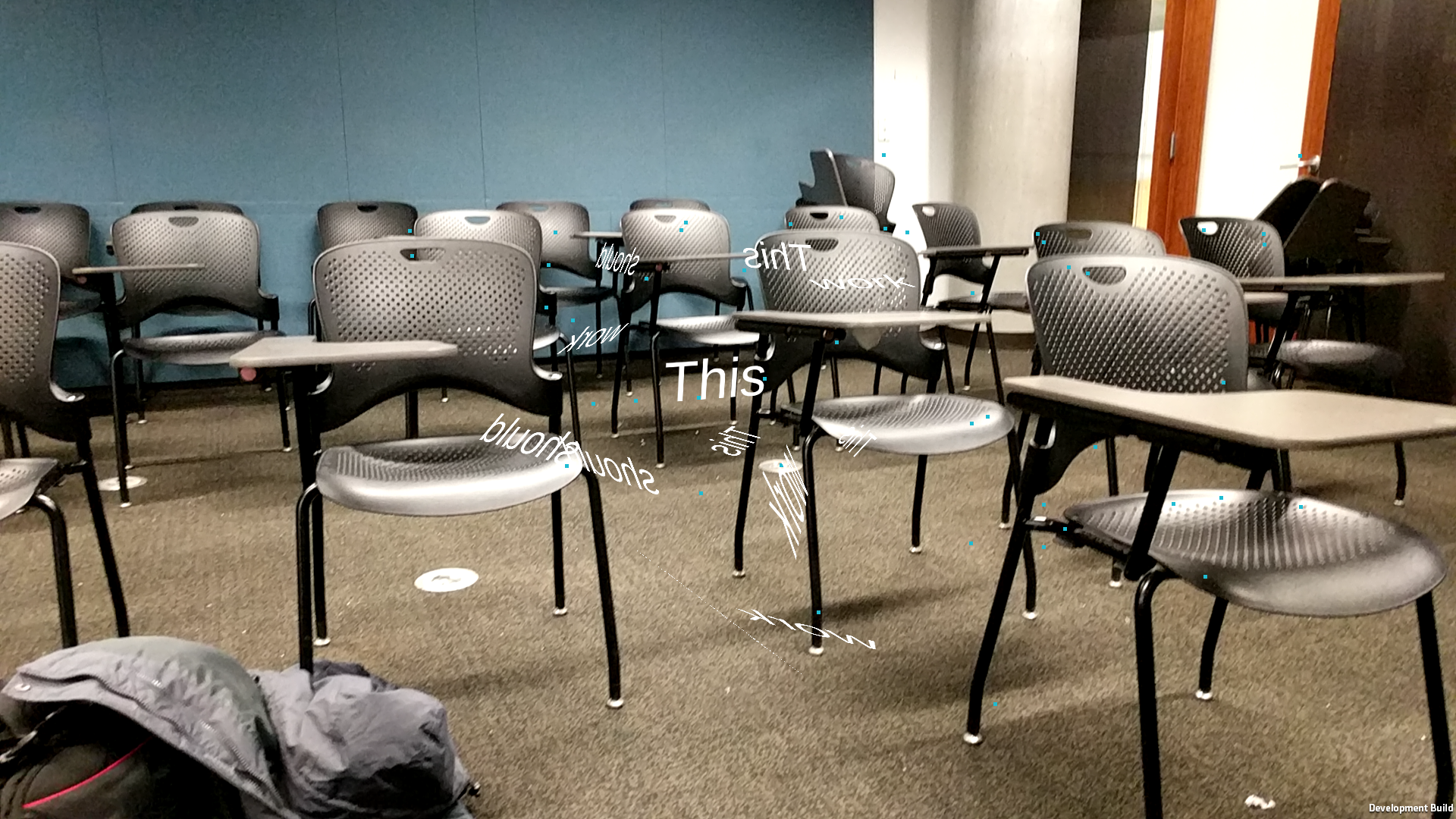

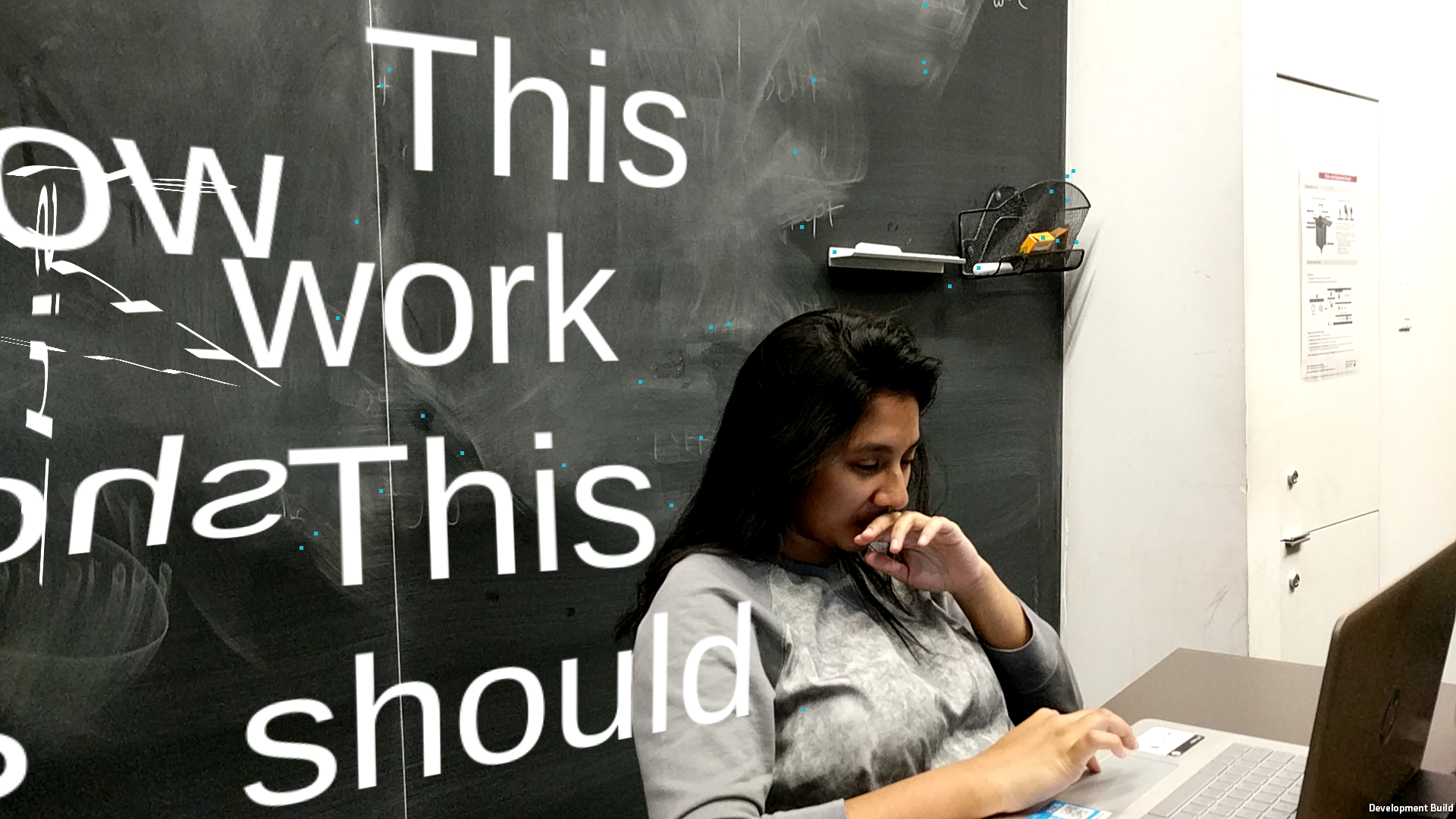

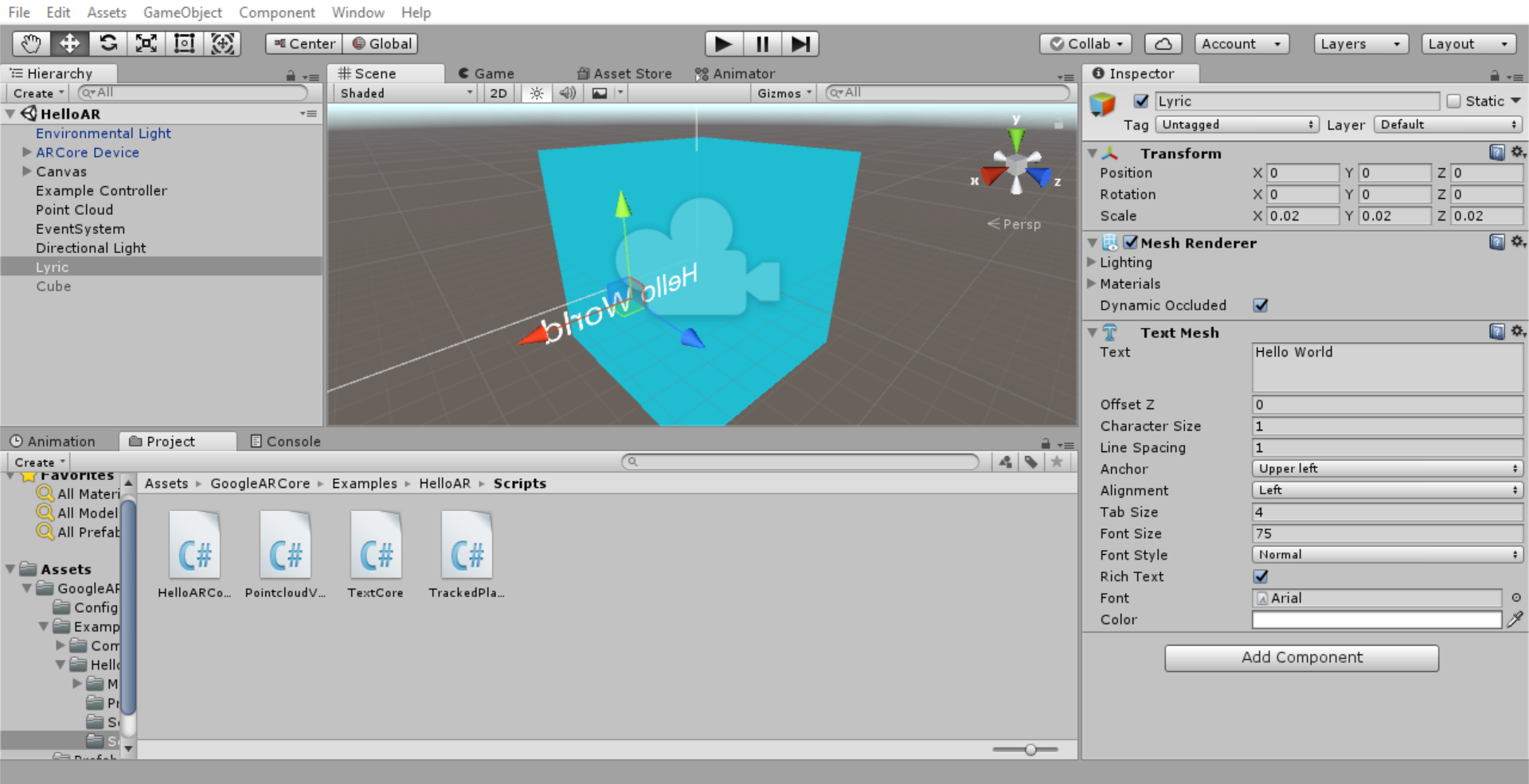

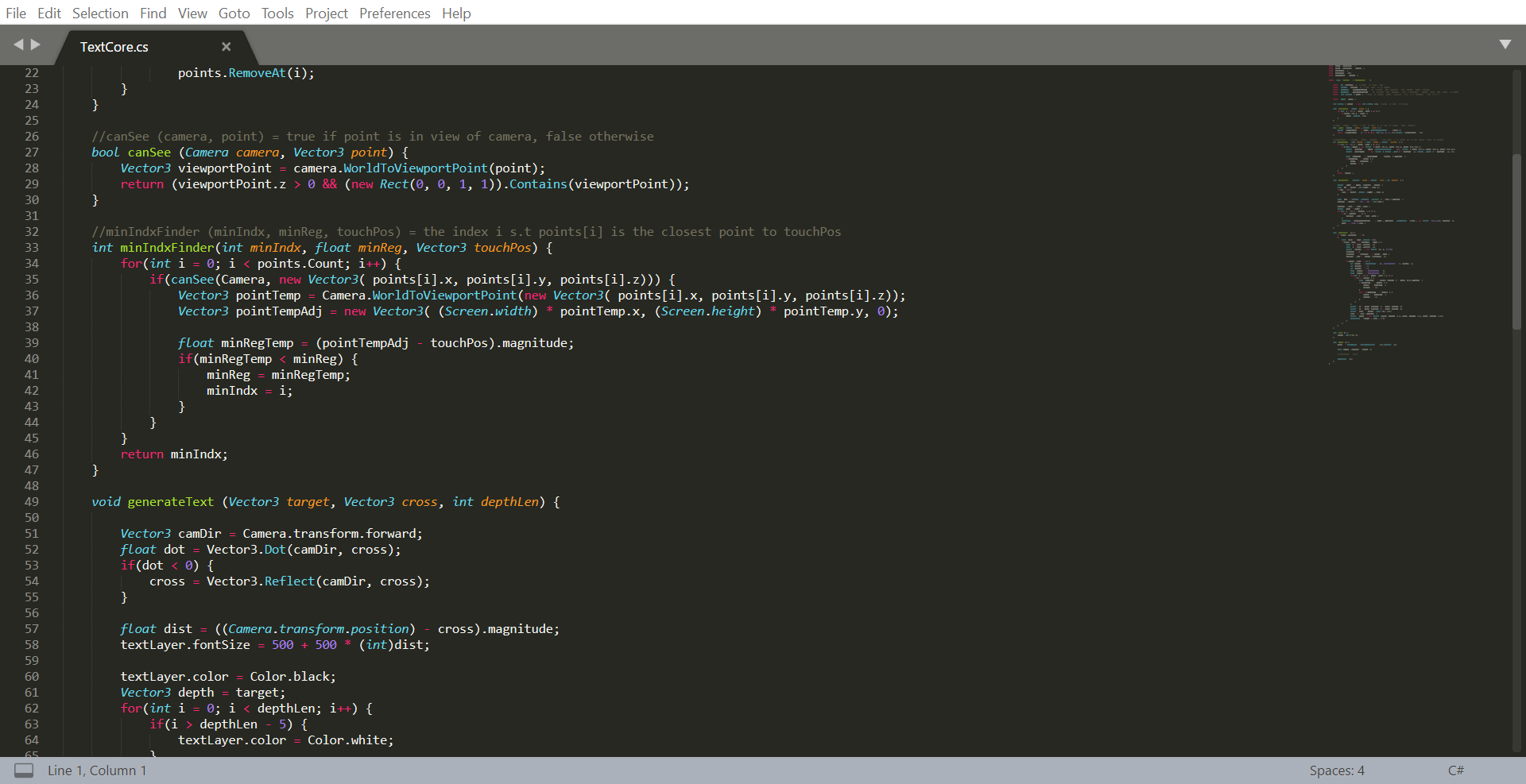

‘AR Typography is a mobile app that delivers real-time text motion tracking to video…’

AR Typography

AR Typography places text anywhere with a simple touch of the screen. Built on ARCore in Unity, it lets people place multiple layers of 3D text, each with its own unique content, into one environment. The text tracks changes in movement and maintains its position and rotation as you move around.

How It Works

AR Typography generates 3D text on flat surfaces (including walls) by intercepting the point cloud data from ARCore and creating an independent tracking plane for each object at the point where the screen was tapped. When the screen is tapped, the app projects the active 3D point cloud data (the points currently visible to the camera) onto a 2D surface and finds the point closest to where the screen was touched. Iterating over the point cloud, it then finds the two points nearest to that point and uses them to compute the cross product that best represents the surface orientation there. The point now carries both the position and the orientation used to generate the text.
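The tap-to-anchor step can be sketched outside ARCore with plain vectors. Everything here is an assumption for illustration: the pinhole `project` function stands in for the real camera projection, all points are assumed to be in front of the camera, and `closestToTap` and `anchorNormal` are hypothetical names. The resulting normal may also need flipping to face the viewer, since the cross product’s sign depends on the order of the two neighbours.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3 { double x, y, z; };
struct Vec2 { double x, y; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
double norm(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Simplified pinhole projection: camera at the origin looking down +z,
// focal length f. Assumes p.z > 0 (the point is in front of the camera).
Vec2 project(Vec3 p, double f) { return {f * p.x / p.z, f * p.y / p.z}; }

// Index of the cloud point whose projection lies closest to the tap.
std::size_t closestToTap(const std::vector<Vec3>& cloud, Vec2 tap, double f) {
    assert(!cloud.empty());
    std::size_t best = 0;
    double bestD = std::numeric_limits<double>::max();
    for (std::size_t i = 0; i < cloud.size(); ++i) {
        Vec2 s = project(cloud[i], f);
        double d = (s.x - tap.x) * (s.x - tap.x) + (s.y - tap.y) * (s.y - tap.y);
        if (d < bestD) { bestD = d; best = i; }
    }
    return best;
}

// Unit surface normal at the anchor, from its two nearest 3D neighbours.
Vec3 anchorNormal(const std::vector<Vec3>& cloud, std::size_t anchor) {
    assert(cloud.size() >= 3);
    std::size_t n1 = anchor, n2 = anchor;
    double d1 = std::numeric_limits<double>::max(), d2 = d1;
    for (std::size_t i = 0; i < cloud.size(); ++i) {
        if (i == anchor) continue;
        double d = norm(sub(cloud[i], cloud[anchor]));
        if (d < d1) { d2 = d1; n2 = n1; d1 = d; n1 = i; }
        else if (d < d2) { d2 = d; n2 = i; }
    }
    Vec3 n = cross(sub(cloud[n1], cloud[anchor]), sub(cloud[n2], cloud[anchor]));
    double len = norm(n);
    assert(len > 0.0);  // the three points must not be collinear
    return {n.x / len, n.y / len, n.z / len};
}
```

Using only the two nearest neighbours is fast but sensitive to noise in the point cloud; averaging normals from a few more neighbours would be a natural refinement.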

In an effort to be able to film shots better without interruptions, I have effectively removed the point cloud and tracking plane visualizations.

AR Typography loads multiple strings of text into a list on startup. The active text cycles through the list of strings, and a text object is generated with the contents of the active text. This allows multiple pieces of text to be stored and placed effectively in one scene.
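Assuming the active text simply steps through the loaded strings and wraps around, the text store reduces to an index taken modulo the list size. `TextCycler` is a hypothetical name for this sketch, not a class from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Holds the strings loaded at startup and hands them out in turn,
// wrapping back to the first after the last (index modulo list size).
class TextCycler {
public:
    explicit TextCycler(std::vector<std::string> texts) : texts_(std::move(texts)) {
        assert(!texts_.empty());
    }

    // Content for the next placed text object.
    const std::string& next() {
        const std::string& s = texts_[index_];
        index_ = (index_ + 1) % texts_.size();
        return s;
    }

private:
    std::vector<std::string> texts_;
    std::size_t index_ = 0;
};
```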

To create a 3D effect for the text, a white text layer is placed at position p with orientation r, and 15 additional layers of black text are placed at positions p − (i+1)·k·r with the same orientation, where r here acts as the text’s facing direction, i is the layer index in [0, 14], and k is a constant spacing.
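The layer positions follow directly from that formula. In this sketch, `r` is taken to be the text’s unit facing direction (an assumption, since a rotation cannot be subtracted from a position directly), and `extrusionLayers` is a hypothetical helper that returns the white front layer followed by the black layers.

```cpp
#include <cassert>
#include <vector>

struct Vec3 { double x, y, z; };

// Index 0 is the white front layer at p; indices 1..layers are the black
// copies at p - (i + 1) * k * r for i = 0 .. layers - 1.
std::vector<Vec3> extrusionLayers(Vec3 p, Vec3 r, double k, int layers = 15) {
    assert(layers >= 0 && k > 0.0);
    std::vector<Vec3> out;
    out.push_back(p);  // white layer
    for (int i = 0; i < layers; ++i) {
        double s = (i + 1) * k;  // distance behind the front layer
        out.push_back({p.x - s * r.x, p.y - s * r.y, p.z - s * r.z});
    }
    return out;
}
```

With k = 0.01 and the default 15 layers, the stack is 0.15 units deep, so k directly controls how thick the letters look.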

Visual Development

Rendering Environments

Final Product

AR Typography is a mobile app that delivers real-time text motion tracking to video, allowing for the creation of immersive, real-life kinetic typography videos for any videographer. To demonstrate this, I put together my own real-life kinetic typography video.

Far

In Collaboration With

AZScreenRecorder – Mobile Screen Capture

cmuTV – Found Footage

Carnegie Mellon – Logos, Branding, etc.

Friends of the Studio – For Being so Friendly

And to Viewers Like You : )

Note: This project is also my Engineering & Arts capstone, which I worked on as a part of 60-212.

Sometimes, people need to send a message that can’t be expressed as a text or an emoji. It’s a feeling.

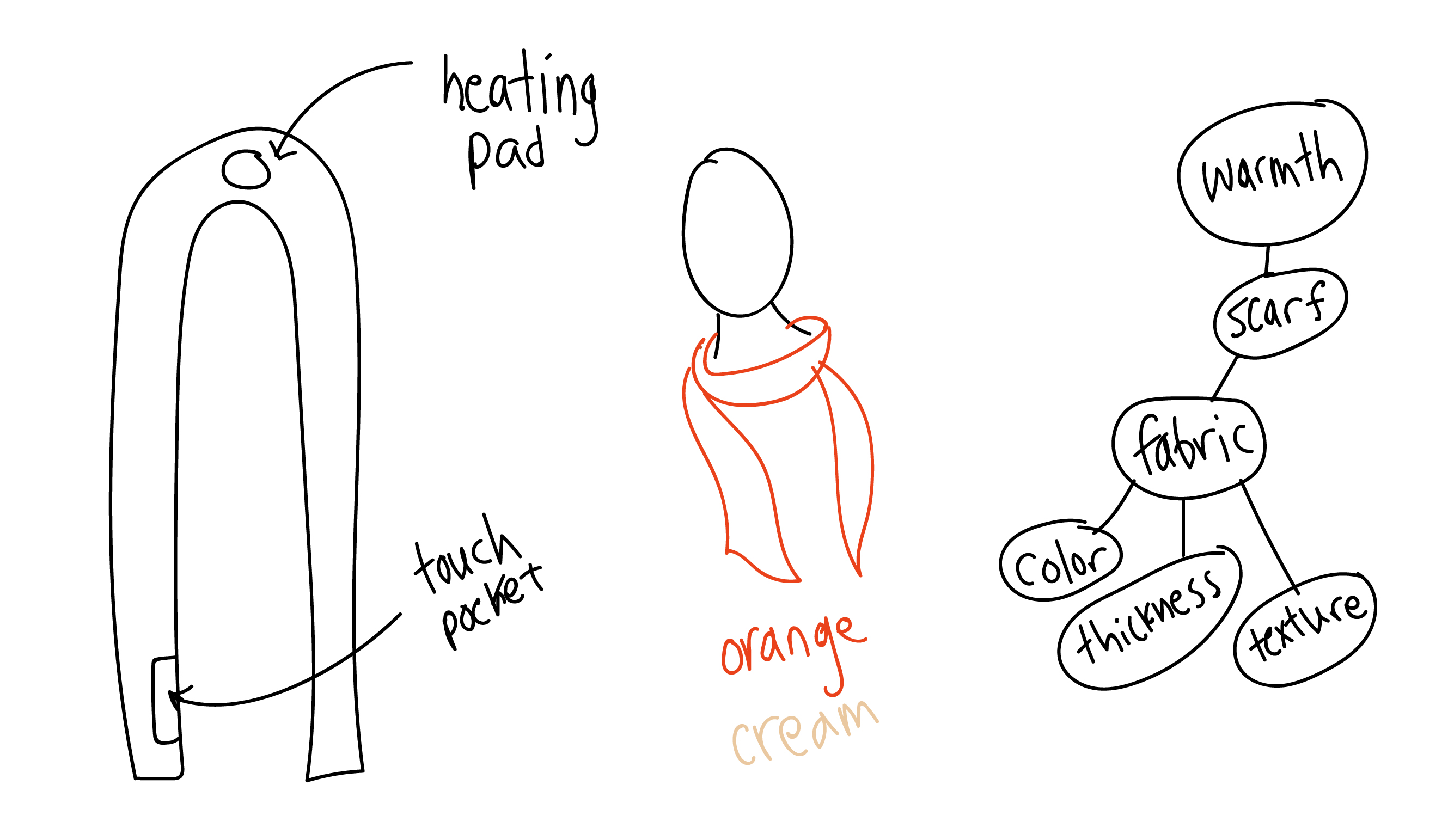

I created a pair of networked scarves that allow romantic partners to send feelings to one another, in the form of heat. When one partner puts a hand in the pocket of their scarf, the other person’s scarf warms up.

Distilling communication down to this simple gesture makes it possible to explore complex attention dynamics in relationships. Does one partner reach out more frequently than the other? Does the other partner respond? I’m interested in investigating the imperfections in relationships, and in codifying those complex dynamics using wearable technology.

I started off this project by researching attention dynamics in romantic relationships. In any relationship, giving and receiving attention is key to both partners’ level of satisfaction. When each partner gives and receives attention in a balanced way, they both tend to be more satisfied. But when one person needs more than the other is willing to give, the disconnect puts strain on both partners. This happens to most couples at some point or another for circumstantial reasons. However, in some relationships, it becomes a pattern.

My original inspiration for this project came out of my own history with imbalanced attention dynamics in a previous relationship. Being the anxiously attached partner, I would reach out over and over again and rarely receive a response. One can also empathize with the detached partner, who can feel bothered by the constant need for validation exhibited by their anxious partner.

In deciding on the form of the wearables, it was important to consider several factors. I wanted it to be easy to access a hidden compartment, where the wearer would send the signal to their partner. I also wanted it to be quick to take the item on and off, in case either partner needed a break from communication. The wearable needed to be large enough to house all of the electronics, with convenient spots for holding a Raspberry Pi and other technological components.

Looking at all of these requirements, scarves stood out as an obvious choice. They sit close to sensitive parts of the body, and the back of the neck is an ideal place for providing an intimate physical sensation. The front of the scarf is close to the wearer’s hands, which allows them to interact easily with the pocket on the side. And the electronics can live at the back of the neck, where they won’t weigh down the garment.

The texture, color, and emotion of the scarves are very important to me. I explored different concepts and ultimately settled on an aesthetic that I felt represented my goals for the project.

Often, wearable technology is intended to look as flashy and high-tech as possible. I wanted to depart from that and create something earthy and intimate. I chose a muted color palette with natural tones. The two fabrics I picked play off of one another, with one more muted color and one more active color. Each scarf is primarily made of one of the two fabrics, with accents of the other throughout, such as inside the pockets.

I chose a woven material for the fabric, as it was both a light fabric that could be worn in lots of different weather, and it was pleasant to work with and sew to.

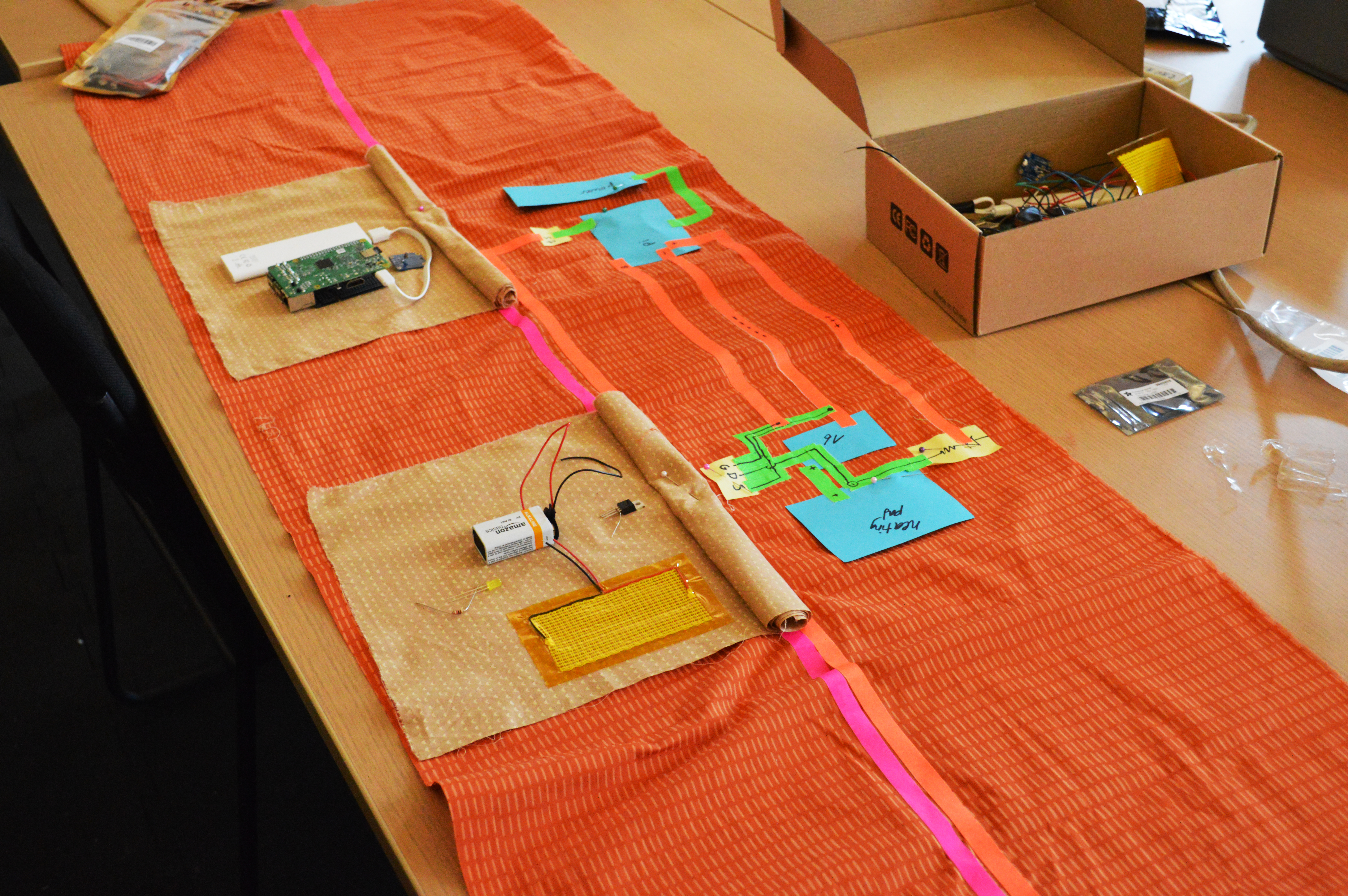

Before I started developing the real thing, I made several phases of prototypes.

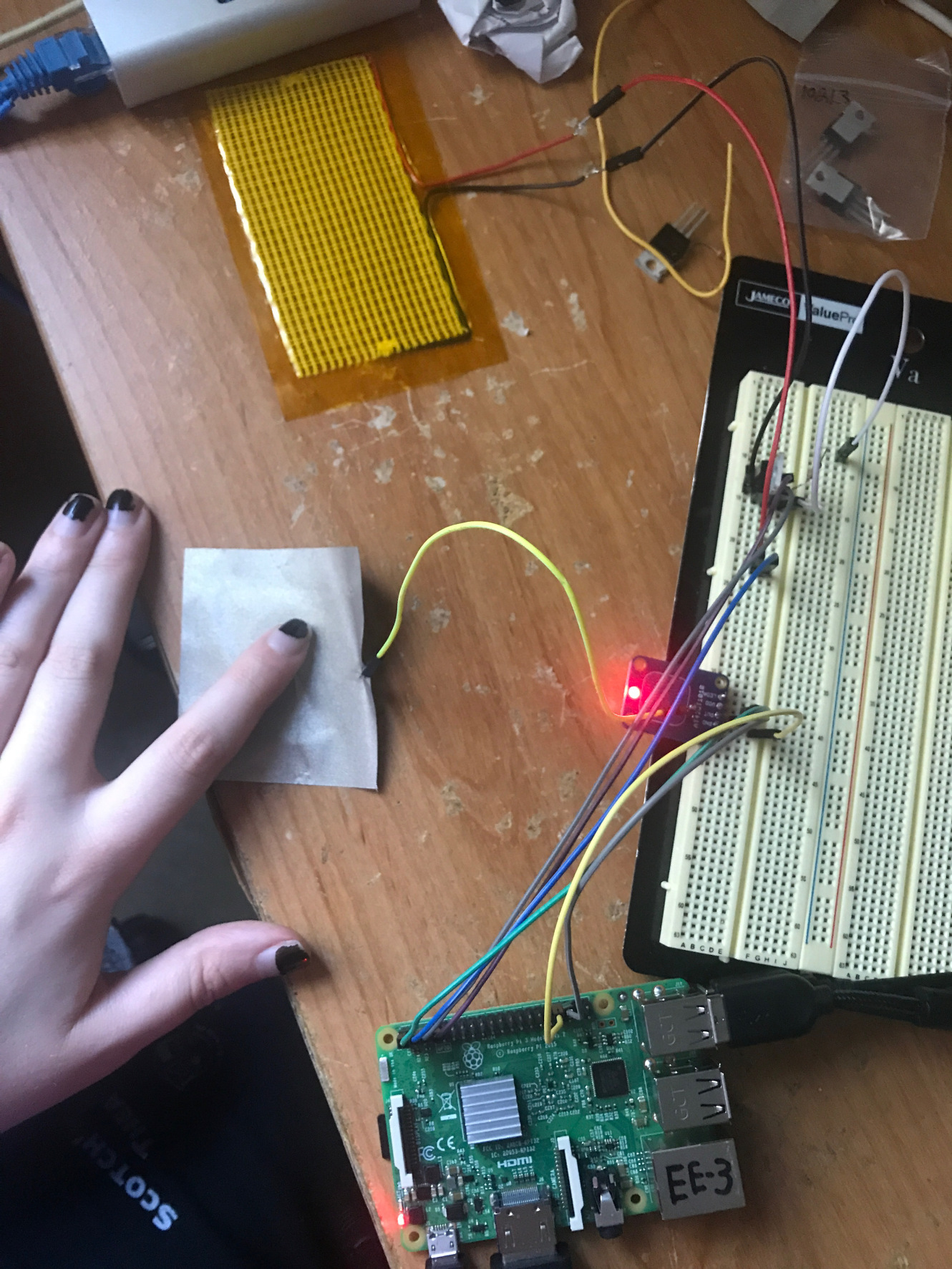

Prototype #1: Tech in Hoodies

I got all of the tech working, and to work through the challenges of sewing into physical material, I put them in a pair of black hoodies.

Prototype #2: Layout

I decided to do scarves for my final version, and laid out where each of the electronics would go.

Prototype #3: Weight

I put the heaviest circuit components actually inside of the scarf, to test if it would be horribly uncomfortable to wear. It wasn’t.

Prototype #4: Stitching

I made a prototype version just using the sewing machine, to assure myself that I would be able to do it.

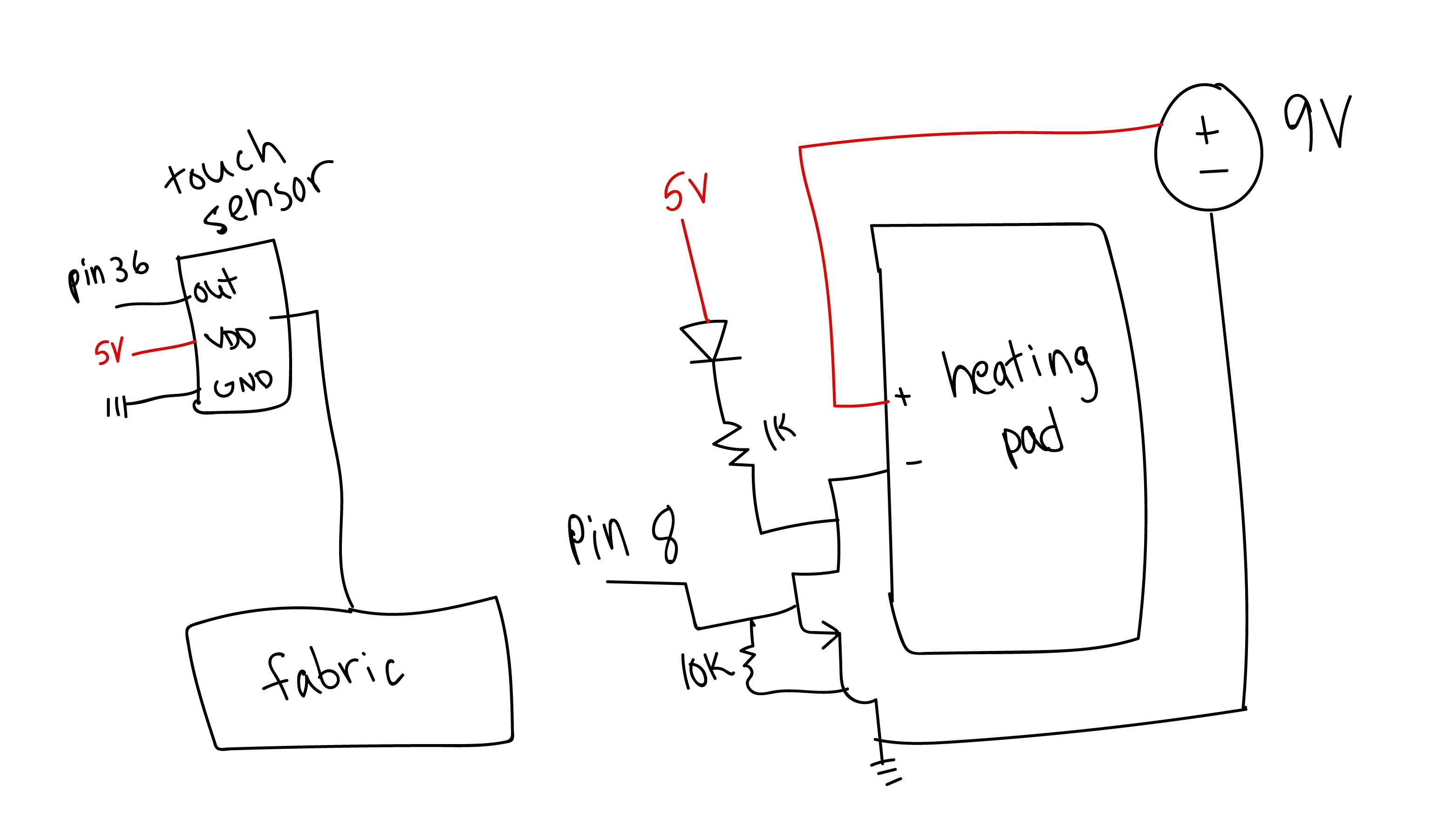

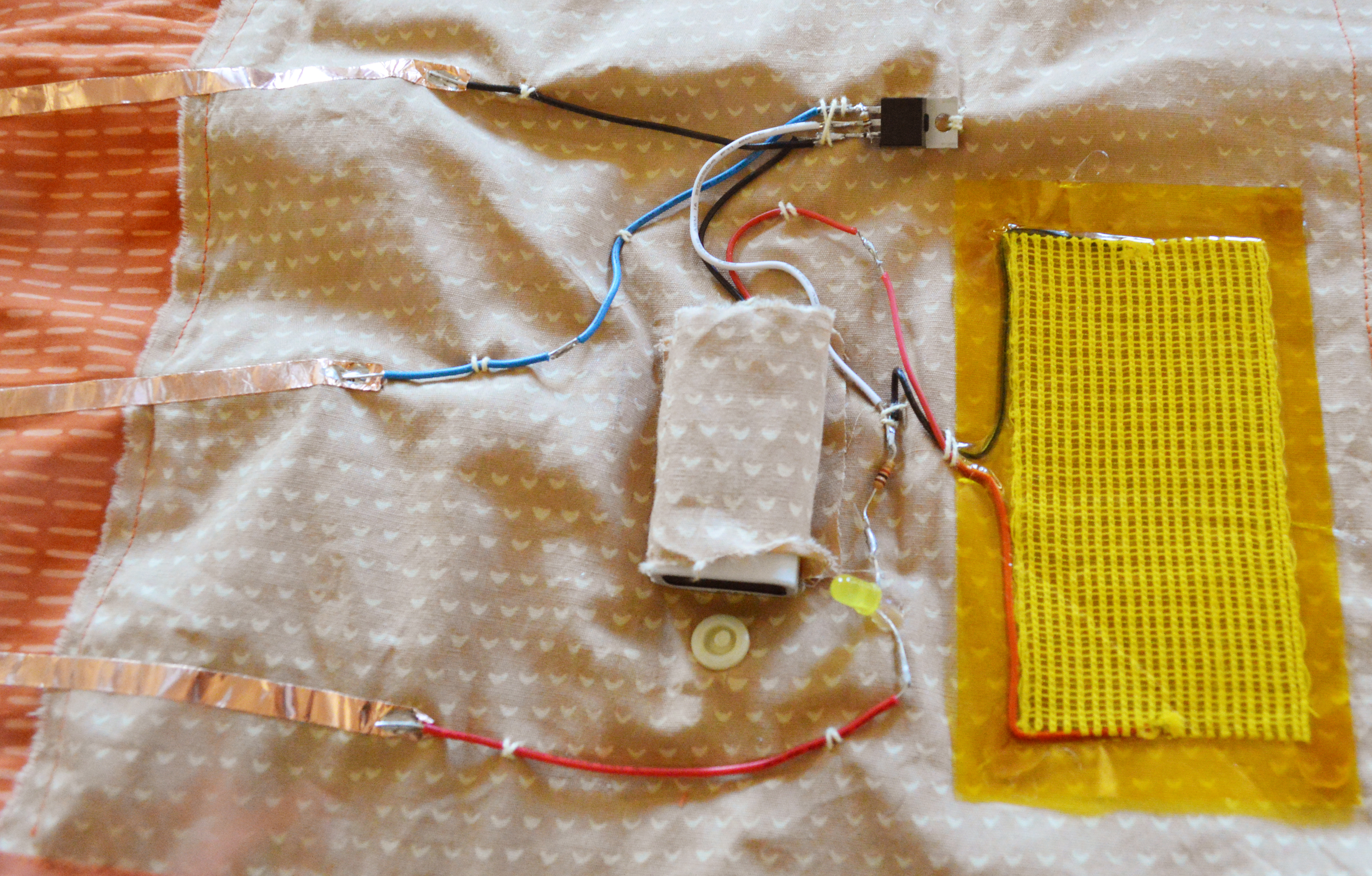

Each scarf is powered by a Raspberry Pi computer. When one Raspberry Pi senses that its touch sensor was activated, it sends a signal to the other scarf to turn on the heating pad.

Turning on the heating pad involves an extra battery and a transistor.

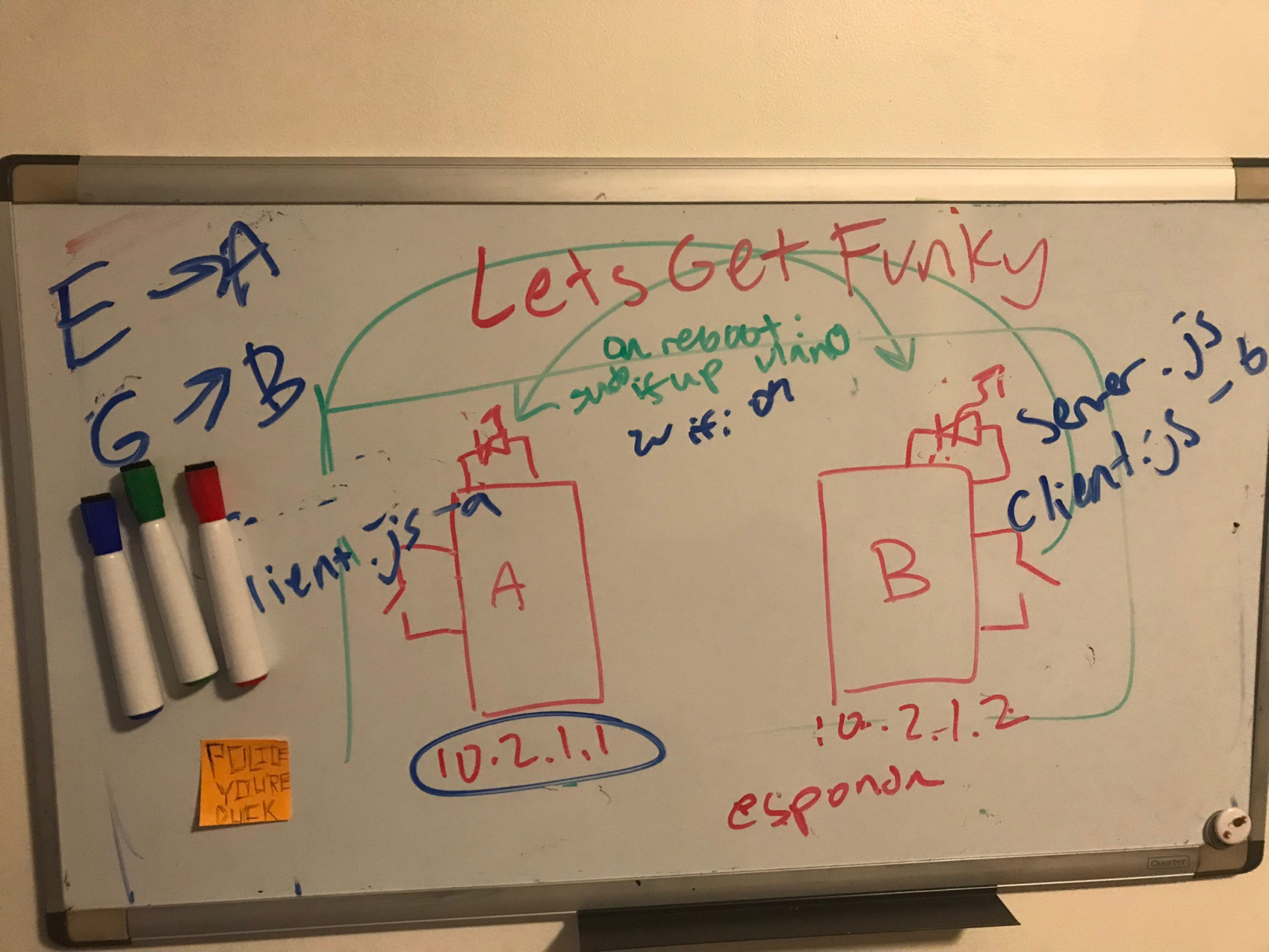

The hardest part was definitely creating an ad-hoc network between the two Raspberry Pis so that they could communicate wirelessly without connecting to another network. CMU Secure is so picky that I decided to bypass it and have the computers talk to each other directly instead of through a Wi-Fi network.

I originally tried using an ESP8266 with an Arduino, and ultimately realized that a Raspberry Pi was a much better option.

There are two Raspberry Pis, both nodes in this ad-hoc network. I learned that certain ranges of IP addresses are reserved for private networks, one of them being the 10.x.x.x range; we placed the Raspberry Pis in 10.2.x.x. Raspberry Pi A is set to 10.2.1.1, and Raspberry Pi B is set to 10.2.1.2. A whole slew of other very particular network settings had to be changed to make this work, but ultimately we were able to set up an ad-hoc network where A and B are connected to each other, so you can ssh into one from the other.

The server and client I wrote for testing are simple. The architecture is: the server runs on B (an arbitrary choice), and A and B both run clients, which update the server based on input to the Raspberry Pi. This models the architecture I ultimately want for the wearable: B polls for information that A provides, and A symmetrically polls for information that B provides.
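The polling architecture can be modeled without the network layer. In the sketch below, `TouchServer` stands in for the server on B and `pollOnce` for one cycle of either client; both names are hypothetical, and the actual socket code over the ad-hoc network is omitted. Each client reports its own pocket sensor and turns its heating pad on when its partner’s last report was a touch.

```cpp
#include <cassert>
#include <map>
#include <string>

// In-memory model of the server on Pi B: the latest pocket-sensor state
// reported by each scarf, keyed by node address.
class TouchServer {
public:
    void update(const std::string& node, bool touching) { state_[node] = touching; }

    bool query(const std::string& node) const {
        auto it = state_.find(node);
        return it != state_.end() && it->second;  // unknown node: not touching
    }

private:
    std::map<std::string, bool> state_;
};

// One polling cycle of a client: report our own sensor, then read the
// partner's state. True means this scarf's heating pad should be on.
bool pollOnce(TouchServer& server, const std::string& self,
              const std::string& partner, bool selfTouching) {
    server.update(self, selfTouching);
    return server.query(partner);
}
```

Since both clients poll the same server, the exchange is symmetric even though the server lives on only one Pi; the main cost is latency, bounded by the polling interval.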

I learned to sew for this project, which was a first for me. I had done some work with wearables before, but it was always on readymade clothing, never on something I created myself.

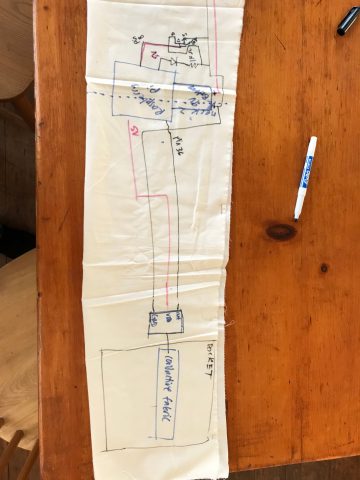

Working with wearables is a whole other beast than working with ordinary circuits. I had to consider the materiality, spacing, and layout in a way that I had never had to do before. My solution was to make a life-size diagram for myself before I ever started soldering connections. This way, I was able to be sure that I wouldn’t have any connections crossing over one another.

I had a whole fiasco with conductive thread, which caused me to abandon the idea of sewing my connections into the fabric. Instead, I opted to use wires in the electronics pockets, and have conductive tape running longer connections down the entire scarf.

Lots of sewing and hours of fiddling with tiny pieces of electronics later, the scarves were functional. Pressing the touch pad on one heated up the heating pad on the other, and vice versa. It’s hard to put into words how tricky working with these materials and tiny pieces can be. The scarves are now robust and trustworthy.

By developing wearables that distill attention down to a single gesture, I ask questions about what attention can mean in a romantic relationship. These scarves are partly a conceptual exploration into complex relationship dynamics, and partly an exercise in electrical engineering and physical craft.

Wearables are everywhere, and they are becoming more and more popular. The Apple Watch allows wearers to send one another their heartbeat. The Hug Shirt by Cutecircuit allows partners to hug each other from a distance. In these scenarios, what does it mean to send a heartbeat instead of a text, or a warm gesture instead of a phone call? How does that change the interaction? I ask how we can explore these abstract forms of communication to learn more about our relationships with one another.

I came across other wearables projects in my research, and have pages and pages in my Capstone Blog about various other projects. I’m going to mention a few here that I particularly enjoyed.

Afroditi Psarra: Afroditi led a workshop at Eyeo festival, which was my first exploration into wearables and soft circuits. Her work is so elegant, and a refreshing departure from overly “high tech” looking wearables.

Sophia Brueckner: Sophia Brueckner developed a project called the Empathy Amulet, which explores connecting strangers through warmth. I loved this idea of wordlessly sending feelings through physical sensation.

Cutecircuit: Cutecircuit develops The Hug Shirt, a wearable for hugging people from a distance. It is marketed as a product, though incorporates similar ideas of exchanging attention through clothing.

Molly Wright Steenson, for advising me

Avi Romanoff, for Raspberry Pi help and for modeling the scarves with me

Claire Hentschker, for conceptual help and sewing machine help and emotional support

Brianna Hudock, for helping a lot with the video

Golan Levin, for giving me space to work on this for his class

Tom Hughes, for knowing where everything is all the time

Joyce Wang, for conceptual help

Nitesh Sridhar, for helping me find my way to conductive tape

Lauren McCarthy, for speaking with me about early ideas

Josh Brown, for taking this project to another level with photography and filming

Malaika Handa, for being in my video even though she was studying for finals

Boeing, for recognizing my project at Meeting of the Minds

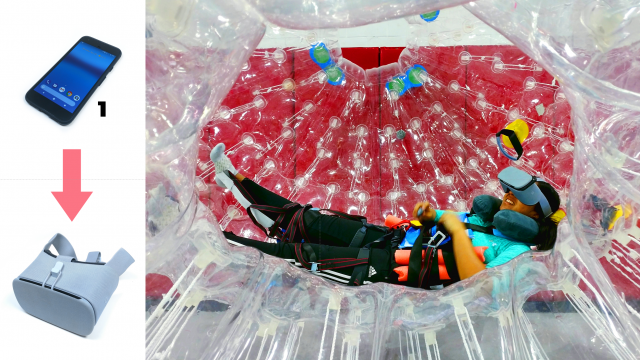

I am creating the experience of being a sphere.

I was inspired by the concept of spheres and new forms of self-being in the aftermath of an unhappy time in my life.

The project is twofold in its execution. Ultimately, the experience is both audiovisual and tactile.

The first aspect is the audiovisual experience, and the conceptual meaning tied to it. As I give the person the experience of being a sphere, I also encase them within a society of spheres with their own type of existence, logic, and dialogue.

The part I will be presenting here is the more tactile, technical side: how I give a person a new body. The base of the physical body is a Zorb ball, 3 meters across on the outside and 2 meters across on the inside.

the new body


The picture above shows the Zorb ball in the Studio Theater in the Cohon University Center.

viewer with straps and headset


Within the ball, the viewer is strapped in. The straps that came with the ball were quite ineffective, so I designed a new strapping system using lots of lashing straps, velcro, and a rock-climbing harness. The viewer wears a Daydream virtual reality headset, and the VR scene runs on a Pixel phone generously loaned by the STUDIO.

orientation unit: connecting the physical and the virtual


To connect the reality of the physical body with the observed virtual reality, I let the viewer experience the effects of their ball body’s rotation. The ball’s movement is relayed to the VR scene by an orientation unit, shown on the right; its position inside the ball is marked by the blue outline.
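Orientation sensors like this are often read out as a unit quaternion. This sketch assumes the orientation unit reports one. The `Quat` class and its methods are hypothetical, not the project’s code. Under that assumption, a direction fixed inside the ball, such as the viewer’s gaze, becomes a room-space direction through the standard rotation v' = q v q^-1:

```java
// Hypothetical sketch: apply the ball's orientation (assumed to arrive as a
// unit quaternion w, x, y, z) to a direction fixed in the ball's frame.
final class Quat {
    final double w, x, y, z;

    Quat(double w, double x, double y, double z) {
        this.w = w; this.x = x; this.y = y; this.z = z;
    }

    /** Quaternion for a rotation of `radians` about the unit axis (ax, ay, az). */
    static Quat fromAxisAngle(double ax, double ay, double az, double radians) {
        double s = Math.sin(radians / 2);
        return new Quat(Math.cos(radians / 2), ax * s, ay * s, az * s);
    }

    /** Rotate v by this unit quaternion, computing v' = q v q^-1 without building q^-1. */
    double[] rotate(double[] v) {
        // t = 2 * (q.xyz cross v)
        double tx = 2 * (y * v[2] - z * v[1]);
        double ty = 2 * (z * v[0] - x * v[2]);
        double tz = 2 * (x * v[1] - y * v[0]);
        // v' = v + w * t + (q.xyz cross t)
        return new double[] {
            v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx)
        };
    }

    public static void main(String[] args) {
        // A quarter turn about the z axis carries the x axis onto the y axis.
        Quat q = fromAxisAngle(0, 0, 1, Math.PI / 2);
        System.out.println(java.util.Arrays.toString(q.rotate(new double[] {1, 0, 0})));
    }
}
```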

controlling the ball


Though the viewer inside the ball cannot actually control the ball directly, they still perceive a sense of will over it. They use a controller to indicate their desired motion, and pushers push the ball in the appropriate direction. A “coordinator,” highlighted in blue, tells the pushers how to push the ball correctly in physical reality, given the viewer’s controller output and the viewer’s orientation in physical space.

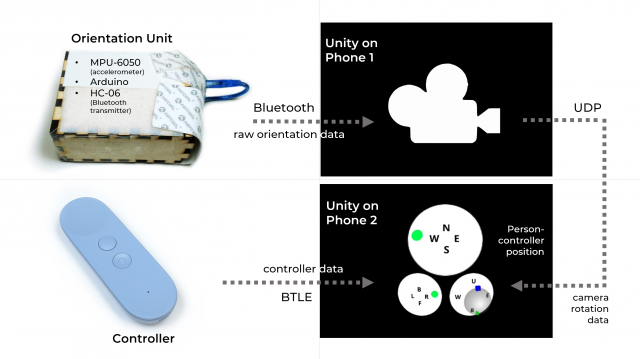

signal transfer


Now that we have gone over the ball itself, here is a summary of how the signal processing works. The orientation unit within the ball sends ball-rotation information to the VR phone. The coordinator needs to tell the pushers how to push the ball the right way, so the coordinator uses an app on a second phone. The second phone takes in the viewer’s controller output as well as information about where the viewer is looking. From those two pieces of information, the second phone can compute how force should be applied to the ball so that it rotates in the desired direction.
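The second phone’s computation can be sketched as a small lookup. The sketch assumes the viewer’s heading has already been snapped to one of four compass directions and the controller input to one of F, R, B, L. The names `Compass`, `Command`, and `Coordinator` are hypothetical, and the real app may work with continuous angles instead.

```java
// Hypothetical sketch of the coordinator's computation. Assumptions: the
// viewer's heading is snapped to N/E/S/W, and the controller input is one
// of four commands relative to where the viewer is facing.
enum Compass {
    N, E, S, W; // clockwise order

    Compass turn(int quarterTurnsClockwise) {
        return values()[Math.floorMod(ordinal() + quarterTurnsClockwise, 4)];
    }
}

enum Command {
    F(0), R(1), B(2), L(3);

    final int quarterTurns; // clockwise offset from the viewer's facing

    Command(int quarterTurns) {
        this.quarterTurns = quarterTurns;
    }
}

final class Coordinator {
    /** Room direction the ball should roll toward. */
    static Compass rollToward(Compass viewerHeading, Command cmd) {
        return viewerHeading.turn(cmd.quarterTurns);
    }

    /** Side the pushers push from: opposite the roll direction. */
    static Compass pushFrom(Compass viewerHeading, Command cmd) {
        return rollToward(viewerHeading, cmd).turn(2);
    }

    public static void main(String[] args) {
        // Viewer faces east and asks to go left: the ball rolls north.
        System.out.println("roll " + rollToward(Compass.E, Command.L)
                + ", push from " + pushFrom(Compass.E, Command.L));
    }
}
```

Pushing from the side opposite the desired roll direction matches the UI rule that the ball is pushed from whichever side the indicator shows.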

Here are a GIF and a video showing the physical side of my project: the ball.

Contributions [abridged]

Advisors:

Golan Levin

Garth Zeglin

Tom Hughes

Donors:

SURG/CW

FRFAF

Volunteers:

Lisa Lo

Rain Du

Maia Iyer

Anjie Cao

Connie Ye

Sophia Qin

Oscar Dadfar

Nitesh Sridhar

Peter Sheehan

Michael Becker

Winston Grenier

Parmita Bawankule

This is my sketch for In Bad Taste, my clock about my ex-boyfriend. It is a collage of my resentments: that he liked video games more than me, that I wasn’t invited on this vacation to Sedona, and that he so frequently called me a bad girlfriend. Alas.

Here is my inspiration for the project.

The slot machine doubles as a clock: its reels roll with the time of day. When the hour and the minute match (for example, 12:12), balloons pop up to let you know that the time is now.

```processing
// In Bad Taste: a slot-machine clock (Processing sketch).

// Global variables: scene layers, props, balloon words, and digit images.
PImage bg;
PImage fg;
PImage armChair;
PImage slotMachine;
PImage the;
PImage time;
PImage is;
PImage now;
PImage cherry;
PImage[] numbers = new PImage[10]; // 0.png .. 9.png
int x;
int y;

void setup() {
  size(1000, 600);
  frameRate(60);
  bg = loadImage("background.png");
  fg = loadImage("foreground.png");
  armChair = loadImage("armChair.png");
  slotMachine = loadImage("slotMachine.png");
  the = loadImage("the.png");
  time = loadImage("time.png");
  is = loadImage("is.png");
  now = loadImage("now.png");
  cherry = loadImage("cherry.png");
  for (int i = 0; i < 10; i++) {
    numbers[i] = loadImage(str(i) + ".png");
  }
}

// Limit x to the range [min, max].
float clamp(float x, float min, float max) {
  if (x < min) return min;
  if (x > max) return max;
  return x;
}

void draw() {
  float hr = hour();
  float mn = minute();
  // Fractional seconds within the current minute, so the reels move smoothly.
  float ml = (float)(System.currentTimeMillis() % 60000);
  float sc = ml * 0.001;

  background(0);
  image(bg, 0, 0, 1000, 600);

  // When the hour equals the minute (e.g. 12:12), the balloons rise
  // across the screen during the first 8 seconds of that minute.
  if (hr == mn) {
    float amountToRise = 600.0 + 1500.0;
    float lengthOfRise = 8.0;
    float t = clamp(sc, 0.0, lengthOfRise) / lengthOfRise;
    int offset = int(amountToRise * t);
    moveBalloons(offset);
  }

  image(fg, 0, 0, 1000, 600);
  slots(hr, mn, sc);
  image(slotMachine, 500, 100, 220, 380);
  image(cherry, 628, 44.5, 50, 65);
  image(armChair, 550, 250, 300, 300);
}

// Draw the balloon words "the time is now" rising from below,
// each swaying sideways on its own sine wave.
void moveBalloons(int yOffset) {
  x = 100;
  y = 600 - yOffset;
  image(the, x + sin(yOffset * 0.012) * 20.0, y, 360, 240);
  image(time, x - 30 + sin(yOffset * 0.010) * 10.0, y + 150, 420, 280);
  image(is, x + 60 + sin(yOffset * 0.014) * 15.0, y + 340, 240, 260);
  image(now, x + sin(yOffset * 0.006) * 11.0, y + 520, 350, 250);
}

// Draw one reel: the current digit scrolls up by `progress` (0..1) of its
// height while the next digit slides in from below.
void drawReel(PImage current, PImage next, float tx, float ty,
              float sx, float sy, float progress) {
  pushMatrix();
  translate(tx, ty);
  scale(sx, sy);
  rotate(-0.05);
  float h = current.height;
  image(current, 0, -h * progress);
  image(next, 0, h - h * progress);
  popMatrix();
}

void slots(float hour, float minute, float second) {
  PImage[] currentHr = returnTime((int) floor(hour));
  PImage[] nextHr = returnTime(((int) floor(hour) + 1) % 24);
  PImage[] currentMn = returnTime((int) floor(minute));
  PImage[] nextMn = returnTime(((int) floor(minute) + 1) % 60);

  drawReel(currentHr[0], nextHr[0], 558, 195, 0.06, 0.09, (hour % 10.0) / 10.0);   // hour tens
  drawReel(currentHr[1], nextHr[1], 590, 196, 0.05, 0.09, minute / 60.0);          // hour ones
  drawReel(currentMn[0], nextMn[0], 622, 192, 0.05, 0.09, (minute % 10.0) / 10.0); // minute tens
  drawReel(currentMn[1], nextMn[1], 654, 194, 0.045, 0.09, second / 60.0);         // minute ones
}

// Split a two-digit number into {tens, ones} digit images.
PImage[] returnTime(int time) {
  int firstD = time % 10;  // ones digit
  int secondD = time / 10; // tens digit
  PImage[] currentNum = {numbers[secondD], numbers[firstD]};
  return currentNum;
}
```

I updated the UI from last time; it now looks like this:

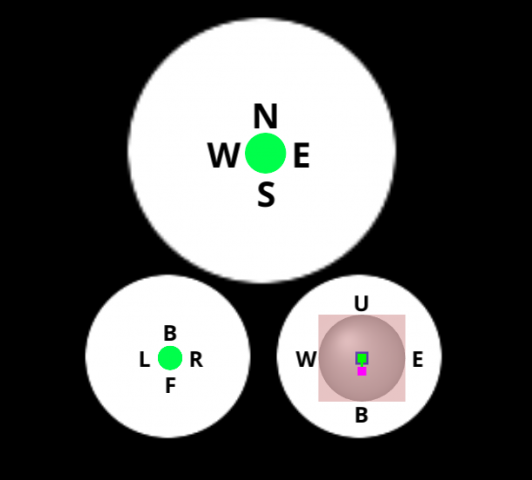

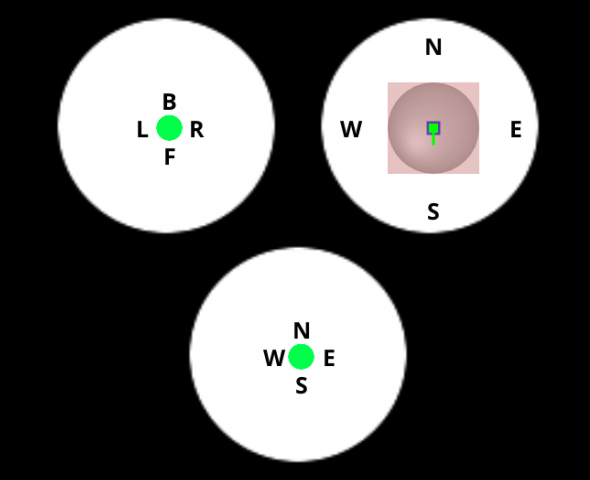

The top circle now shows the position (north/south/east/west) the person-controller should be in relative to the room. The bottom left circle shows the viewer’s thumb position on the hand controller, and the bottom right circle shows the orientation of the ball, as given by the MPU (orientation unit).

This UI is actually a Unity app running on a phone (phone 2). However, another phone also needs the MPU signals: phone 1, which renders the Unity scene the viewer sees in VR. A significant challenge was getting the MPU signals to both phones at once. The MPU sends its signals over Bluetooth, but its Bluetooth connection can only go to ONE other device at a time. I eventually decided that it should send its signals to the VR on phone 1, which meant I then had to relay the MPU signals from phone 1 to phone 2. After hacking around with everything from Unity Networking to TCP to Python/Twisted servers and Node.js, I finally found the perfect solution.

UDP!

UDP is blazing fast: it prioritizes speed over guaranteed delivery (there are no acknowledgments or retransmissions), so phone 2 receives a continuous, up-to-date stream of the MPU signals. Phone 2 therefore has the most current orientation of the ball. The person-controller watching the UI above can react more quickly to where the viewer in the Zorb ball wants to go, and direct the pushers to push in the proper direction.
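Here is a minimal Java sketch of that relay. It assumes each packet carries a sequence number followed by the ball’s orientation as four floats. This layout and the class `OrientationRelay` are hypothetical; the original was built in Unity. UDP can drop or reorder datagrams, so the receiver keeps only the newest reading rather than processing every packet in order:

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;

// Hypothetical sketch of the phone 1 -> phone 2 relay. Assumed packet
// layout: int sequence number, then quaternion w, x, y, z as floats.
final class OrientationRelay {
    static final int PACKET_BYTES = 4 + 4 * 4;

    static byte[] encode(int seq, float w, float x, float y, float z) {
        return ByteBuffer.allocate(PACKET_BYTES)
                .putInt(seq).putFloat(w).putFloat(x).putFloat(y).putFloat(z)
                .array();
    }

    /** Phone 1: fire-and-forget one reading, with no acknowledgment or retry. */
    static void send(DatagramSocket socket, InetAddress phone2, int port, byte[] payload)
            throws IOException {
        socket.send(new DatagramPacket(payload, payload.length, phone2, port));
    }

    /** Phone 2: blocking receive loop that feeds every datagram to the filter. */
    static void listen(DatagramSocket socket, Latest latest) throws IOException {
        byte[] buf = new byte[PACKET_BYTES];
        while (!socket.isClosed()) {
            DatagramPacket packet = new DatagramPacket(buf, buf.length);
            socket.receive(packet);
            if (packet.getLength() == PACKET_BYTES) latest.accept(packet.getData());
        }
    }

    /** Keeps only the newest reading; late or duplicate packets are dropped. */
    static final class Latest {
        private int lastSeq = Integer.MIN_VALUE;
        private float[] quat = {1, 0, 0, 0};

        /** Returns true if the packet was newer than anything seen so far. */
        synchronized boolean accept(byte[] data) {
            ByteBuffer buf = ByteBuffer.wrap(data);
            int seq = buf.getInt();
            if (seq <= lastSeq) return false; // stale: a newer reading already arrived
            lastSeq = seq;
            quat = new float[] {buf.getFloat(), buf.getFloat(), buf.getFloat(), buf.getFloat()};
            return true;
        }

        synchronized float[] orientation() {
            return quat.clone();
        }
    }

    public static void main(String[] args) {
        Latest latest = new Latest();
        latest.accept(encode(2, 1f, 0f, 0f, 0f));
        boolean applied = latest.accept(encode(1, 0f, 1f, 0f, 0f)); // arrived late
        System.out.println("late packet applied: " + applied);
    }
}
```

At, say, 100 packets per second, a signed 32-bit sequence number would not wrap for roughly eight months, so the sketch ignores wrap-around.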

Now, for a video of this person-controlling action-business:

It turns out that the system still isn’t perfect, and I’m still investigating why. Viewers noted that signaling ‘left’ vs. ‘right’ would get switched, especially when they were near the top of the ball, facing downward. I’m not sure whether it is an issue with the app itself. It might also be because the ball cannot be rotated on its axis, so it has only two degrees of rotational freedom; however, I did try to account for this in my app, so the viewer in the “up” position should theoretically not have this problem. It may also be a matter of reaction speed, or simply not enough testing. I’ll try to figure out the problem this week.
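One hypothesis worth checking, offered as a guess rather than a diagnosis, concerns how the app derives the viewer’s compass heading. If it projects their gaze onto the floor, that projection shrinks to almost nothing when the viewer faces straight down. Tiny sensor noise can then flip the heading between opposite directions. The sketch below detects that case and falls back to the direction the top of the viewer’s head points. The `Heading` class, the threshold, and the Unity-style axes (y up, +z north, +x east) are all assumptions.

```java
// Hypothetical sketch: snap a viewer direction to a compass heading, with a
// fallback when the gaze is nearly vertical. Assumed axes: y up, +z north,
// +x east (Unity-style).
final class Heading {
    static final String[] COMPASS = {"N", "E", "S", "W"};

    /** Compass heading of v's floor-plane part, or null if v is near-vertical. */
    static String snap(double[] v, double minHorizontal) {
        double h = Math.hypot(v[0], v[2]); // length of the projection onto the floor
        if (h < minHorizontal) return null; // direction on the floor is ill-defined
        double deg = Math.toDegrees(Math.atan2(v[0], v[2])); // 0 = N, 90 = E
        int idx = (int) Math.floorMod(Math.round(deg / 90.0), 4L);
        return COMPASS[idx];
    }

    /** Prefer the gaze direction; fall back to the top-of-head direction. */
    static String viewerHeading(double[] forward, double[] up, double minHorizontal) {
        String fromForward = snap(forward, minHorizontal);
        return fromForward != null ? fromForward : snap(up, minHorizontal);
    }

    public static void main(String[] args) {
        // Face-down viewer: a tiny sideways wobble flips the unguarded heading.
        System.out.println(snap(new double[] {0.01, -1, 0}, 0.0));
        System.out.println(snap(new double[] {-0.01, -1, 0}, 0.0));
        // With the guard, the top of the head (pointing north) decides.
        System.out.println(viewerHeading(new double[] {0.01, -1, 0}, new double[] {0, 0, 1}, 0.2));
    }
}
```

The gaze and head-up directions are perpendicular, so at most one of them can be near vertical. With a threshold like 0.2, the fallback therefore always has a usable horizontal component.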

As you can tell from the video, a lot of people helped out with this run. My friend Parmita was the person-controller (the person running around holding phone 2), and sheep did the video recording. From the STUDIO, I had help from conye, farcar, miyehn, tesh, and phiaq. Outside the STUDIO, I had help from my friends Maia, Michael, Anjie, Winston, and Lisa.

I eventually decided that the “AR tech hurdle” I would work on would be a UI for my independent study project. I am giving a person the experience of feeling like a sphere, and part of that involves putting the person in a Zorb ball and having the ball pushed according to the person’s will.

Here’s how it works: inside the Zorb ball is an orientation unit (an MPU, which includes an accelerometer) that keeps track of the ball’s orientation in real space. The viewer also holds a controller, which indicates their desire to go left, right, forward, or backward. Based on the viewer’s desire and the orientation of the Zorb ball, a number of other people have to push the ball to make it roll in the right direction.

The problem I encountered at the last rolling-meeting was that the person who “calculates” the direction the ball should roll in, the “person-controller,” had a very difficult time making the computation quickly and efficiently. I therefore decided to create a UI system to help them.

I consider this an AR issue, because rolling the ball properly augments the reality the person encounters in real, physical space.

Below is the UI the person-controller will see. It is currently inactive.

The top left circle indicates the user’s thumb position on the hand controller; the green dot will move around the circle once the UI is activated. B stands for roll backward, F for roll forward, L for left, and R for right. The top right circle indicates the person’s orientation with regard to a defined coordinate system of north/south/east/west. The bottom circle is the one the person-controller uses to determine which side the ball should be pushed from: if the green circle indicates N for north, the ball should be pushed from the north side.
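The thumb indicator implies a mapping from a touch position to one of the four letters. The sketch below assumes the touchpad position arrives in centered coordinates (x to the right, y toward the front, each in [-1, 1]). It also assumes a small central dead zone means “no command.” The `ThumbPad` name and the dead-zone size are hypothetical.

```java
// Hypothetical sketch: classify a thumb position on the controller's
// touchpad as F, B, L, or R. Assumes centered coordinates in [-1, 1].
final class ThumbPad {
    /** Returns "F", "B", "L", "R", or null inside the central dead zone. */
    static String classify(double x, double y, double deadZone) {
        if (Math.hypot(x, y) < deadZone) return null; // thumb near center: no command
        if (Math.abs(y) >= Math.abs(x)) return y > 0 ? "F" : "B";
        return x > 0 ? "R" : "L";
    }

    public static void main(String[] args) {
        System.out.println(classify(0.1, 0.9, 0.3));
        System.out.println(classify(-0.8, 0.2, 0.3));
    }
}
```

Classifying by the dominant axis splits the pad into four 90-degree wedges, one per letter.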

I will test how well this system works at the next rolling-meeting and report the results for the final AR project.