I really struggled to come up with a compelling idea for this project. Initially, I wanted to do something with hands using OpenPose. Unfortunately, it ran too slowly on my computer to use live.

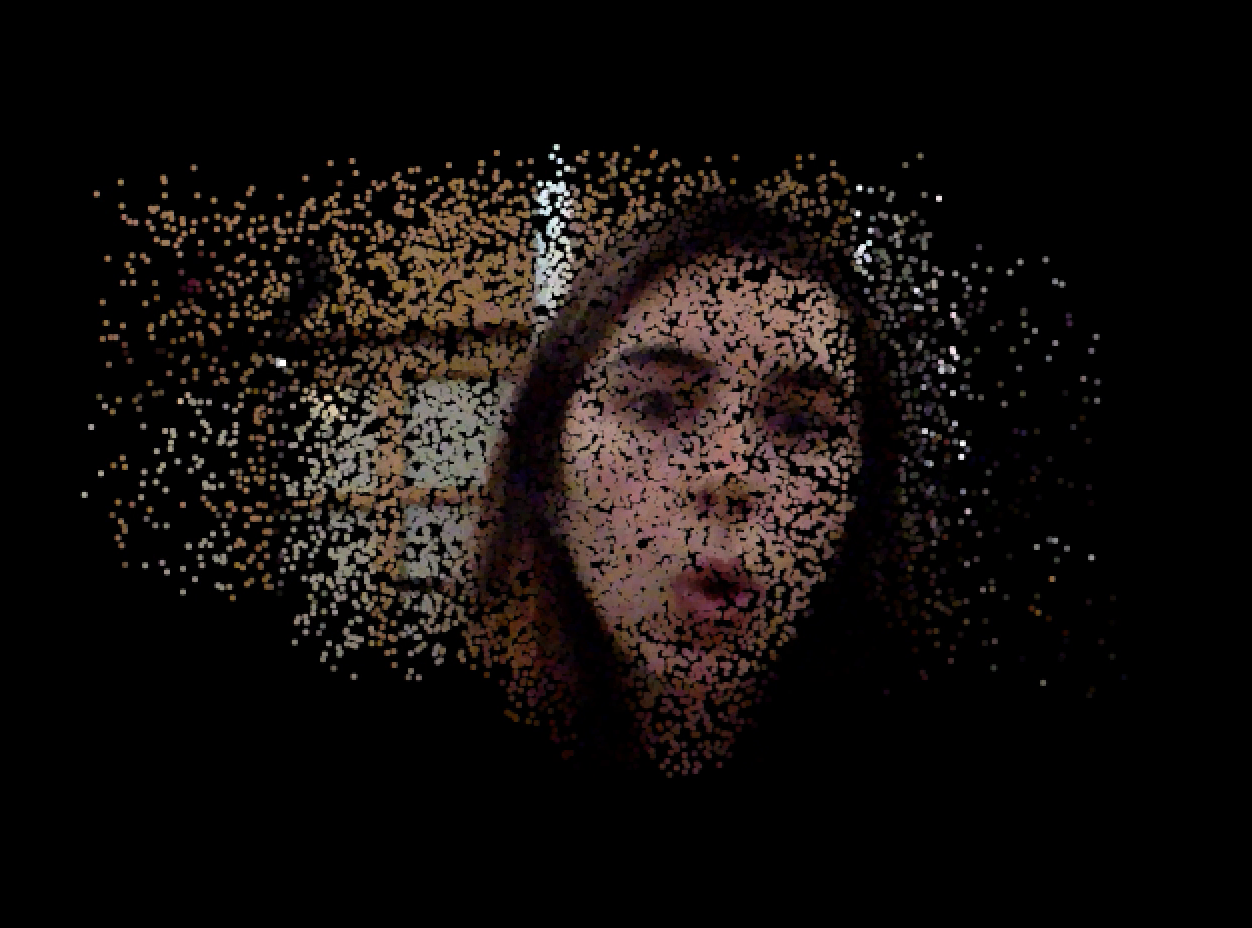

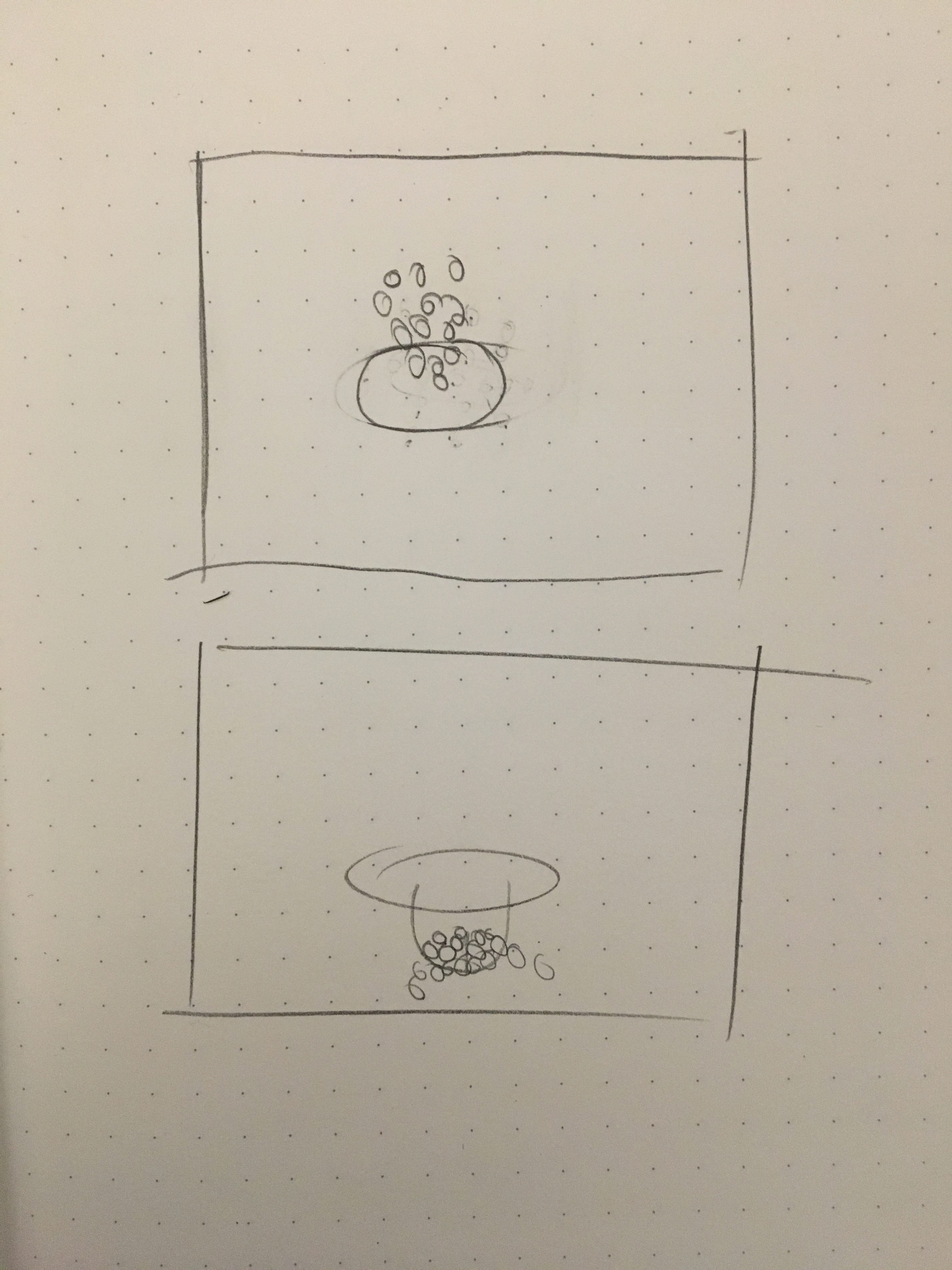

After my unsuccessful attempt with OpenPose, I shifted my focus to FaceOSC. I explored a couple of different options, including a rolling ping pong ball, before settling on this one. Inspired by a project Marisa Lu made in the class, I wanted to create a drawing tool where the controller is one's face. Early brainstorms included spewing particles out of one's mouth and 'licking' them to move them around. Unfortunately, FaceOSC's tongue detection is not great, so I had to change direction.
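The mouth controller that survived comes down to a few comparisons between lip landmarks. Below is a minimal plain-Java sketch of the same "open" and "blowing" tests, written outside Processing so it runs on its own. It assumes FaceOSC's `/raw` message packs landmark *i* as `raw[2*i]`, `raw[2*i+1]`, which is the layout the project's array offsets (96 to 129) imply; `MouthState` and its constants are hypothetical names.

```java
/** Hypothetical stand-alone version of the project's mouth tests (not the original code). */
public class MouthState {
    // Landmark numbers implied by the raw-array offsets used in the project (offset = 2 * landmark)
    static final int LEFT_CORNER = 48, RIGHT_CORNER = 54;
    static final int UPPER_LIP_TOP = 51, LOWER_LIP_BOTTOM = 57;
    static final int UPPER_LIP_BOTTOM = 61, LOWER_LIP_TOP = 64;

    static float lx(float[] raw, int i) { return raw[2 * i]; }
    static float ly(float[] raw, int i) { return raw[2 * i + 1]; }

    /** Open when the gap between the lips is larger than the average lip thickness. */
    static boolean isOpen(float[] raw) {
        float gap = ly(raw, LOWER_LIP_TOP) - ly(raw, UPPER_LIP_BOTTOM);
        float upperThickness = ly(raw, UPPER_LIP_BOTTOM) - ly(raw, UPPER_LIP_TOP);
        float lowerThickness = ly(raw, LOWER_LIP_BOTTOM) - ly(raw, LOWER_LIP_TOP);
        return gap > (upperThickness + lowerThickness) / 2;
    }

    /** "Blowing" when the mouth is at most 1.5x as wide as it is tall (pursed lips). */
    static boolean isBlowing(float[] raw) {
        float w = lx(raw, RIGHT_CORNER) - lx(raw, LEFT_CORNER);
        float h = ly(raw, LOWER_LIP_BOTTOM) - ly(raw, UPPER_LIP_TOP);
        return h > 0 && w / h <= 1.5f;  // h > 0 avoids dividing by zero when no face is tracked
    }

    public static void main(String[] args) {
        float[] raw = new float[132];  // FaceOSC sends 66 (x, y) pairs
        // Fake mouth: corners 100 px apart, each lip 10 px thick, 20 px gap between the lips
        raw[2 * LEFT_CORNER] = 100;
        raw[2 * RIGHT_CORNER] = 200;
        raw[2 * UPPER_LIP_TOP + 1] = 200;
        raw[2 * UPPER_LIP_BOTTOM + 1] = 210;
        raw[2 * LOWER_LIP_TOP + 1] = 230;
        raw[2 * LOWER_LIP_BOTTOM + 1] = 240;
        System.out.println(isOpen(raw) + " " + isBlowing(raw));  // prints "true false"
    }
}
```

The ratio test means a wide-open laugh (w/h around 2.5 here) counts as "open" but not "blowing", while an "o"-shaped mouth trips the blowing test even when the lips barely part.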

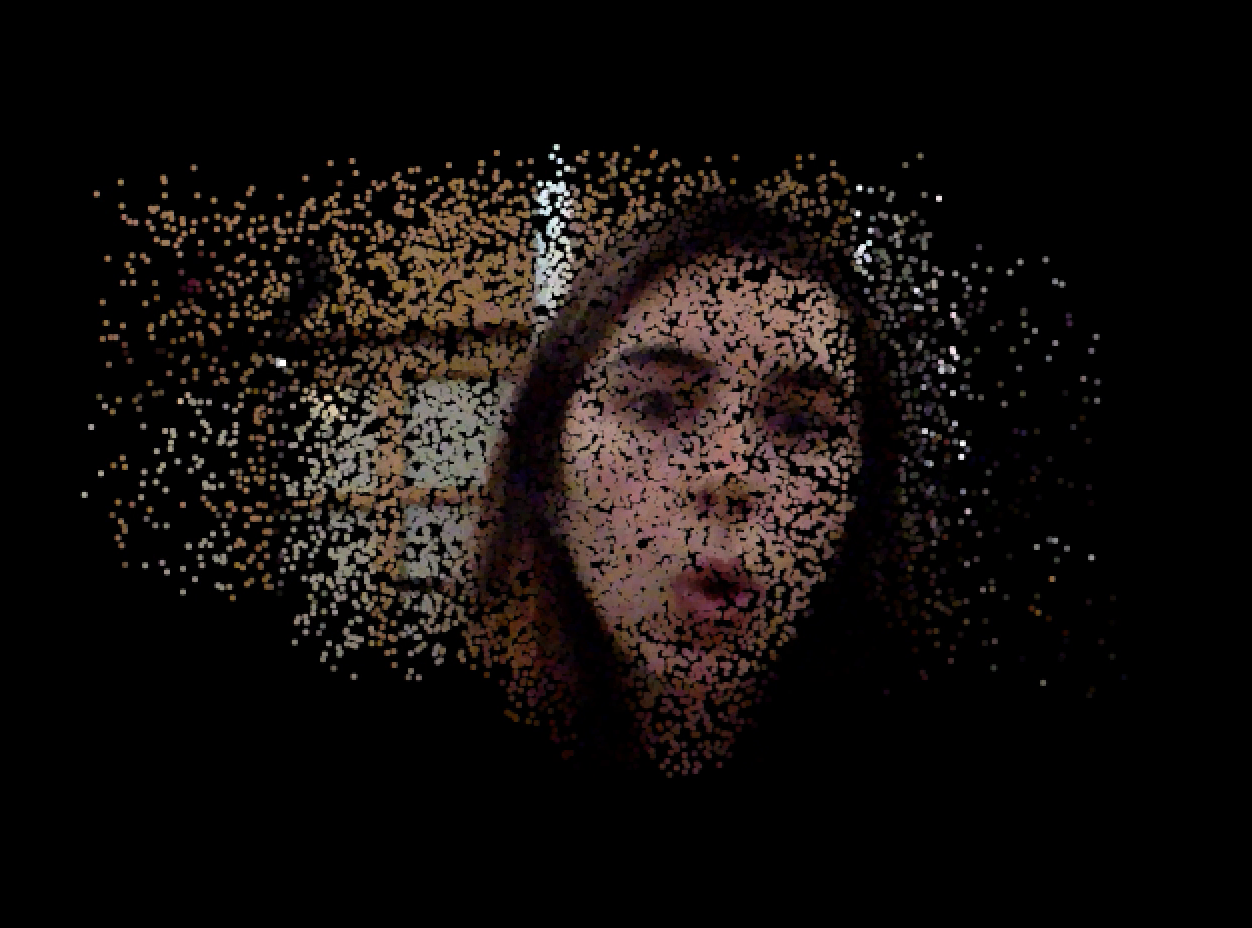

Thinking back to the image processing project for 15-104, I thought it would be fun if the particles 'revealed' the user's face: each particle is drawn in the color of the webcam pixel underneath it, so the face appears wherever particles collect. Overall, I'm happy with the elegant simplicity of the piece. I like Char's observation when she described it as a 'modern age peephole'. However, I'm still working on a debug mode in case FaceOSC doesn't read the mouth correctly.
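The reveal boils down to a per-particle pixel lookup. Here is a minimal plain-Java sketch of that lookup, assuming the frame is a row-major array of packed ARGB ints (which is how Processing stores `pixels[]`); `PixelSampler` and `sample` are hypothetical names, and the bit shifts stand in for Processing's `red()`, `green()` and `blue()`.

```java
/** Hypothetical helper illustrating the idea behind the sketch's getColor(). */
public class PixelSampler {
    /**
     * Returns {r, g, b} of the ARGB pixel at (x, y) in a row-major image,
     * or black when (x, y) falls outside the image.
     */
    static int[] sample(int[] pixels, int imgWidth, int imgHeight, float x, float y) {
        int px = (int) x, py = (int) y;
        // Check the float coordinates for negatives: (int) -0.5f truncates to 0
        if (x < 0 || y < 0 || px >= imgWidth || py >= imgHeight) {
            return new int[] {0, 0, 0};
        }
        int argb = pixels[py * imgWidth + px];  // row-major: row * width + column
        return new int[] {(argb >> 16) & 0xFF, (argb >> 8) & 0xFF, argb & 0xFF};
    }

    public static void main(String[] args) {
        int w = 4, h = 3;
        int[] pixels = new int[w * h];
        pixels[1 * w + 2] = 0xFF336699;  // pixel at column 2, row 1
        int[] c = sample(pixels, w, h, 2.7f, 1.2f);
        System.out.println(c[0] + " " + c[1] + " " + c[2]);  // prints "51 102 153"
    }
}
```

Checking x and y separately matters: testing only the flattened index `y * width + x` lets an x just past the right edge wrap onto the next row and pick up a pixel from the opposite side of the frame.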

```java
import processing.video.*;
import oscP5.*;

ParticleSystem ps;
Capture cam;
OscP5 oscP5;

int found;          // 1 while FaceOSC reports a face, 0 otherwise
float[] rawArray;   // 66 (x, y) landmark pairs from FaceOSC's /raw message
Mouth mouth;

int particleSize = 3;
int numParticles = 20;  // particles spawned per frame while the mouth is open

void setup() {
  size(640, 480);
  frameRate(30);
  ps = new ParticleSystem(new PVector(width/2, 50));
  setupOSC();
  setupCam();
  mouth = new Mouth();
}

void setupOSC() {
  rawArray = new float[132];
  oscP5 = new OscP5(this, 8338);  // FaceOSC's default output port
  // plug() routes each OSC address to the method of the same name below
  oscP5.plug(this, "found", "/found");
  oscP5.plug(this, "rawData", "/raw");
}

void setupCam() {
  String[] cameras = Capture.list();
  if (cameras.length == 0) {
    println("There are no cameras available for capture.");
    exit();
    return;
  }
  println("Available cameras:");
  for (int i = 0; i < cameras.length; i++) {
    println(cameras[i]);
  }
  // The camera can be initialized directly using an
  // element from the array returned by list():
  cam = new Capture(this, cameras[0]);
  cam.start();
}

void draw() {
  if (cam == null) return;  // no camera was found in setupCam()
  if (cam.available()) {
    cam.read();
  }
  cam.loadPixels();  // load the frame once per draw, not once per particle

  // Mirror the canvas horizontally so the sketch behaves like a mirror
  translate(width, 0);
  scale(-1, 1);
  background(0);

  mouth.update();
  //mouth.drawDebug();

  ps.origin = new PVector(mouth.x, mouth.y);
  // Only emit particles while FaceOSC actually sees a face
  if (found > 0 && (mouth.isOpen || mouth.isBlowing)) {
    ps.addParticle();
  }
  ps.run();
  //image(cam, 0, 0);
}

void drawFacePoints() {
  int nData = rawArray.length;
  for (int val = 0; val < nData; val += 2) {
    fill(100, 100, 100);
    ellipse(rawArray[val], rawArray[val+1], 11, 11);
  }
}

// A class to describe a group of Particles
// An ArrayList is used to manage the list of Particles
class ParticleSystem {
  ArrayList<Particle> particles;  // typed, so get(i) returns a Particle
  PVector origin;

  ParticleSystem(PVector position) {
    origin = position.copy();
    particles = new ArrayList<Particle>();
  }

  // Spawn numParticles new particles at the current origin (the mouth)
  void addParticle() {
    for (int i = 0; i < numParticles; i++) {
      particles.add(new Particle(origin));
    }
  }

  // Update and draw every particle; iterate backwards so remove(i) is safe
  void run() {
    for (int i = particles.size()-1; i >= 0; i--) {
      Particle p = particles.get(i);
      p.run();
      if (p.isDead()) {
        particles.remove(i);
      }
    }
  }
}

class Mouth {
  boolean isOpen;
  boolean isBlowing;
  float h, w;    // mouth height and width
  float x, y;    // mouth center
  float xv, yv;  // per-frame velocity of the mouth center

  void update() {
    float[] raw = rawArray;  // local copy: the OSC callback may swap rawArray mid-frame
    PVector leftEdge       = new PVector(raw[96],  raw[97]);
    PVector rightEdge      = new PVector(raw[108], raw[109]);
    PVector upperLipTop    = new PVector(raw[102], raw[103]);
    PVector upperLipBottom = new PVector(raw[122], raw[123]);
    PVector lowerLipTop    = new PVector(raw[128], raw[129]);
    PVector lowerLipBottom = new PVector(raw[114], raw[115]);

    float lastx = x;
    float lasty = y;

    w = rightEdge.x - leftEdge.x;
    h = lowerLipBottom.y - upperLipTop.y;
    x = leftEdge.x + w/2;
    y = upperLipTop.y + h/2;

    // Open: the gap between the lips is larger than the average lip thickness
    float distOpen = lowerLipTop.y - upperLipBottom.y;
    float avgLipThickness = ((lowerLipBottom.y - lowerLipTop.y) +
                             (upperLipBottom.y - upperLipTop.y)) / 2;
    isOpen = distOpen > avgLipThickness;

    // Blowing: the mouth is at most 1.5x as wide as it is tall (pursed lips);
    // h > 0 guards against dividing by zero before any face data arrives
    isBlowing = h > 0 && w/h <= 1.5;

    xv = x - lastx;
    yv = y - lasty;
  }

  void drawDebug() {
    if (isOpen || isBlowing) {
      strokeWeight(5);
      stroke(255, 255, 255, 150);
      noFill();
      ellipse(x, y, w, h);
    }
  }
}

// A simple Particle class
class Particle {
  PVector position;
  PVector velocity;
  PVector acceleration;
  float lifespan;

  Particle(PVector l) {
    acceleration = new PVector(0, 0);
    velocity = new PVector(random(-1, 1), random(-2, 0));
    position = l.copy();
    lifespan = 255.0;
  }

  void run() {
    update();
    display();
  }

  // Method to update position
  void update() {
    velocity.add(acceleration);
    position.add(velocity);
    //lifespan -= 1.0;    // disabled in this version, so particles never fade
    velocity.mult(0.99);  // damping: particles drift to a stop
  }

  // Method to display: paint the particle with the webcam color underneath it
  void display() {
    float[] col = getColor(position.x, position.y);
    fill(col[0], col[1], col[2]);
    noStroke();
    ellipse(position.x, position.y, particleSize, particleSize);
  }

  // Is the particle still useful? Since lifespan decay is disabled,
  // also drop particles that have drifted off the canvas
  boolean isDead() {
    return lifespan < 0.0
        || position.x < 0 || position.x >= width
        || position.y < 0 || position.y >= height;
  }
}

// Return the webcam's RGB color at (x, y), or black if (x, y) is outside the frame.
// Index with cam.width/cam.height rather than the sketch size, so a camera whose
// resolution differs from the 640x480 canvas doesn't wrap pixels across rows.
public float[] getColor(float x, float y) {
  float[] col = {0, 0, 0};
  int px = int(x);
  int py = int(y);
  if (x >= 0 && y >= 0 && px < cam.width && py < cam.height && cam.pixels != null) {
    int index = py * cam.width + px;
    if (index < cam.pixels.length) {
      col[0] = red(cam.pixels[index]);
      col[1] = green(cam.pixels[index]);
      col[2] = blue(cam.pixels[index]);
    }
  }
  return col;
}

// OSC handler for /found (plugged in setupOSC)
public void found(int i) {
  found = i;
}

// OSC handler for /raw (plugged in setupOSC)
public void rawData(float[] raw) {
  rawArray = raw; // stash data in array
}
```
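Because each particle's velocity is multiplied by 0.99 every frame, its total travel is a geometric series: v(1 + 0.99 + 0.99² + ...) = v / (1 - 0.99) = 100v. With launch velocities in [-1, 1] px/frame horizontally and [-2, 0] px/frame vertically, a particle drifts at most about 100 px sideways and 200 px upward before it stalls. The plain-Java model below (a hypothetical `DampedParticles` class with no drawing) follows the same move-then-damp order and the backwards-iterating removal loop of `ParticleSystem.run()`; the off-canvas removal rule in it is an illustrative assumption.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical plain-Java model of the sketch's particle loop (no drawing). */
public class DampedParticles {
    static final float DAMPING = 0.99f;

    static class Particle {
        float x, y, vx, vy;
        Particle(float x, float y, float vx, float vy) {
            this.x = x; this.y = y; this.vx = vx; this.vy = vy;
        }
        // Same order as the sketch: move by the current velocity, then damp it
        void update() { x += vx; y += vy; vx *= DAMPING; vy *= DAMPING; }
    }

    /** Advance every particle one frame; drop those outside a w x h canvas (assumed rule). */
    static void step(List<Particle> particles, float w, float h) {
        // Walk backwards so remove(i) never shifts an unvisited particle into slot i
        for (int i = particles.size() - 1; i >= 0; i--) {
            Particle p = particles.get(i);
            p.update();
            if (p.x < 0 || p.x >= w || p.y < 0 || p.y >= h) {
                particles.remove(i);
            }
        }
    }

    public static void main(String[] args) {
        List<Particle> ps = new ArrayList<>();
        ps.add(new Particle(320, 240, 0, -2));  // fastest possible upward launch
        for (int frame = 0; frame < 1000; frame++) {
            step(ps, 640, 480);
        }
        // After 1000 frames the particle has coasted roughly 200 px up, to about y = 40
        System.out.println(ps.size() + " " + ps.get(0).y);
    }
}
```

Iterating forwards instead would skip the element that slides into index `i` after each removal, which is why the original loop counts down from `particles.size()-1`.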
