#include "../../bundle/basil.js";

var numPages;

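// Parallel arrays per assignment: xxxNums[i] is the number of images available
// for the file-name prefix xxxNames[i] (see imageName() below).
var neighborNums = [48, 40, 33];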

var neighborNames = ["carpenter_fan_zhang", "cho_fang_nishizaki", "gersing_kim_powell"];

var natureNums = [5, 13, 6, 6, 5];

var natureNames = ["carpenter", "delgado", "fang", "powell", "zhai"];

var stuffNums = [8, 9, 2, 8, 19, 7];

var stuffNames = ["carpenter", "delgado", "fang", "powell", "vzhou", "zhai"];

var trashNums = [13, 12, 15, 6, 5];

var trashNames = ["carpenter_ezhou", "choe_fang", "choi_zhai", "delgado_powell", "zhai_vzhou"];

var usedImages = [];

function setup() {

var jsonString2 = b.loadString("neighborhood/essay (18).json");

var jsonString1 = b.loadString("stuff/essay (44).json");

b.clear (b.doc());

var jsonData1 = b.JSON.decode( jsonString1 );

var paragraphs1 = jsonData1.paragraphs;

var jsonData2 = b.JSON.decode( jsonString2 );

var paragraphs2 = jsonData2.paragraphs;

b.println("paragraphs: " + paragraphs1.length + "+" + paragraphs2.length);

var inch = 72;

var titleW = inch * 5.0;

var titleH = inch * 0.5;

var titleX = (b.width / 2) - (titleW / 2);

var titleY = inch;

var paragraphX = inch / 2.0;

var paragraphY = (b.height / 2.0) + (inch * 1.5);

var paragraphW = b.width - inch;

var paragraphH = (b.height / 2.0) - (inch * 2.0);

var imageX = inch / 2.0;

var imageY = inch / 2.0;

var imageW = b.width - (inch);

var imageH = (b.height * 0.5) + inch;

numPages = 0;

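// Seed with "" so the while loop in imageName() runs at least once
// (fileName starts out as "").
usedImages.push("");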

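// First page of the book: the cleared document is assumed to already contain
// one page, so it is counted below instead of calling newPage().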

numPages++;

b.fill(0);

b.textSize(52);

b.textFont("Archivo Black", "Regular");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

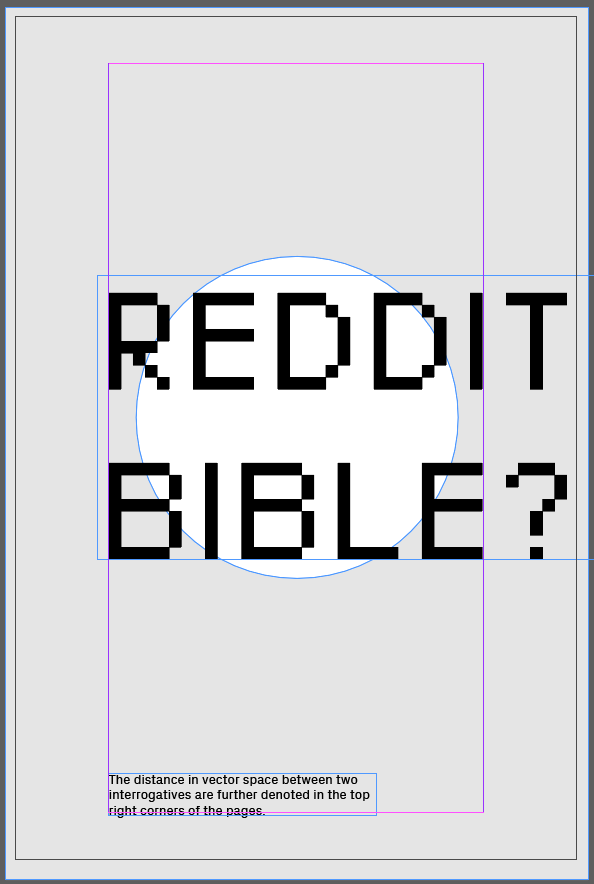

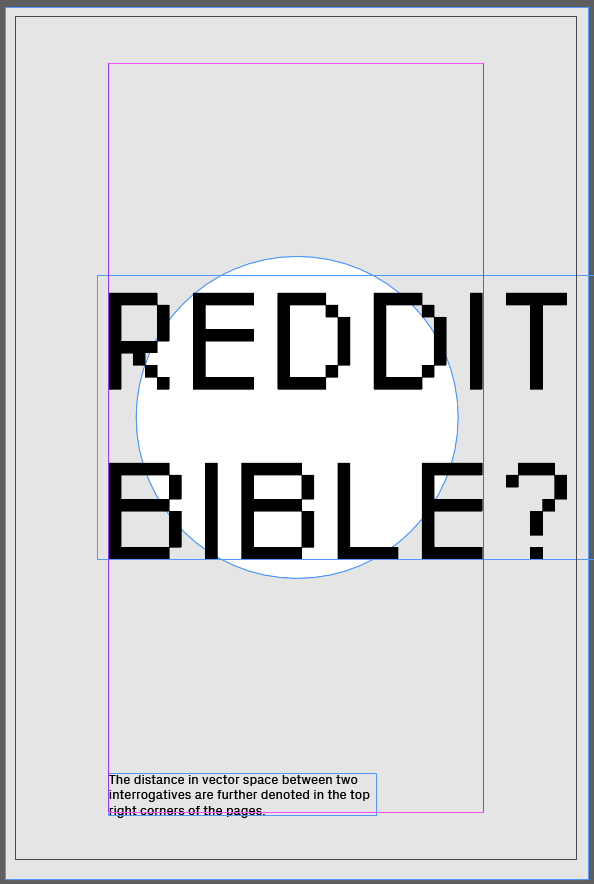

b.text("Plagiarizing:", inch / 2.0, b.height / 2.0 - inch, b.width - inch, inch);

b.fill(0);

b.textSize(20);

b.textFont("Archivo", "Bold");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

b.text("A reinterpretation of other people's photo-essays from Placing", inch / 2.0, b.height / 2.0,

b.width - inch, inch);

//introduce first essay

newPage();

b.fill(0);

b.textSize(36);

b.textFont("Archivo Black", "Regular");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

b.text(paragraphs1[0].title + "ing", inch / 2.0, b.height - inch * 2,

b.width - inch, inch);

b.noStroke();

var coverImageName = imageName(paragraphs1[0].title);

var coverImage = b.image(coverImageName, inch / 2.0,

inch / 2.0, b.width - inch, (b.height / 2.0) + (2 * inch));

coverImage.fit(FitOptions.PROPORTIONALLY);

for(var i = 0; i < paragraphs1[0].text.length; i++) {

newPage();

b.fill(0);

b.textSize(12);

b.textFont("Archivo", "Regular");

b.textAlign(Justification.LEFT_ALIGN, VerticalJustification.TOP_ALIGN);

b.text("\t" + paragraphs1[0].text[i].substring(1), paragraphX, paragraphY,

paragraphW, paragraphH);

b.noStroke();

var imgName = imageName(paragraphs1[0].title);

var img = b.image(imgName, imageX, imageY, imageW, imageH);

img.fit(FitOptions.PROPORTIONALLY);

}

if(numPages % 2 == 0) {

newPage();

}

//Second Photo Essay

newPage();

b.fill(0);

b.textSize(36);

b.textFont("Archivo Black", "Regular");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

b.text(paragraphs2[0].title + "ing", inch / 2.0, b.height - inch * 2,

b.width - inch, inch);

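// Reset the used-image list for the second essay; the "" entry keeps the
// while loop in imageName() running at least once.
usedImages = [""];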

b.noStroke();

coverImageName = imageName(paragraphs2[0].title);

coverImage = b.image(coverImageName, inch / 2.0, inch / 2.0, b.width - inch,

(b.height / 2.0) + (2 * inch));

coverImage.fit(FitOptions.PROPORTIONALLY);

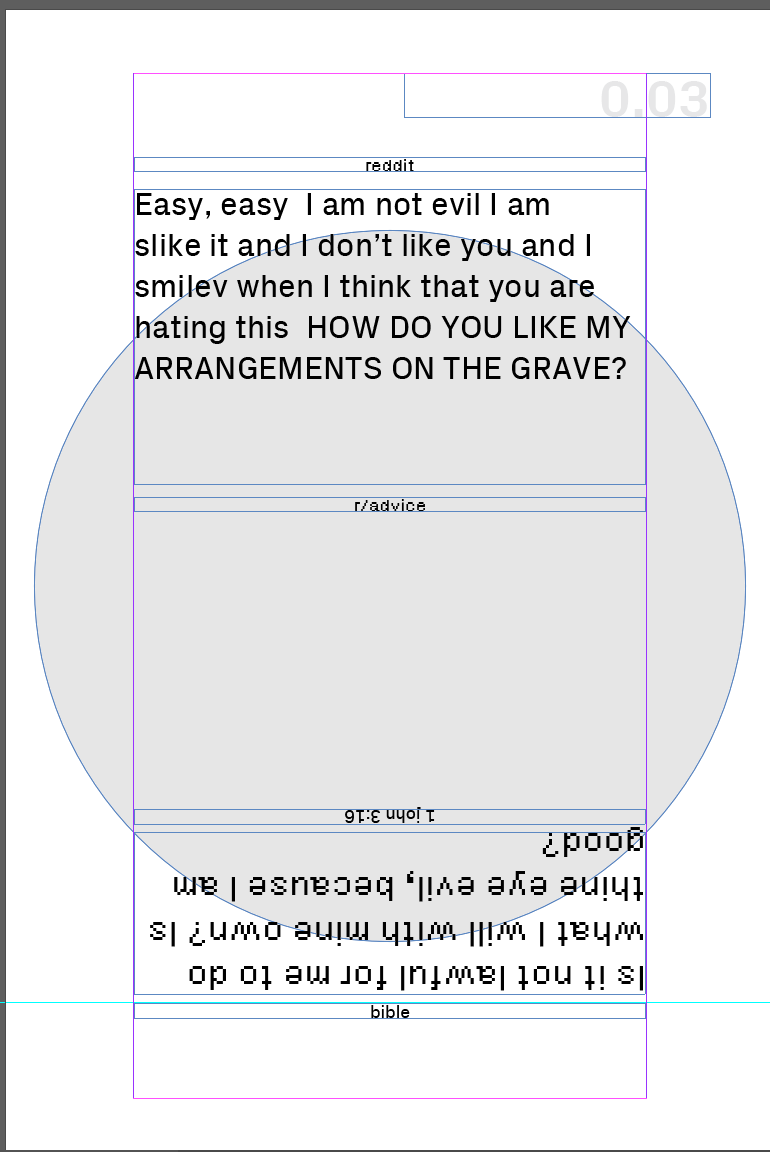

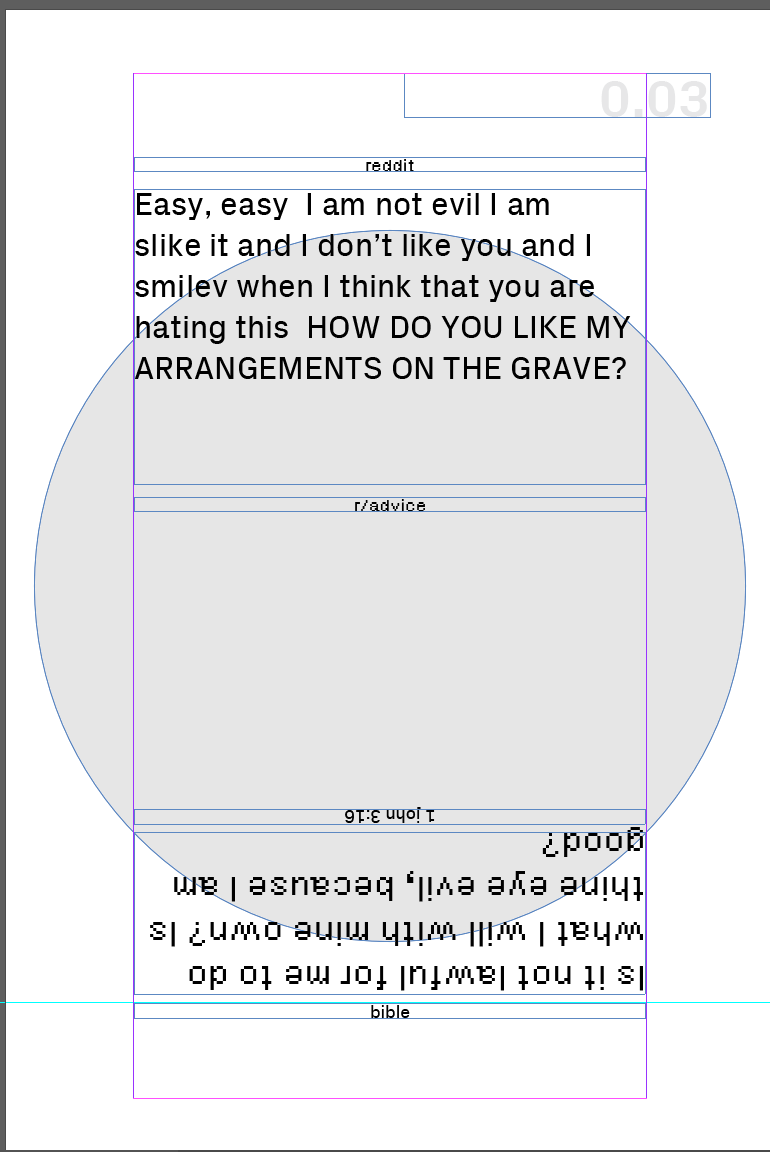

for(var i = 0; i < paragraphs2[0].text.length; i++) {

newPage();

b.fill(0);

b.textSize(12);

b.textFont("Archivo", "Regular");

b.textAlign(Justification.LEFT_ALIGN, VerticalJustification.TOP_ALIGN);

b.text("\t" + paragraphs2[0].text[i].substring(1), paragraphX, paragraphY,

paragraphW, paragraphH);

b.noStroke();

var imgName = imageName(paragraphs2[0].title);

var img = b.image(imgName, imageX, imageY, imageW, imageH);

img.fit(FitOptions.PROPORTIONALLY);

}

// Credit the original authors and photographers

newPage();

b.fill(0);

b.textSize(14);

b.textFont("Archivo", "Bold");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

b.text(paragraphs1[0].title + "ing", inch / 2.0, (b.height / 2.0) - (inch * 1.5),

b.width - inch, inch / 2.0);

var authors = generateCredits(paragraphs1[0].title);

b.textSize(12);

b.textFont("Archivo", "Regular");

b.textAlign(Justification.LEFT_ALIGN, VerticalJustification.TOP_ALIGN);

b.text("Original text and photos by:", inch / 2.0, (b.height / 2.0) - inch,

b.width - inch, 14.4);

b.text(authors.join(", "), inch, (b.height / 2.0) - inch + 14.4,

b.width - (inch * 1.5), inch - 14.4);

b.textSize(14);

b.textFont("Archivo", "Bold");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN);

b.text(paragraphs2[0].title + "ing", inch / 2.0, (b.height / 2.0),

b.width - inch, inch / 2.0);

authors = generateCredits(paragraphs2[0].title);

b.textSize(12);

b.textFont("Archivo", "Regular");

b.textAlign(Justification.LEFT_ALIGN, VerticalJustification.TOP_ALIGN);

b.text("Original text and photos by:", inch / 2.0,

(b.height / 2.0) + (inch * 0.5), b.width - inch, 14.4);

b.text(authors.join(", "), inch, (b.height / 2.0) + (inch * 0.5) + 14.4,

b.width - (inch * 1.5), inch - 14.4);

if(numPages % 2 != 0) {

newPage();

}

}

function newPage() {

b.addPage();

numPages++;

}
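
// Sketch, not part of the original script: setup() tests numPages % 2 in two
// places to decide whether a blank page is needed. The hypothetical helper
// below expresses the same parity check as a pure function. The name
// blankPagesNeeded and its parameters are assumptions made for illustration.
function blankPagesNeeded(pageCount, wantEven) {
  // true when the current page count is even
  var isEven = (pageCount % 2 == 0);
  // one blank page flips the parity; none is needed if it already matches
  return (isEven == wantEven) ? 0 : 1;
}
// Possible use at the end of setup(), assuming an even total is wanted:
//   if (blankPagesNeeded(numPages, true) == 1) { newPage(); }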

function imageName(assignment) {

var fileName = "";

while(usedImagesIncludes(fileName)){

if(assignment == "Neighborhood") {

var i = b.floor(b.random(neighborNames.length));

fileName = neighborNames[i] + b.floor(b.random(neighborNums[i]) + 1);

} else if(assignment == "Nature") {

var i = b.floor(b.random(natureNames.length));

fileName = natureNames[i] + b.floor(b.random(natureNums[i]) + 1);

} else if(assignment == "Trash") {

var i = b.floor(b.random(trashNames.length));

fileName = trashNames[i] + b.floor(b.random(trashNums[i]) + 1);

} else {

var i = b.floor(b.random(stuffNames.length));

fileName = stuffNames[i] + b.floor(b.random(stuffNums[i]) + 1);

}

}

usedImages.push(fileName);

return "images/" + assignment + "/" + fileName + ".jpg";

}

function usedImagesIncludes(fileName) {

for(var i = 0; i < usedImages.length; i++) {

if(usedImages[i] == fileName)

return true;

}

return false;

}
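
// Sketch, not part of the original script: imageName() keeps drawing random
// names until it finds one that is not in usedImages, so it would never return
// once every image of an assignment has been used. One possible alternative is
// to list the unused names first and draw from that list. The helper name
// pickUnusedName and its parameters are hypothetical. Note that this draws
// uniformly over the remaining files, whereas imageName() first picks a
// prefix and then a number, so the distribution differs.
function pickUnusedName(names, counts, used, rand) {
  var candidates = [];
  for (var i = 0; i < names.length; i++) {
    // files are assumed to be numbered 1..counts[i], as in imageName()
    for (var n = 1; n <= counts[i]; n++) {
      var candidate = names[i] + n;
      var seen = false;
      for (var j = 0; j < used.length; j++) {
        if (used[j] == candidate) {
          seen = true;
          break;
        }
      }
      if (!seen) {
        candidates.push(candidate);
      }
    }
  }
  // nothing left to draw: let the caller decide what to do
  if (candidates.length == 0) {
    return null;
  }
  // rand is assumed to return a number in [0, 1), like Math.random
  return candidates[Math.floor(rand() * candidates.length)];
}
// Possible use (hypothetical):
//   var name = pickUnusedName(natureNames, natureNums, usedImages, Math.random);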

function generateCredits(assignment) {

if(assignment == "Neighborhood") {

return ["Sebastian Carpenter", "Danny Cho", "Sophia Fan", "Alice Fang", "Margot Gersing",

"Jenna Kim", "Julia Nishizaki", "Michael Powell", "Jean Zhang"];

} else if(assignment == "Nature") {

return ["Sebastian Carpenter", "Daniela Delgado", "Alice Fang",

"Michael Powell", "Sabrina Zhai"];

} else if(assignment == "Trash") {

return ["Sebastian Carpenter", "Eunice Choe", "Julie Choi", "Daniela Delgado", "Alice Fang",

"Mimi Jiao", "Michael Powell", "Sabrina Zhai", "Emily Zhou"];

} else {

return ["Sebastian Carpenter", "Daniela Delgado", "Michael Powell",

"Sabrina Zhai", "Vicky Zhou"];

}

}

b.go();