// kernels[col][row] holds one Kernel per grid cell; filled by loadKernels().
var kernels = [];

var activeKernels;

var colSize;

var rowSize;

var img;

var kern1;

var kern2;

var kern3;

var kernMask;

var randDelay;

var t = 0;

var blink = 0;

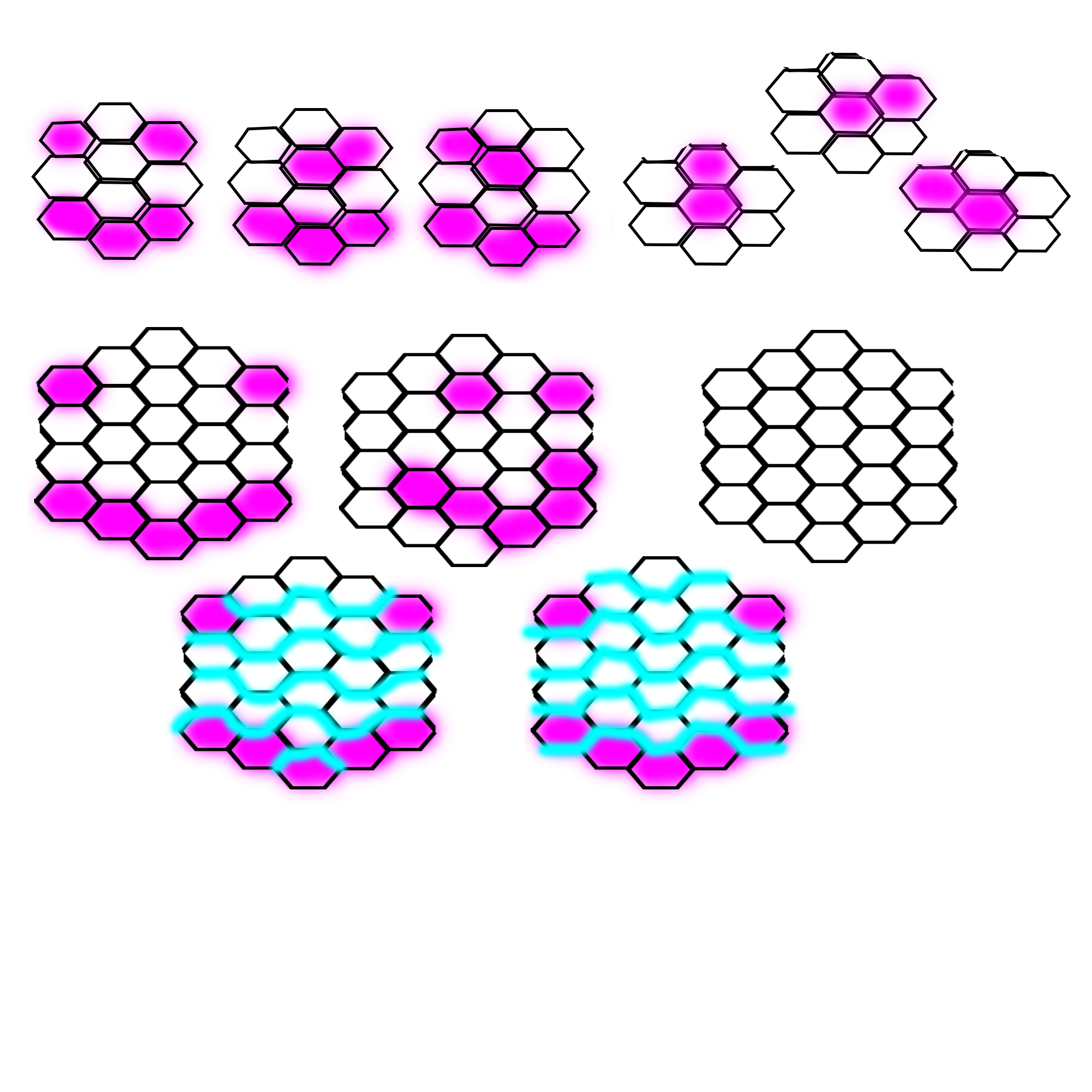

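// faces[size][parity] lists the [colOffset, rowOffset] kernels that make up a face.
// size: 0 = default, 1 = large, 2 = small (chosen in draw()).
// parity: 0 for odd columns, 1 for even columns (see drawFace()).
// Offsets with a negative rowOffset are hidden while blinking.
var faces = [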

[

[

[1, -1],

[-1, -1],

[-1, 1],

[0, 1],

[1, 1]

],

[

[1, -2],

[-1, -2],

[1, 0],

[-1, 0],

[0, 1]

]

],

[

[

[2, -2],

[-2, -2],

[-2, 1],

[-1, 2],

[0, 2],

[1, 2],

[2, 1]

],

[

[2, -2],

[-2, -2],

[-2, 1],

[-1, 1],

[0, 2],

[1, 1],

[2, 1]

]

],

[
    [[0, 0], [0, -1]],
    [[0, 0], [1, -1]]
]

];

var rat;

function preload() {

img = loadImage("assets/corn_0.png");

kern1 = loadImage("assets/corn_1.png");

kern2 = loadImage("assets/corn_2.png");

kern3 = loadImage("assets/corn_3.png");

kernMask = loadImage("assets/corn_matte.png");

}

var ctracker;

function setup() {

// setup camera capture

randDelay = random(50,200);

var videoInput = createCapture();

videoInput.size(400, 300);

videoInput.position(0, 0);

// setup canvas

var cnv = createCanvas(800, 800);

rat = 2000 / width;

rowSize = height / 16;

colSize = width / 11;

loadKernels();

cnv.position(0, 0);

// setup tracker

ctracker = new clm.tracker();

ctracker.init(pModel);

ctracker.start(videoInput.elt);

noStroke();

}

function draw() {

push();

translate(width,0);

scale(-1,1);

clear();

strokeWeight(5);

image(img, 0, 0, width, height);

//image(kern1,0,0,width, height);

//image(kernels[ck[0]][ck[1]].img,0,0,width,height);

// get array of face marker positions [x, y] format

var positions = ctracker.getCurrentPosition();

var p = positions[62];

var q = positions[28];

var r = positions[23];

// for (var i = 0; i < positions.length; i++) {
// stroke("black");
//if (i == 23) stroke("green");
// set the color of the ellipse based on position on screen
//fill(map(positions[i][0], width * 0.33, width * 0.66, 0, 255), map(positions[i][1], height * 0.33, height * 0.66, 0, 255), 255);
// draw ellipse at each position point
//ellipse(positions[i][0], positions[i][1], 8, 8);
// }

if (p != undefined) {
    var d = dist(q[0], q[1], r[0], r[1]);
    var size = 0;
    if (d > 110) size = 1;
    if (d < 40) size = 2;
    var ck = getCoors(map(p[0], 0, 255, 0, 800), map(p[1], 0, 255, 0, 800));
    drawFace(ck[0], ck[1], size);
}

pop();

if (t >= randDelay) {

t=0;

blink=7;

randDelay = random(50,200);

}

t++;

if (blink>0) blink--;

print (p);

}

// One kernel cell of the grid. Columns are staggered: even columns sit half a row higher.
function Kernel(col, row) {

this.col = col;

this.row = row;

this.x = colSize * (col + 0.5);

this.y = rowSize * (row + 0.75);

this.upCol = col % 2 == 0;

if (this.upCol) this.y -= rowSize / 2;

this.img = img;

if (col % 2 == 0) {

if (row % 3 == 0) this.img = kern2;

if (row % 3 == 1) this.img = kern3;

if (row % 3 == 2) this.img = kern1;

} else {

if (row % 3 == 0) this.img = kern1;

if (row % 3 == 1) this.img = kern2;

if (row % 3 == 2) this.img = kern3;

}

this.show = function() {

var l = this.x - colSize;

var gLeft = l * rat;

var t = this.y - rowSize;

var gTop = t * rat;

var w = colSize * 2;

var gWidth = w * rat;

var h = rowSize * 2;

var gHeight = h * rat;

var dis = this.img.get(gLeft, gTop, gWidth, gHeight);

dis.mask(kernMask);

image(dis, l, t, w, h);

};

}

function loadKernels() {

var col = [];

for (var c = 0; c < 11; c++) {

for (var r = 0; r < 16; r++) {

col.push(new Kernel(c, r));

}

kernels.push(col);

col = [];

}

}

function getCoors(x, y) {

var col = x / colSize - 0.5;

// Clamp the column first so the stagger test below uses the column that is actually returned.
if (col < 0) col = 0;

if (col > 10) col = 10;

if (round(col) % 2 == 0) y += rowSize / 2;

var row = y / rowSize - 0.75;

if (row > 15) row = 15;

if (row < 0) row = 0;

return [int(round(col)), int(round(row))];

}

function drawFace(col, row, size) {

var face = faces[size][0];

if (col % 2 == 0) face = faces[size][1];

var p;

var c = 0;

var r = 0;

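for (var i = 0; i < face.length; i++) {
    p = face[i];
    c = int(col + p[0]);
    r = int(row + p[1]);
    // Draw only kernels inside the 11 x 16 grid; while blinking, skip offsets with a negative row.
    if ((c >= 0 && r >= 0 && c < 11 && r < 16) && !(blink != 0 && p[1] < 0)) kernels[c][r].show();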

}

}