Feedback on FaceOSC

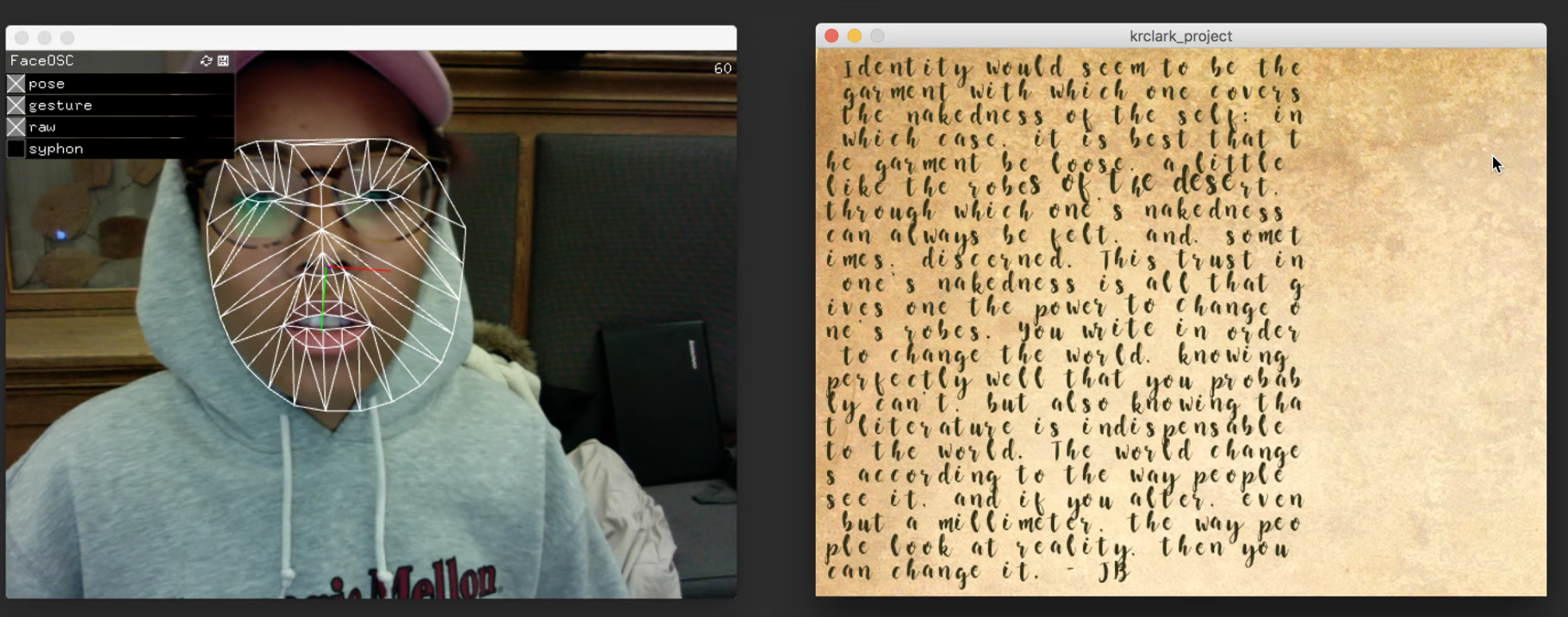

For this project, students were asked to develop a piece of “face-controlled software” using FaceOSC, a high-quality real-time face tracker. Most students developed their projects in Processing.

External Reviewers

Our reviewers for this assignment were Kyle McDonald and Caroline Record.

Caroline Record is an artist and technologist who uses code to create her own artistic systems. These systems are at once clever and sensual, incorporating extreme tactility with ephemeral, abstract logic. Caroline works in Pittsburgh as an independent artist and freelance consultant.

Kyle McDonald is an artist who works in the open with code. He is a contributor to arts-engineering toolkits like openFrameworks, and spends a significant amount of time building tools that allow artists to use new algorithms in creative ways. His work is very process-oriented, and he has made a habit of sharing ideas and projects in public before they’re completed. He enjoys creatively subverting networked communication and computation, exploring glitch and embedded biases, and extending these concepts to reversal of everything from personal identity to work habits.

aliot

aliot-faceosc: a face-controlled eating game

Caroline Record: It’s a satisfying and intuitive interaction. Good attention to detail with the scores and assets. I want some more campy grotesque detail. Perhaps some drool and teeth?

Kyle McDonald: Funny idea and reasonable execution. “Better” graphics are less important than “consistent” graphics. The ellipse and the white default font are the only things making it feel like a demo.

anson

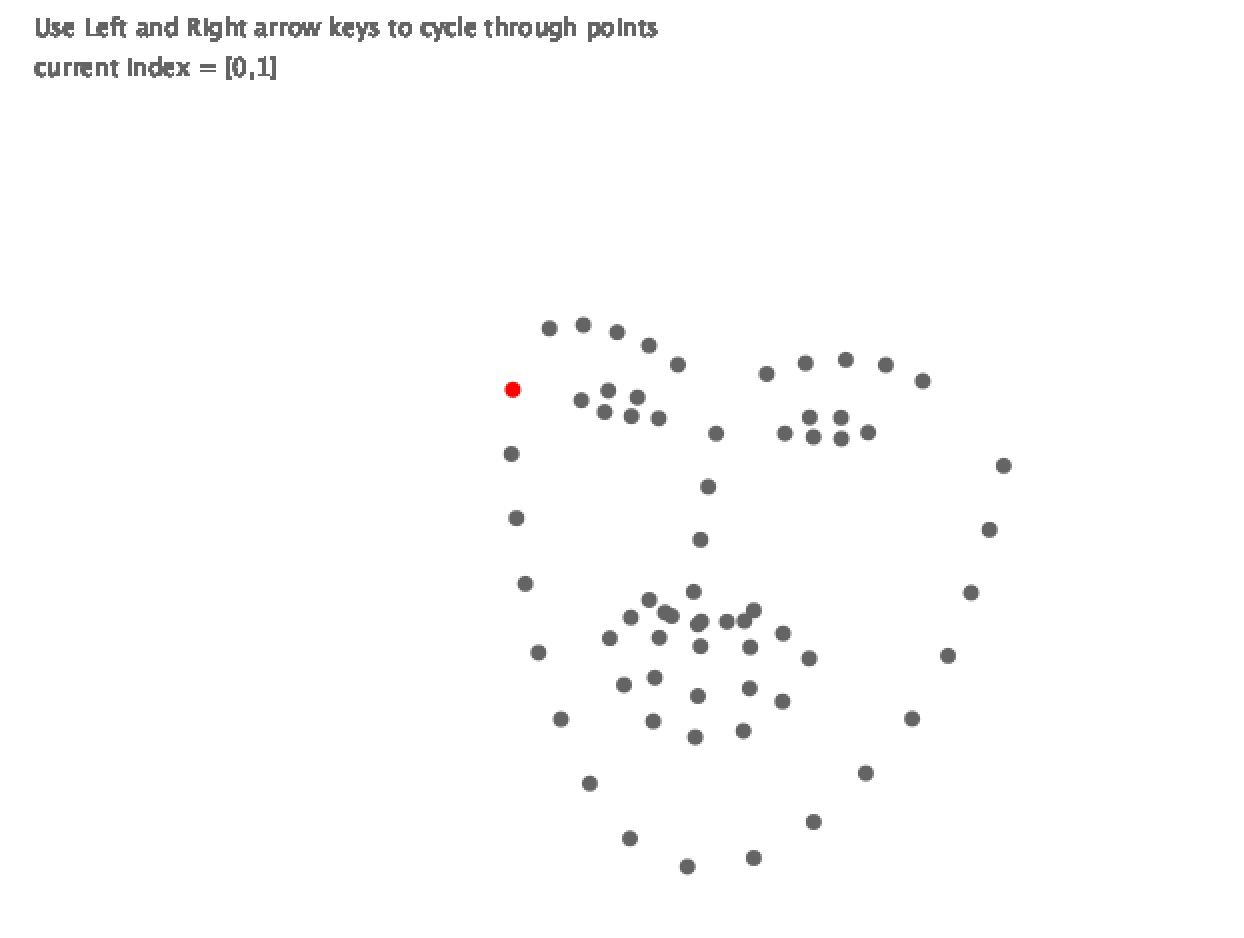

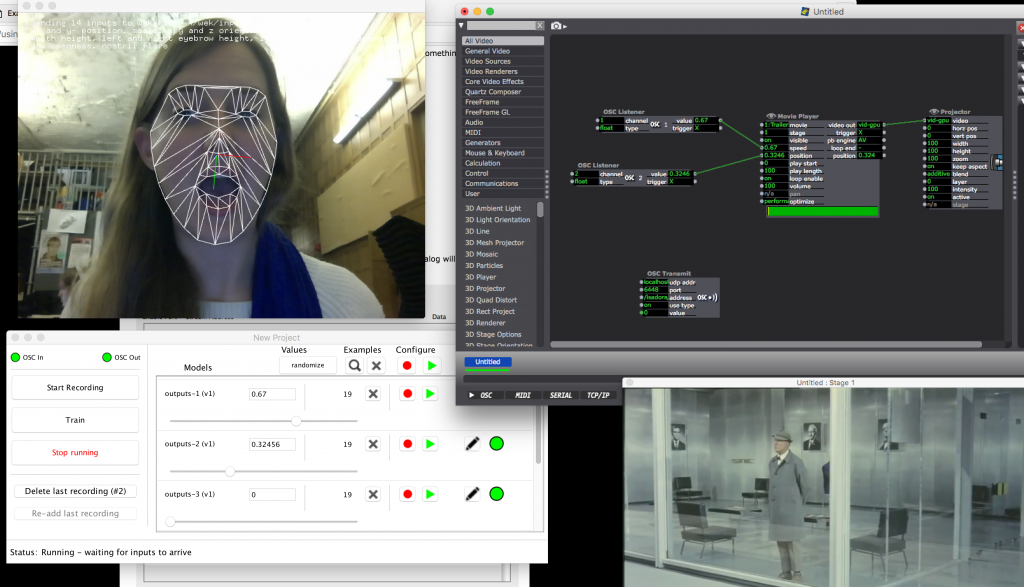

anson-FaceOSC: video playback articulated by facial expression

Caroline Record: I want to know more about how you envision this existing as an experience in the world. Perhaps a sensorial installation? An internet meditation tool? The connection between the mouth opening and the waves coming in is intriguing, but not intuitive and seems unconsidered.

Kyle McDonald: No relationship between gesture and experience/content. Technically, Wekinator is better suited for recognizing more complex gestures than a mouth opening.

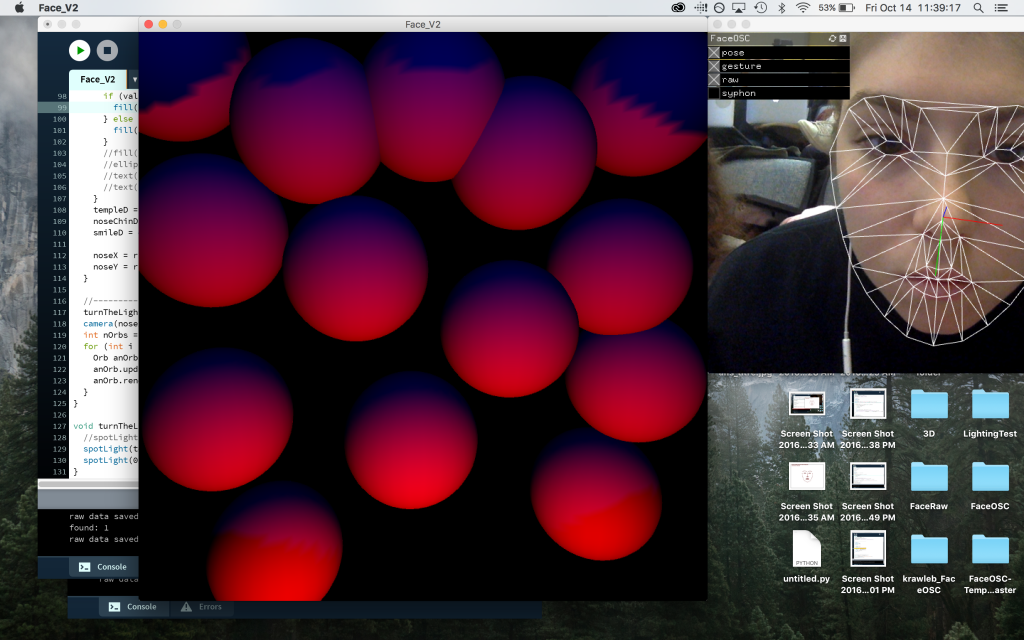

antar

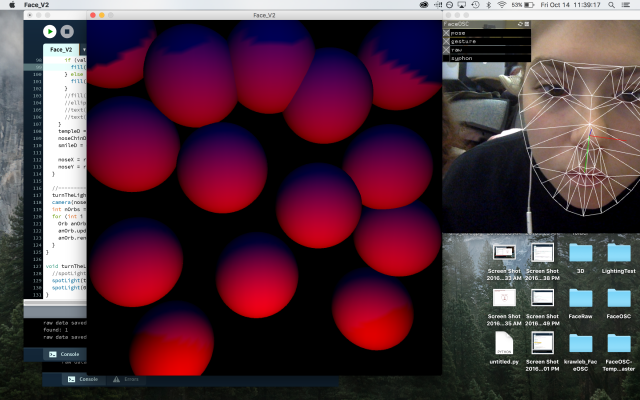

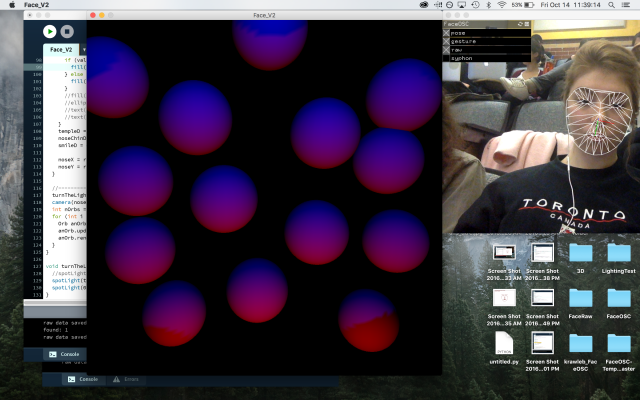

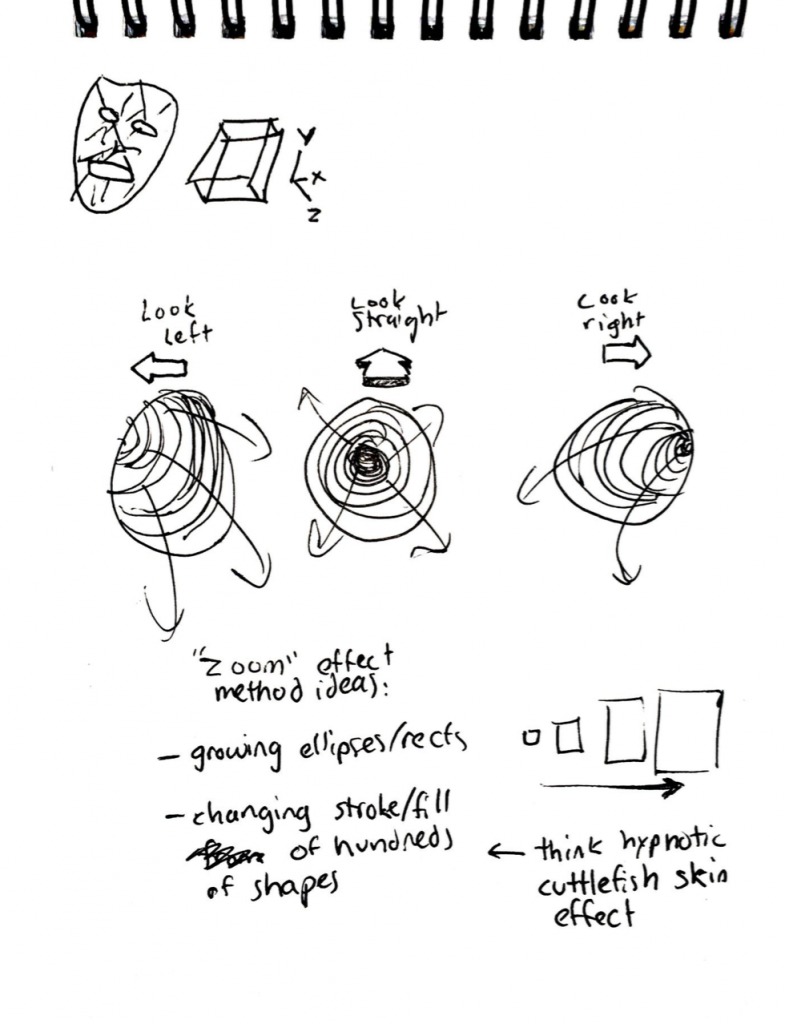

Antar-FaceOSC: face-controlled 3D spheres

Caroline Record: Nice jiggling. The lighting and volumetric nature of this reminds me of Robert Hodgin’s Body Dysmorphia project. It would have been interesting to use the z dimension more dynamically and perhaps apply some simple physics based on the x and y position.

Kyle McDonald: Good starting point, clean code. Needs to be explored further visually and conceptually. Orbs are in an ambiguous zone between “abstract living creature” and “minimally-designed demo”, and need to go harder in one or both directions.

arialy

arialy-faceosc: first-person face-controlled navigation experience

Caroline Record: Nice pulsing and sound design. I think it would have much improved the project to have the face directly control something other than a neutral white circle: something that fits within the landscape and would scare away the fireflies if aggravated.

Kyle McDonald: Good concept (stillness). Needs more visual work; there’s no reason non-generative/interactive elements like the background need to be drawn with Processing, since they could be imported as an image. Use of audio amplitude is subtle and effective.

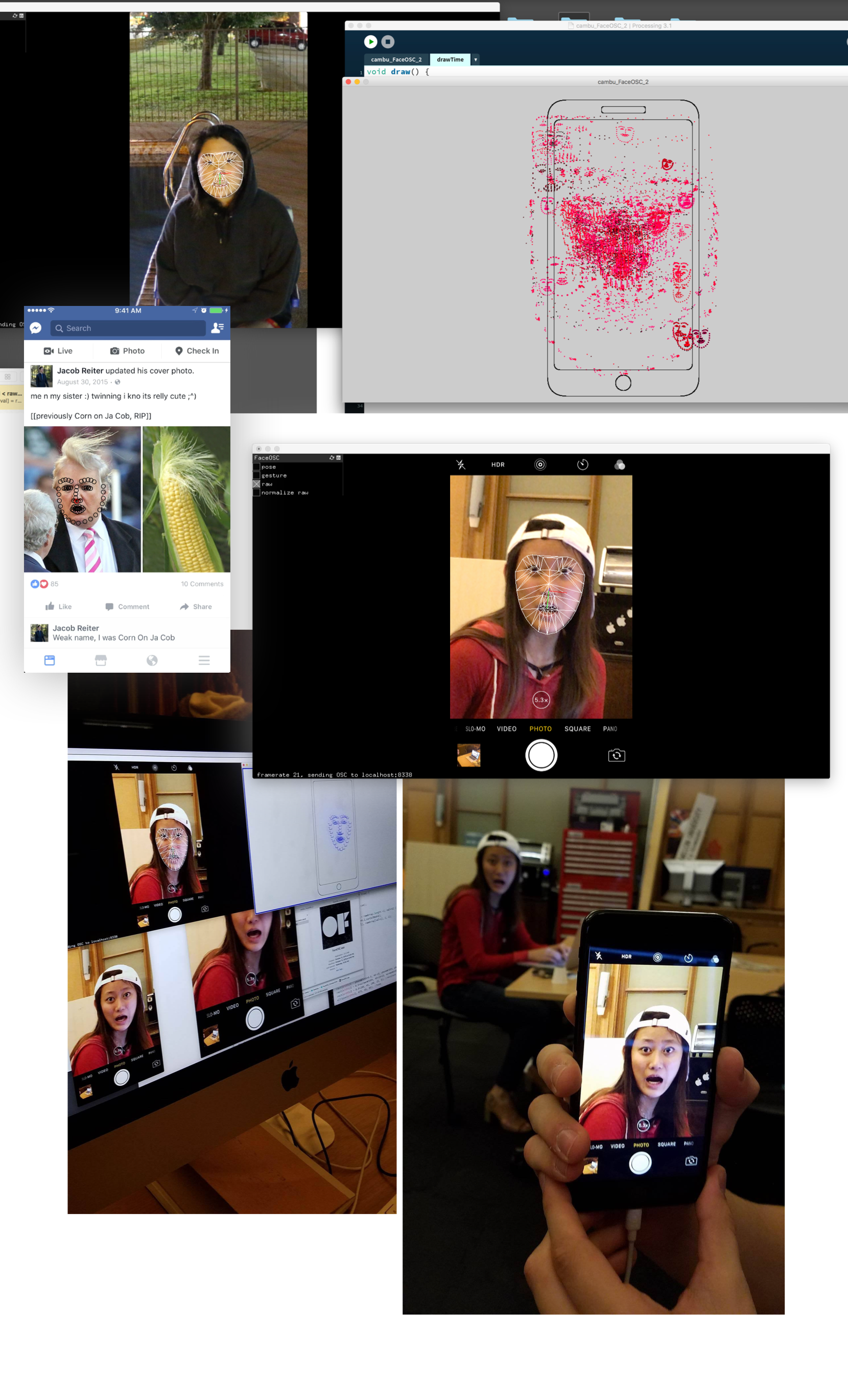

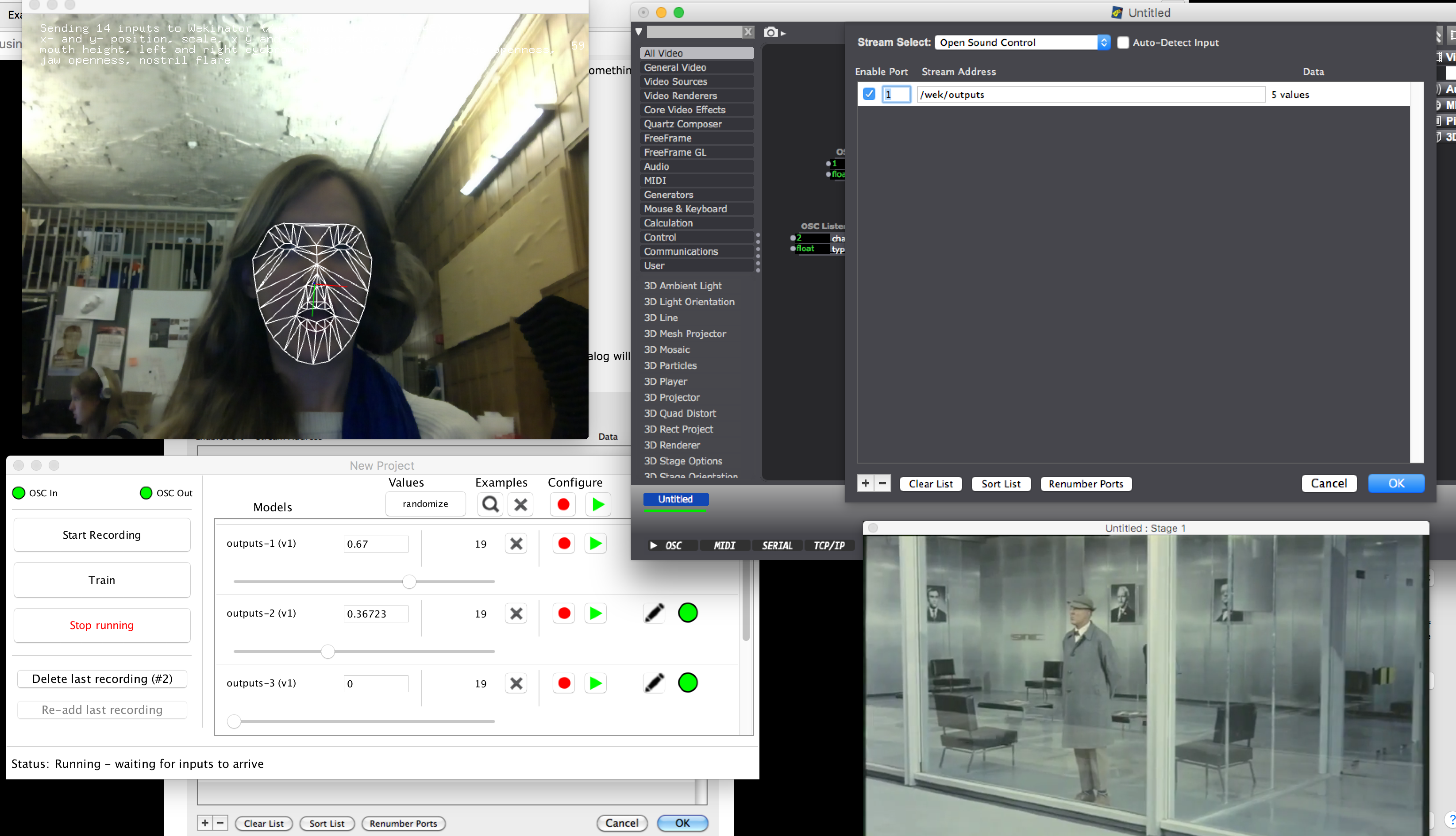

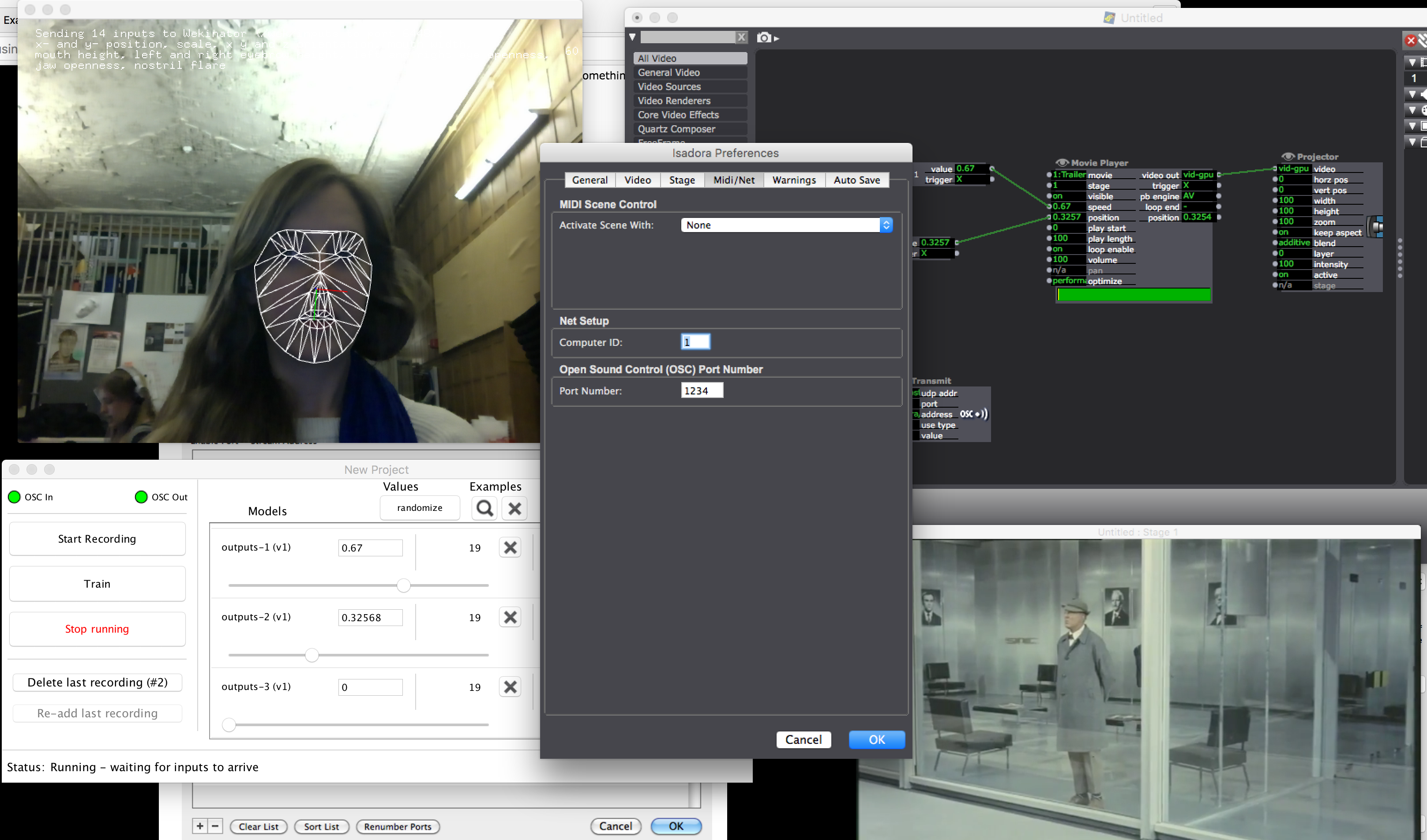

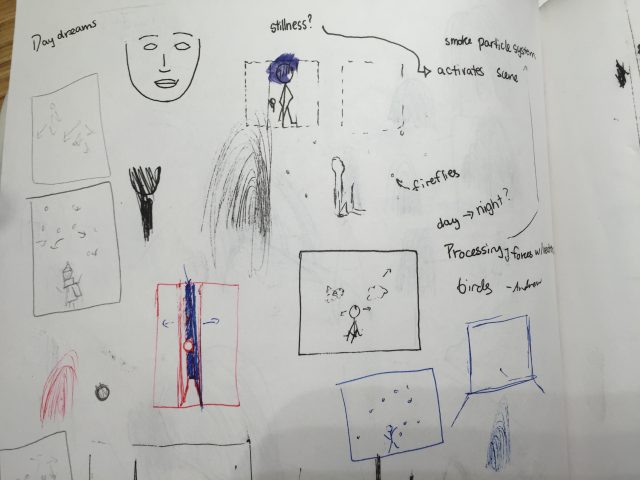

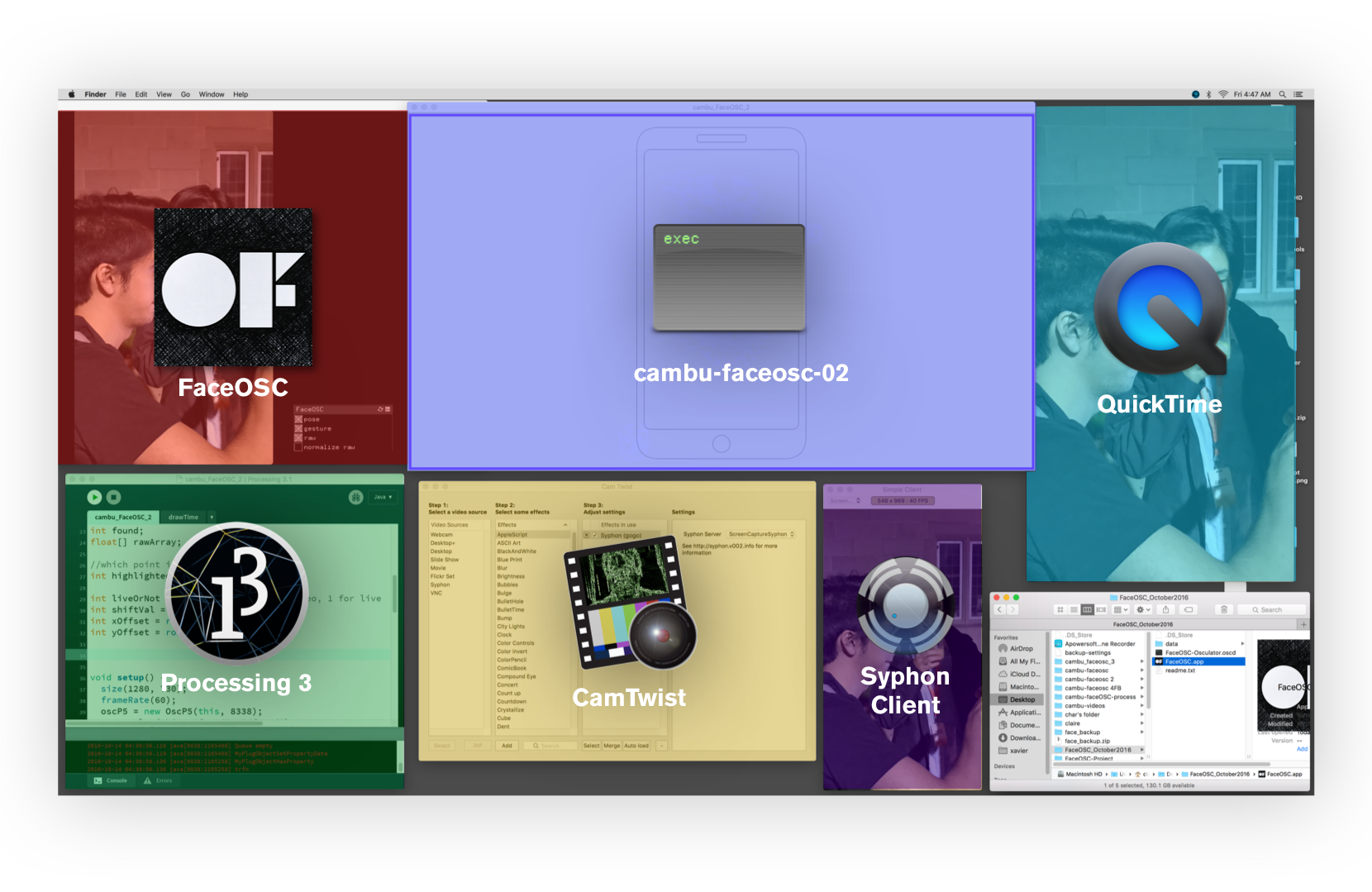

cambu

cambu-faceosc: examination of faces encountered during Facebook browsing

Caroline Record: It took me a bit to figure out what was happening here, and I think you could refine how you tell the story of this piece. However, I think that it is a very solid technical and conceptual investigation. It reminds me of finger smudges left on phone screens. I would experiment more with how those residual face prints are expressed visually.

Kyle McDonald: Good concept, good use of chaining software. Difficult to interpret the significance/meaning from sample videos alone, and still retains a bit of “demo aesthetics”. Technically, this is a situation where offline processing would work better, recording a video from the phone and later detecting the faces.

catlu

Catlu–FaceOSC: face-controlled abstract avatar

Caroline Record: I second your self-assessment of this project. It feels like you fulfilled this assignment with no verve.

Kyle McDonald: Uninteresting concept, but the mechanism is well executed. Needs visual (and maybe sound) work, but more importantly needs a context or rationale for producing this interaction.

darca

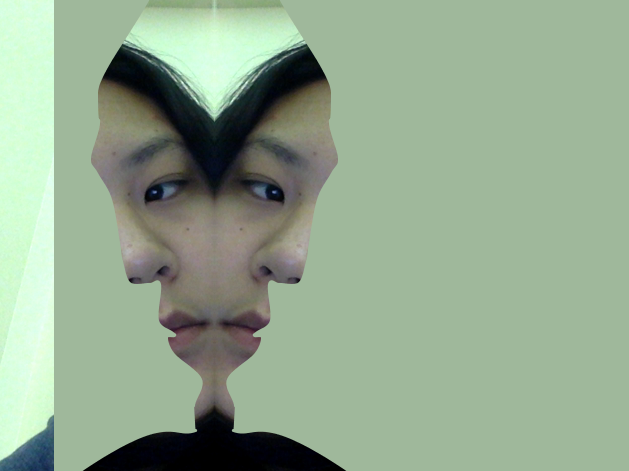

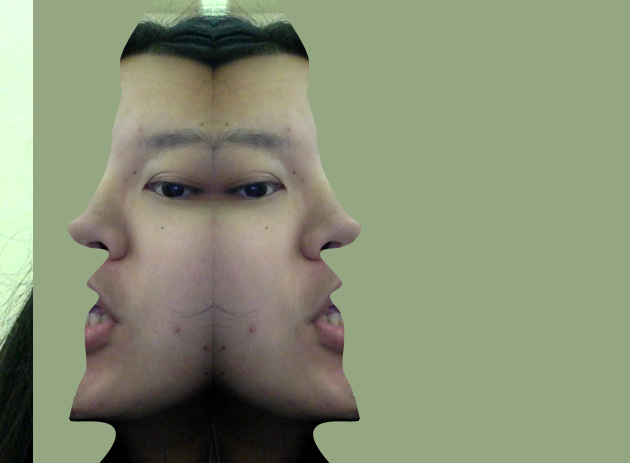

Darca-FaceOSC: profile-play: an ambiguous transformation of the face

Caroline Record: Lovely and subtle play on negative space and simulating the shape falling over the form of the face. Nice detail of having the overlay match the background color. I think you could have done without the mouth interaction, as it interrupts the central mechanic. You need more written documentation.

Kyle McDonald: Well done, but the way the mirror effect breaks down on the edges is very visually distracting. Part of a long tradition exploring the boundary between the portrait/frontal and profile perspectives of a face. Lovely forms, and the few hand experiments show that you’re watching for the unexpected outcomes.

drewch

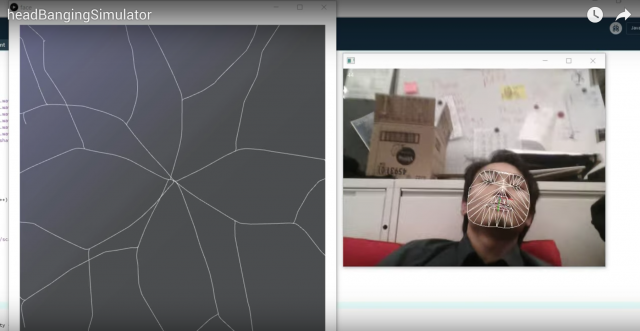

Drewch – FaceOSC: face-controlled “head-banging simulator”

Caroline Record: I think this is a delightfully irreverent project where the interaction is intuitive and makes sense in the context of the laptop screen. It would be improved by running full-screen, grabbing the texture of the entire desktop, and overlaying the cracking pattern on top of it.

Kyle McDonald: Made me laugh. The graphics are simple but effective. Documentation would have been better with the app fullscreen, and a handheld video showing someone headbanging.

guodu

guodu-faceosc: face-driven accretive painting system

Caroline Record: What is strong about Text Rain is the direct link between the physical silhouette and the virtual letters. The link between the face movement and the text on the screen is very indirect here. It could have done with drawing an abstract avatar of the face or having the previous words fade away. The post needs more written context.

Kyle McDonald: Totally unnecessary that it was made with face tracking, but the quality of some results make up for the lack of concept.

hizlik

hizlik-faceosc: first-person face-driven starfield navigation

Caroline Record: Pristine and self-contained project. It would be worthwhile to investigate translating this into a VR experience. It was unclear what choice you were making with drawing the raw points in the upper left corner of the screen. Perhaps you can center this and make it more visually distinct from the background marks. You could even abstract this to a set of shapes reaching into space.

Kyle McDonald: Impressed by the simplicity of the code. Headmounted GoPro would be a great way to communicate the experience. Graphics are simple and effective.

jaqaur

Jaqaur – FaceOSC: face-responsive baby character simulation

Caroline Record: This is a very clever interpretation of this assignment, with very comprehensive documentation. Even though there are only a couple of interactions, it stands on its own. If you haven’t seen it, look at Rosalind Paradis’s Park Walk project.

Kyle McDonald: Great to see the iteration from your initial idea to the final one. Good to see you break away from the “puppeteering” approach. Hopefully this basic concept will stick with you until you find exactly the right way to use it for something more compelling.

kadoin

kadoin-FaceOSC: face-controlled, sound-responsive 3D robot head avatar

Caroline Record: Playful, well documented, and good craftsmanship. Nice attention to detail on the illuminated spheres and soundwave mouth. I think you might enjoy Dan Wilcox’s Robot Cowboy performances.

Kyle McDonald: Hilarious, and while it’s not visually or conceptually groundbreaking the dedication & attention to detail (and not straying from the simple aesthetic) makes it work. Perfect starting point for a funny interactive music video. You need to port your project to a single-serve website with clmtrackr and p5.js. So good.

kander

kander – FaceOSC: paint program for face-controlled horizontal lines

Caroline Record: This needs to be stepped up a few notches. I would like to see a more compelling set of compositional elements and a more intuitive interaction that could conceivably be discovered with little to no explanation. It needs to answer the question: why make a drawing tool for the face? For the performative nature of it? For the variety of input possibilities? For people who can’t use their arms?

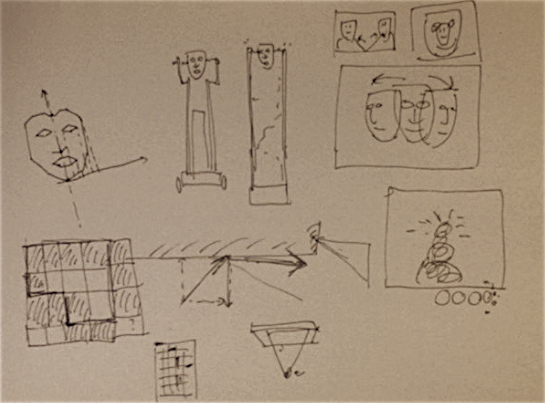

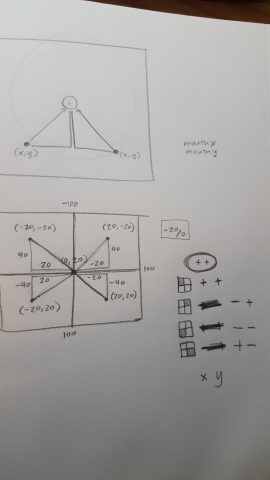

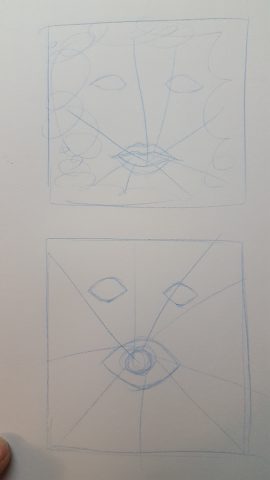

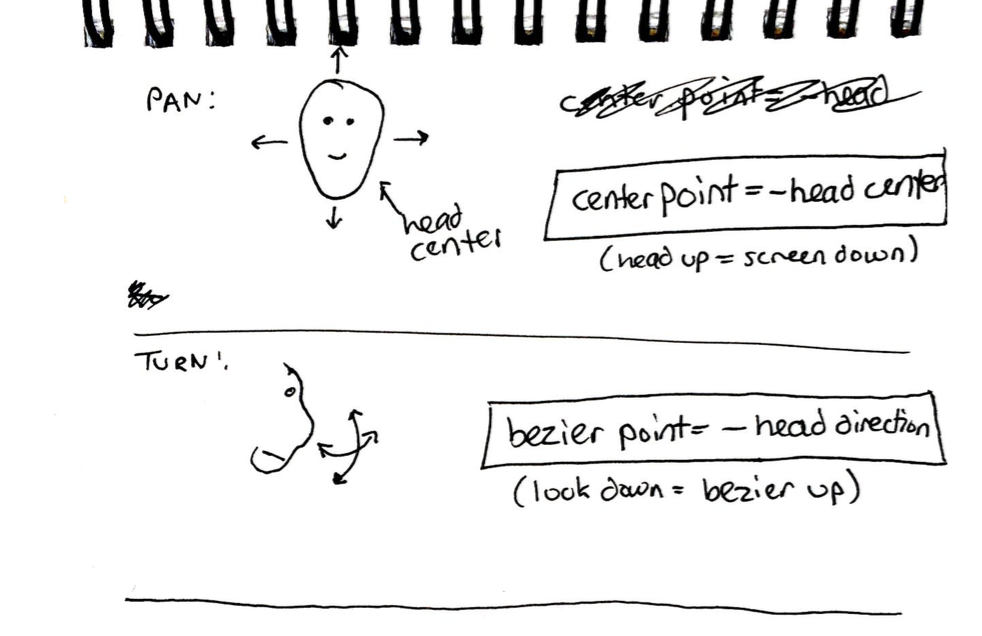

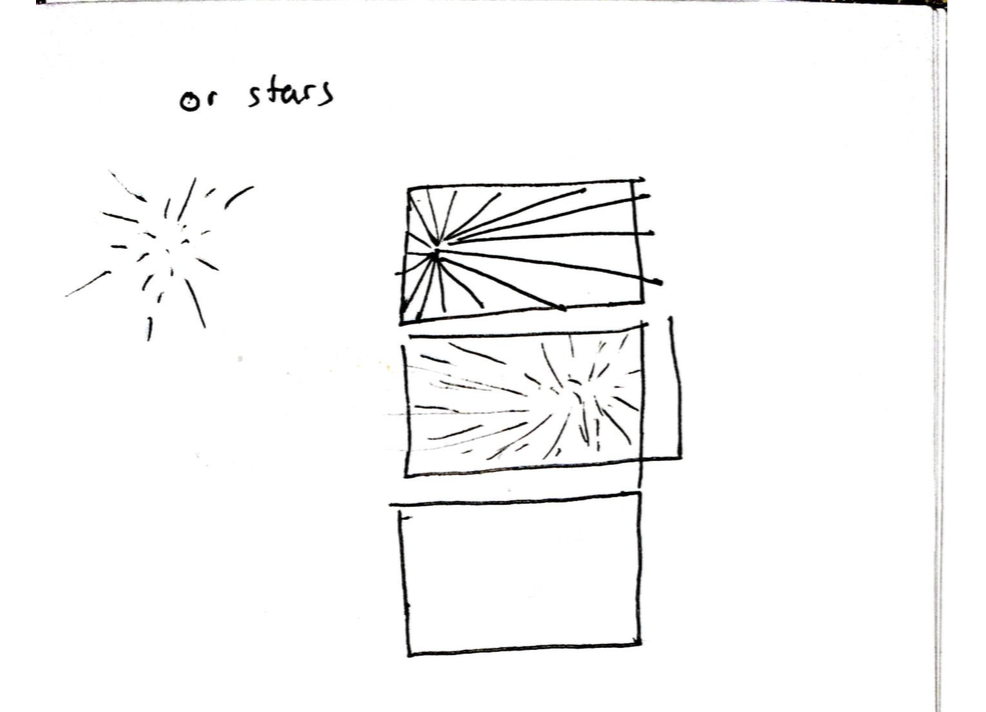

Kyle McDonald: Some interesting visual ideas in your sketchbook, but doesn’t seem like any of them made it into the work. It feels rigid and unnatural, like it would be better to use a tablet or other interface for this kind of simultaneous control of multiple variables. Lots of low-hanging fruit was not explored, such as different shapes, or continuous drawing instead of stamp-like line placement.

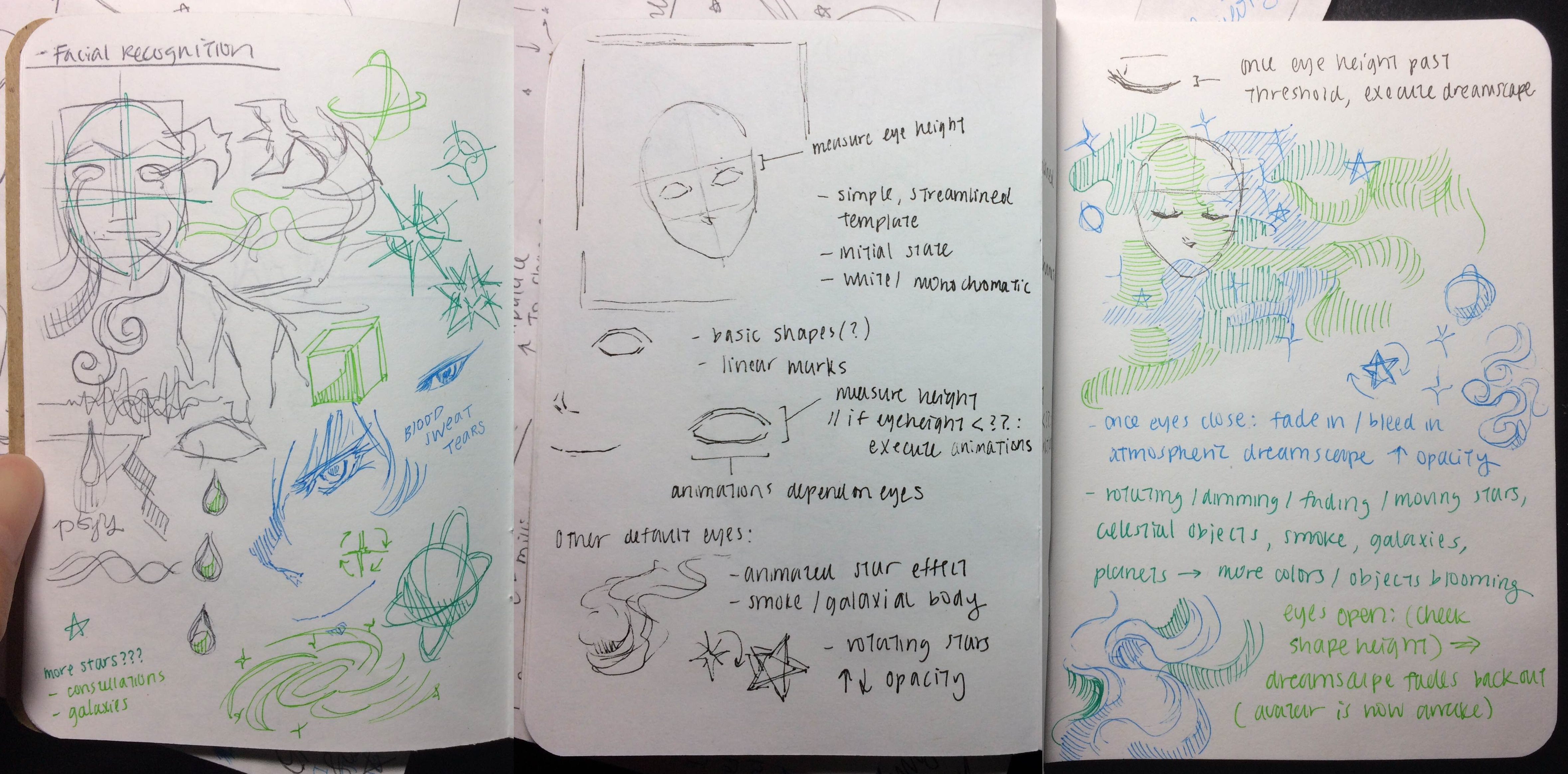

keali

Keali-FaceOSC: face-controlled starfield

Caroline Record: Strong basic mechanic of switching environments between the tranquil sleeping mind and the alert wakeful one, and thorough documentation. However, I think you could easily have been more playful, miming sleeping and then wakefulness. It is ironic that the more developed composition occurs when the eyes are closed. Details that need attention: the transition between night and day, and why the wakeful eyes are present but the sleeping ones are not.

Kyle McDonald: Well documented, well executed, visually clean, but overall the interaction is not compelling to me.

kelc

Kelc – FaceOSC: face-driven interactive typography

Caroline Record: Nice styling on the quivering text. I like how you can navigate through it highlighting different phrases in a nonlinear fashion. As of now this project leaves a lot to be desired in terms of documentation and context. For instance the title “Face Text” could use some more attention and consideration.

Kyle McDonald: Good attention to some details, but the text is very difficult to read, and the concept feels like a starting point for something more compelling. Needs a few more iterations of experimentation.

krawleb

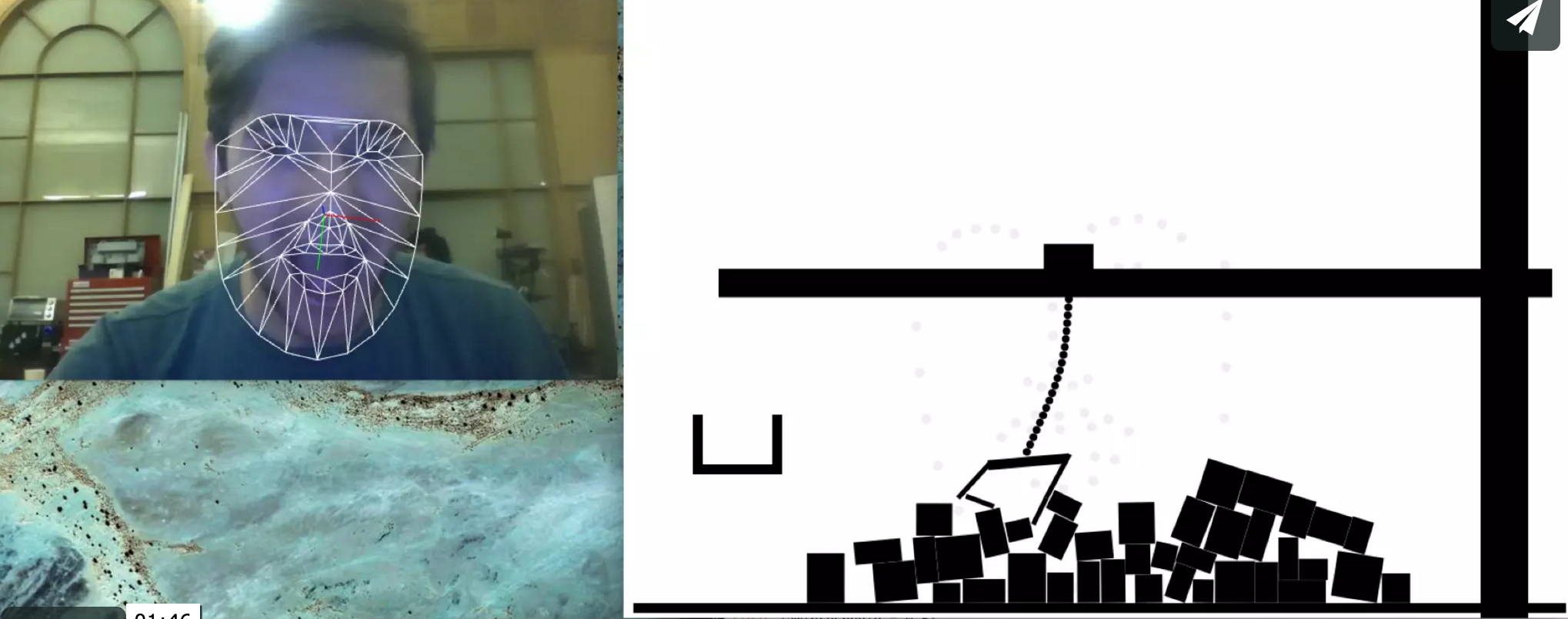

Krawleb-FaceOSC: face-driven physics game

Caroline Record: Well done! Gold star for creating such a feature-complete game with thoughtful physics for each element. It is suspenseful and entertaining to watch. Good choice mapping the pincers to the mouth movement. It is enough of an intuitive interaction without losing its delightfulness.

Kyle McDonald: Great experiment combining elements of puppeteering with gameplay. Looks incredibly frustrating and slightly addictive. Refine the graphics a little (not too much!) and install this somewhere around school with high score photos.

lumar

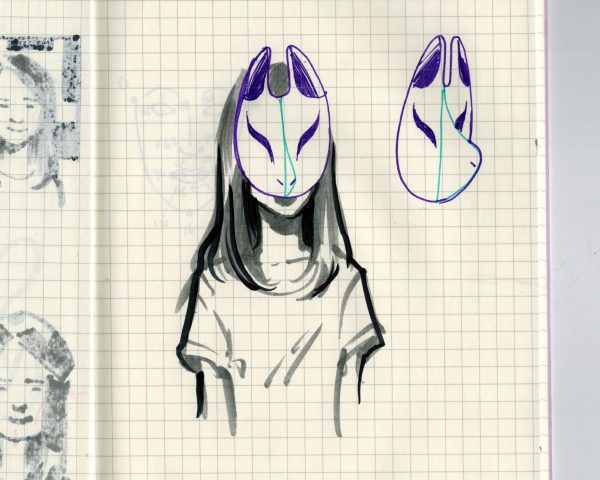

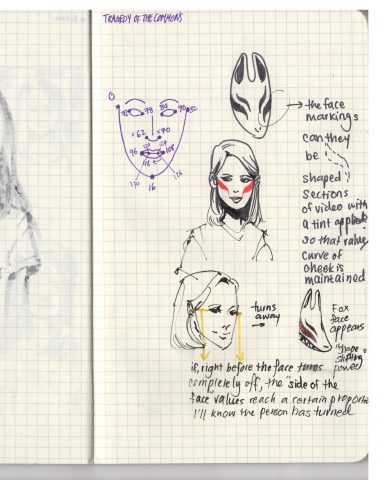

Lumar – FaceOSC: face-driven costume overlays and effects

Caroline Record: Imaginative project, with playful and thorough documentation. It succeeds in creating a character that has engaging characteristics and abilities. The appearance of the full mask feels too abrupt and a bit glitchy right now. Perhaps a quick transition would be helpful, or having it appear when the eyes are closed instead of the current interaction.

Kyle McDonald: Really thorough exploration of the possibilities, good combination of concept & visuals. If the mask is meant to be surprising and out of the periphery, it shouldn’t vibrate so much, and perhaps should fade in instead of blinking in (which draws too much attention to it)? “Lumar” reminds me of Karolina Sobecka, with sketches and interest in subtle perception. So thorough. I hope Lumar has an opportunity to share their work with more people soon. Lumar might need to get out of her own headspace a little and see what other people experience.

ngdon

ngdon-FaceOSC: face-driven first-person shooter & 3D navigation environment

Caroline Record: Well designed and excellently executed. My only suggestion is to experiment with how the stream of circles originate out of the mouth. Perhaps they can bubble out or start at the bottom, as they do now, and directly pass through the location of the mouth.

Kyle McDonald: Excellent minimal aesthetic, well-tuned responsiveness from the game. The names over the NPCs heads make me wonder what this would be like if it were networked. Attention to details like the falling-over animations is well done. The creator of this work seems like a machine — they should be paired up with someone else who can work conceptually & visually with them, or they need to continue pursuing their minimalism and own it hardcore.

takos

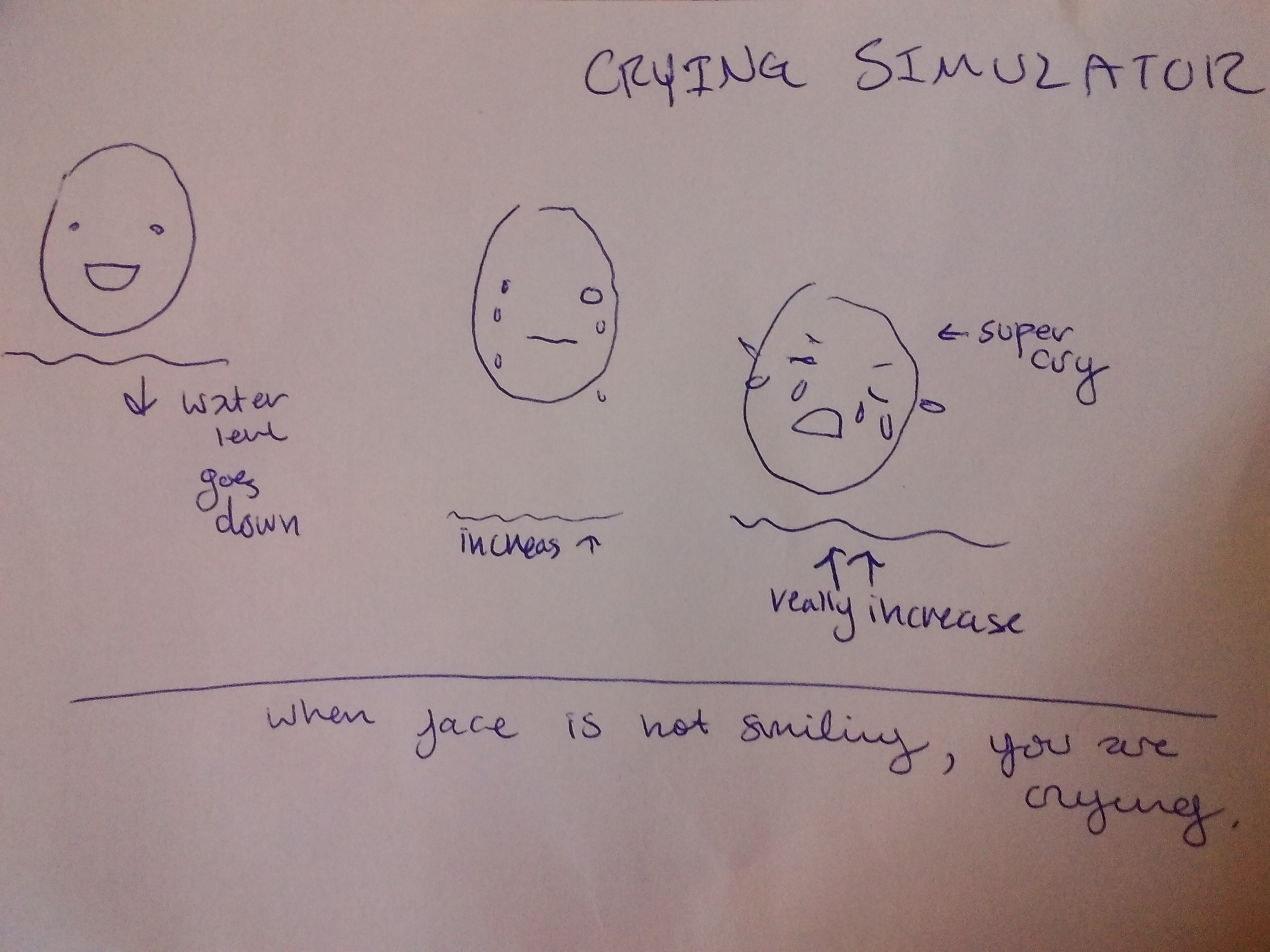

takos-faceosc: simulation driven by facial expression, themed on crying

Caroline Record: There is a meaningful mapping from the face to the visuals. It could be refined by playing with how you represent the eyes in the sketch and the physics of how the tears fall out of them.

Kyle McDonald: Good starting point with thinking about crying, but feels like it’s barely made it to the first step. Needs more iterations. Not adding anything interesting beyond combining three prepackaged things (FaceOSC, particle generator, water simulator).

tigop

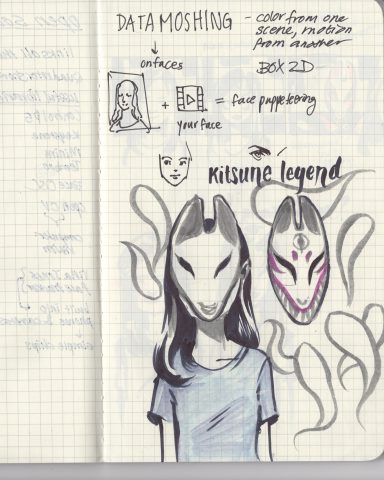

tigop-faceosc: character controlled by the face

Caroline Record: Plushulu has personality and considered visual and audio choices. The only perfectly smooth shape is the mouth, which appears to be an ellipse. Perhaps this could have also been styled more like the rest of the character.

xastol

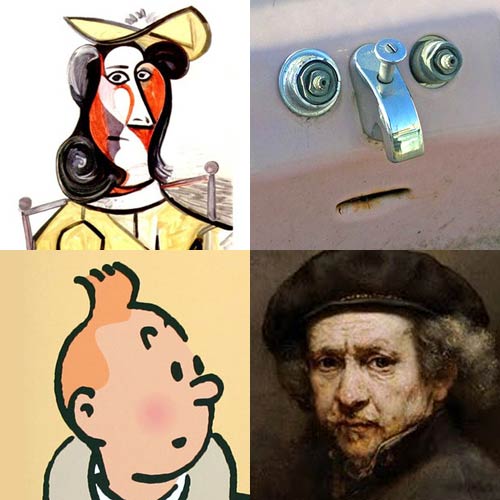

Xastol – FaceOSC: elliptic reinterpretation of the face

Caroline Record: This project needs more time and love. Right now it appears to be dangerously close to the bare minimum.

Kyle McDonald: Working with only circles could be a challenging and compelling design decision, but here it fails. Needs a few more iterations before vaguely interesting. Look into other random faces and masks like Munari’s, or Zach Lieberman’s masks, or Mokafolia’s faces.

zarard

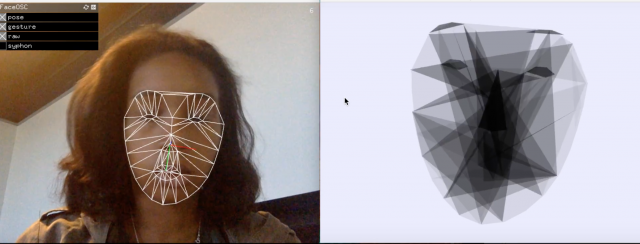

Zarard-FaceOSC: abstract reinterpretation of the user’s face

Caroline Record: I am not seeing the illusionistic quality from the references you described. The display method is an important detail here: displaying it through Pepper’s ghost or projecting it into smoke could make a big difference. Look up the history of the phantasmagoria for some historical references and some inspiration.

Kyle McDonald: Interesting starting point, but it feels like it never gets to the surprise of an abstract painting that you’re going for. It might be the way the eyes, eyebrows, and nose are always present in a very obvious way. Or maybe the framerate is too high, and you need to take “excerpts”/“snapshots”. Consider breaking up the data that comes back from the tracker a bit more, maybe adding some noise or randomness to break up the visual consistency.

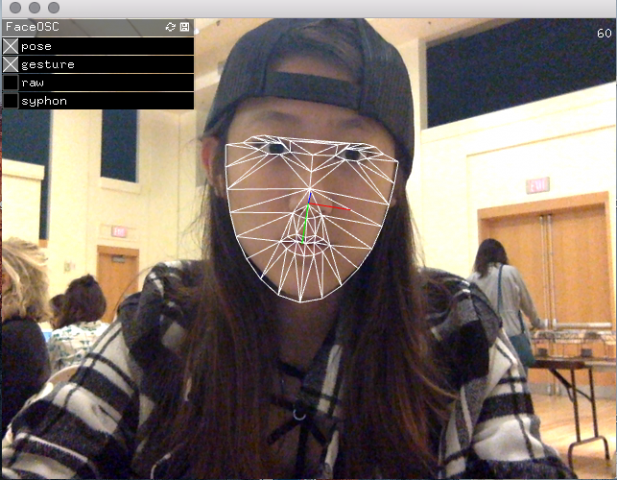

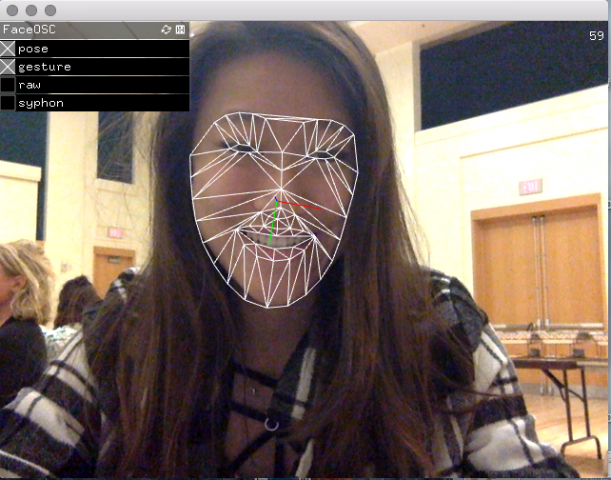

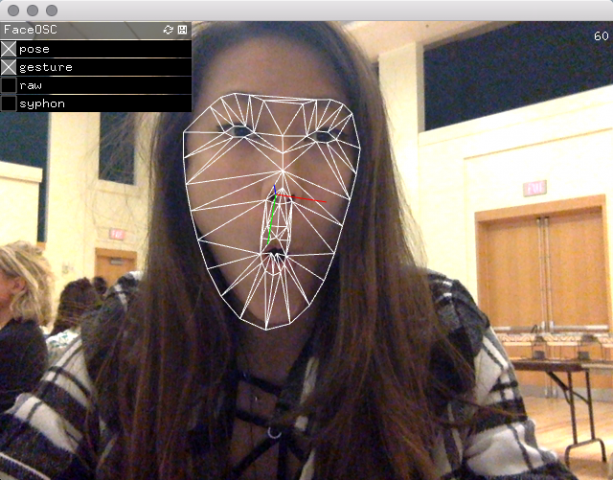

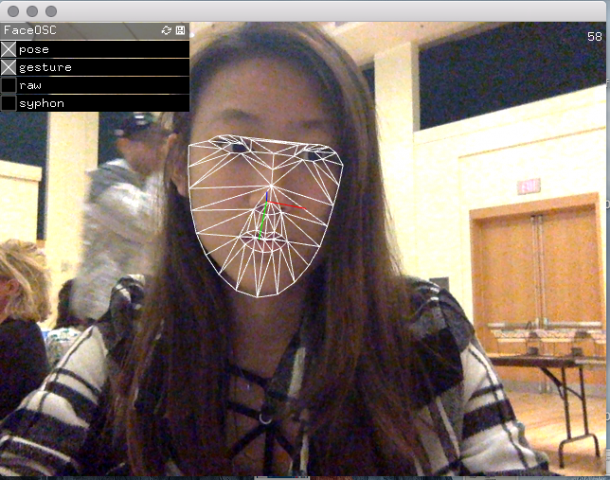

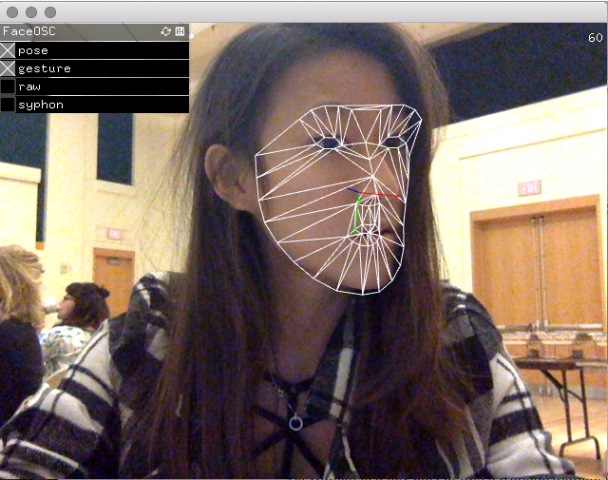

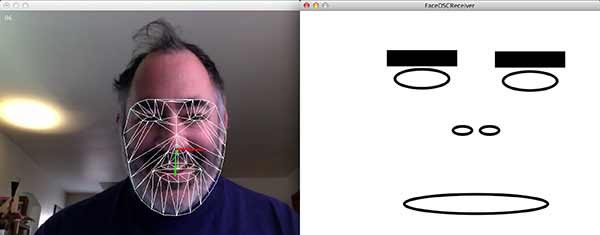

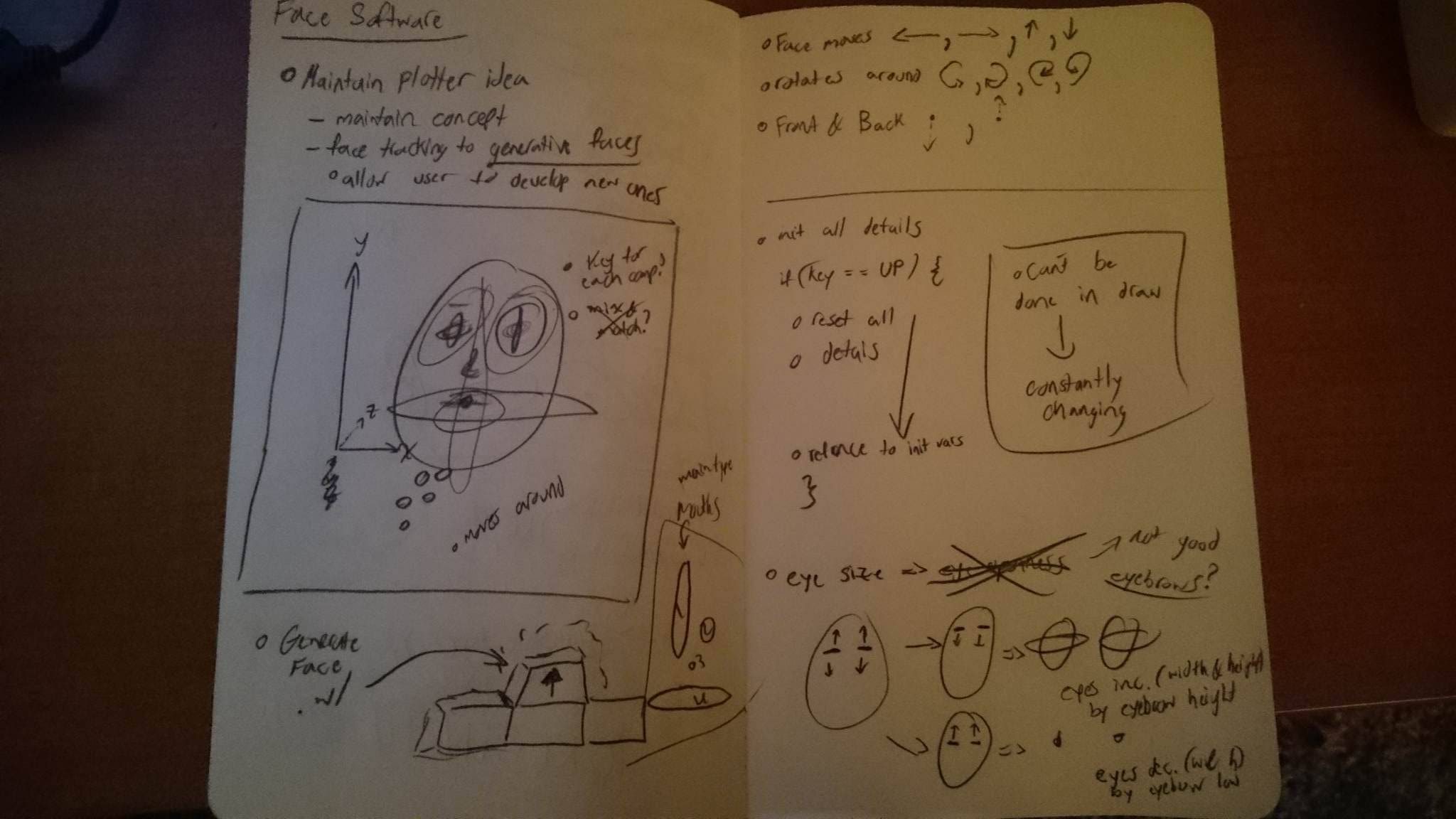

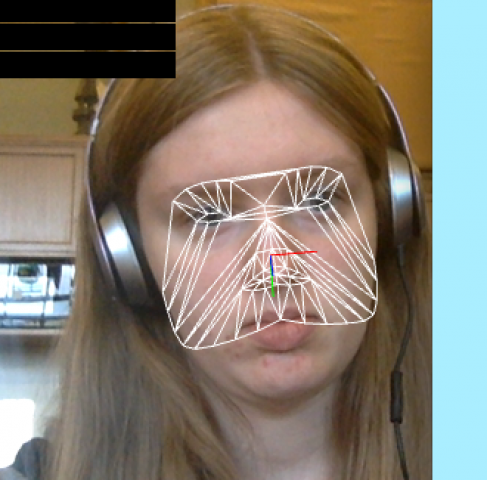

GIF of me making a composition using FaceOSC