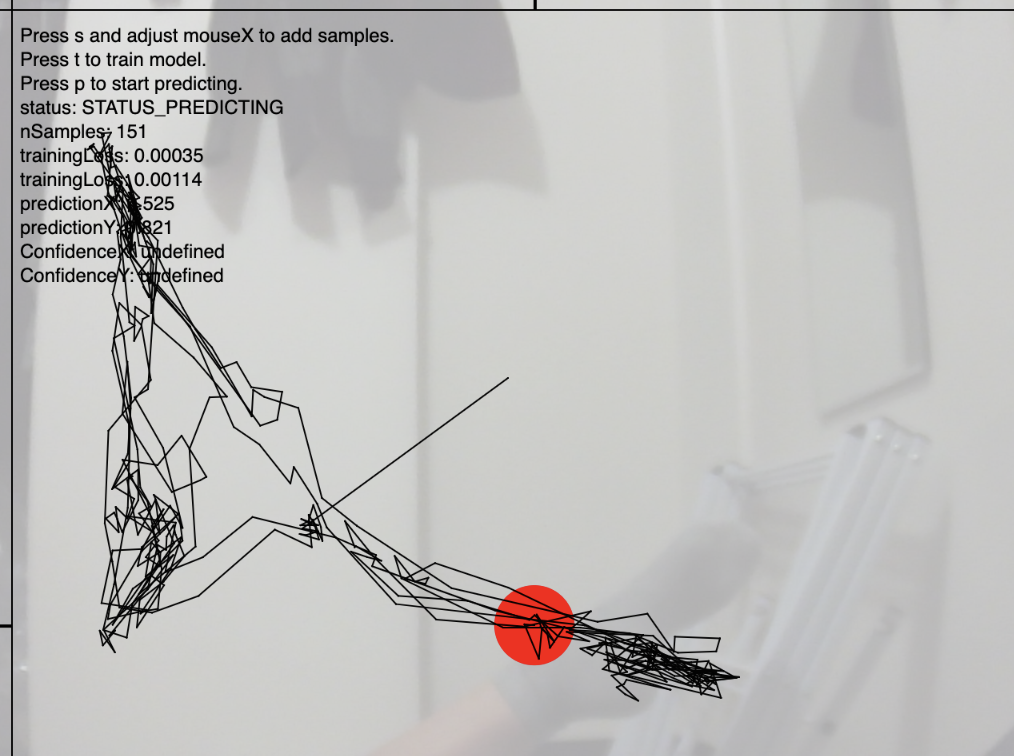

Eye tracker drawing

For this project, I wanted to use machine learning to create a device that explored subtle changes in the eyes. I used a two-dimensional image regressor, but most of my development involved exploring the nuances of how I interacted with the tracker rather than the code itself. While my original intention was a tracker that would allow the user to draw with their eyes, I spent a lot of time experimenting with how to collect data points, my proximity to the camera, and the range and speed of my eye movements.

Process / Early Iterations

Knowing I wanted to make a program that detected eye movements, I experimented with pupil dilation, lying, and smile lines by recording myself as I performed different tasks. While I was interested in the concept of detecting nuanced expressions in people's eyes, I felt the observable changes were too subtle to detect within the scope of this project.

Before adding specific points of reference for the training set, I added samples by clicking in the vicinity of where I was moving my eyes. This helped while I was starting out but did not produce the most accurate results.

Training

For greater precision, and to give other users a way to reproduce the tracker, I created a series of points to use during training, with indicators showing when each point had at least 30 samples.
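The bookkeeping behind those indicators can be sketched in a few lines. This is a rough illustration, not the code used in the project: the `CalibrationPoint` class, the 3x3 grid of normalized screen positions, and the `training_pairs` helper are all assumptions made for the example. Only the 30-sample threshold comes from the description above.

```python
from dataclasses import dataclass, field

MIN_SAMPLES = 30  # threshold mentioned in the write-up


@dataclass
class CalibrationPoint:
    """One on-screen training target (hypothetical structure)."""
    x: float  # normalized horizontal position, 0..1
    y: float  # normalized vertical position, 0..1
    samples: list = field(default_factory=list)

    def add_sample(self, frame):
        # Store one webcam frame captured while looking at this point.
        self.samples.append(frame)

    @property
    def ready(self):
        # Drives the indicator: True once enough samples are collected.
        return len(self.samples) >= MIN_SAMPLES


def make_grid(cols=3, rows=3):
    """Evenly spaced points at the centers of a cols x rows grid (assumed layout)."""
    return [CalibrationPoint(x=(c + 0.5) / cols, y=(r + 0.5) / rows)
            for r in range(rows) for c in range(cols)]


def training_pairs(points):
    """Flatten all samples into (frame, (x, y)) pairs for a 2D regressor."""
    return [(frame, (p.x, p.y)) for p in points for frame in p.samples]
```

Each pair produced by `training_pairs` carries the image as input and the point's two coordinates as the regression target, which is the shape of data a two-dimensional image regressor expects.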

Setup

My final setup involved a large monitor, a precise webcam, and a numeric keypad to train the program. The larger screen gave the program a greater range of eye movement to track, and the keypad let me train the set without having to glance at my laptop and disrupt my eye movement.
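One way a keypad could drive training without looking away is to have each digit stand for the screen region in the same relative position. The sketch below assumes this mapping for illustration, since the write-up does not say how keys were assigned to points. The function name `keypad_to_target` and the 3x3 layout are hypothetical.

```python
def keypad_to_target(key, width, height):
    """Map a keypad digit '1'..'9' to the pixel center of the matching screen cell.

    Assumes a standard keypad layout (7 8 9 on the top row, 1 2 3 on the
    bottom row) mirrored onto a 3x3 grid covering the screen.
    """
    if len(key) != 1 or key not in "123456789":
        raise ValueError(f"expected a digit 1-9, got {key!r}")
    n = int(key) - 1
    col = n % 3
    # Keypad rows count up from the bottom; screen rows count down from the top.
    row = 2 - n // 3
    return ((col + 0.5) * width / 3, (row + 0.5) * height / 3)
```

With this mapping, pressing 7 while looking at the top-left of the monitor records a sample for the top-left target, so the hands can stay on the keypad while the eyes stay on the screen.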