I started this project thinking about buses and the chaotic, fleeting feeling they represent. They're a big part of city life in my experience, and I wanted to respond to that chaos and rush by replacing the windows with a view into a forest or something similar, where the scenery just slowly moves by in a straight line. I was inspired by AR portals like this one:

I made some sketches to see what a bus would look like with the windows swapped out, imagining somebody cutting off all the chaotic input and replacing it with a calming view out the window (sorry, they're really hard to see; I drew lightly).

So I watched a lot of videos on how to do this, mainly from a creator called Pirates Just AR, which is a great name, by the way.

I had some trouble extending his tutorials to a nice nature landscape, though, and I never even got to the point of adding motion, which I'm sure would have been hard too.

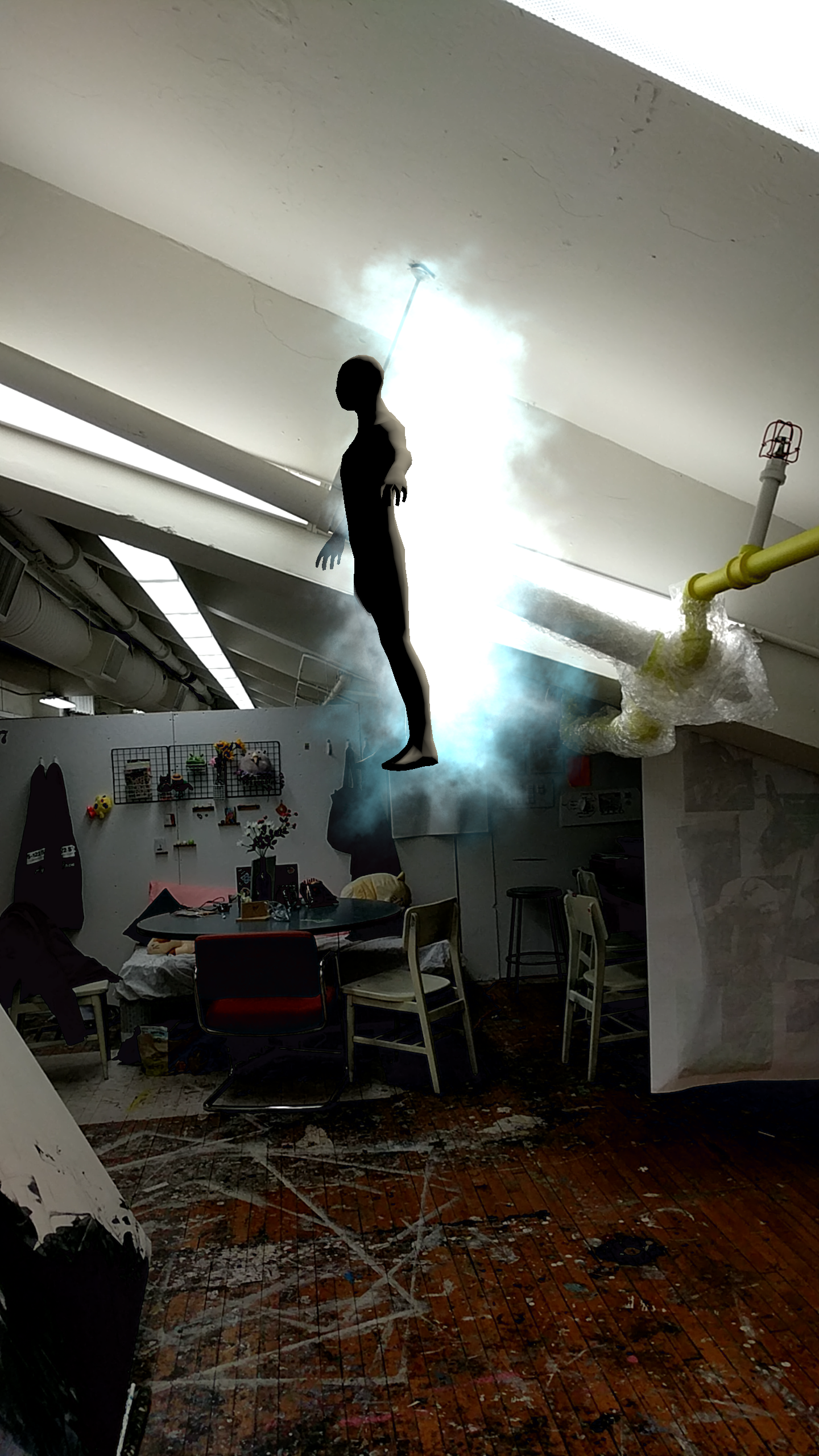

I thought I could instead put a hole where the "Emergency Exit" on the roof of the bus is, and have a tree inside the bus breaking out through the hole. I also didn't want to go out looking for a bus that late at night, so I decided an elevator was a similar enough idea. I changed the image target to the roof of the elevator and added some calming music that plays when the hole and tree appear. I think I can do a lot more with this concept, especially by adding elevator dings and bird sounds, which I think would make a good contrast. It would also be nice to have flying birds and to somehow convey the motion of the elevator.

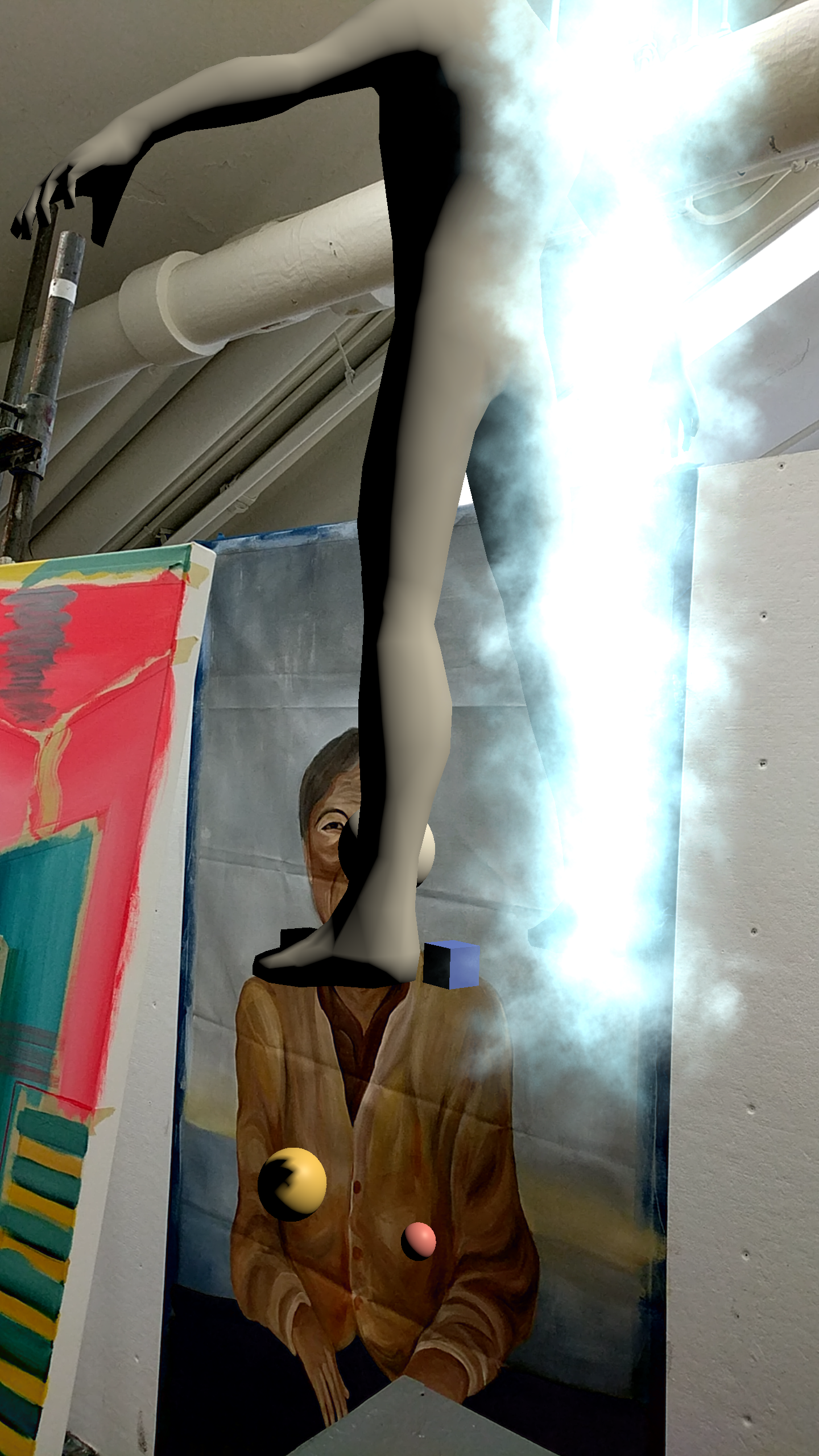

Here's my imagined use of this sculpture:

This is an AR app that lets you place a wooden toy train set on flat vertical surfaces.