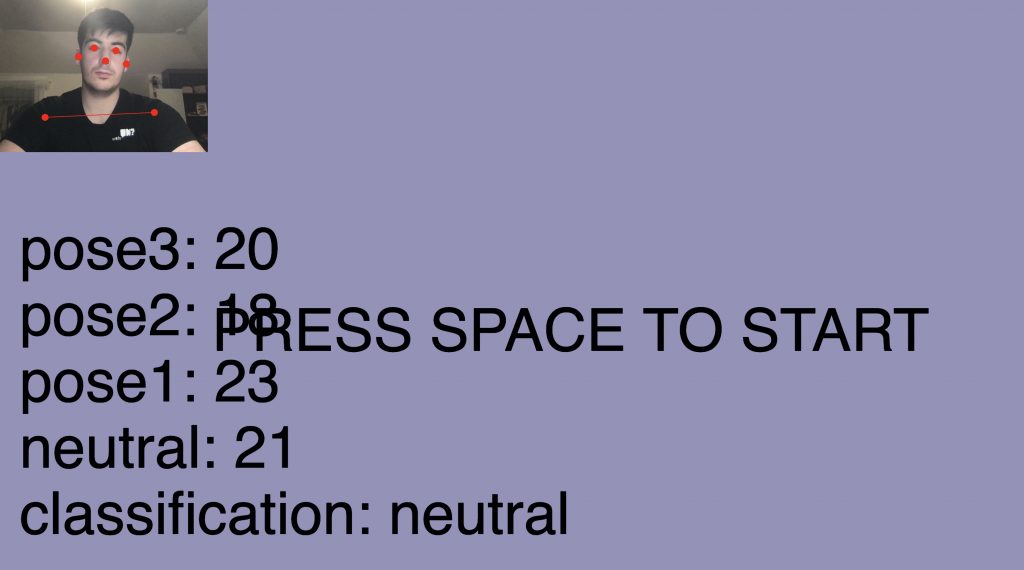

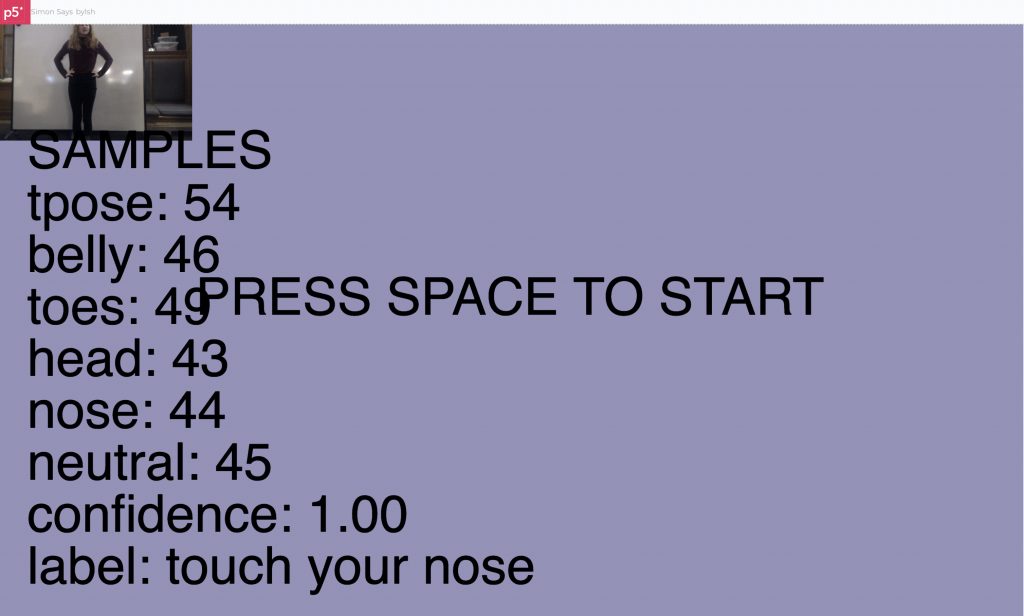

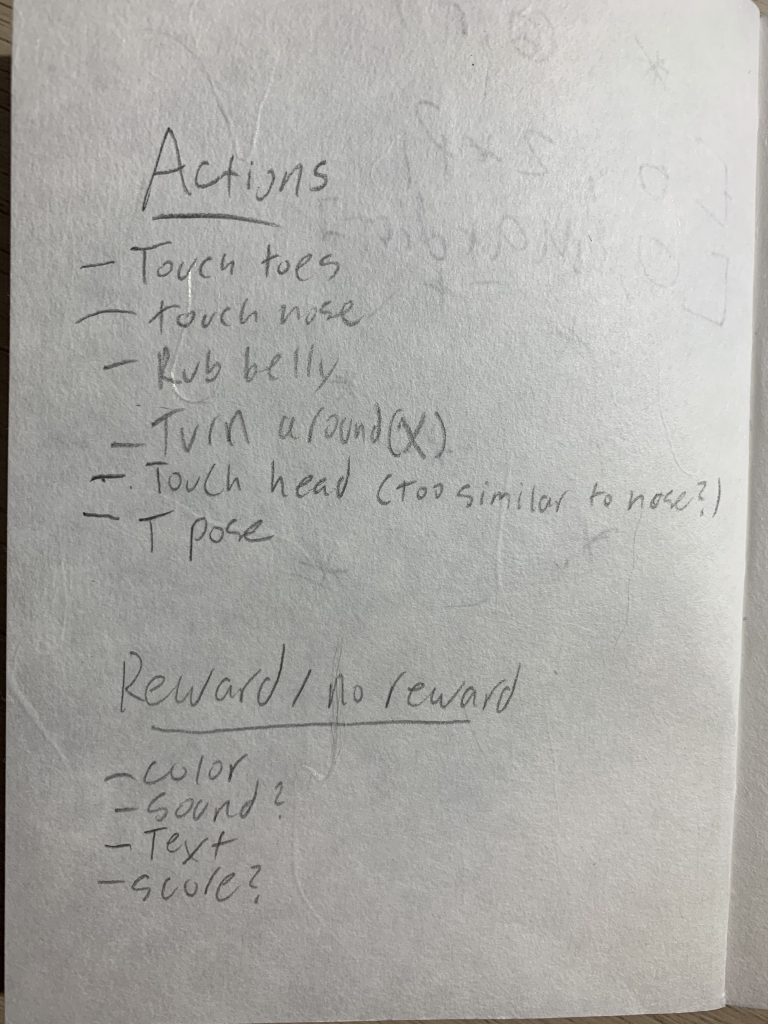

Last year, a guest visiting the studio mentioned that they find many of our interactions with smart assistants quite rude, and that these devices reinforce a habit of barking commands without giving thanks. I think back to this conversation every so often and wonder to what extent society anthropomorphizes technology.

In this project I decided to flip the usual power dynamic between human and computer. An artificial intelligence generally carries out our commands and does nothing (other than ping home and record data) when not addressed. Simon Says felt like a fun way to explore this relationship: the computer gives the human commands and chides us when we get them wrong. I also deliberately kept the gap between commands short, as a way to consider how promptly we expect technology to respond.

I would say this project is fun to play. My housemate giggled as the computer told him he was doing the wrong motions. However, players may not think about the conceptual meaning of the dynamic while they are in the middle of a game.

Another issue I ran into during development was that the network's accuracy declined rapidly when it was trained on more than three items. In the end, I switched to training KNN on PoseNet data, which worked significantly better. There are still a few tiny glitches, but the basic app works.
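For readers curious what "KNN on PoseNet data" can look like, here is a minimal Python sketch of the general idea, not the project's actual code. It assumes each pose arrives as a list of (x, y) keypoints (PoseNet reports 17 per person). The names `normalize` and `PoseKNN` are hypothetical, and the normalization step is an added assumption meant to make the classifier less sensitive to where the player stands.

```python
from collections import Counter
from math import dist


def normalize(keypoints):
    """Flatten (x, y) keypoints into one vector, centered and scaled.

    Assumption: centering on the mean point and dividing by the larger
    of the pose's width and height makes poses comparable regardless of
    position or distance from the camera.
    """
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    scale = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    flat = []
    for x, y in keypoints:
        flat.extend(((x - cx) / scale, (y - cy) / scale))
    return flat


class PoseKNN:
    """Hypothetical k-nearest-neighbours classifier over pose vectors."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # list of (vector, label) pairs

    def add_example(self, keypoints, label):
        """Store one labelled pose; KNN has no separate training step."""
        self.examples.append((normalize(keypoints), label))

    def classify(self, keypoints):
        """Return the majority label among the k closest stored poses."""
        if not self.examples:
            raise ValueError("no training examples added yet")
        query = normalize(keypoints)
        nearest = sorted(self.examples, key=lambda ex: dist(query, ex[0]))[: self.k]
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]
```

In a Simon Says loop, the game would record a few examples per command with `add_example`, then compare the label returned by `classify` for the current camera frame against the command it just issued. Because KNN simply stores examples, adding a new command only means recording more poses.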