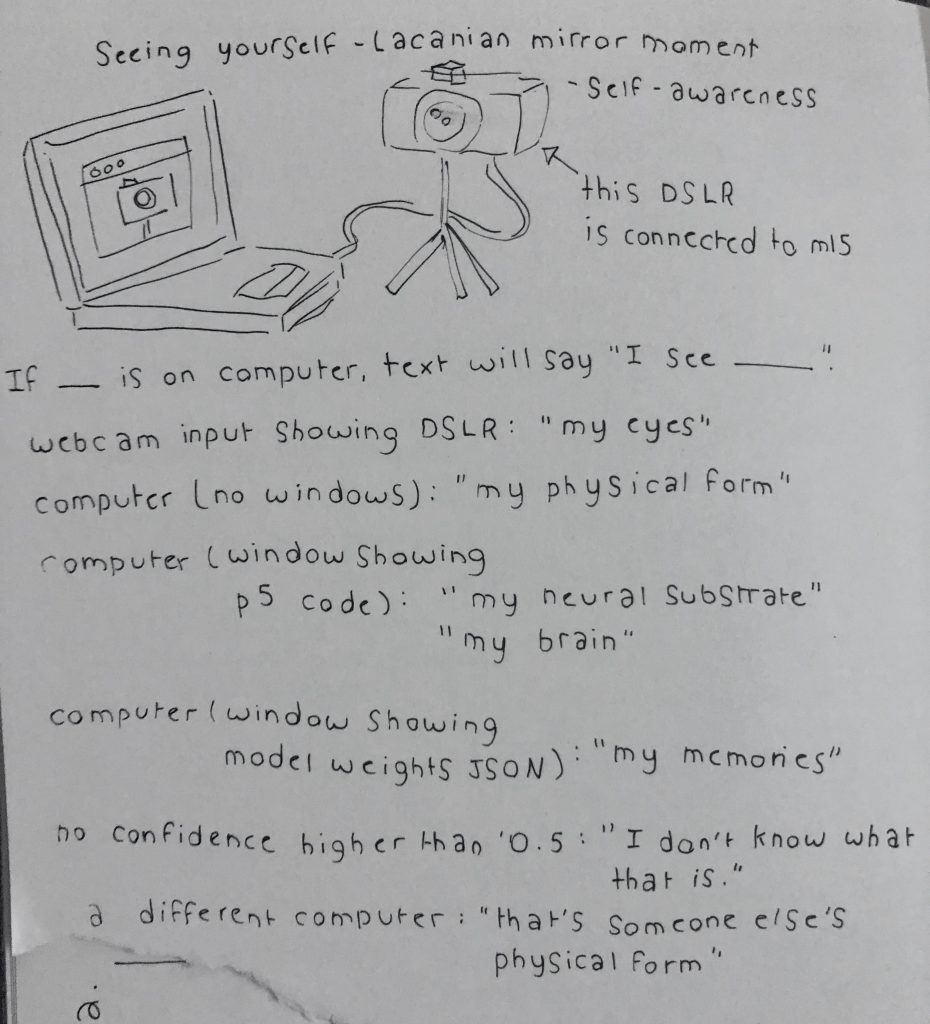

Even compared to all the other projects, I spent a very long time troubleshooting and down-scoping my idea for this project! My first idea was to train a model to recognize its physical form -- a model interpreting footage of the laptop the code was running on, or webcam footage reflecting the camera (the model's 'eyes') back at it. However, training for such specific situations with so much variability would have required thousands of training examples.

Next, I waffled between several other ideas, especially using a two-dimensional regressor. I was feeling pretty bad about the whole project because none of my ideas expressed anything interesting in a simple but conceptually sophisticated way. I endeavored to get the 2D regressor working (which was its own bag of fun monkeys) and make the program track the point of my pen as I drew.

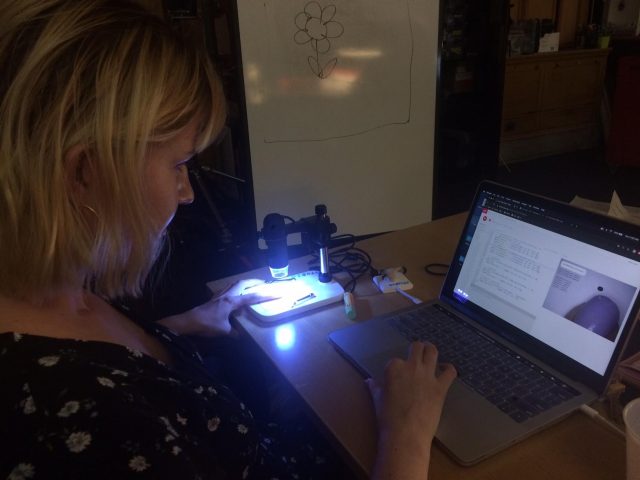

Luckily, Golan showed me an awesome USB microscope camera! The first thing I noticed when experimenting with this camera was how gross my skin was. There were tiny hairs and dust particles all over my fingers, and a hangnail which I tried to pull off, causing my finger to bleed. Though the bleeding healed within a few hours, it inspired a project about a deceptively cute vampiric bacterium who is a big fan of fingers.

This project makes use of two regressors (one each for the x and y location of the fingertip) and a classifier (to determine whether a finger is present and whether it is bloody). I did not show the training process in my video because it takes a while. With more time, I think there would be lots of potential for compelling interactions with the Bacterium. I wanted him to provoke some pity and disgust in the viewer, while also being very cute.
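
As a rough illustration of how the outputs of two regressors and one classifier might be merged into a single state each frame, here is a minimal Python sketch. It is not the project's actual code: the label names, the `FingerState` type, the `combine_predictions` helper, and the assumption that the regressors output coordinates normalized to the 0 to 1 range are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical class labels for the classifier (assumed, not from the project).
NO_FINGER = "no_finger"
CLEAN_FINGER = "clean_finger"
BLOODY_FINGER = "bloody_finger"
LABELS = (NO_FINGER, CLEAN_FINGER, BLOODY_FINGER)


@dataclass
class FingerState:
    present: bool          # is a finger in view?
    bloody: bool           # is the finger bloody?
    x: Optional[float]     # fingertip x in [0, 1], None if no finger
    y: Optional[float]     # fingertip y in [0, 1], None if no finger


def clamp01(value: float) -> float:
    """Regressors can overshoot, so keep coordinates inside the frame."""
    return max(0.0, min(1.0, value))


def combine_predictions(x_pred: float, y_pred: float, label: str) -> FingerState:
    """Merge one frame's outputs from the x regressor, y regressor, and classifier."""
    if label not in LABELS:
        raise ValueError(f"unknown label: {label!r}")
    if label == NO_FINGER:
        # Ignore the regressors entirely when the classifier sees no finger.
        return FingerState(present=False, bloody=False, x=None, y=None)
    return FingerState(
        present=True,
        bloody=(label == BLOODY_FINGER),
        x=clamp01(x_pred),
        y=clamp01(y_pred),
    )


if __name__ == "__main__":
    print(combine_predictions(0.42, 0.77, BLOODY_FINGER))
```

Letting the classifier gate the regressors means the fingertip position is only trusted when a finger is actually detected, which keeps a character like the Bacterium from chasing meaningless coordinates on an empty frame.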

In conclusion, I spent many hours on this project and tried hard. I really like machine learning, so I wanted my piece to be 'better' and 'more'. But I learnt a lot and made an amusing thing, so I don't feel unfulfilled by it.

Documentation: