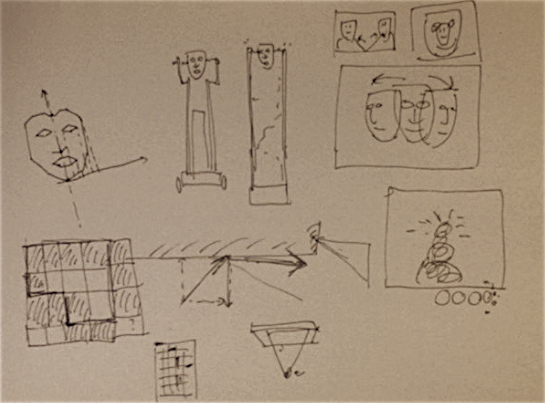

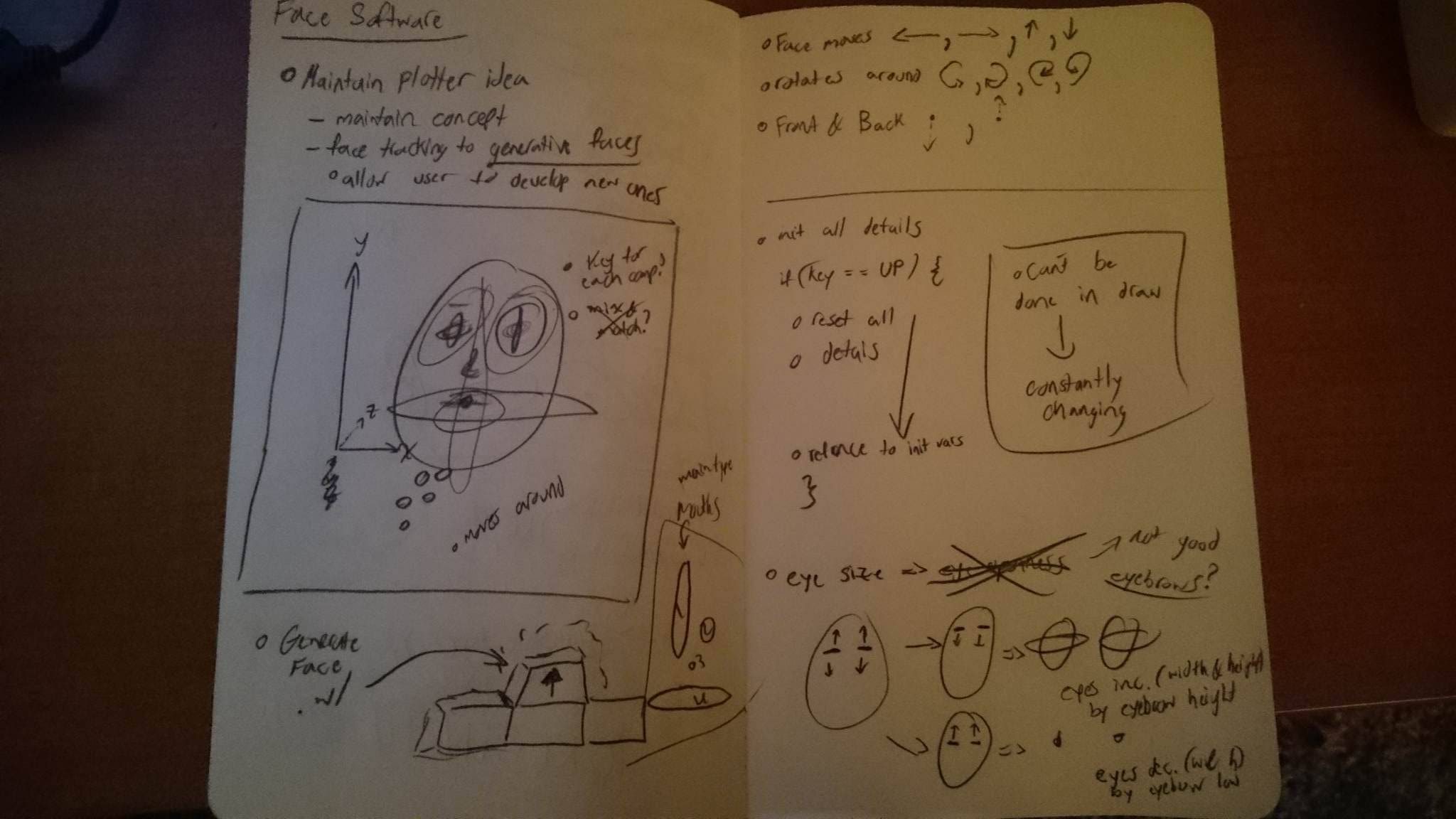

I noticed that many people (myself included) move their heads while playing games. For example, when they want to go left, they tilt their heads in that direction in addition to pressing the left key on the keyboard. They also make different facial expressions depending on what they are doing in the game.

So I thought that, to know what the player wants to do in the game, we only need to look at his or her face; the mouse and keyboard input are in fact redundant.

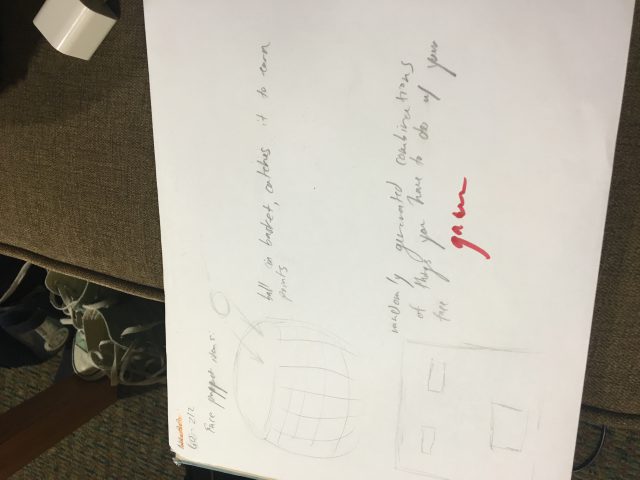

I decided to implement this idea in a first-person shooter. In this world nobody has a body, only a face mounted on a machine that the face controls.

I rendered the 3D scene in P3D mode using lots of rotate and translate calls. The map is generated using Prim's algorithm. The enemies are controlled by a simple AI: they wander around while the player is not near, and once he comes within a certain range they slowly turn toward him, move in, and attack.
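To make the map generation concrete, here is a minimal sketch of randomized Prim's algorithm on a grid, written in plain Java rather than as Processing code. It is not the `Prim` class from the sketch further down: the names `PrimMaze`, `generate`, `addWalls` and `countPassages` are made up for illustration, and it stores open passages instead of a list of remaining walls.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class PrimMaze {
    // open[y][x][0] is true when there is a passage between (x, y) and (x + 1, y).
    // open[y][x][1] is true when there is a passage between (x, y) and (x, y + 1).
    static boolean[][][] generate(int w, int h, long seed) {
        Random rng = new Random(seed);
        boolean[][] visited = new boolean[h][w];
        boolean[][][] open = new boolean[h][w][2];
        List<int[]> frontier = new ArrayList<>(); // candidate walls as {x, y, dir}

        // Start from a random cell and put its walls on the frontier.
        int sx = rng.nextInt(w);
        int sy = rng.nextInt(h);
        visited[sy][sx] = true;
        addWalls(frontier, sx, sy, w, h);

        while (!frontier.isEmpty()) {
            // Pick a random wall and look at the two cells it separates.
            int[] wall = frontier.remove(rng.nextInt(frontier.size()));
            int ax = wall[0];
            int ay = wall[1];
            int bx = ax + (wall[2] == 0 ? 1 : 0);
            int by = ay + (wall[2] == 1 ? 1 : 0);

            // Knock the wall down only if exactly one side is already in the maze.
            if (visited[ay][ax] != visited[by][bx]) {
                open[ay][ax][wall[2]] = true;
                int nx = visited[ay][ax] ? bx : ax;
                int ny = visited[ay][ax] ? by : ay;
                visited[ny][nx] = true;
                addWalls(frontier, nx, ny, w, h);
            }
        }
        return open;
    }

    // Adds the (up to four) interior walls around cell (x, y) to the frontier.
    static void addWalls(List<int[]> frontier, int x, int y, int w, int h) {
        if (x + 1 < w) frontier.add(new int[] {x, y, 0});
        if (y + 1 < h) frontier.add(new int[] {x, y, 1});
        if (x > 0) frontier.add(new int[] {x - 1, y, 0});
        if (y > 0) frontier.add(new int[] {x, y - 1, 1});
    }

    // Counts opened passages; a perfect maze has exactly w * h - 1 of them.
    static int countPassages(boolean[][][] open) {
        int n = 0;
        for (boolean[][] row : open) {
            for (boolean[] cell : row) {
                for (boolean passage : cell) {
                    if (passage) n++;
                }
            }
        }
        return n;
    }

    public static void main(String[] args) {
        boolean[][][] maze = generate(16, 16, 42L);
        System.out.println("passages opened: " + countPassages(maze));
    }
}
```

Because every opened wall adds exactly one new cell to the maze, the result is a spanning tree of the grid: every cell is reachable and there are no loops.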

The gameplay is a really interesting experience. It is so intuitive that controlling my character feels almost effortless. When I play a game with keyboard and mouse, no matter how proficient I am with the bindings, I always have to go through this process: “Enemy’s shooting at me -> I need to dodge left -> tell finger to press left key -> finger presses left key -> character dodges left”. Controlling with the face is very different: “Enemy’s shooting at me -> my head automatically tilts left -> character dodges left”. So happy.
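The face-to-action mapping itself is just a handful of dead-zone checks on the values FaceOSC sends. A rough sketch in plain Java follows. The thresholds are the ones used in `draw()` in the full sketch later in this post, and they depend on my camera setup. The class and method names (`FaceControls`, `axis`, and so on) are invented for this example.

```java
class FaceControls {
    // Thresholds taken from the sketch; they will differ for other cameras and seating distances.
    static final float FIRE_MOUTH_HEIGHT = 1.8f; // mouth opened wider than this fires
    static final float SCALE_FAR = 4.7f;         // face smaller than this: leaning back
    static final float SCALE_NEAR = 5.3f;        // face larger than this: leaning in
    static final float POS_LOW = 300f;           // face x position below this: strafe one way
    static final float POS_HIGH = 340f;          // face x position above this: strafe the other way
    static final float YAW_DEADZONE_DEG = 4f;    // head turns smaller than this are ignored

    // Returns +1 above the dead zone, -1 below it, and 0 inside it.
    static int axis(float value, float low, float high) {
        if (value > high) return 1;
        if (value < low) return -1;
        return 0;
    }

    static boolean fire(float mouthHeight) {
        return mouthHeight > FIRE_MOUTH_HEIGHT;
    }

    // +1 means walk forward, -1 means walk backward.
    static int advance(float poseScale) {
        return axis(poseScale, SCALE_FAR, SCALE_NEAR);
    }

    // The sign only says which side of the dead zone the face is on;
    // which side counts as "left" depends on whether the camera image is mirrored.
    static int strafe(float posePosX) {
        return axis(posePosX, POS_LOW, POS_HIGH);
    }

    static int turn(float yawDegrees) {
        return axis(yawDegrees, -YAW_DEADZONE_DEG, YAW_DEADZONE_DEG);
    }
}
```

The dead zones matter: without them the character would drift constantly, because a face is never perfectly still.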

I plan to turn it into a multiplayer game, so that people can compete with each other over the internet and see who has the best facial muscles.

import oscP5.*;

OscP5 oscP5;

int found;

float[] rawarr = new float[0]; // empty until the first /raw message arrives, so draw() never reads a null array

float mouthWidth;

float mouthHeight;

float poseScale;

PVector orientation = new PVector();

PVector posePos = new PVector();

public void found(int i) {

found = i;

}

public void rawData(float[] raw) {

rawarr = raw;

}

public void mouthWidth(float i) {

mouthWidth = i;

}

public void mouthHeight(float i) {

mouthHeight = i;

}

public void orientation(float x, float y, float z) {

orientation.set(x, y, z);

}

public void poseScale(float x) {

poseScale = x;

}

public void posePos(float x, float y) {

posePos.set(x, y);

}

int[][] mat = new int[64][64];

int dw = 20;

String multStr(String s, int n) {

String ms = "";

for (int i = 0; i < n; i++) {

ms += s;

}

return ms;

}

public class Prim {

int[][] walls;

int[][] visited;

int[][] wallist;

int wlen = 0;

int w;

int h;

public Prim(int w, int h) {

this.w = w;

this.h = h;

walls = new int[w * h * 2][3];

visited = new int[h][w];

wallist = new int[w * h * 2 + 1][3];

}

public void addcellwalls(int j, int i) {

addwall(j, i, 1);

addwall(j, i, 2);

addwall(j, (i + 1), 1);

addwall((j + 1), i, 2);

}

public void addwall(int j, int i, int t) {

wallist[wlen] = new int[] {

j, i, t

};

wlen++;

}

public void delwall1(int j, int i, int t) {

for (int k = 0; k < walls.length; k++) {

if (walls[k][0] == j && walls[k][1] == i && walls[k][2] == t) {

walls[k] = new int[] {

-1, -1, -1

};

}

}

}

public void delwall2(int j, int i, int t) {

for (int k = 0; k < wlen; k++) {

if (wallist[k][0] == j && wallist[k][1] == i && wallist[k][2] == t) {

for (int l = k; l < wlen; l++) {

wallist[l] = wallist[l + 1];

}

wlen -= 1;

}

}

}

public int[][] getadjcells(int j, int i, int t) {

if (t == 1) {

return new int[][] {

{

j, i

}, {

j, i - 1

}

};

} else {

return new int[][] {

{

j, i

}, {

j - 1, i

}

};

}

}

public boolean isvisited(int j, int i) {

if (i < 0 || j < 0 || i >= h || j >= w) {

return true;

}

return visited[i][j] == 1;

}

public void gen() {

for (int i = 0; i < h; i++) {

for (int j = 0; j < w; j++) {

walls[(i * w + j) * 2] = new int[] {

j, i, 1

};

walls[(i * w + j) * 2 + 1] = new int[] {

j, i, 2

};

visited[i][j] = 0;

}

}

int[] o = new int[] {

floor(random(w)), floor(random(h))

};

addcellwalls(o[0], o[1]);

visited[o[1]][o[0]] = 1;

int count = 0;

while (wlen > 0 && count < 1000000) {

count++;

int i = floor(random(wlen));

int[][] adjs = getadjcells(wallist[i][0], wallist[i][1], wallist[i][2]);

if (isvisited(adjs[0][0], adjs[0][1]) != isvisited(adjs[1][0], adjs[1][1])) {

int uv = isvisited(adjs[0][0], adjs[0][1]) ? 1 : 0;

visited[adjs[uv][1]][adjs[uv][0]] = 1;

addcellwalls(adjs[uv][0], adjs[uv][1]);

delwall1(wallist[i][0], wallist[i][1], wallist[i][2]);

delwall2(wallist[i][0], wallist[i][1], wallist[i][2]);

} else {

delwall2(wallist[i][0], wallist[i][1], wallist[i][2]);

}

}

}

}

public class Bullet {

float x;

float y;

float z;

PVector forw;

float spd = 1;

float size = 1;

float g = 0;

float dec = 0.5;

int typ = 1;

int mast = 1;

public Bullet(float x, float y, float z, PVector forw) {

this.x = x;

this.y = y;

this.z = z;

this.forw = forw;

}

public void update() {

x += forw.x * spd;

y += forw.y * spd + g;

z += forw.z * spd;

g += 0.01;

if (typ == 2) {

size = size * dec;

if (size <= 0.001) {

typ = 0;

}

}

}

}

void drawelec(float r) {

pg.beginShape();

for (int i = 0; i < 22; i++) {

pg.vertex(i * 0.1, noise(r, 0.1 * i, 0.5 * frameCount));

}

pg.endShape();

}

public class Enemy {

float x;

float y;

PVector forw = new PVector(0, 0, 1);

PVector fdir = new PVector(0, 0, 1);

float spd = 0.2;

int state = 1;

int hp = 12;

float fall = 0;

String[] names = new String[] {

"James", "John", "Robert", "Michael", "Mary",

"William", "David", "Richard", "Charles", "Joseph", "Thomas", "Patricia",

"Christopher", "Linda", "Barbara", "Daniel", "Paul", "Mark", "Elizabeth", "Donald"

};

String name = names[floor(random(names.length))];

float[][] mockface = new float[][] {

{

0, 0

}, {

2, 1

}, {

5, 3

}, {

6, 10

}, {

4, 12.5

}, {

2, 12.5

}, {

0, 12

}

};

int[][] mockmouth = new int[][] {

{

0, 1

}, {

1, 1

}, {

3, 4

}, {

1, 7

}, {

0, 7

}

};

float[][] mockeye = new float[][] {

{

0.5, 10

}, {

2.5, 9

}, {

4.5, 10

}, {

2.5, 11

}, {

0.5, 10

}

};

public Enemy(float x, float y) {

this.x = x;

this.y = y;

}

public void nav(Prim p) {

if (state == 1 || state == 2) {

fdir.lerp(forw, 0.1);

}

if (dist(x, y, px, py) < dw * 2 && state != 0) {

state = 3;

}

if (state == 1) {

x += forw.x * spd;

y -= forw.z * spd;

fdir.lerp(forw, 0.1);

for (int k = 0; k < p.walls.length; k++) {

if (p.walls[k][2] != -1) {

float wallx = p.walls[k][0] * dw;

float wally = p.walls[k][1] * dw;

if ((p.walls[k][2] == 1 && x >= wallx && x <= wallx + dw && y >= wally - 3 && y <= wally + 3) || (p.walls[k][2] == 2 && y >= wally && y <= wally + dw && x >= wallx - 3 && x <= wallx + 3)) {

x -= forw.x * spd * 2;

y += forw.z * spd * 2;

state = 2;

}

}

}

if (random(1.0) < 0.005) {

state = 2;

}

} else if (state == 2) {

PVector v = new PVector(forw.x, forw.z);

v.rotate(0.1);

forw.x = v.x;

forw.z = v.y;

if (random(1.0) < 0.1) {

state = 1;

}

} else if (state == 3) {

x += forw.x * spd * 0.5;

y -= forw.z * spd * 0.5;

for (int k = 0; k < p.walls.length; k++) {

if (p.walls[k][2] != -1) {

float wallx = p.walls[k][0] * dw;

float wally = p.walls[k][1] * dw;

if ((p.walls[k][2] == 1 && x >= wallx && x <= wallx + dw && y >= wally - 3 && y <= wally + 3) || (p.walls[k][2] == 2 && y >= wally && y <= wally + dw && x >= wallx - 3 && x <= wallx + 3)) {

x -= forw.x * spd * 2;

y += forw.z * spd * 2;

}

}

}

PVector v = new PVector(-px + x, py - y);

v.rotate(PI);

fdir.lerp(new PVector(v.x, 0, v.y), 0.005);

fdir.limit(1);

forw.lerp(fdir, 0.1);

PVector v2 = new PVector(-fdir.x, fdir.z);

v2.rotate(PI);

if (noise(0.5 * frameCount) > 0.65) {

bullets[bl] = new Bullet(x, -1.5, y, new PVector(v2.x, 0, v2.y));

bullets[bl].size = 0.6;

bullets[bl].spd = 0.9;

bullets[bl].mast = 2;

bl++;

}

if (dist(x, y, px, py) > dw * 2) {

state = 2;

}

}

for (int i = 0; i < bl; i++) {

if (bullets[i].mast == 1 && bullets[i].typ == 1 && state > 0) {

if (dist(bullets[i].x, bullets[i].z, x, y) < 2) {

bullets[i].typ = 0;

hp -= 1;

for (int j = 0; j < 3; j++) {

bullets[bl] = new Bullet(bullets[i].x, bullets[i].y, bullets[i].z - 0.01, PVector.random3D());

bullets[bl].size = 0.8;

bullets[bl].spd = 0.4;

bullets[bl].typ = 2;

bullets[bl].dec = 0.8;

bl++;

}

if (hp <= 0) {

score += 100;

for (int j = 0; j < 10; j++) {

bullets[bl] = new Bullet(x, -3, y, PVector.random3D());

bullets[bl].size = 3;

bullets[bl].spd = 0.4;

bullets[bl].typ = 2;

bullets[bl].dec = 0.8;

bl++;

}

}

}

}

}

if (hp <= 0) {

this.state = 0;

}

}

public void drawenem() {

pg.pushMatrix();

pg.translate(x, 0, y);

pg.rotateY(-PI / 2 + atan2(forw.z, forw.x));

if (this.state == 0) {

pg.translate(0, 7, 0);

//rotateY(random(PI*2));

pg.rotateX(-fall);

if (fall < PI / 2) {

fall += 0.1;

}

pg.translate(0, -7, 0);

pg.stroke(100);

pg.strokeWeight(2);

pg.fill(100);

pg.pushMatrix();

pg.translate(0, 7, 0);

pg.box(2.5, 2, 2.5);

pg.translate(0, -3, 0);

//pg.box(1.5,6,0.4);

pg.translate(-1.1, -2, 0);

pg.box(0.1, 9, 0.1);

pg.translate(2.2, 0, 0);

pg.box(0.1, 9, 0.1);

pg.popMatrix();

pg.fill(255);

} else {

pg.stroke(100);

pg.strokeWeight(2);

pg.fill(100);

pg.pushMatrix();

pg.translate(0, 7, 0);

pg.box(2.5, 2, 2.5);

pg.translate(0, -3, 0);

//pg.box(1.5,6,0.4);

pg.translate(-1.1, -2, 0);

pg.box(0.1, 9, 0.1);

pg.translate(2.2, 0, 0);

pg.box(0.1, 9, 0.1);

pg.popMatrix();

pg.fill(255);

pg.pushMatrix();

pg.translate(0, -1.2, -0.5);

//scale(0.2);

pg.fill(100);

pg.textSize(12);

if (dist(x, y, px, py) < dw * 3) {

pg.pushMatrix();

pg.translate(0, -3, 0);

pg.scale(0.05);

pg.textAlign(CENTER);

pg.textMode(SHAPE);

pg.rotateY(PI);

pg.text(name, 0, 0);

pg.popMatrix();

}

pg.fill(255);

pg.rotateY(PI / 2 - atan2(forw.z, forw.x));

pg.rotateY(-PI / 2 + atan2(fdir.z, fdir.x));

pg.rotateY(-PI / 8);

pg.beginShape();

for (int i = 0; i < mockface.length; i++) {

pg.vertex(mockface[i][0] * 0.15, -mockface[i][1] * 0.15);

}

pg.endShape();

pg.beginShape();

for (int i = 0; i < mockmouth.length; i++) {

pg.vertex(mockmouth[i][0] * 0.15, 0.15 * (-4 + (mockmouth[i][1] - 4) * noise(0.5 * frameCount)));

}

pg.endShape();

pg.beginShape();

for (int i = 0; i < mockeye.length; i++) {

pg.vertex(mockeye[i][0] * 0.15, -mockeye[i][1] * 0.15);

}

pg.endShape();

pg.rotateY(PI / 4);

pg.beginShape();

for (int i = mockface.length - 1; i >= 0; i--) {

pg.vertex(-mockface[i][0] * 0.15, -mockface[i][1] * 0.15);

}

pg.endShape();

pg.beginShape();

for (int i = mockmouth.length - 1; i >= 0; i--) {

pg.vertex(-mockmouth[i][0] * 0.15, 0.15 * (-4 + (mockmouth[i][1] - 4) * noise(0.5 * frameCount)));

}

pg.endShape();

pg.beginShape();

for (int i = mockeye.length - 1; i >= 0; i--) {

pg.vertex(-mockeye[i][0] * 0.15, -mockeye[i][1] * 0.15);

}

pg.endShape();

pg.popMatrix();

pg.pushMatrix();

pg.translate(-1.1, -2.2, 0);

for (int i = 0; i < 2; i++) {

drawelec(i);

}

pg.popMatrix();

for (int i = 0; i < 3; i++) {

pg.pushMatrix();

pg.translate(-1.1, -2 + 8 * noise(i * 10, 0.1 * frameCount), 0);

drawelec(i);

pg.popMatrix();

}

}

pg.popMatrix();

}

}

Prim p = new Prim(16, 16);

float px = dw * 8.5;

float py = dw * 8.5;

PVector forward;

PVector left;

PVector thwart;

PVector movement;

Bullet[] bullets = new Bullet[1024];

int bl = 0;

float[] farr = new float[256];

Enemy[] enemies = new Enemy[256];

int el = 0;

PGraphics pg;

float health = 100;

int score = 0;

PFont tfont;

PFont dfont;

void setup() {

size(720, 576, P3D); // size() should be the first call in setup()

health = 100;

score = 0;

tfont = createFont("OCR A Std", 18);

dfont = createFont("Lucida Sans", 12);

pg = createGraphics(720, 576, P3D);

frameRate(30);

oscP5 = new OscP5(this, 8338);

oscP5.plug(this, "found", "/found");

oscP5.plug(this, "rawData", "/raw");

oscP5.plug(this, "orientation", "/pose/orientation");

oscP5.plug(this, "mouthWidth", "/gesture/mouth/width");

oscP5.plug(this, "mouthHeight", "/gesture/mouth/height");

oscP5.plug(this, "poseScale", "/pose/scale");

oscP5.plug(this, "posePos", "/pose/position");

p.gen();

//mat = makeMaze(mat[0].length,mat.length);

forward = new PVector(0, 1, 0);

left = new PVector(0, 1, 0);

for (int i = 0; i < 100; i++) {

enemies[el] = new Enemy((floor(random(20)) + 0.5) * dw, (floor(random(20) + 0) + 0.5) * dw);

while (dist(enemies[el].x, enemies[el].y, px, py) < 50) {

enemies[el] = new Enemy((floor(random(20)) + 0.5) * dw, (floor(random(20) + 0) + 0.5) * dw);

}

el++;

}

}

void draw() {

if (found != 0 && health > 0) {

left.x = forward.x;

left.y = forward.y;

left.z = forward.z;

left.rotate(PI / 2);

thwart = new PVector(1, 1, 1);

pg.beginDraw();

pg.pushMatrix();

pg.background(240);

//beginpg.camera();

pg.camera(px, 0, py, px + forward.x, 0, py + forward.y, 0, 1, 0);

if (keyPressed) {

if (key == 'm') {

pg.camera(px, -100, py, px + forward.x, 0, py + forward.y, 0, 1, 0);

}

}

pg.frustum(-0.1, 0.1, -0.1, 0.1, 0.1, 200);

pg.scale(1, 0.72 / 0.576, 1);

pg.pushMatrix();

pg.fill(255, 0, 0);

pg.translate(px, 0, py);

//pg.sphere(2);

pg.popMatrix();

pg.pushMatrix();

pg.fill(255, 0, 0);

pg.translate(px + forward.x * 3, 0, py + forward.y * 3);

//pg.sphere(1);

pg.popMatrix();

pg.stroke(100);

pg.strokeWeight(2);

//pg.noStroke();

//pg.translate(100,100);

//pg.translate(-px,0,-py);

//rotateY(frameCount*0.1);

for (int i = 0; i < p.walls.length; i++) {

pg.pushMatrix();

if (p.walls[i][2] != -1) {

float wallx = p.walls[i][0] * dw;

float wally = p.walls[i][1] * dw;

pg.translate(wallx, 0, wally);

pg.fill(constrain(map(dist(wallx, wally, px, py), 0, 100, 255, 240), 240, 255));

if (p.walls[i][2] == 1) {

if (px >= wallx && px <= wallx + dw && py >= wally - 2 && py <= wally + 2) {

//thwart.x = 0;

//thwart.y = 0;

px -= movement.x;

py -= movement.y;

//pg.fill(255,0,0);

}

pg.translate(dw / 2, 0, 0);

pg.box(dw, 16, 2);

} else {

if (py >= wally && py <= wally + dw && px >= wallx - 2 && px <= wallx + 2) {

//thwart.x = 0;

//thwart.y = 0;

px -= movement.x;

py -= movement.y;

//pg.fill(255,0,255);

}

pg.translate(0, 0, dw / 2);

pg.box(2, 16, dw);

}

}

pg.popMatrix();

}

for (int i = 0; i < bl; i++) {

pg.pushMatrix();

pg.translate(bullets[i].x, bullets[i].y, bullets[i].z);

pg.fill(100);

pg.noStroke();

pg.sphere(bullets[i].size / 2 + bullets[i].size / 2 * noise(0.5 * i, 0.1 * frameCount));

bullets[i].update();

pg.popMatrix();

if (bullets[i].typ == 1) {

if (bullets[i].y > 8) {

for (int j = 0; j < 3; j++) {

bullets[bl] = new Bullet(bullets[i].x, bullets[i].y, bullets[i].z - 0.01, PVector.random3D());

bullets[bl].size = 0.8;

bullets[bl].spd = 0.4;

bullets[bl].typ = 2;

bullets[bl].dec = 0.8;

bl++;

}

bullets[i].typ = 0;

}

for (int k = 0; k < p.walls.length; k++) {

if (p.walls[k][2] != -1) {

float wallx = p.walls[k][0] * dw;

float wally = p.walls[k][1] * dw;

float bx = bullets[i].x;

float by = bullets[i].z;

if ((p.walls[k][2] == 1 && bx >= wallx && bx <= wallx + dw && by >= wally - 1 && by <= wally + 1) || (p.walls[k][2] == 2 && by >= wally && by <= wally + dw && bx >= wallx - 1 && bx <= wallx + 1)) {

for (int j = 0; j < 3; j++) {

bullets[bl] = new Bullet(bullets[i].x, bullets[i].y, bullets[i].z - 0.01, PVector.random3D());

bullets[bl].size = 0.8;

bullets[bl].spd = 0.4;

bullets[bl].typ = 2;

bullets[bl].dec = 0.8;

bl++;

}

bullets[i].typ = 0;

}

}

}

if (bullets[i].mast == 2 && dist(bullets[i].x, bullets[i].z, px, py) < 2) {

health -= 2;

bullets[i].typ = 0;

}

}

if (bullets[i].typ == 0) {

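// Shift the remaining bullets down without reading past the live range.

for (int j = i; j < bl - 1; j++) {

bullets[j] = bullets[j + 1];

}

bl--;

i--; // the next bullet now sits at index i, so visit this index again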

}

}

for (int i = 0; i < el; i++) {

enemies[i].drawenem();

enemies[i].nav(p);

}

pg.popMatrix();

pg.endDraw();

image(pg, 0, 0);

noFill();

for (int i = 0; i < rawarr.length; i++) {

farr[i] = rawarr[i];

if (i % 2 == 0) {

farr[i] = (640 - farr[i]) * 720 / 640;

}

if (i % 2 == 1) {

farr[i] = farr[i] * 576 / 480;

}

}

stroke(150);

line(width / 2 - 200, height / 2 - 200, width / 2 - 200, height);

line(width / 2 + 200, height / 2 - 200, width / 2 + 200, height);

pushMatrix();

translate(width / 2 - 280, height / 2 - 200);

beginShape();

for (int i = 0; i < 57; i++) {

//vertex(i*10,50*noise(0.1*i,0.5*frameCount));

}

endShape();

popMatrix();

beginShape();

for (int i = 0; i < 34; i += 2) {

vertex(farr[i], farr[i + 1]);

}

for (int i = 52; i > 32; i -= 2) {

vertex(farr[i], farr[i + 1]);

}

endShape(CLOSE);

beginShape();

for (int i = 72; i < 84; i += 2) {

vertex(farr[i], farr[i + 1]);

}

endShape(CLOSE);

beginShape();

for (int i = 84; i < 96; i += 2) {

vertex(farr[i], farr[i + 1]);

}

endShape(CLOSE);

beginShape();

for (int i = 96; i < 110; i += 2) {

vertex(farr[i], farr[i + 1]);

}

for (int i = 124; i > 118; i -= 2) {

vertex(farr[i], farr[i + 1]);

}

endShape(CLOSE);

beginShape();

for (int i = 108; i < 118; i += 2) {

vertex(farr[i], farr[i + 1]);

}

vertex(farr[96], farr[97]);

for (int i = 130; i > 124; i -= 2) {

vertex(farr[i], farr[i + 1]);

}

endShape(CLOSE);

//println(mouthHeight);

if (mouthHeight > 1.8) {

bullets[bl] = new Bullet(px + forward.x, 0.8, py + forward.y, new PVector(forward.x, 0, forward.y));

bullets[bl].size = 0.6;

bl++;

for (int i = 0; i < 5; i++) {

bullets[bl] = new Bullet(px + forward.x * 0.2, 0.3, py + forward.y * 0.2, PVector.random3D());

bullets[bl].size = 0.2;

bullets[bl].spd = 0.08;

bullets[bl].typ = 2;

bl++;

}

}

if (poseScale > 5.3) {

px += 0.3 * forward.x * thwart.x;

py += 0.3 * forward.y * thwart.y;

movement = forward.copy();

}

if (poseScale < 4.7) {

px -= 0.3 * forward.x * thwart.x;

py -= 0.3 * forward.y * thwart.y;

movement = forward.copy();

movement.rotate(PI);

}

float roty = degrees(orientation.y);

if (roty > 4) {

forward.rotate(0.04 + 0.01 * (roty - 5));

}

if (roty < -4) {

forward.rotate(-0.04 + 0.01 * (roty + 5));

}

if (posePos.x > 340) {

px -= 0.3 * left.x;

py -= 0.3 * left.y;

movement = left.copy();

movement.rotate(PI);

}

if (posePos.x < 300) {

px += 0.3 * left.x;

py += 0.3 * left.y;

movement = left.copy();

}

println(health);

}

fill(150);

noStroke();

//textFont(tfont);

textAlign(CENTER);

textSize(16);

textFont(dfont);

//text("["+multStr(".",floor(100-health)/4)+multStr("|",floor(health/2))+multStr(".",floor(100-health)/4)+"]",width/2,100);

text("[" + multStr("|", floor(health / 2)) + "]", width / 2, 550);

textFont(tfont);

text(score, width / 2, 50);

//rect(0,0,health*7.2,4);

if (health <= 0) {

textAlign(CENTER);

textFont(tfont);

text("GAME OVER", width / 2, height / 2);

}

}
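The enemy behaviour in `Enemy.nav` boils down to a small state machine with four states: dead (0), walking (1), turning (2) and chasing (3). Here is a simplified sketch of just the transitions, in plain Java. The names `EnemyBrain` and `next` are made up for this example, and the random roll is passed in as a parameter so the function stays deterministic. The real `nav` also moves the enemy, checks walls and fires bullets.

```java
class EnemyBrain {
    static final int DEAD = 0;
    static final int WALK = 1;
    static final int TURN = 2;
    static final int CHASE = 3;

    /**
     * Computes the next state of one enemy.
     *
     * @param state        current state
     * @param hp           remaining hit points
     * @param distToPlayer distance from the enemy to the player
     * @param chaseRange   distance below which the enemy starts chasing (dw * 2 in the sketch)
     * @param hitWall      whether the enemy bumped into a wall this frame
     * @param roll         uniform random number in [0, 1)
     */
    static int next(int state, int hp, float distToPlayer,
                    float chaseRange, boolean hitWall, float roll) {
        if (hp <= 0 || state == DEAD) return DEAD;
        // Any living enemy starts chasing once the player is close enough.
        if (distToPlayer < chaseRange) return CHASE;
        switch (state) {
            case WALK:
                // Turn after hitting a wall, or occasionally at random.
                return (hitWall || roll < 0.005f) ? TURN : WALK;
            case TURN:
                // Keep rotating for a few frames, then walk again.
                return roll < 0.1f ? WALK : TURN;
            case CHASE:
                // The player got away, so go back to wandering.
                return TURN;
            default:
                return state;
        }
    }
}
```

In a frame loop this would be called once per enemy, for example `state = EnemyBrain.next(state, hp, dist(x, y, px, py), dw * 2, hitWall, random(1.0))`, where `hitWall` is a flag the caller would have to compute from the wall check.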

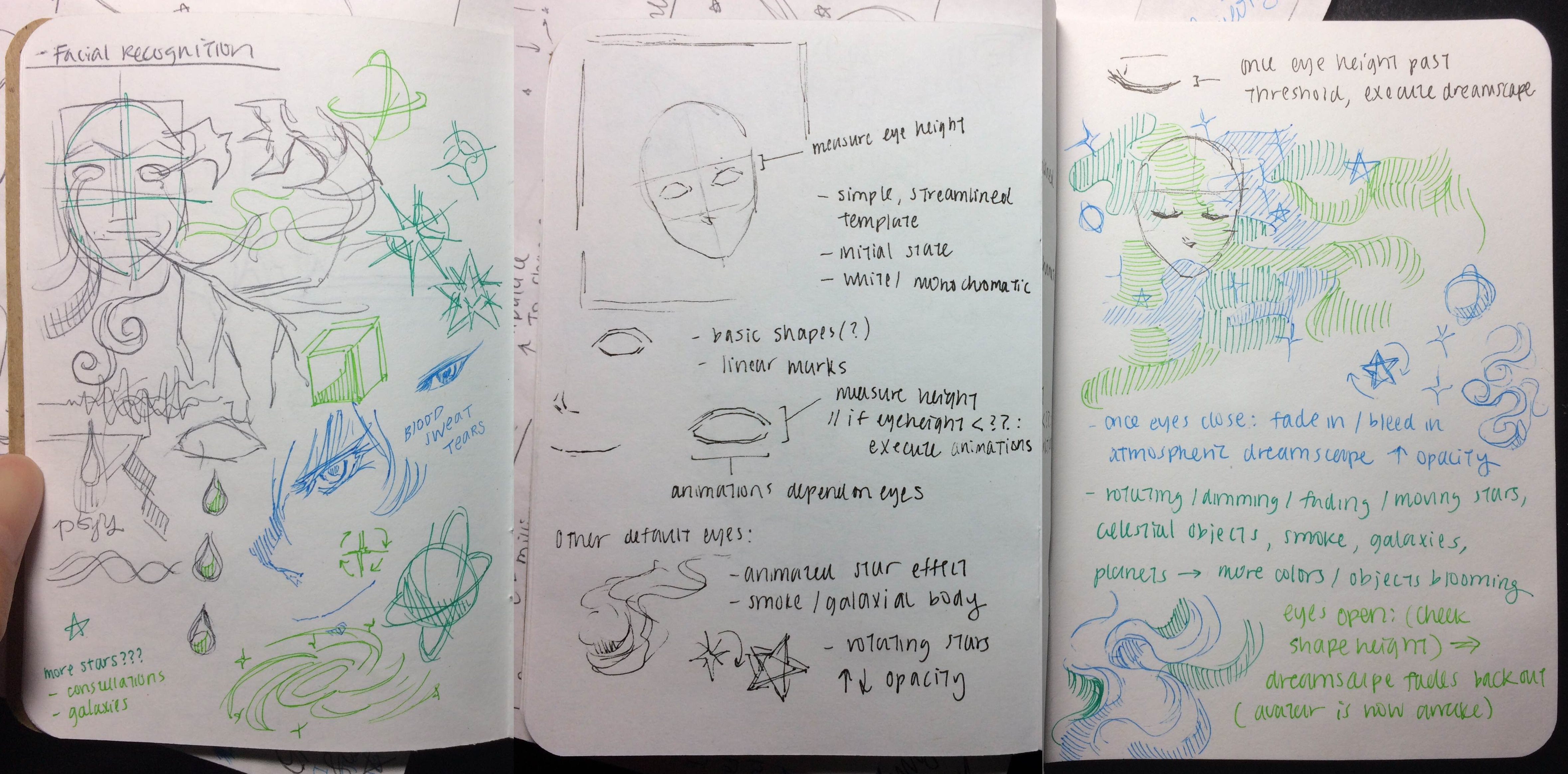

Type as a path


Got into rotating type


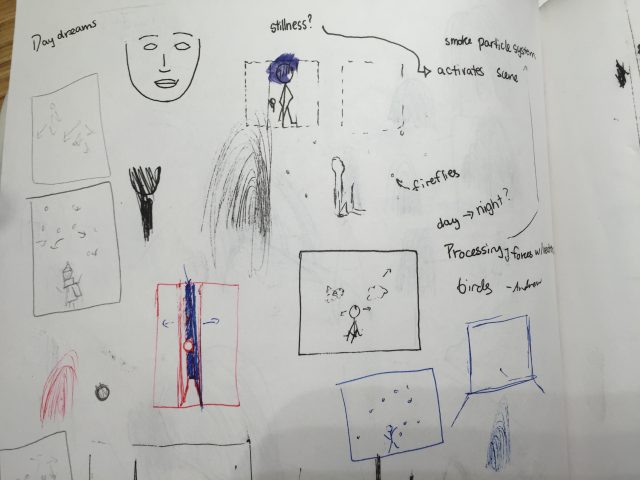

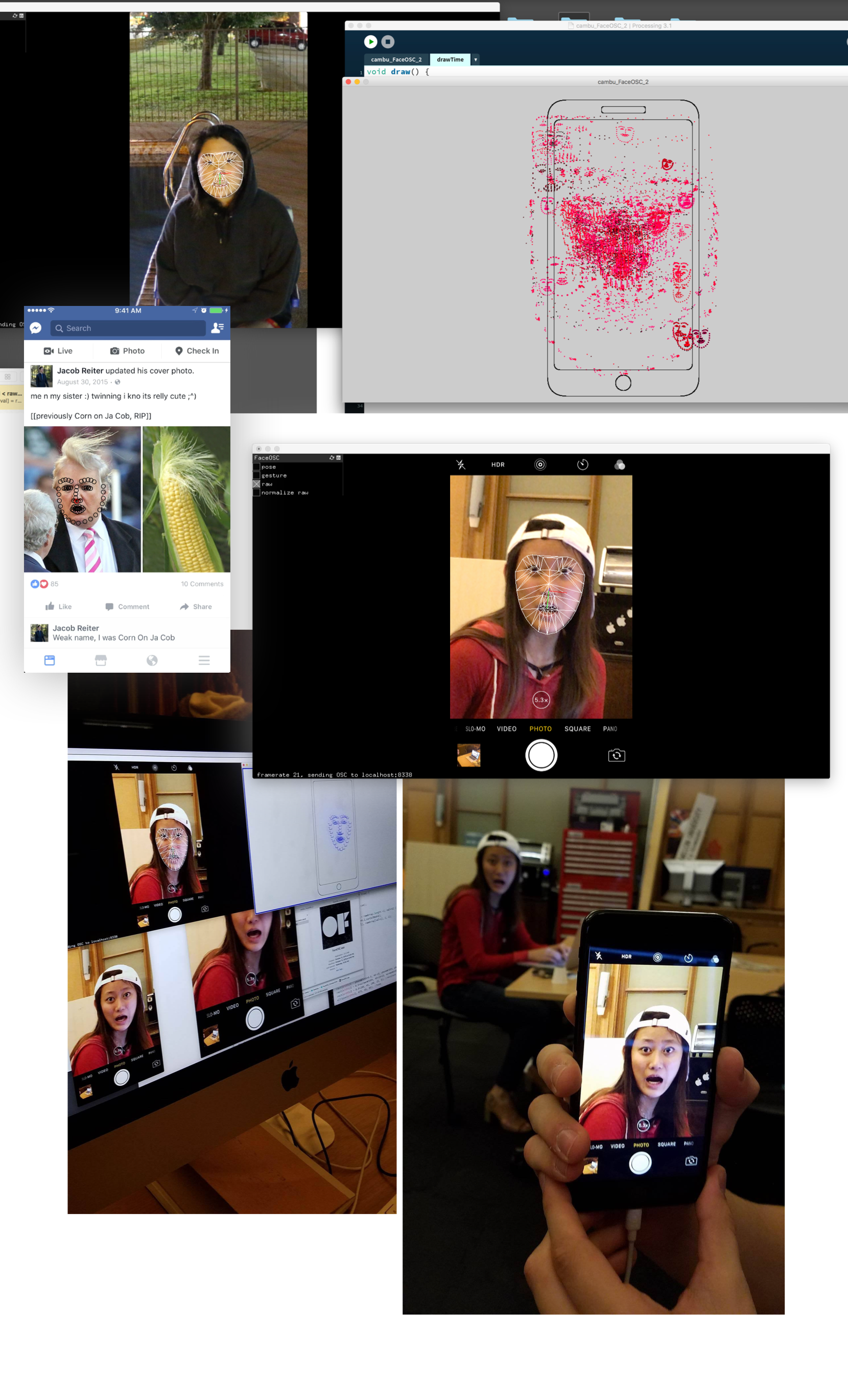

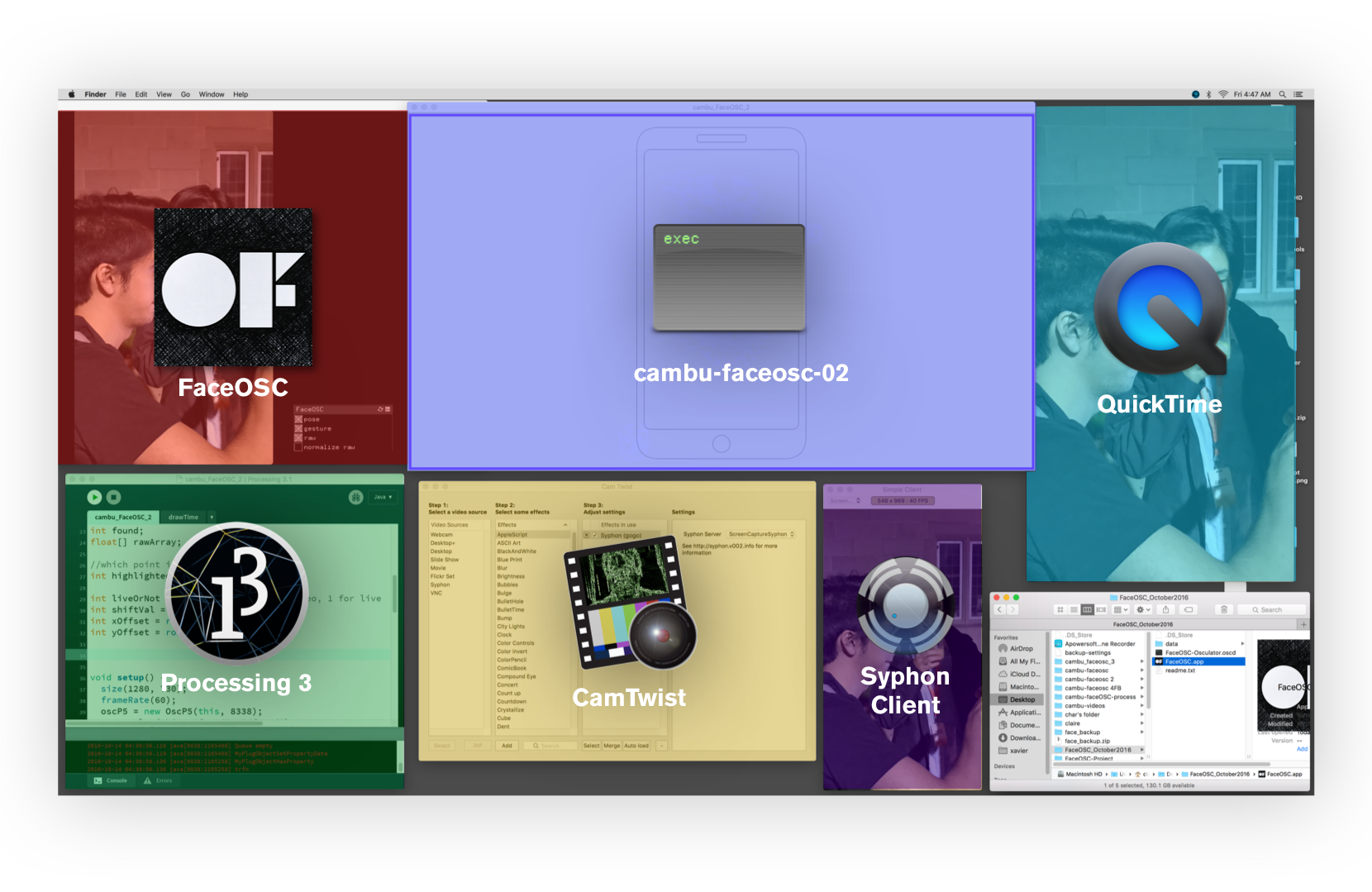

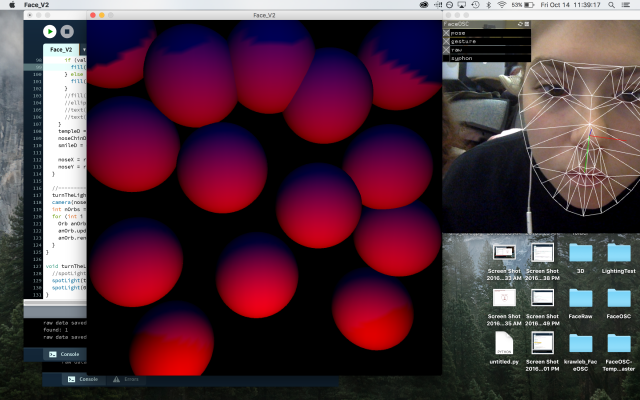

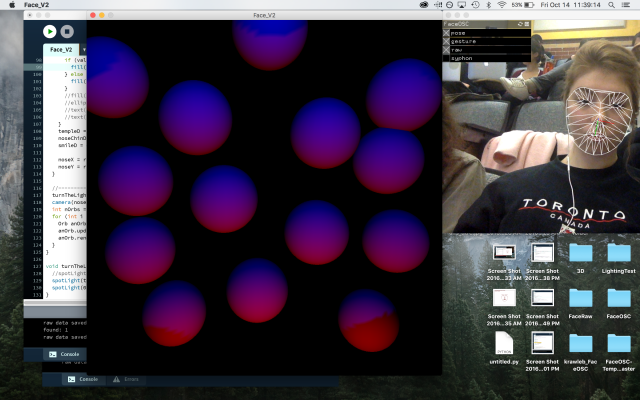

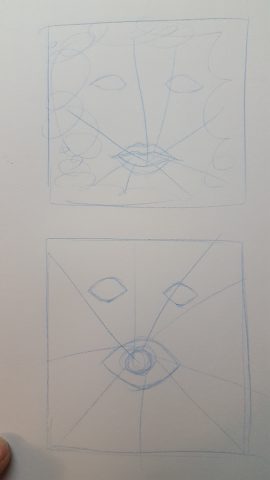

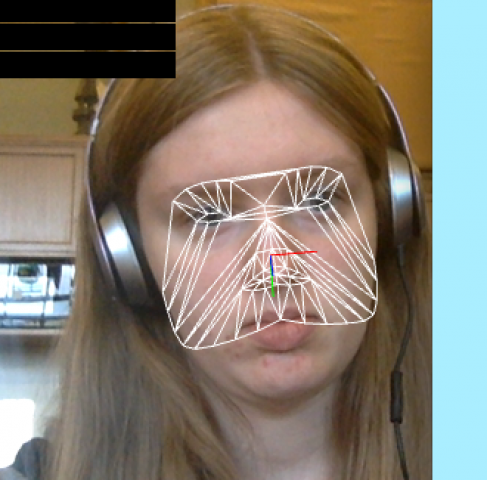

GIF of me making a composition using FaceOSC
