Keali-Book

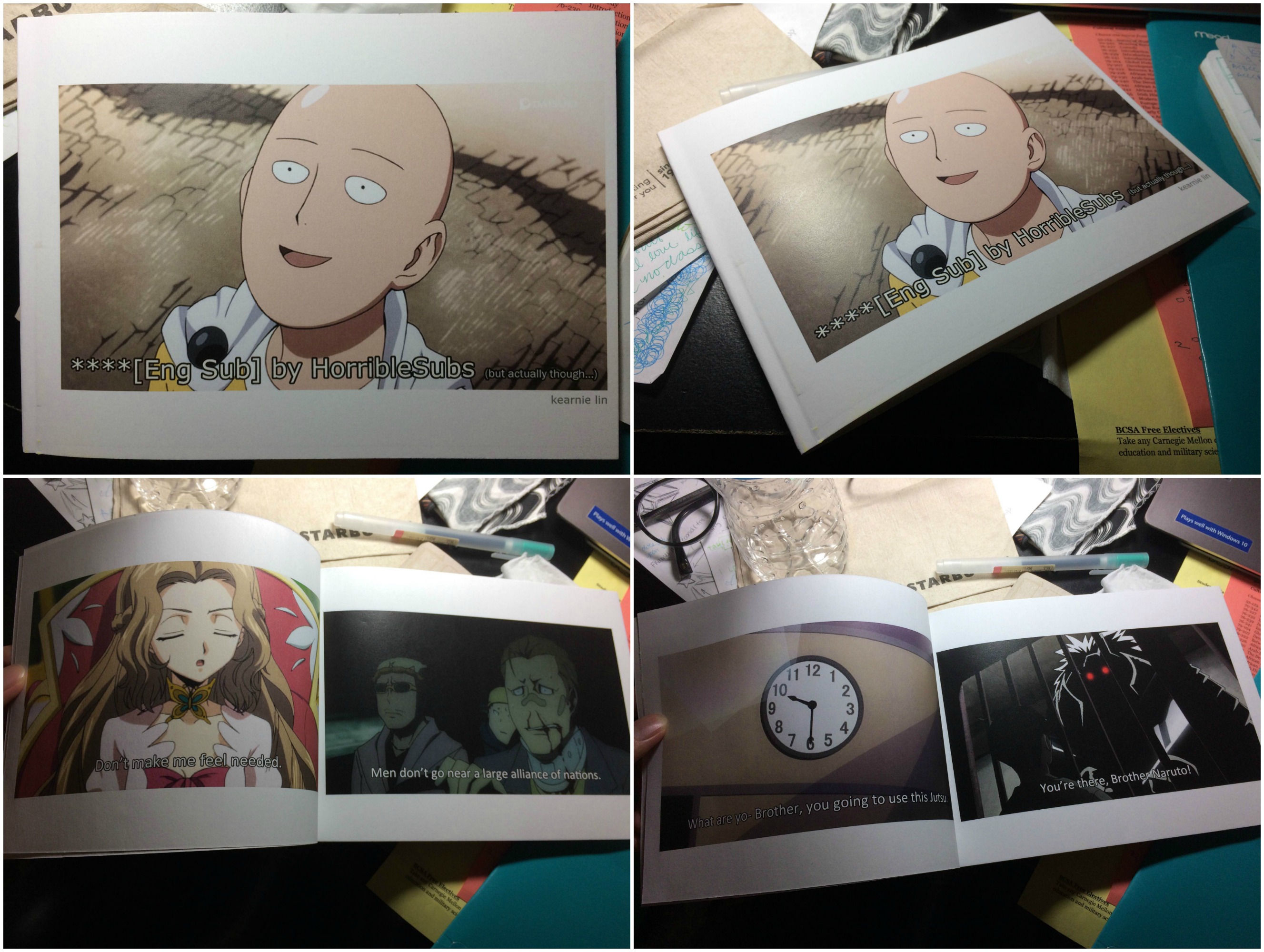

****[Eng Sub] by HorribleSubs (but actually though…) is a collection of randomly selected anime episode screencaps mismatched with arbitrarily generated, nonsensical, and irrelevant subtitles created through Markov Chains. A light-hearted and comedic production, the book pays homage to the dear memories of classic Japanese animated shows around which my adolescent life revolved; the collection of such franchises was a big influence on me wanting to become an artist. The title is reminiscent of the general title formats of the videos, as non-Japanese speakers attentively sought for the episodes that had the [eng sub] tag in the search results. *HorribleSubs is a popular provider of such subtitled episodes for numerous seasons.

Process:

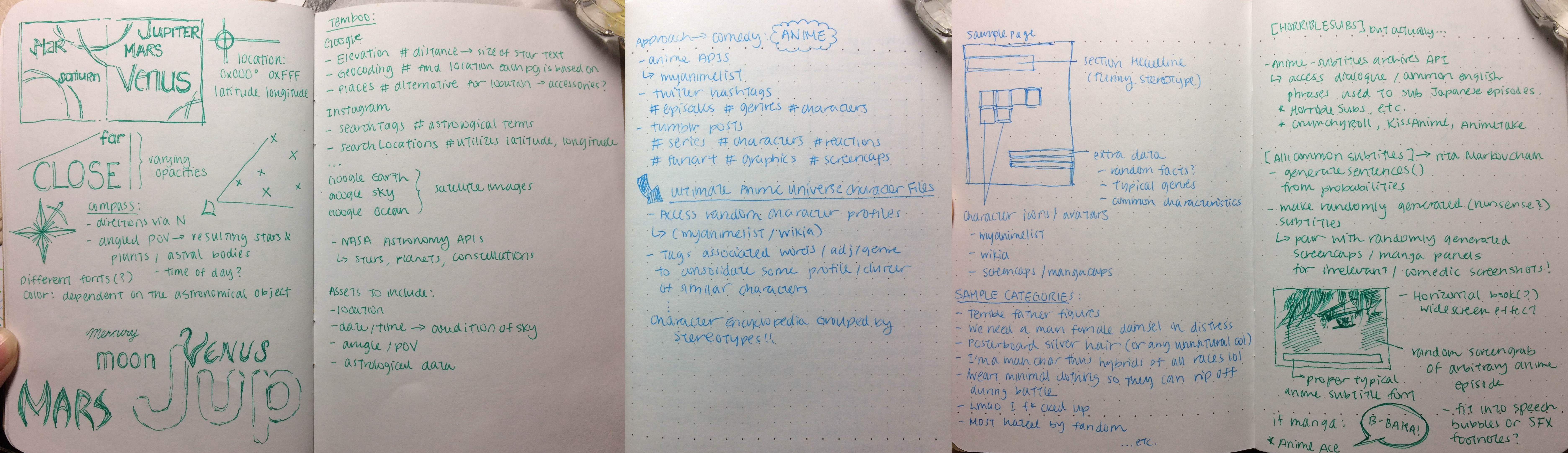

My initial idea was entirely different–in retrospect, it’s amusing that my ideas throughout the process and the final resulting product are both appealing aesthetics to me, though visually and thematically different. I originally intended to make my book using APIs regarding nature astronomy–I thus researched Google APIs, particularly those affiliated with Google Maps and Google Earth (which favorably extended to data of oceans, landmarks, and stars). I wanted to randomly generate latitude and longitude locations on the planet, and then visually represent the state of the sky, and possibly the surroundings, at that location using designs and typography. I sketched out some visuals, having different fonts and sizes for stars and planets being laid onto the page depending on the angle point of view and the distance of the celestial bodies and Earth. This plan eventually fell farther from my grasp as I failed to find the relevant APIs, and also discovered that many of the astronomy-related APIs were no longer updated or accessible.

It was then when I noticed that plenty of the generative book type arts I had seen up to that point were comedic, meant to be a light and comical read, and I thought perhaps I could attempt this as well being an illustrator that usually does not work on comedy-related artworks. As such came my anime idea, where I just had the most arbitrary thought of pairing up random screencaps of episodes to nonsensical, maybe gibberish, captions. I laughed at the thought of the most ordinary images captioned with the most irrelevant subtitles underneath. This also meant a lot to me personally as someone who decided to pursue art because of the cartoons and games with which I grew up. It was refreshing to, in a way, revisit and work on a project that displayed this field of art which, to my own experience, has been such a taboo at this institution for reasons I cannot comprehend. (Please take cartoons seriously…) I feel like it has been so long since I’ve made something this potentially funny and light-hearted.

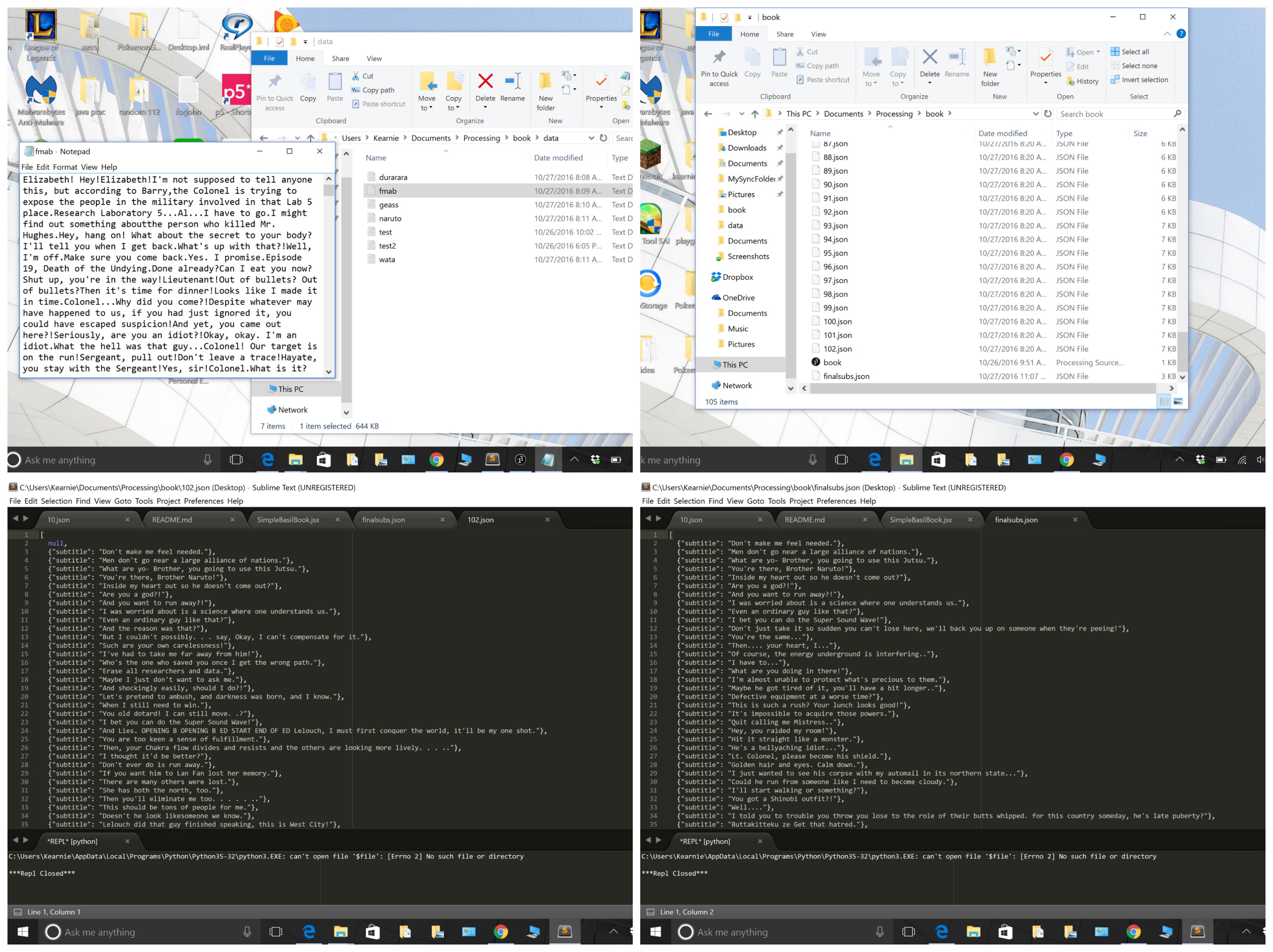

The main key in getting started was familiarizing myself with Rita–something completely new: I needed to learn about Markov Chains. This meant Dan Shiffman tutorials, dissecting references, and downloading and analyzing the example code that generated random sentences whenever the user clicked. Essentially, I modified the example code to feed in my own text files (collected anime subtitles), and to output the JSON objects as an array with {“subtitle”:} types.

I then wrote a script in Python to take and save random screenshots per all the downloaded episode files, and to save and condense all the subtitles per video into .txt files, organized by series. I brought all the series’ subtitles into the Processing file to generate sentences using Markov from them. I then ran the program and clicked as many times as I wanted to collect plenty randomly generated, nonsense subtitles that would be placed in my book.

Bringing the code into basil was more bearable with the sample book which Golan provided; the necessary lines and files were replaced with my own, and from there on the priority was to mimic the aesthetic of an adolescent’s exposure to anime: I wanted to the book to be unrefined, simple, informal. The screencaps were aligned per page in the center, as if it was directly captured from the video itself, and the subtitles were positioned to be at the bottom of the screen, also center-aligned and not past the video borders. The subtitle font was purposely chosen to be of the ones typically used to sub episodes, with easy readability, reasonable size, and a white or pale yellow fill color. I wanted to represent the online streaming environment as much as possible to fulfill the vision of the book (as direct representations of a subbed episode on some browser video player).

Streaming the episodes of a foreign cartoon from the states actually felt risky at times; if you couldn’t find the episode you wanted on youtube, you had to rely on google, and I personally was always wary with which sites could be trusted/which one had reliable subtitles (haha)/which one had good quality/which one may give my computer a virus, etc… I also factored this “experience” into the overall visual aspect of the book: once again, childish, innocent, perhaps even ratchet–the outcome is simple with minimal elements, but I feel like it fulfilled the task of essentially representing the necessary attributes. The final result made me, and others, laugh–which I am more than thankful for. I would also say the simple outcome is deceptively simple from all the generativity behind it…(personal benchmark: first time writing a script…!)

Here’s a video of the professor, flipping through the book:

final pdf: kearniebook5

Python script:

import subprocess

import shutil

import shlex

import re

import ass

import os

def takeSnapshots(fileName,ep):

amountPerEp = 10

episodeTime = 20*60

ignoreTime = 60*3

interval = int((episodeTime - 2*ignoreTime)/amountPerEp - 0.01)

base = "kearnie/screencaps/"

extractTimes = [i for i in range(ignoreTime,episodeTime-ignoreTime,interval)]

for i in range(len(extractTimes)):

time = extractTimes[i]

args = ["mpv","-ao","null","-sid","no","-ss",str(int(time)),"-frames","1","-vo","image",

"--vo-image-format=png", fileName]

try:

subprocess.run(args)

shutil.move("00000001.png",base + "%d.png" % (ep*amountPerEp+i))

except:

print("fal")

trackRegex = re.compile("mkvextract:\s(\d)")

removeBrackets = regex = re.compile(".*?\((.*?)\}")

def getSubtitleTracks(fileName):

output = subprocess.check_output(["mkvinfo",fileName],universal_newlines=True).splitlines()

currentTrack = None

sub_tracks = []

for line in output:

if "Track number:" in line:

trackNumber = trackRegex.search(line).group(1)

currentTrack = trackNumber

if "S_TEXT/ASS" in line:

sub_tracks.append(currentTrack)

return sub_tracks

def exportSRT(fileName, track):

srtName = fileName + "-%s.srt" % track

args = ["mkvextract", "tracks",fileName, "%s:%s" % (track,srtName)]

subprocess.run(args)

return srtName

def cleanLine(line):

newLine = ""

inBracket = False

lastBackSlash = False

for c in line:

if c == "{":

inBracket = True

elif c == "}":

inBracket == False

elif not inBracket:

if c == "\\":

lastBackSlash = True

elif c != "N" or not lastBackSlash:

newLine += c

lastBackSlash = False

return newLine

def extractTextFromSubtitles(fileName):

tracks = getSubtitleTracks(fileName)

output = ""

for track in tracks:

srtName = exportSRT(fileName, track)

lines = []

with open(srtName,"r") as f:

doc = ass.parse(f)

for event in doc.events:

lines.append(cleanLine(event.text))

combined = "\n".join(lines)

if "in" in combined or "to" in combined or "for" in combined:

output += combined

return output

def extractFromFile(fileName,ep):

os.makedirs("kearnie/screencaps/",exist_ok=True)

text = extractTextFromSubtitles(fileName)

with open("kearnie/subs.txt","a") as f:

f.write(text)

takeSnapshots(fileName,ep)

def extractSeries():

ep = 0

for filename in os.listdir("."):

if filename.endswith(".mkv"):

extractFromFile(filename,ep)

ep += 1

extractSeries()

Processing code:

import rita.*;

import java.util.*;

//RiTa Markov Chain example template

//Does not contain JSON attributes yet 🙁

JSONArray subtitles;

int count = 0;

RiMarkov markov;

String line = "click to (re)generate!";

String[] files = { "../data/durarara.txt",

"../data/fmab.txt",

"../data/geass.txt",

"../data/naruto.txt",

"../data/wata.txt" };

int x = 160, y = 240;

void setup()

{

size(500, 500);

fill(0);

textFont(createFont("times", 16));

// create a markov model w' n=2 from the files

markov = new RiMarkov(2);

markov.loadFrom(files, this);

subtitles = new JSONArray();

}

void draw()

{

background(250);

text(line, x, y, 400, 400);

}

void mouseClicked()

{

if (!markov.ready()) return;

x = y = 50;

String[] lines = markov.generateSentences(1);

line = RiTa.join(lines, " ");

JSONObject subtitle = new JSONObject();

subtitle.setString("subtitle", lines[0]);

subtitles.setJSONObject(count, subtitle);

println(lines[0]);

if (lines[0] != null) {

saveJSONArray(subtitles, count + ".json");

count++;

}

}

Basil.js code:

#includepath "~/Documents/;%USERPROFILE%Documents";

#includepath "d:\\Documents";

#include "basiljs/bundle/basil.js";

// Load a data file containing your book's content. This is expected

// to be located in the "data" folder adjacent to your .indd and .jsx.

var jsonString = b.loadString("finalsubs.json");

var jsonData;

//--------------------------------------------------------

function setup() {

var pageFlip = 0;

// Clear the document at the very start.

b.clear (b.doc());

// Make a title page.

// b.fill(0,0,0);

// b.textSize(24);

// b.textFont("Calibri");

// b.textAlign(Justification.LEFT_ALIGN);

// b.text("A Basil.js Alphabet Book", 72,72,360,36);

// b.text("Golan Levin, Fall 2016", 72,108,360,36);

// Parse the JSON file into the jsonData array

jsonData = b.JSON.decode( jsonString );

b.println("Number of elements in JSON: " + jsonData.length);

// Initialize some variables for element placement positions.

// Remember that the units are "points", 72 points = 1 inch.

var titleX = 72;

var titleY = 72;

var titleW = 72;

var titleH = 72;

var captionX = 38;

var captionY = b.height - 115;

var captionW = 500;

var captionH = 36;

var imageX = 38;

var imageY = 72;

var imageW = 500;

var imageH = 288;

// Loop over every element of the book content array

// (Here assumed to be separate pages)

for (var i = 0; i < jsonData.length; i++) {

// Create the next page.

b.addPage();

b.println(pageFlip);

if (pageFlip == 0) {

b.println("*******");

// Load an image from the "images" folder inside the data folder;

// Display the image in a large frame, resize it as necessary.

b.noStroke(); // no border around image, please.

var anImageFilename = "images/" + b.floor(b.random(0,40)) + ".png"

var anImage = b.image(anImageFilename, imageX, imageY, imageW, imageH);

anImage.fit(FitOptions.PROPORTIONALLY);

pageFlip = 1;

b.println("*")

}

if (pageFlip == 1) {

// Create textframes for the "title" field.

// Draw an ellipse with a random color behind the title letter.

/*b.noStroke();

b.fill(b.random(180,220),b.random(180,220),b.random(180,220));

b.ellipseMode(b.CORNER);

b.ellipse (titleX,titleY,titleW,titleH);

b.fill(255);

b.textSize(56);

b.textFont("Calibri");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.CENTER_ALIGN );

b.text(jsonData[i].title, titleX,titleY,titleW,titleH);*/

// Create textframes for the "caption" fields

b.stroke(0);

b.fill(255);

b.textSize(20);

b.textFont("Calibri");

b.textAlign(Justification.CENTER_ALIGN, VerticalJustification.TOP_ALIGN );

b.text(jsonData[i].caption, captionX,captionY,captionW,captionH);

pageFlip = 0;

}

};

}

// This makes it all happen:

b.go();