Keali-FaceOSC

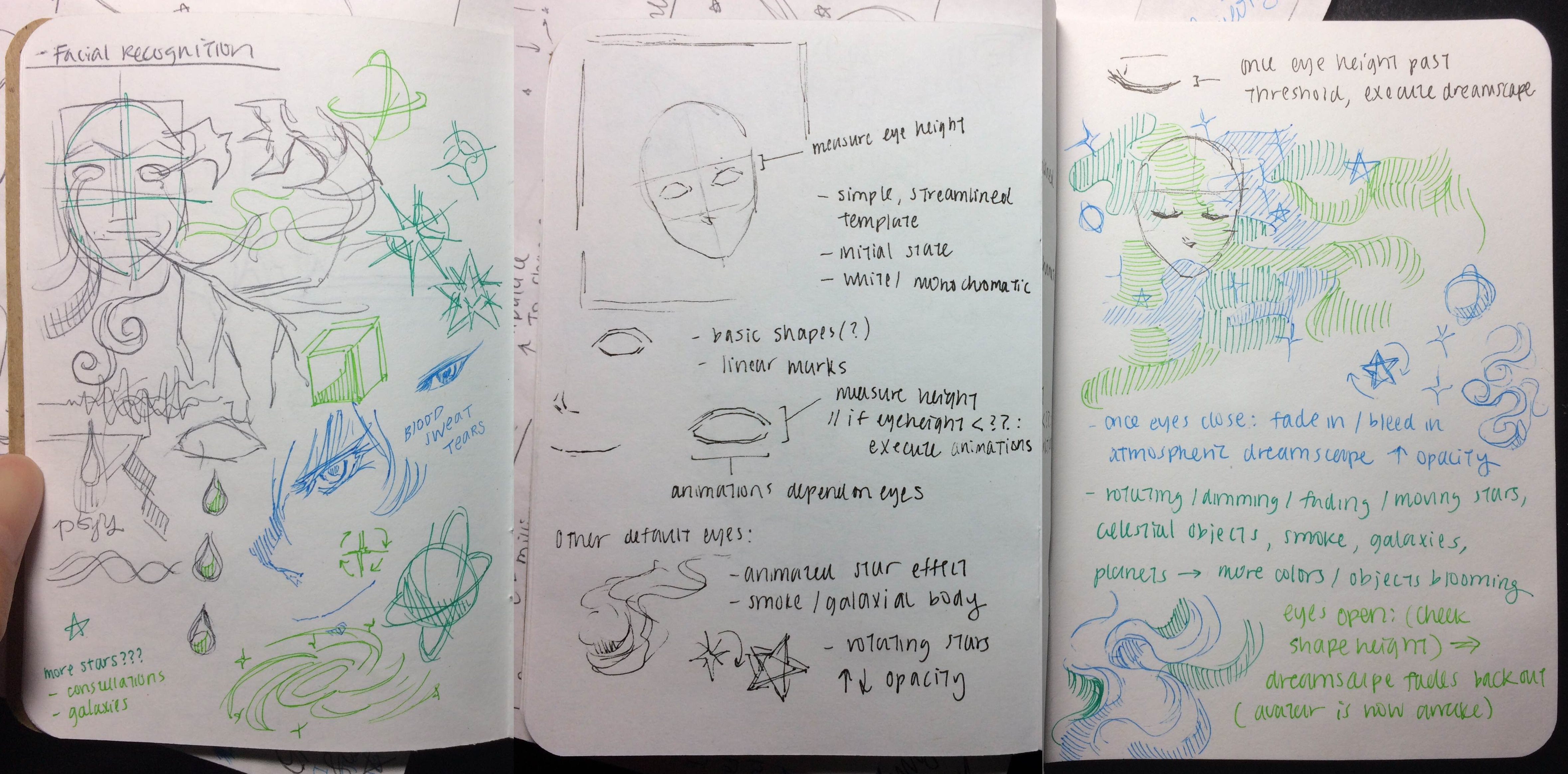

Rather than working with a concept that is instinctively face-controlled, such as objects that inherently represent faces, or masks, robots, and similar images, I wanted to explore something more abstract and closer to my own aesthetic: the introspective, tranquil, and atmospheric ideas on which I base much of my interactive work. I almost automatically thought of skies and seas, and gravitated towards the starry path of galaxies and stardust in which someone can lose him or herself.

This became the daydreaming concept of my project, which relies on the openness of one’s eyes. A dreamscape of night stars and specks of light fades in when the user’s eyes are closed or narrowed below their default openness (the sketch also treats lowered eyebrows as a trigger). If the user opens his or her eyes past a noticeable threshold, the dreamscape abruptly snaps back to a blank reality, as if one had suddenly been woken from a daydream by a third party. I also wanted to play with the irony of visuals that only appear when one’s eyes are closed, and that one therefore could never actually see (haha). For practical reasons, I later turned this into a daydream concept, where the eyes only need to narrow rather than fully close as in actual sleep. I feel this doesn’t stray far from my underlying intention of “being” in another world.
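The trigger described above reduces to one boolean test. The sketch below isolates it in plain Java so it can be checked outside Processing. The thresholds (3.0 for each eye, 7.8 for each eyebrow) are copied from the sketch’s `draw()` loop, where they were found by printing FaceOSC readings. They are almost certainly specific to one face, camera, and lighting setup. The class name `DaydreamTrigger` and the sample readings in `main` are hypothetical.

```java
class DaydreamTrigger {
    // Thresholds copied from the Processing sketch; tuned by hand for one setup.
    static final float EYE_THRESHOLD = 3.0f;
    static final float EYEBROW_THRESHOLD = 7.8f;

    /** True when either eye or either eyebrow reading falls below its threshold. */
    static boolean isDaydreaming(float eyeLeft, float eyeRight,
                                 float eyebrowLeft, float eyebrowRight) {
        return eyeLeft < EYE_THRESHOLD || eyeRight < EYE_THRESHOLD
            || eyebrowLeft < EYEBROW_THRESHOLD || eyebrowRight < EYEBROW_THRESHOLD;
    }

    public static void main(String[] args) {
        // Hypothetical FaceOSC readings, not measured values.
        System.out.println(isDaydreaming(3.4f, 3.3f, 8.1f, 8.0f)); // false: eyes open
        System.out.println(isDaydreaming(2.6f, 3.3f, 8.1f, 8.0f)); // true: left eye narrowed
    }
}
```

The test uses OR, so a single narrowed eye or a single lowered eyebrow is enough to start the dreamscape. Requiring both eyes (AND) would make the trigger less sensitive.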

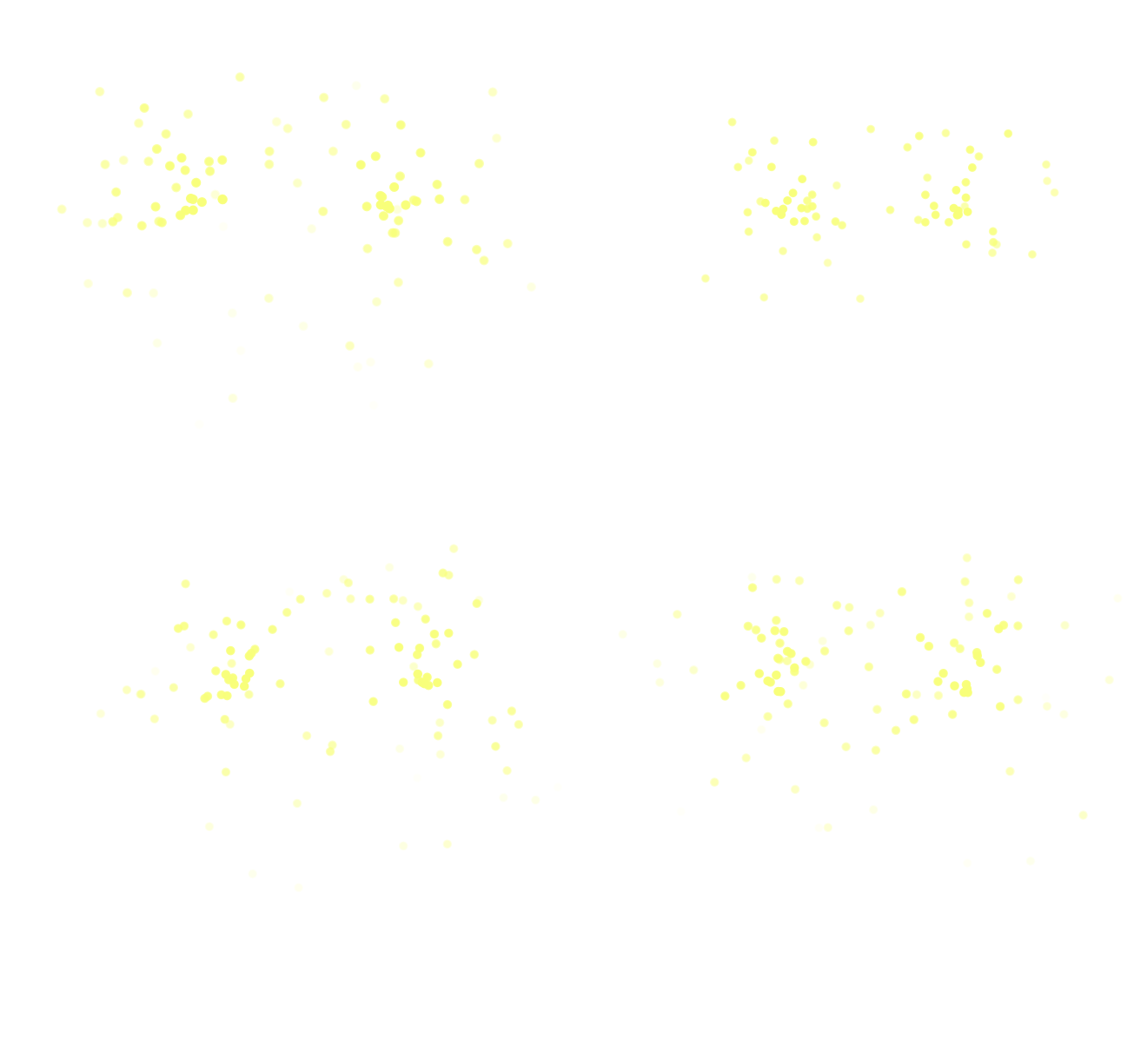

The visuals this time hinge on the atmosphere of the dreamscape, built from gradients, flickering and fading opacities, and the subtle movements of a particle class I had just learned. Rather than showing a blank template or default face, I carried the daydream theme abstractly into the “awake” state as well. In the default, “awake” phase of the simulation, I removed all traces of an avatar and instead placed two particle bursts where the eyes would be. These small explosions follow the user’s eyes and represent the stardust of the dreamscape itching to come to fruition, to burst out, suggesting how hard it can be not to daydream. Once someone blanks out or loses focus, or in this case narrows their eyes, the dreamscape reappears and the stardust particles float effortlessly and arbitrarily across the screen. When the simulation is first run, the stardust expands from a cluster in the middle of the screen (an abstraction of the center of the user’s mind) and travels outwards to the edges. This gives a sense of moving forward and falling deeper into the dream. The rest of the dreamscape is decorated with flickering stars and a fading night gradient.
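The eye bursts follow a standard emitter pattern. Every frame, the sketch adds one particle at a fixed origin, then updates every live particle and removes the dead ones. Each particle gets a random initial velocity (up to 2 px/frame upward), a constant downward acceleration of 0.05, and a lifespan that starts at 255, doubles as its alpha, and drops by 5 per frame. The version below mirrors those constants in plain Java without any drawing. `ParticleBurst` and its nested `Particle` are hypothetical stand-ins for the sketch’s `ParticleSystem` and `Particle` classes.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Random;

class ParticleBurst {
    static final Random RNG = new Random(1); // seeded so runs are repeatable

    static class Particle {
        double x, y, vx, vy;
        double lifespan = 255.0; // also used as the fill alpha in the sketch

        Particle(double x, double y) {
            this.x = x;
            this.y = y;
            vx = -1 + 2 * RNG.nextDouble(); // roughly random(-1, 1)
            vy = -2 * RNG.nextDouble();     // roughly random(-2, 0): upward kick
        }

        void update() {
            vy += 0.05;      // constant downward acceleration
            x += vx;
            y += vy;
            lifespan -= 5.0; // fades out over about 51 frames
        }

        boolean isDead() { return lifespan < 0.0; }
    }

    final double originX, originY;
    final List<Particle> particles = new ArrayList<>();

    ParticleBurst(double originX, double originY) {
        this.originX = originX;
        this.originY = originY;
    }

    /** One frame: emit one particle, then update all and drop the dead ones. */
    void step() {
        particles.add(new Particle(originX, originY));
        Iterator<Particle> it = particles.iterator();
        while (it.hasNext()) {
            Particle p = it.next();
            p.update();
            if (p.isDead()) it.remove(); // safe removal during iteration
        }
    }

    public static void main(String[] args) {
        ParticleBurst burst = new ParticleBurst(-25, -10); // offsets used in setup()
        for (int frame = 0; frame < 100; frame++) burst.step();
        System.out.println(burst.particles.size()); // 51
    }
}
```

A particle is removed on its 52nd update, when its lifespan reaches -5. A burst that emits once per frame therefore settles at 51 live particles. The original iterates backwards by index to remove safely; `Iterator.remove()` is the equivalent idiom here.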

I also wanted to enhance the dreamscape with calming, therapeutic music, which I did with Processing’s Sound library. The amp() of a looping track rises gradually while the eyes are narrowed and the dreamscape is showing. It drops to 0, cutting the music off abruptly, as soon as the eyes open and the avatar is back in “reality”. The track I selected is “Will of the Heart” from the soundtrack of the animated show Bleach.
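A single counter, `eyesClosedValue`, drives the volume. While the eyes stay narrowed, `draw()` sets `amp(eyesClosedValue/255.0)` and then adds 3, clamping at 255. The same value is the alpha of the gradient colors `c1` and `c2`. When the eyes open, the counter and the volume both snap back to 0. The plain-Java sketch below reproduces that per-frame logic without the audio call. `DreamFade` and `frame()` are hypothetical names.

```java
class DreamFade {
    static final float STEP = 3f;  // increment per frame while daydreaming
    static final float MAX = 255f; // clamp; also the full alpha value

    float level = 0f; // plays the role of eyesClosedValue

    /** Advances one frame and returns the amplitude that frame would use. */
    float frame(boolean daydreaming) {
        if (!daydreaming) {
            level = 0f; // waking up resets the fade instantly
            return 0f;
        }
        float amp = level / MAX;               // amp is set before the increment, as in draw()
        level = Math.min(level + STEP, MAX);
        return amp;
    }

    public static void main(String[] args) {
        DreamFade fade = new DreamFade();
        int frames = 0;
        float amp = 0f;
        while (amp < 1.0f) { // count frames until full volume
            amp = fade.frame(true);
            frames++;
        }
        System.out.println(frames); // 86
    }
}
```

Full volume arrives on the 86th consecutive frame, 85 frames after the eyes narrow. At the sketch’s `frameRate(30)`, that is a fade-in of roughly 2.8 seconds.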

Hence I wanted this to be a sort of introspective interaction between a person and him or herself. People fight their own concentration and awareness so as not to accidentally fade into a daydream, or perhaps daydream on purpose and enjoy the experience. Beyond that, it is an interaction between the user and the interface, inviting them to enjoy the visuals of an abstract, idyllic, and atmospheric setting.

**Personal notes on troubleshooting, specific to my own laptop:** I think that because my laptop is a newer Windows model, its screen resolution is supposedly “crazy high”. As a result, a lot of my software (e.g. Processing, Photoshop, CamStudio, OBS) turns up tiny, since those windows are designed for lower resolutions. When I run the Processing code, the output window is just a small square 🙁 It’s unfortunate, because I feel the size takes away from being fully immersed in the visuals, but it can’t be helped. I checked online for a fix, and this has actually been tagged as a “high-priority” issue on Processing’s open-source GitHub repository, but no solution has been provided yet. This project, especially the screen-recording part, also made me really wish I had a Mac (haha). Finding software to record the screen properly was hard. Working with that software’s windows to click the Play/Stop button on my “high-res” laptop was even harder because, surprise, those windows were tiny too. Then the output files were huge (my first attempt produced a 4.0 GB file), but I just wanted to record some benchmarks for my own sake. This was quite a journey, and in the end I’m thankful I got done what I needed to get done.

//

// a template for receiving face tracking osc messages from

// Kyle McDonald's FaceOSC https://github.com/kylemcdonald/ofxFaceTracker

//

// 2012 Dan Wilcox danomatika.com

// for the IACD Spring 2012 class at the CMU School of Art

//

// adapted from Greg Borenstein's 2011 example

// http://www.gregborenstein.com/

// https://gist.github.com/1603230

//

//modified 10.14.2016 for faceosc project

//dreamscape by kearnie lin

import oscP5.*;

OscP5 oscP5;

import java.util.concurrent.ThreadLocalRandom;

import java.util.*;

import processing.sound.*; //for dreamscape audio

SoundFile file;

// num faces found

int found;

// pose

float poseScale;

PVector posePosition = new PVector();

PVector poseOrientation = new PVector();

// gesture

float mouthHeight;

float mouthWidth;

float eyeLeft;

float eyeRight;

float eyebrowLeft;

float eyebrowRight;

float jaw;

float nostrils;

// Constants

int Y_AXIS = 1;

int X_AXIS = 2;

color b1, b2, c1, c2;

int dim;

ParticleSystem ps;

ParticleSystem ps2;

Dust[] dustList = new Dust [60];

float gMove = map(.15,0,.3,0,30); //thank you ari!

void setup() {

size(640, 640);

frameRate(30);

c1 = color(17,24,51);

c2 = color(24,55,112);

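//eyeRight and eyeLeft are still 0 here, so the bursts sit at fixed offsets from the face center; translate(posePosition) in draw() makes them follow the face
ps = new ParticleSystem(new PVector(eyeRight-25,-10));

ps2 = new ParticleSystem(new PVector(eyeLeft+25, -10));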

for (int i = 0; i < dustList.length; i++) {
dustList[i] = new Dust();
}
file = new SoundFile(this, "faceoscmusic.mp3");
file.loop();
file.amp(0); //start muted; the volume rises only while the dreamscape is showing
oscP5 = new OscP5(this, 8338);
oscP5.plug(this, "found", "/found");
oscP5.plug(this, "poseScale", "/pose/scale");
oscP5.plug(this, "posePosition", "/pose/position");
oscP5.plug(this, "poseOrientation", "/pose/orientation");
oscP5.plug(this, "mouthWidthReceived", "/gesture/mouth/width");
oscP5.plug(this, "mouthHeightReceived", "/gesture/mouth/height");
oscP5.plug(this, "eyeLeftReceived", "/gesture/eye/left");
oscP5.plug(this, "eyeRightReceived", "/gesture/eye/right");
oscP5.plug(this, "eyebrowLeftReceived", "/gesture/eyebrow/left");
oscP5.plug(this, "eyebrowRightReceived", "/gesture/eyebrow/right");
oscP5.plug(this, "jawReceived", "/gesture/jaw");
oscP5.plug(this, "nostrilsReceived", "/gesture/nostrils");
}
float eyesClosedValue = 0; //fade level: alpha of the dreamscape and (divided by 255) the music volume
void draw() {
background(255);
stroke(0);
boolean eyesClosed = false;
if(found > 0) {

pushMatrix();

translate(posePosition.x, posePosition.y);

scale(poseScale);

noFill();

if (eyeLeft < 3.0 || eyeRight < 3.0 || eyebrowLeft < 7.8 || eyebrowRight < 7.8) {
eyesClosed = true;
}
println(eyeLeft + " " + eyeRight); //debugging (finding threshold vals)
if (eyesClosed == false) {
ps.addParticle();
ps.run();
ps2.addParticle();
ps2.run();
}
popMatrix();
}
if (eyesClosed) {
file.amp(eyesClosedValue/255.0);
c1 = color(17,24,51,eyesClosedValue);
c2 = color(24,55,112,eyesClosedValue);
eyesClosedValue += 3;
if (eyesClosedValue > 255) eyesClosedValue = 255;

//gradient

setGradient(0, 0, width, height, c1, c2, Y_AXIS);

Random ran = new Random(50); //fixed seed, so every frame redraws the same star positions, sizes, and colors

//implement stars

for (int i = 0; i < 60; i++) {

noStroke();

int[] r = {230,235,242,250,255};

int[] g = {228,234,242,250,255};

int[] b = {147,175,208,240,255};

int starA = (int)(min(ran.nextInt(100),eyesClosedValue) + sin((frameCount+ran.nextInt(100))/20.0)*40);

fill(r[(ran.nextInt(5))],

g[(ran.nextInt(5))],

b[ran.nextInt(5)], starA);

pushMatrix();

translate(width*ran.nextFloat(), height*ran.nextFloat());

rotate(frameCount / -100.0);

float r1 = 2 + (ran.nextFloat()*4);

float r2 = 2.0 * r1;

star(0, 0, r1, r2, 5);

popMatrix();

}

for (int j = 0; j < dustList.length; j++) {
dustList[j].update();
dustList[j].display();
}
} else {
eyesClosedValue = 0;
file.amp(0);
}
}
class Dust {
PVector position;
PVector velocity;
float move = random(-7,1);
Dust() {
position = new PVector(width/2,height/2);
velocity = new PVector(random(-1,1), random(-1,1));
}
void update() {
position.add(velocity);
//wrap horizontally in both directions so dust drifting left also comes back
if (position.x > width) {
position.x = 0;
} else if (position.x < 0) {
position.x = width;
}

if ((position.y > height) || (position.y < 0)) {

velocity.y = velocity.y * -1;

}

}

void display() {

fill(255,255,212,100);

ellipse(position.x,position.y,gMove+move, gMove+move);

ellipse(position.x,position.y,(gMove+move)*0.5,(gMove+move)*0.5);

}

}

class ParticleSystem {

ArrayList<Particle> particles;

PVector origin;

ParticleSystem(PVector location) {

origin = location.copy();

particles = new ArrayList<Particle>();

}

void addParticle() {

particles.add(new Particle(origin));

}

void run() {

for (int i = particles.size()-1; i >= 0; i--) {

Particle p = particles.get(i);

p.run();

if (p.isDead()) {

particles.remove(i);

}

}

}

}

class Particle {

PVector location;

PVector velocity;

PVector acceleration;

float lifespan;

Particle(PVector l) {

acceleration = new PVector(0,0.05);

velocity = new PVector(random(-1,1),random(-2,0));

location = l.copy();

lifespan = 255.0;

}

void run() {

update();

display();

}

// update location

void update() {

velocity.add(acceleration);

location.add(velocity);

lifespan -= 5.0;

}

// display particles

void display() {

noStroke();

//fill(216,226,237,lifespan-15);

//ellipse(location.x,location.y,3,3);

fill(248,255,122,lifespan);

ellipse(location.x,location.y,2,2);

ellipse(location.x,location.y,2.5,2.5);

}

// a particle is "dead" (and removed by ParticleSystem.run()) once its lifespan, which is also its alpha, drops below 0

boolean isDead() {

if (lifespan < 0.0) {

return true;

} else {

return false;

}

}

}

//draw stars

void star(float x, float y, float radius1, float radius2, int npoints) {

float angle = TWO_PI / npoints;

float halfAngle = angle/2.0;

beginShape();

for (float a = 0; a < TWO_PI; a += angle) {

float sx = x + cos(a) * radius2;

float sy = y + sin(a) * radius2;

vertex(sx, sy);

sx = x + cos(a+halfAngle) * radius1;

sy = y + sin(a+halfAngle) * radius1;

vertex(sx, sy);

}

endShape(CLOSE);

}

//draw gradient

void setGradient(int x, int y, float w, float h, color c1, color c2, int axis ) {

noFill();

if (axis == Y_AXIS) { // Top to bottom gradient

for (int i = y; i <= y+h; i++) {

float inter = map(i, y, y+h, 0, 1);

color c = lerpColor(c1, c2, inter);

stroke(c);

line(x, i, x+w, i);

}

}

}

// OSC CALLBACK FUNCTIONS

public void found(int i) {

println("found: " + i);

found = i;

}

public void poseScale(float s) {

println("scale: " + s);

poseScale = s;

}

public void posePosition(float x, float y) {

println("pose position\tX: " + x + " Y: " + y );

posePosition.set(x, y, 0);

}

public void poseOrientation(float x, float y, float z) {

println("pose orientation\tX: " + x + " Y: " + y + " Z: " + z);

poseOrientation.set(x, y, z);

}

public void mouthWidthReceived(float w) {

println("mouth Width: " + w);

mouthWidth = w;

}

public void mouthHeightReceived(float h) {

println("mouth height: " + h);

mouthHeight = h;

}

public void eyeLeftReceived(float f) {

println("eye left: " + f);

eyeLeft = f;

}

public void eyeRightReceived(float f) {

println("eye right: " + f);

eyeRight = f;

}

public void eyebrowLeftReceived(float f) {

println("eyebrow left: " + f);

eyebrowLeft = f;

}

public void eyebrowRightReceived(float f) {

println("eyebrow right: " + f);

eyebrowRight = f;

}

public void jawReceived(float f) {

println("jaw: " + f);

jaw = f;

}

public void nostrilsReceived(float f) {

println("nostrils: " + f);

nostrils = f;

}

// all other OSC messages end up here

void oscEvent(OscMessage m) {

if(m.isPlugged() == false) {

println("UNPLUGGED: " + m);

}

}