Elizabeth Agyemang, Final Project: SubTweets

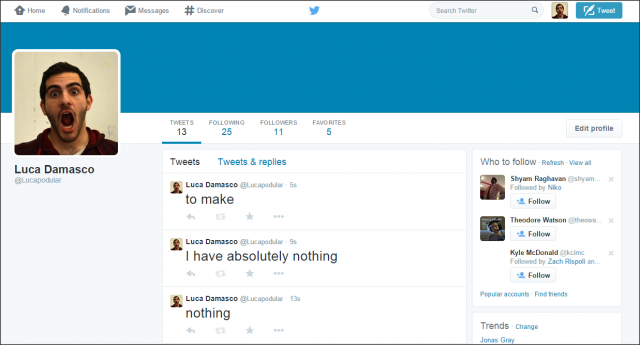

Keep munching, in the end it’s always the bigger sub that comes out stronger#subterfuge

— Marine Sub (@substweeting) December 9, 2014

Eat your sub right or someone else will#subterfuge

— Marine Sub (@substweeting) December 4, 2014

Every sub has to be okay with the fact that some subs taste better than others#saucystuff

— Marine Sub (@substweeting) December 3, 2014

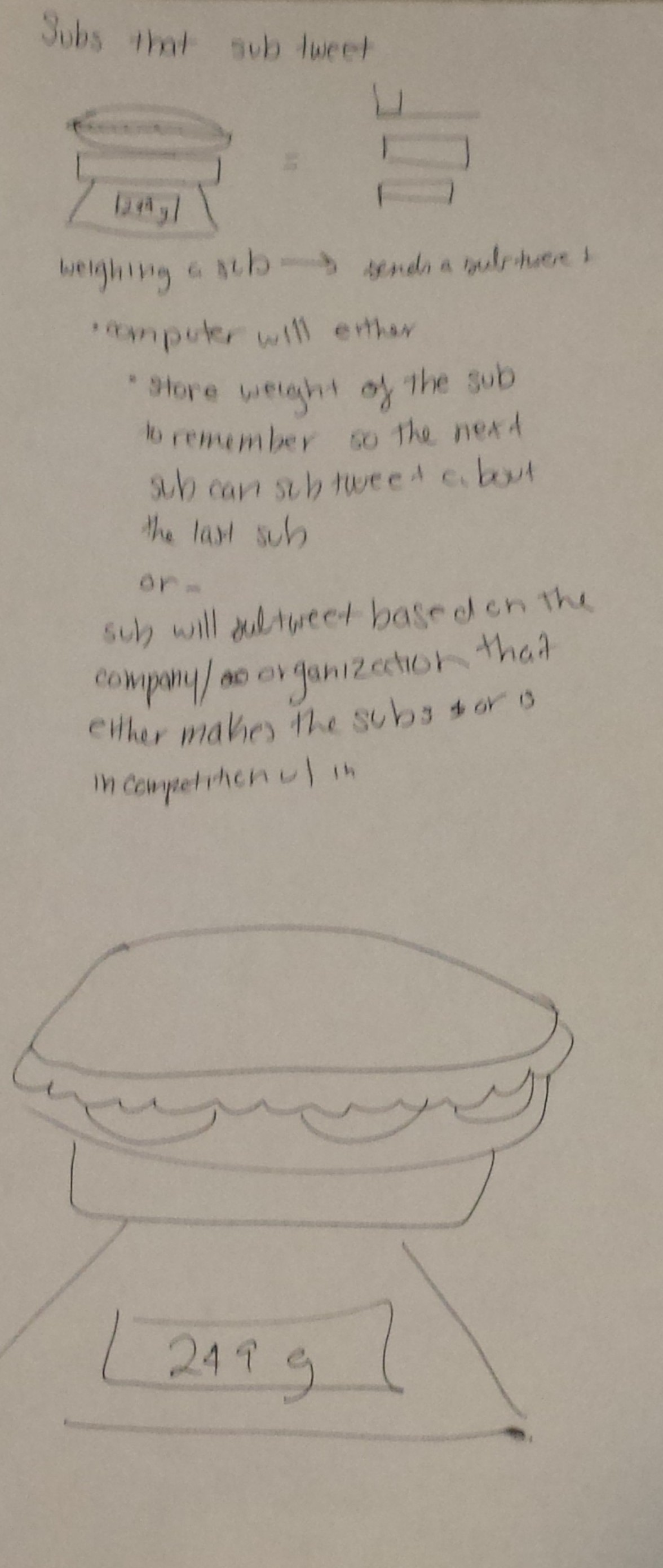

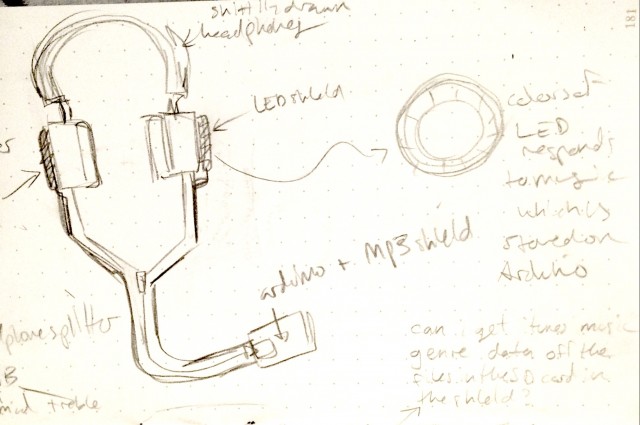

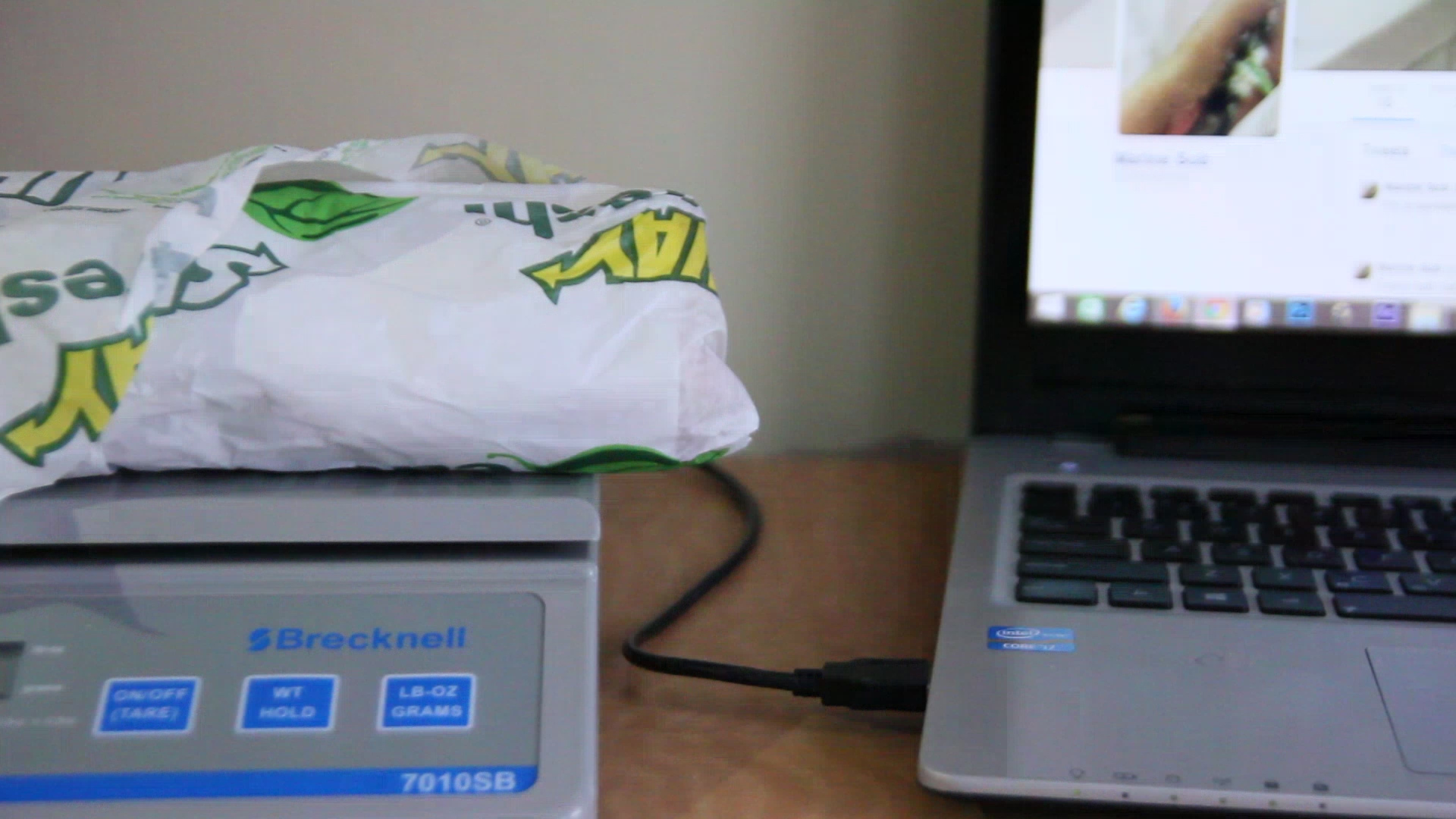

As I discussed in my last post, the culture of ‘subtweeting’ on Twitter has been the source of many public arguments, rages, and tensions between users, from everyday people to celebrities, organizations, and even politicians. SubTweets comments on this widespread practice by parodying subtweeting through the voice of submarine sandwiches. In the project, a submarine sandwich is weighed on a scale. The result is a tweet, or ‘subtweet’, which a Processing program posts to Twitter using Temboo.

SubTweets: The Project

The piece seeks to start a conversation about how people communicate in the modern digital world. Increasingly, it seems, people turn to forums like Twitter to vent their frustration and anger when social and personal tensions arise. Rather than resolving these tensions, subtweeting heightens them, leading to rage wars and Twitter battles. SubTweets comments on this culture through humor and food.

How it works:

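The sandwiches are weighed on a digital scale. Once the reading settles, the program compares the weight against ranges stored in the code to recognize the type of sub:

- 100 to 290 grams for a six-inch sub
- 291 to 600 grams for a footlong

It then generates a subtweet by randomly combining a size-specific insult with an optional hashtag or @mention.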
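To illustrate the "generate a subtweet" step, here is a minimal Java sketch. It assumes the same structure as the Processing listing: an insult drawn from a size-specific index range, followed by either a hashtag or an @mention, never both. Index 0 of each array means "none". `SubTweetBuilder` and its methods are hypothetical names for illustration only. The sketch joins the non-empty pieces with single spaces, and it redraws the insult when the same one comes up twice in a row.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Hypothetical helper that assembles one subtweet from its pieces. */
public class SubTweetBuilder {
    private final Random random;
    private int lastInsult = -1; // index of the insult used last time

    public SubTweetBuilder(Random random) {
        this.random = random;
    }

    /** Picks an insult index in [from, to), avoiding the previous pick when there is a choice. */
    int pickInsult(int from, int to) {
        int pick;
        do {
            pick = from + random.nextInt(to - from);
        } while (pick == lastInsult && to - from > 1);
        lastInsult = pick;
        return pick;
    }

    /** Joins the non-empty pieces with single spaces so hashtags are not glued to the last word. */
    static String join(String... pieces) {
        List<String> kept = new ArrayList<>();
        for (String piece : pieces) {
            if (piece != null && !piece.trim().isEmpty()) {
                kept.add(piece.trim());
            }
        }
        return String.join(" ", kept);
    }

    /** Insult from [from, to), then a hashtag or an @mention (index 0 of each array means "none"). */
    public String build(String[] insults, int from, int to, String[] hashtags, String[] people) {
        String insult = insults[pickInsult(from, to)];
        int tag = random.nextInt(hashtags.length);
        // choosing a hashtag rules out an @mention, mirroring the Processing sketch
        int person = (tag != 0) ? 0 : random.nextInt(people.length);
        return join(insult, hashtags[tag], people[person]);
    }

    public static void main(String[] args) {
        String[] insults = {"", "Bigger is always better", "Some Subs are bigger than others"};
        String[] hashtags = {"", "#subterfuge", "#saucystuff"};
        String[] people = {"", "@subway"};
        SubTweetBuilder builder = new SubTweetBuilder(new Random());
        System.out.println(builder.build(insults, 1, 3, hashtags, people));
        System.out.println(builder.build(insults, 1, 3, hashtags, people)); // a different insult
    }
}
```

Redrawing on a repeat is one design option. The Processing listing instead skips the tweet and waits for a later frame to produce a different random insult.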

Implementation

Creating this program turned out to be much more complicated than I initially anticipated.

The Scale(s)

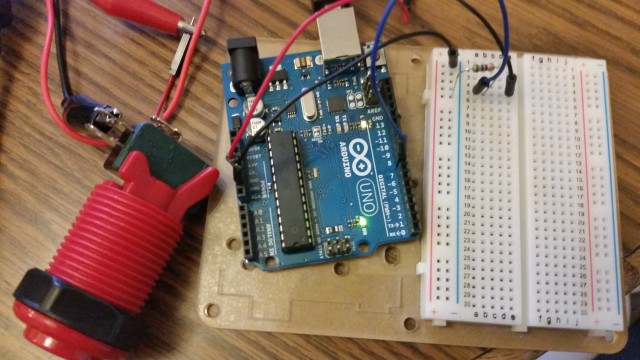

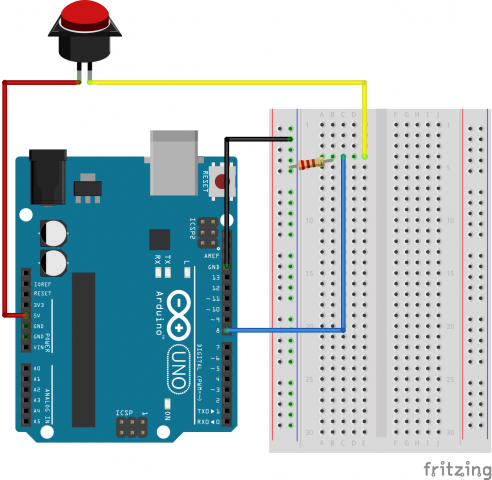

When I first began preparing for this project, I knew I needed a scale that could communicate with a computer over USB and send and receive bytes of information. I browsed through various catalog listings and looked at how other programmers had used a scale to interact with a computer. I purchased a DYMO 10 lb scale with a USB port. My plan was to get this HID (Human Interface Device) talking to my computer the way those sources suggested: through libusb, an open-source library that lets a program communicate with HID and other simple USB devices.

As it turned out, getting the computer to read the scale was only part of the problem. The harder part was getting the scale to communicate back to the computer consistently. I managed to get some communication between the computer and the scale, and my professor gave me a lot of consultation and help, but in the end I had to buy another scale. In the meantime, I went to work on the code.
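On the HID route, the computer has to make sense of a short binary report from the scale. The Java sketch below decodes one such report. It assumes the 6-byte layout commonly described for DYMO postal scales, with status and unit codes as in the USB HID Point of Sale usage tables.

- **Assumptions:** the layout, the code values, and the class name `HidScaleReport` are assumptions or hypothetical, not details confirmed by this project.
- **Scope:** the sketch does not open the device; that is the part libusb or an HID library would handle.

```java
/**
 * Hypothetical sketch: decoding one 6-byte HID input report from a DYMO-style USB
 * postal scale. The byte layout and the code values are assumptions, not verified
 * against this project's scale.
 */
public class HidScaleReport {
    // Assumed status codes (report byte 1)
    static final int STATUS_STABLE_ZERO = 2;
    static final int STATUS_STABLE = 4;
    // Assumed unit codes (report byte 2)
    static final int UNIT_GRAMS = 2;
    static final int UNIT_OUNCES = 11;
    static final double GRAMS_PER_OUNCE = 28.349523125;

    /**
     * Returns the weight in grams, 0 for an empty scale, or NaN while the reading is
     * not stable. Assumed layout: [0] report ID, [1] status, [2] unit,
     * [3] signed power-of-ten exponent, [4] weight low byte, [5] weight high byte.
     */
    static double gramsFromReport(byte[] report) {
        if (report.length < 6) {
            throw new IllegalArgumentException("expected at least 6 bytes");
        }
        int status = report[1] & 0xFF;
        if (status == STATUS_STABLE_ZERO) {
            return 0.0;
        }
        if (status != STATUS_STABLE) {
            return Double.NaN; // in motion, over the limit, needs calibration, ...
        }
        int unit = report[2] & 0xFF;
        int exponent = report[3];                                  // signed: -1 means tenths
        int raw = (report[4] & 0xFF) | ((report[5] & 0xFF) << 8);  // little-endian 16-bit value
        double value = raw * Math.pow(10, exponent);
        if (unit == UNIT_GRAMS) {
            return value;
        }
        if (unit == UNIT_OUNCES) {
            return value * GRAMS_PER_OUNCE;
        }
        throw new IllegalArgumentException("unsupported unit code " + unit);
    }

    public static void main(String[] args) {
        byte[] grams = {3, 4, 2, 0, 0x36, 0x01};              // 0x0136 = 310
        System.out.println(gramsFromReport(grams));            // prints 310.0
        byte[] ounces = {3, 4, 11, (byte) 0xFF, 0x6E, 0x00};   // 110 tenths = 11.0 oz
        System.out.println(gramsFromReport(ounces));
    }
}
```

Because the weight is carried as a 16-bit value plus a power-of-ten exponent, one report format can describe both whole grams and tenths of an ounce.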

The other scale

This scale was an older, discontinued model (a 7010SB, which the code below is written for). It connected to my computer the way I had initially assumed any USB scale would: through serial communication rather than HID. Once I installed the driver, the scale communicated reliably with my computer.
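The serial scale's 10-byte message format is spelled out in the comment inside `getWeight()` in the code listing. The listing reads incoming bytes into a rolling buffer and parses whatever is there on each frame. A more defensive alternative, sketched here in plain Java, collects bytes from STX to the closing carriage return and parses only complete frames.

- **Hypothetical name:** `SerialScaleFrameReader` is used only for this illustration.
- **Frame layout:** taken from that comment.
- **Units:** like the original, it only accepts gram mode.

```java
import java.util.OptionalInt;

/**
 * Sketch: assemble complete 10-byte frames from a 7010SB-style serial scale before
 * parsing. Frame: STX, unit indicator, two 0x80 placeholders, five ASCII characters, CR.
 */
public class SerialScaleFrameReader {
    private static final int STX = 0x02, CR = 0x0D, GRAMS = 0xC0, FRAME_LENGTH = 10;
    private final int[] frame = new int[FRAME_LENGTH];
    private int count = -1; // -1 means "waiting for STX"

    /** Feeds one byte; returns the weight in grams when a complete gram frame ends. */
    public OptionalInt feed(int b) {
        if (b == STX) {
            count = 0; // start of a new frame
        }
        if (count < 0) {
            return OptionalInt.empty(); // still looking for the start of a frame
        }
        frame[count++] = b;
        if (count < FRAME_LENGTH) {
            return OptionalInt.empty();
        }
        count = -1; // frame complete; wait for the next STX
        if (frame[FRAME_LENGTH - 1] != CR || frame[1] != GRAMS) {
            return OptionalInt.empty(); // malformed frame, or the scale is in lb/oz mode
        }
        int grams = 0;
        boolean sawDigit = false;
        for (int i = 4; i <= 8; i++) { // the five weight characters
            if (frame[i] >= '0' && frame[i] <= '9') {
                grams = grams * 10 + (frame[i] - '0');
                sawDigit = true;
            }
        }
        return sawDigit ? OptionalInt.of(grams) : OptionalInt.empty();
    }

    public static void main(String[] args) {
        SerialScaleFrameReader reader = new SerialScaleFrameReader();
        // one stray byte, then a full frame reporting 310 g
        int[] bytes = {0x55, 0x02, 0xC0, 0x80, 0x80, ' ', ' ', '3', '1', '0', 0x0D};
        for (int b : bytes) {
            reader.feed(b).ifPresent(g -> System.out.println(g + " g")); // prints "310 g"
        }
    }
}
```

Parsing only complete frames avoids reading a weight that is half from one message and half from the next. That can happen with a rolling buffer when `draw()` runs in the middle of a message.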

Failures and Successes

In the end, I remained slightly disappointed that I couldn’t get the DYMO scale working with my computer. However, the problem may have been with the scale itself rather than my computer.

Code-wise, I had envisioned a program that could better distinguish between different types of sandwiches. Because it relies on a scale, however, I quickly realized that the information I could gather from a sandwich was much more limited than I had assumed. At the moment, the program only differentiates between six-inch subs and footlongs, and the code and comments that follow reflect this. Also, because I wrote all of the lines the subs could tweet myself, there wasn’t as much variety as I would have liked, at least in the hashtags. Even so, I am still very proud of the way the program turned out.
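Telling a six-inch sub from a footlong comes down to a weight check. Around it sits some bookkeeping that stops the program from tweeting while a sub is still being set down. The Java sketch below restates that per-frame decision from `draw()` outside of Processing, so it can be tested without a scale.

- **Hypothetical names:** `SubDetector` and `SubType` are used only for this illustration.
- **Thresholds (copied from the listing):**
  - 0.97 smoothing factor
  - 0.02 settling limit
  - 10 g minimum weight
  - 10 s between tweets
  - more than 10 g change since the last tweet
  - 100 to 290 g for a six-inch sub
  - 291 to 600 g for a footlong

```java
/**
 * Hypothetical restatement of the per-frame decision in draw(): tweet only when the
 * weight has settled, is above 10 g, at least 10 s have passed since the last tweet,
 * and the weight differs from the last tweeted sub by more than 10 g.
 */
public class SubDetector {
    enum SubType { NONE, SIX_INCH, FOOTLONG }

    private static final double A = 0.97;   // share of the previous average that is kept
    private double averageChange = 0;        // time-averaged |change in weight|
    private double previousWeight = 0;
    private double weightAtLastTweet = 0;
    private long lastTweetMillis = -10_000;

    /** Same ranges as the listing: 100-290 g six inch, 291-600 g footlong. */
    static SubType classify(double grams) {
        if (grams >= 100 && grams <= 290) return SubType.SIX_INCH;
        if (grams >= 291 && grams <= 600) return SubType.FOOTLONG;
        return SubType.NONE;
    }

    /** Feed one reading; returns the sub to tweet about, or NONE. */
    public SubType update(double grams, long nowMillis) {
        // exponential moving average of how much the weight changes per reading
        averageChange = A * averageChange + (1 - A) * Math.abs(grams - previousWeight);
        previousWeight = grams;

        boolean settled = averageChange < 0.02;
        boolean notEmpty = grams > 10;
        boolean cooledDown = nowMillis - lastTweetMillis > 10_000;
        boolean newSandwich = Math.abs(grams - weightAtLastTweet) > 10;
        if (!(settled && notEmpty && cooledDown && newSandwich)) {
            return SubType.NONE;
        }
        SubType type = classify(grams);
        if (type != SubType.NONE) {
            lastTweetMillis = nowMillis;
            weightAtLastTweet = grams;
        }
        return type;
    }

    public static void main(String[] args) {
        SubDetector detector = new SubDetector();
        // a 310 g sub placed on the scale at frame 0, one reading every ~16 ms (about 60 fps)
        for (int frame = 0; frame < 400; frame++) {
            SubType type = detector.update(310, frame * 16L);
            if (type != SubType.NONE) {
                System.out.println("frame " + frame + ": " + type);
            }
        }
    }
}
```

With these constants, a 310 g jump from an empty scale raises the average change to about 9.3 (0.03 × 310). That average then needs roughly 200 readings to decay below 0.02. Since Processing calls `draw()` about 60 times per second by default, the sketch waits about three and a half seconds before tweeting. A larger `A` makes the detector slower but harder to fool with a wobbling sandwich.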

During critiques I got a lot of really positive feedback on my program, and I was enthused to see people react so well to the humor of the piece. I feel this was one of my most successful projects, because I was able to create a program that, in some way, had its own personality.

The project can be found on Twitter at @substweeting.

Code

```processing
// Processing code for a 7010SB scale

// Importing the libraries
import processing.serial.*;
import com.temboo.core.*;
import com.temboo.Library.Twitter.Tweets.*;

Serial myPort; // Create object from Serial class

int[] myData;      // the latest 10-byte message from the scale
int byteCount = 0; // where the next byte goes in myData

// Create a session using your Temboo account application details.
// The app key is a placeholder: use your own and keep it out of published code.
TembooSession session = new TembooSession("marinesub", "myFirstApp", "YOUR_TEMBOO_APP_KEY");

// index of the last insult tweeted for each size, so the same insult isn't tweeted twice in a row
int oldsixtweets = 0;
int oldfoottweets = 0;

// debugging output only
float checktweetssix = 0;
float checktweetsfoot = 0;

// tracking changes in weight
float minimumAcceptableSandwichWeight = 10; // grams; ignore an (almost) empty scale
float timeAveragedAbsoluteChangeInWeight = 0;
float prevWeight = 0;
float subWeight = 0;
float weightAtLastTweet = 0;
int lastTimeITweeted = -10000;

// whether the sub is a six inch or a footlong
boolean isSixInch = false;
boolean isFootlong = false;
float sizeSixInchSub;
float footLongSUB;

String[] insult = new String[31];
String[] boasting = new String[10];
String[] hashtag = new String[7];
String[] people = new String[4];
String[] both; // every hashtag and @person (not used elsewhere)

// what to tweet: [1] holds the six inch tweet, [2] the footlong tweet
String[] maintweet = new String[3];

// indices into insult, boasting, hashtag and people for the next tweet
int[] sixTweets = new int[5];
int[] footTweets = new int[5];

// which maintweet entry to send: 1 = six inch, 2 = footlong
int update = 0;

//------------------------------------

void setup() {
  size(640, 360);
  noStroke();

  // looking at ports, choose 0 (change the index if the scale is on another port)
  String portName = Serial.list()[0];
  myPort = new Serial(this, portName, 2400);
  myData = new int[10];
  byteCount = 0;

  //-----------------------------------
  // six inch insults (1-15); index 0 is never picked
  insult[0] = "";
  insult[1] = "Every sub has to be okay with the fact that some subs taste better than others";
  insult[2] = "Man these wiches be delicious";
  insult[3] = "It's not a subtweet if you're not a sandwich";
  insult[4] = "You subtweet like a bro. And by bro, I mean burger";
  insult[5] = "nothing annoys me more than when people assume a sandwich is for them";
  insult[6] = "Eat your sub right or someone else will";
  insult[7] = "Don't assume a sub is just for you. They can literally be eaten by anyone, then you look HUNGRY.";
  insult[8] = "Did you know that you can actually execute a brutal subtweet through the ancient art of sharing?";
  insult[9] = "I never tasted something so imperfectly perfect could exist until I tried you.";
  insult[10] = "You're so hungry, you probably think this sub is for you...";
  insult[11] = "Big bites come in little wrappers";
  insult[12] = "Idk about you, but i think it's pretty stupid if you don't buy a sub just because it's smaller";
  insult[13] = "I love when people bite me or eat other things to try and make me mad. I'm a sub, but keep going because it's amusing.";
  insult[14] = "if you're really going to eat a sub for its size then you need to get your priorities straight.";
  insult[15] = "I can't stand when people share subs like why don't you just eat your own";

  // footlong insults (16-29)
  insult[16] = "Sometimes I type out a subtweet about how unhealthy u are then I delete it bc I'm like what's the point I'm gonna eat you anyway";
  insult[17] = "Bigger is always better";
  insult[18] = "Keep munching, in the end it's always the bigger sub that comes out stronger";
  insult[19] = "You wish you could make them full like I do";
  insult[20] = "Two of you can't measure up to me";
  insult[21] = "Some Subs are bigger than others";
  insult[22] = "If you're gonna subtweet me at least get your ingredients about me straight first";
  insult[23] = "Sandwiches only rain on your paddy because they're jealous of your crust and tired of their crumbs";
  insult[24] = "I'm a sandwich that never ends, you ";
  insult[25] = "OMG get over it, they buy me cus they have stomachs that you can't fill";
  insult[26] = "If you're gunna throw out your sixinch or footlong it better be sublovin. There's no time for subhaters in a relationship.";
  insult[27] = "I am a tasty sub & don't need to subtweet I am a tasty sub & don't need to subtweet I am a tasty sub & don't need to subtweet";
  insult[28] = "people will tell you how you taste in a subtweet before they take a bite or have a taste";
  insult[29] = "No I don't wanna munch @you that's the point of a sandwich, to eat it";
  insult[30] = "";

  //-----------------------------------
  boasting[0] = "";
  boasting[1] = "Girls will subtweet and put little music emojis after it to make it look like a song";
  boasting[2] = "I can't stand when couples subtweet each other like why don't you just text them the whole world doesn't need to see your drama";

  //-----------------------------------
  // index 0 of hashtag and people means "none"
  hashtag[0] = "";
  hashtag[1] = "#yeahiateit";
  hashtag[2] = "#saucystuff";
  hashtag[3] = "#subterfuge";
  hashtag[4] = "#mineisbiggerthanyours";
  hashtag[5] = "#itsmysandwichandiwantitnow";
  hashtag[6] = "#BiteMe";

  people[0] = "";
  people[1] = "@subway";
  people[2] = "@quickcheck";
  people[3] = "@suboneelse";

  // concat() joins two arrays of the same type,
  // e.g. { 1, 2, 3 } and { 4, 5, 6 } give { 1, 2, 3, 4, 5, 6 }
  both = concat(hashtag, people);
}

//-----------------------

void draw() {
  background(0);

  //-----------------------------------
  // six inch sub tweets: a six inch sub weighs from 100 to 290 grams
  sixTweets[0] = 0;                    // say nothing
  sixTweets[1] = (int)random(1, 16);   // insult 1-15
  sixTweets[2] = (int)random(3);       // boasting 0-2
  sixTweets[3] = (int)random(7);       // #hashtag 0-6
  sixTweets[4] = (int)random(4);       // @person 0-3

  //----------------------------------
  // footlong sub tweets: a footlong weighs from 291 to 600 grams
  footTweets[0] = 0;
  footTweets[1] = (int)random(16, 30); // insult 16-29
  footTweets[2] = (int)random(3);
  footTweets[3] = (int)random(7);
  footTweets[4] = (int)random(4);

  // Read data from the scale until it stops reporting data.
  while (myPort.available() > 0) {
    int val = myPort.read();
    if (val == 2) {
      // STX (0x02) marks the start of a message
      byteCount = 0;
    }
    // Fill up myData with the data bytes in the right order
    myData[byteCount] = val;
    byteCount = (byteCount + 1) % 10;
  }

  subWeight = getWeight(); // most recent value of the weight

  // Time-averaged size of the frame-to-frame change in weight;
  // it decays toward 0 once the weight stops changing
  float absoluteChangeInWeight = abs(subWeight - prevWeight);
  float A = 0.97;
  float B = 1.0 - A;
  timeAveragedAbsoluteChangeInWeight = A * timeAveragedAbsoluteChangeInWeight + B * absoluteChangeInWeight;

  // you can't boast and insult at the same time,
  // and a hashtag replaces the @person
  if (sixTweets[1] != 0) {
    sixTweets[2] = 0;
  }
  if (sixTweets[3] != 0) {
    sixTweets[4] = 0;
  }
  if (footTweets[1] != 0) {
    footTweets[2] = 0;
  }
  if (footTweets[3] != 0) {
    footTweets[4] = 0;
  }

  // constrain() returns subWeight unchanged only when it is inside the range
  sizeSixInchSub = constrain(subWeight, 100, 290);
  footLongSUB = constrain(subWeight, 291, 600);

  fill(255);

  // Don't bother tweeting about an empty scale, even if the weight has settled down (to nothing)
  if (subWeight > minimumAcceptableSandwichWeight) {
    // If the change in weight has settled down (the weight is no longer changing)
    if (timeAveragedAbsoluteChangeInWeight < 0.02) {
      // decide whether the weight is in the six inch or the footlong range
      checkconstraints();

      int elapsedMillisSinceILastTweeted = millis() - lastTimeITweeted;
      // tweet only if at least 10 seconds have passed since the last tweet
      // and the weight differs by more than 10 grams from the last tweeted sub
      if (elapsedMillisSinceILastTweeted > 10000 && abs(subWeight - weightAtLastTweet) > 10) {
        if (isSixInch) {
          // only tweet if this insult differs from the last six inch insult
          if (sixTweets[1] != oldsixtweets) {
            update = 1;
            sixInch();
            runStatusesUpdateChoreo();
            checktweetssix = sixTweets[1];
          }
          println("maintweet", maintweet, "sixinchtweets:", sixTweets, "foottweets:", footTweets);
          checktweetsfoot = 0;
          isSixInch = false;
        }

        // if the sub is a footlong, call the function for footlong tweets
        if (isFootlong) {
          update = 2;
          // only tweet if this insult differs from the last footlong insult
          if (footTweets[1] != oldfoottweets) {
            footlong();
            println("They aren't equal");
            runStatusesUpdateChoreo();
          } else {
            println("They are equal");
          }
        }
        isFootlong = false;
      }
    }
  }

  prevWeight = subWeight;
  println("checktweetsix:", checktweetssix, "checktweetsfoot:", checktweetsfoot, maintweet[update]);
}

// returns the weight the scale is reporting, in grams, as an int
int getWeight() {
  /*
   https://learn.adafruit.com/digital-shipping-scales/using-a-7010sb-scale
   STX character - Hex 0x02, indicates "Start of TeXt"
   Scale indicator - Hex 0xB0 for lb/oz and 0xC0 for grams
   Hex 0x80 (placeholder)
   Hex 0x80 (placeholder)
   First character of weight, ascii format
   Second character of weight, ascii format
   Third character of weight, ascii format
   Fourth character of weight, ascii format - single Ounces in Lb/Oz weight
   Fifth character of weight, ascii format - 1/10th Ounces in Lb/Oz weight
   Finishing carriage return - Hex 0x0D
   */
  String weightString = "";
  if (myData[1] == 192) { // 0xC0: the scale is reporting grams
    for (int i = 4; i <= 8; i++) {
      if ((myData[i] >= 48) && (myData[i] <= 57)) { // only want characters between '0' and '9'
        weightString += (char)myData[i];
      }
    }
  } else {
    println("Scale is using pounds/ounces, yuck");
  }

  int aWeight = 0;
  if (weightString.length() > 0) {
    aWeight = Integer.parseInt(weightString);
  }
  return aWeight;
}

// joins the chosen insult, boast, hashtag and @person, skipping empty pieces,
// with a space between them so the hashtag isn't glued to the last word
String buildTweet(int[] parts) {
  String[] pieces = { insult[parts[1]], boasting[parts[2]], hashtag[parts[3]], people[parts[4]] };
  String tweet = "";
  for (String piece : pieces) {
    if (piece != null && piece.trim().length() > 0) {
      if (tweet.length() > 0) {
        tweet += " ";
      }
      tweet += piece.trim();
    }
  }
  return tweet;
}

// builds the six inch tweet, shows it on screen and remembers what was tweeted
void sixInch() {
  println("SubWeight:", subWeight, "sizeSixInchSub", sizeSixInchSub);
  maintweet[1] = buildTweet(sixTweets);
  fill(255, 180);
  text(maintweet[1], 50, 50);
  lastTimeITweeted = millis();
  weightAtLastTweet = subWeight;
  oldsixtweets = sixTweets[1];
}

// builds the footlong tweet, shows it on screen and remembers what was tweeted
void footlong() {
  println("SubWeight:", subWeight, "footLongSUB", footLongSUB);
  maintweet[2] = buildTweet(footTweets);
  fill(255, 180);
  text(maintweet[2], 50, 50);
  lastTimeITweeted = millis();
  weightAtLastTweet = subWeight;
  oldfoottweets = footTweets[1];
}

// decides whether the current weight is in the six inch range or the footlong range
void checkconstraints() {
  if (subWeight == sizeSixInchSub) {        // 100-290 g
    isSixInch = true;
    isFootlong = false;
  } else if (subWeight == footLongSUB) {    // 291-600 g
    isFootlong = true;
    isSixInch = false;
  } else if (subWeight == 0) {
    isSixInch = false;
    isFootlong = false;
    println("it's zero");
  }
}

void runStatusesUpdateChoreo() {
  // Create the Choreo object using your Temboo session
  StatusesUpdate statusesUpdateChoreo = new StatusesUpdate(session);

  // Set credential
  statusesUpdateChoreo.setCredential("subtweetingprogram");

  // Set inputs (placeholders: use your own Twitter app keys and never publish them)
  statusesUpdateChoreo.setAccessToken("YOUR_ACCESS_TOKEN");
  statusesUpdateChoreo.setAccessTokenSecret("YOUR_ACCESS_TOKEN_SECRET");
  statusesUpdateChoreo.setConsumerSecret("YOUR_CONSUMER_SECRET");
  statusesUpdateChoreo.setConsumerKey("YOUR_CONSUMER_KEY");
  statusesUpdateChoreo.setStatusUpdate(maintweet[update]);

  // Run the Choreo and store the results
  StatusesUpdateResultSet statusesUpdateResults = statusesUpdateChoreo.run();

  // Print results
  // println(statusesUpdateResults.getResponse());
}
```

Sources from other programmers communicating with scales and other HID devices:

https://code.google.com/p/java-simple-serial-connector/

http://coffeefueledcreations.com/blog/?p=131