Dave – Looking Outwards Final Project

Inspirations

1. Simstudent

Simstudent is a research project at the Human-Computer Interaction Institute (HCII) at CMU. Since humans learn by teaching, the project has real students teach algebra to a computer program, the Simstudent, so that the students themselves learn in turn. The Simstudent uses machine learning to find patterns in algebra problems and solve them in logical steps. At each step, it performs an operation, and the real student either accepts it as correct or corrects it; the Simstudent remembers the correction so that it will not make the same mistake next time. This is not an art project, but I loved the feeling of accomplishment I got when my once-derpy Simstudent became a master at algebra and started mowing problems down with ease. That is why I want to base my final project on a machine learning algorithm, so that my audience can feel what I felt.

2. Falling Stars

“Falling Stars” is an iPad app that lets the user draw objects on screen; when falling stars collide with those objects, they produce sounds. I am impressed by how easily seemingly random drawings turn into a musical composition that sounds reasonably coherent. The visual themes also look great and create a calming mood. As an interactive program, it lets the user create something that not only looks cool but also sounds great. I have always wanted to create a project that uses music and sound, but I do not understand music theory well enough to write compositions myself. However, if all I do is set up the environment and let the audience and the computer generate the music, as Falling Stars does, such a project might just be possible.

3. MIDI keyboard in Processing

I originally wanted the computer to take care of all the music creation. However, if the user’s only interaction is to rate a composition as good or bad, he or she might have to brute-force through a lot of bad music to find a good piece, which is not the rewarding experience I had hoped for. To make the experience more interactive, I decided to use RWMidi, a Processing library that can receive input from MIDI keyboards. I also found an example project built with this library, which suggests it is robust enough for what I need. I am currently waiting for my MIDI keyboard to arrive so that I can test it as soon as possible.
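RWMidi’s own API is not reproduced here. As a hedged alternative for testing the keyboard idea, the sketch below uses Java’s built-in `javax.sound.midi` package, which a Processing sketch can also import because Processing runs on Java. `NoteCollector` is a hypothetical class name; it simply records the pitch of every key press it receives and ignores note-on messages with velocity 0, which many keyboards send in place of note-off.

```java
import javax.sound.midi.MidiMessage;
import javax.sound.midi.Receiver;
import javax.sound.midi.ShortMessage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: collects the MIDI pitch (0-127) of every key press. */
public class NoteCollector implements Receiver {
    private final List<Integer> pitches = new ArrayList<>();

    @Override
    public void send(MidiMessage message, long timeStamp) {
        if (message instanceof ShortMessage) {
            ShortMessage sm = (ShortMessage) message;
            // NOTE_ON with velocity 0 is commonly used as a release, so skip it.
            if (sm.getCommand() == ShortMessage.NOTE_ON && sm.getData2() > 0) {
                // MIDI input may arrive on another thread, so guard the list.
                synchronized (pitches) {
                    pitches.add(sm.getData1());
                }
            }
        }
    }

    @Override
    public void close() {
        // Nothing to release in this sketch.
    }

    /** Returns a snapshot copy of the pitches received so far. */
    public List<Integer> getPitches() {
        synchronized (pitches) {
            return new ArrayList<>(pitches);
        }
    }

    public static void main(String[] args) throws Exception {
        NoteCollector collector = new NoteCollector();
        // Simulate two key presses (middle C, then E) and one release, no hardware needed.
        collector.send(new ShortMessage(ShortMessage.NOTE_ON, 0, 60, 100), -1);
        collector.send(new ShortMessage(ShortMessage.NOTE_ON, 0, 64, 90), -1);
        collector.send(new ShortMessage(ShortMessage.NOTE_OFF, 0, 60, 0), -1);
        System.out.println(collector.getPitches()); // prints [60, 64]
    }
}
```

To listen to a real keyboard instead of simulated messages, one option is `MidiSystem.getTransmitter().setReceiver(collector)`, which connects the system’s default MIDI input; which device counts as the default depends on the platform, so this is worth checking once the keyboard arrives.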

Ideas:

1. There is a fish tank filled with fish. Whenever a fish flails up out of the water, it sings a note, and over time these notes form a composition. The user then votes on whether the composition is good or not. Using a machine learning algorithm, the fish remember the vote and adjust their future compositions to the user’s taste. This effectively lets the user train the fish to produce “music”. The user can also play music on his or her MIDI keyboard, which gives the user more power to teach the fish.

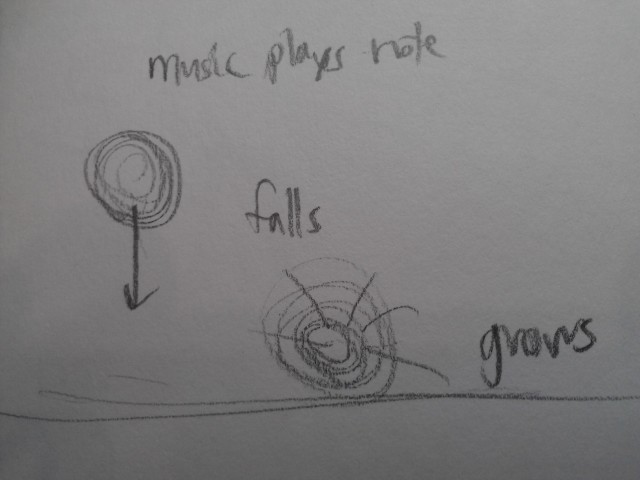

2. Something involving music information visualization. I have already invested in a MIDI keyboard, so I will be using it no matter which idea I choose. It would be nice to tell a visual story with music. This would be roughly the reverse of “Falling Stars”: instead of drawings producing music, patterns in the music would probably make things grow on screen.
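The good/bad voting loop in idea 1 could be prototyped in many ways. One minimal sketch, assuming a first-order Markov chain over note indices (a swapped-in technique, not necessarily what the finished backend uses), keeps a weight for every note-to-note transition, samples each next note in proportion to those weights, and multiplies the weights of the transitions a melody used by 1.5 after a “good” vote or 0.5 after a “bad” one. The class name `VotedMelody`, the factors, and the 0.05 floor are all hypothetical choices.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch: a note-transition Markov chain trained by user votes. */
public class VotedMelody {
    private static final double MIN_WEIGHT = 0.05; // floor so no transition disappears
    private final double[][] weights;               // weights[from][to], all start at 1.0
    private final Random rng;

    public VotedMelody(int numNotes, long seed) {
        weights = new double[numNotes][numNotes];
        for (double[] row : weights) {
            Arrays.fill(row, 1.0);
        }
        rng = new Random(seed);
    }

    /** Samples the next note index in proportion to the current row's weights. */
    private int nextNote(int current) {
        double[] row = weights[current];
        double total = 0;
        for (double w : row) {
            total += w;
        }
        double r = rng.nextDouble() * total;
        for (int i = 0; i < row.length; i++) {
            r -= row[i];
            if (r < 0) {
                return i;
            }
        }
        return row.length - 1; // guard against floating-point rounding
    }

    /** Generates a melody of the given length, starting from note 0. */
    public List<Integer> compose(int length) {
        List<Integer> melody = new ArrayList<>();
        if (length <= 0) {
            return melody;
        }
        int note = 0;
        melody.add(note);
        for (int i = 1; i < length; i++) {
            note = nextNote(note);
            melody.add(note);
        }
        return melody;
    }

    /** Strengthens (good) or weakens (bad) each transition occurrence in the melody. */
    public void vote(List<Integer> melody, boolean good) {
        double factor = good ? 1.5 : 0.5;
        for (int i = 1; i < melody.size(); i++) {
            int from = melody.get(i - 1);
            int to = melody.get(i);
            weights[from][to] = Math.max(MIN_WEIGHT, weights[from][to] * factor);
        }
    }

    public double weight(int from, int to) {
        return weights[from][to];
    }

    public static void main(String[] args) {
        VotedMelody fish = new VotedMelody(5, 42L);
        List<Integer> tune = fish.compose(8);
        System.out.println("Tune: " + tune);
        fish.vote(tune, true); // the user liked it, so similar transitions become likelier
        System.out.println("Next tune: " + fish.compose(8));
    }
}
```

The floor keeps every transition possible, so a few bad votes cannot permanently silence a note pair; a real version would also need to map note indices to actual pitches and to the fish animation.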

Update: 11/18

I have finished the machine learning backend, and it plays music correctly. All that is left is to implement the frontend and sync its timing with the backend. This will not be trivial, but it should not require much planning.